John McCall

Evolutionary Computation and Explainable AI: A Roadmap to Transparent Intelligent Systems

Jun 12, 2024

Abstract: AI methods are finding an increasing number of applications, but their often black-box nature has raised concerns about accountability and trust. The field of explainable artificial intelligence (XAI) has emerged in response to the need for human understanding of AI models. Evolutionary computation (EC), as a family of powerful optimization and learning tools, has significant potential to contribute to XAI. In this paper, we provide an introduction to XAI and review various techniques in current use for explaining machine learning (ML) models. We then focus on how EC can be used in XAI, and review some XAI approaches which incorporate EC techniques. Additionally, we discuss the application of XAI principles within EC itself, examining how these principles can shed some light on the behavior and outcomes of EC algorithms in general, on the (automatic) configuration of these algorithms, and on the underlying problem landscapes that these algorithms optimize. Finally, we discuss some open challenges in XAI and opportunities for future research in this field using EC. Our aim is to demonstrate that EC is well-suited for addressing current problems in explainability and to encourage further exploration of these methods to contribute to the development of more transparent and trustworthy ML models and EC algorithms.

Mining Potentially Explanatory Patterns via Partial Solutions

Apr 05, 2024

Abstract: Genetic Algorithms have established their capability for solving many complex optimization problems. Even as good solutions are produced, the user's understanding of a problem is not necessarily improved, which can lead to a lack of confidence in the results. To mitigate this issue, explainability aims to give insight to the user by presenting them with the knowledge obtained by the algorithm. In this paper we introduce Partial Solutions in order to improve the explainability of solutions to combinatorial optimization problems. Partial Solutions represent beneficial traits found by analyzing a population, and are presented to the user for explainability, but also provide an explicit model from which new solutions can be generated. We present an algorithm that assembles a collection of Partial Solutions chosen to strike a balance between high fitness, simplicity and atomicity. Experiments with standard benchmarks show that the proposed algorithm is able to find Partial Solutions which improve explainability at reasonable computational cost without affecting search performance.

An Application of a Multivariate Estimation of Distribution Algorithm to Cancer Chemotherapy

May 17, 2022

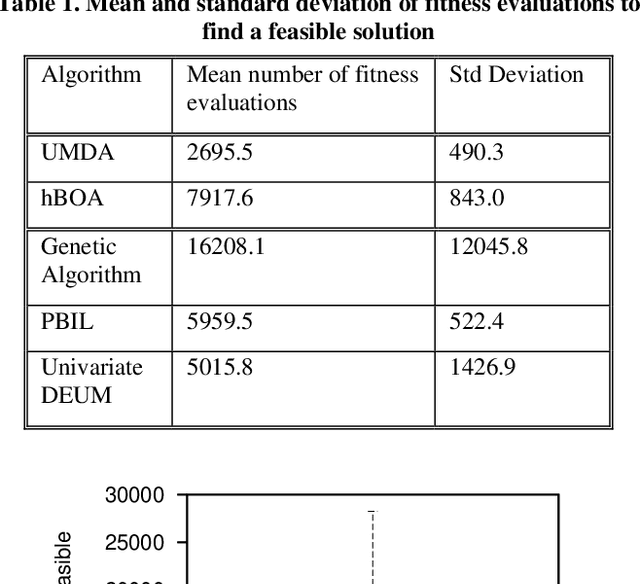

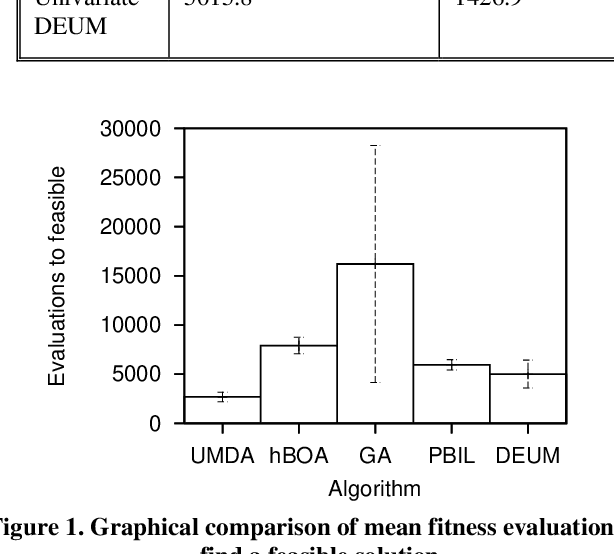

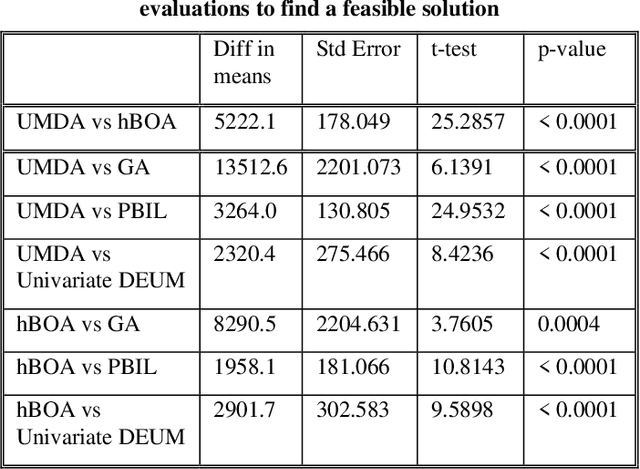

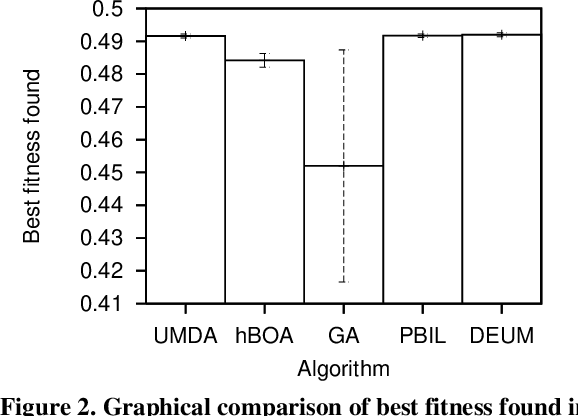

Abstract: Chemotherapy treatment for cancer is a complex optimisation problem with a large number of interacting variables and constraints. A number of different probabilistic algorithms have been applied to it with varying success. In this paper we expand on this by applying two estimation of distribution algorithms to the problem. One is UMDA, which uses a univariate probabilistic model similar to previously applied EDAs. The other is hBOA, the first EDA using a multivariate probabilistic model to be applied to the chemotherapy problem. While instinct would lead us to predict that the more sophisticated algorithm would yield better performance on a complex problem like this, we show that it is outperformed by the algorithms using the simpler univariate model. We hypothesise that this is caused by the more sophisticated algorithm being impeded by the large number of interactions in the problem which are unnecessary for its solution.
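To make the univariate model concrete, the following is a minimal sketch of a UMDA-style loop on binary strings. It is not the paper's implementation: the OneMax fitness, the function names `umda` and `onemax`, the truncation selection, the probability clamping, and all parameter values are assumptions chosen for illustration, and the chemotherapy encoding and constraints are not modelled.

```python
import random


def umda(fitness, n_bits, pop_size=100, n_select=50, generations=50, seed=0):
    """Illustrative UMDA: one independent Bernoulli probability per bit."""
    rng = random.Random(seed)
    probs = [0.5] * n_bits  # univariate model: no interactions between bits
    best, best_fit = None, float("-inf")
    for _ in range(generations):
        # Sample a population from the current univariate model.
        pop = [[1 if rng.random() < p else 0 for p in probs]
               for _ in range(pop_size)]
        pop.sort(key=fitness, reverse=True)
        if fitness(pop[0]) > best_fit:
            best, best_fit = pop[0], fitness(pop[0])
        # Truncation selection (an assumption; other schemes are possible).
        selected = pop[:n_select]
        # Re-estimate each bit's marginal frequency from the selected set,
        # clamped away from 0 and 1 so that no bit value is lost for good.
        low, high = 1.0 / n_bits, 1.0 - 1.0 / n_bits
        for i in range(n_bits):
            freq = sum(ind[i] for ind in selected) / n_select
            probs[i] = min(max(freq, low), high)
    return best, best_fit


def onemax(bits):
    """Hypothetical toy fitness: the number of ones in the string."""
    return sum(bits)


if __name__ == "__main__":
    solution, score = umda(onemax, n_bits=20)
    print(solution, score)
```

A multivariate EDA such as hBOA would replace the per-bit frequency update with a learned dependency model, which is the difference the abstract is comparing.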

Ensemble Learning based on Classifier Prediction Confidence and Comprehensive Learning Particle Swarm Optimisation for polyp localisation

Apr 10, 2021

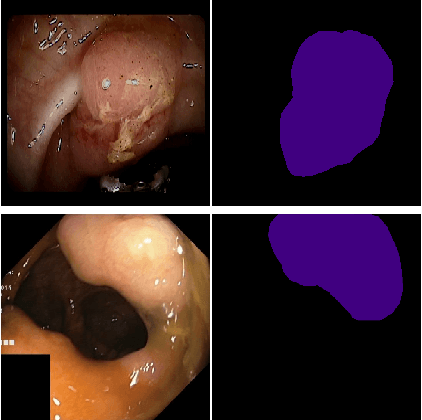

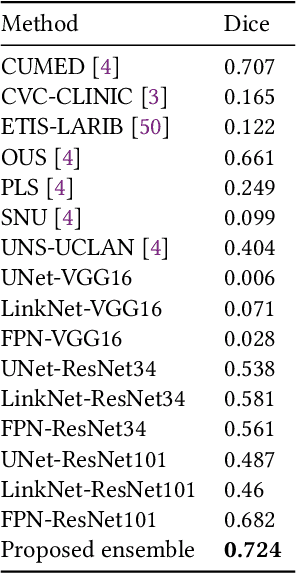

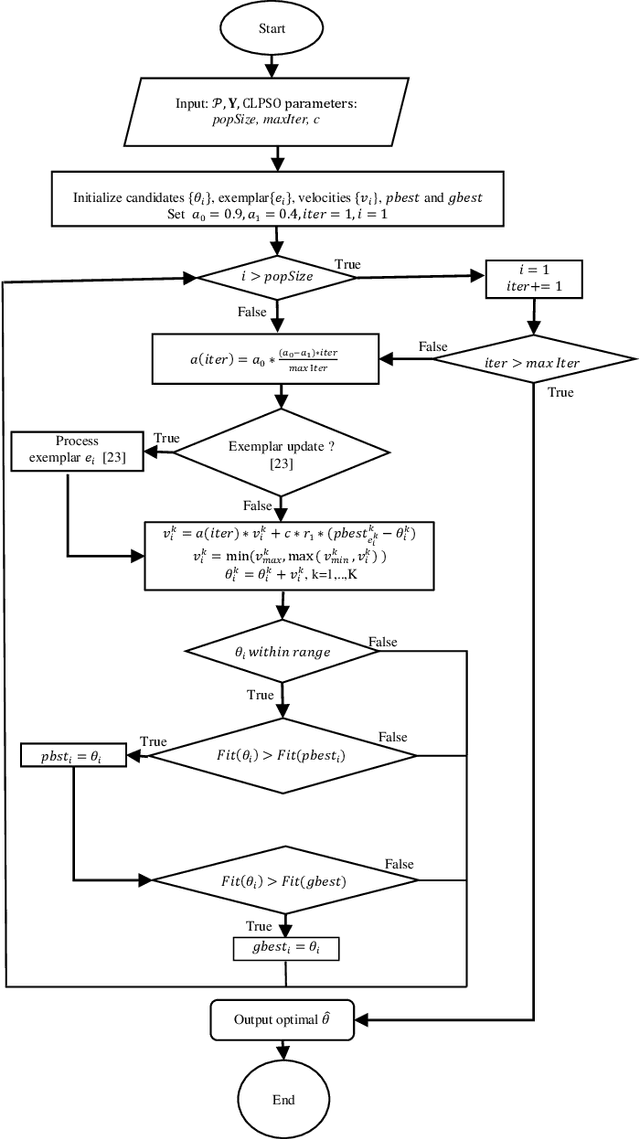

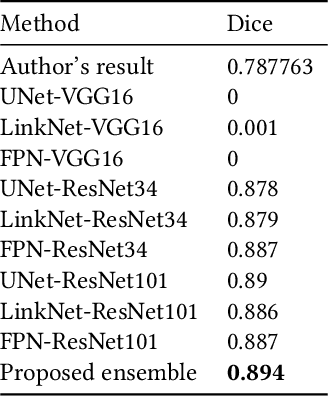

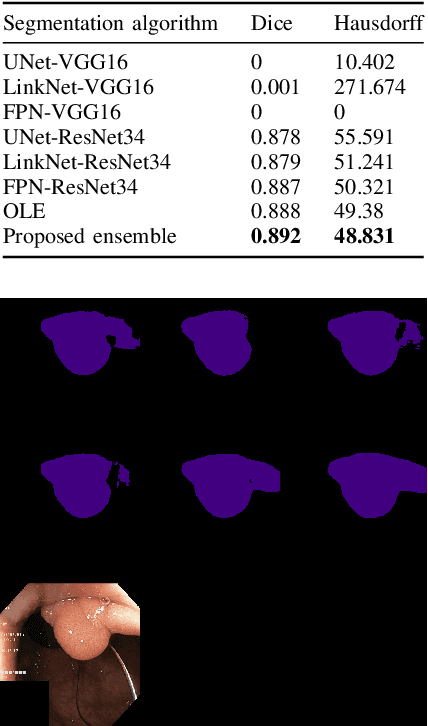

Abstract: Colorectal cancer (CRC) is a leading cause of death in many countries. CRC originates from small clumps of cells on the lining of the colon called polyps, which over time might grow and become malignant. Early detection and removal of polyps are therefore necessary for the prevention of colon cancer. In this paper, we introduce an ensemble of medical polyp segmentation algorithms. Based on an observation that different segmentation algorithms will perform well on different subsets of examples because of the nature and size of training sets they have been exposed to and because of method-intrinsic factors, we propose to measure the confidence in the prediction of each algorithm and then use an associated threshold to determine whether the confidence is acceptable or not. An algorithm is selected for the ensemble if the confidence is below its associated threshold. The optimal threshold for each segmentation algorithm is found by using Comprehensive Learning Particle Swarm Optimization (CLPSO), a swarm intelligence algorithm. The Dice coefficient, a popular performance metric for image segmentation, is used as the fitness criterion. Experimental results on two polyp segmentation datasets, MICCAI2015 and Kvasir-SEG, confirm that our ensemble achieves better results compared to some well-known segmentation algorithms.
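For reference, the Dice coefficient used as the fitness measure is twice the overlap between the predicted and ground-truth masks divided by the sum of their sizes. The sketch below computes it for flattened binary masks. The function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details taken from the paper.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for 0/1 masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    predicted = [1, 1, 0, 0]     # hypothetical flattened prediction
    ground_truth = [1, 0, 0, 0]  # hypothetical flattened ground truth
    print(dice_coefficient(predicted, ground_truth))
```

In a threshold search such as the one described, a score of this kind would be averaged over a validation set and returned as the fitness of each candidate threshold vector.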

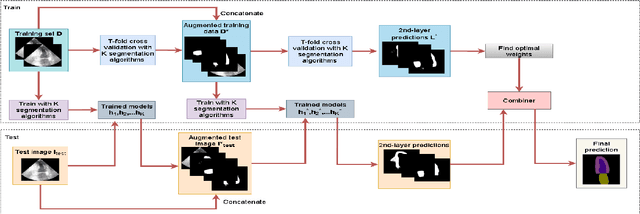

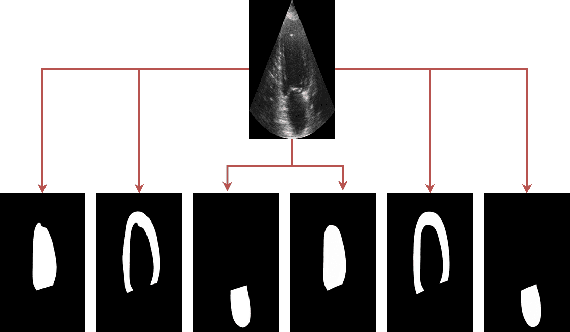

Two layer Ensemble of Deep Learning Models for Medical Image Segmentation

Apr 10, 2021

Abstract: In recent years, deep learning has rapidly become a method of choice for the segmentation of medical images. Deep Neural Network (DNN) architectures such as UNet have achieved state-of-the-art results on many medical datasets. To further improve the performance in the segmentation task, we develop an ensemble system which combines various deep learning architectures. We propose a two-layer ensemble of deep learning models for the segmentation of medical images. The prediction for each training image pixel made by each model in the first layer is used as the augmented data of the training image for the second layer of the ensemble. The predictions of the second layer are then combined by using a weights-based scheme in which each model contributes differently to the combined result. The weights are found by solving linear regression problems. Experiments conducted on two popular medical datasets, namely CAMUS and Kvasir-SEG, show that the proposed method achieves better results concerning two performance metrics (Dice coefficient and Hausdorff distance) compared to some well-known benchmark algorithms.
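The weights-based combination can be illustrated with ordinary least squares: stack each model's per-pixel predictions as a column and regress the ground-truth mask on them. This is only a sketch under assumptions. The abstract does not specify how the regression problems are set up, so the single binary-class formulation, the function names `fit_combination_weights` and `combine`, and the 0.5 decision threshold are all hypothetical.

```python
import numpy as np


def fit_combination_weights(model_probs, target):
    """Least-squares weights so that sum_k w_k * model_k approximates target.

    model_probs: array-like of shape (n_models, n_pixels)
    target:      array-like of shape (n_pixels,) with 0/1 labels
    """
    A = np.asarray(model_probs, dtype=float).T  # (n_pixels, n_models)
    y = np.asarray(target, dtype=float)
    weights, *_ = np.linalg.lstsq(A, y, rcond=None)
    return weights


def combine(model_probs, weights, threshold=0.5):
    """Weighted sum of model predictions, thresholded into a binary mask."""
    scores = np.asarray(weights) @ np.asarray(model_probs, dtype=float)
    return (scores >= threshold).astype(int)


if __name__ == "__main__":
    # Hypothetical predictions from two models over four pixels.
    probs = [[1.0, 0.0, 1.0, 0.0],
             [0.5, 0.5, 0.5, 0.5]]
    truth = [1, 0, 1, 0]
    w = fit_combination_weights(probs, truth)
    print(w, combine(probs, w))
```

Because the weights are learned rather than fixed, a model whose predictions carry no information about the target (the second one here) receives a weight close to zero.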
