John Karigiannis

Hybrid Robot Learning for Automatic Robot Motion Planning in Manufacturing

Feb 26, 2025

Abstract:Industrial robots are widely used in diverse manufacturing environments. Nonetheless, enabling robots to automatically plan trajectories for changing tasks remains a considerable challenge. Further complexities arise when robots operate within work cells alongside machines, humans, or other robots. This paper introduces a multi-level hybrid robot motion planning method combining a task-space Reinforcement Learning-based Learning from Demonstration (RL-LfD) agent and a joint-space Deep Reinforcement Learning (DRL) agent. A higher-level agent learns to switch between the two agents to enable feasible and smooth motion. The feasibility is computed by incorporating reachability, joint limits, manipulability, and collision risks of the robot in the given environment. Therefore, the derived hybrid motion planning policy generates a feasible trajectory that adheres to task constraints. The effectiveness of the method is validated through simulated robotic scenarios and in a real-world setup.

Understanding the Unforeseen via the Intentional Stance

Nov 01, 2022

Abstract:We present an architecture and system for understanding novel behaviors of an observed agent. The two main features of our approach are the adoption of Dennett's intentional stance and analogical reasoning as one of the main computational mechanisms for understanding unforeseen experiences. Our approach uses analogy with past experiences to construct hypothetical rationales that explain the behavior of an observed agent. Moreover, we view analogies as partial; thus multiple past experiences can be blended to analogically explain an unforeseen event, leading to greater inferential flexibility. We argue that this approach results in more meaningful explanations of observed behavior than approaches based on surface-level comparisons. A key advantage of behavior explanation over classification is the ability to i) take appropriate responses based on reasoning and ii) make non-trivial predictions that allow for the verification of the hypothesized explanation. We provide a simple use case to demonstrate novel experience understanding through analogy in a gas station environment.

Uncertainty-aware Perception Models for Off-road Autonomous Unmanned Ground Vehicles

Sep 22, 2022

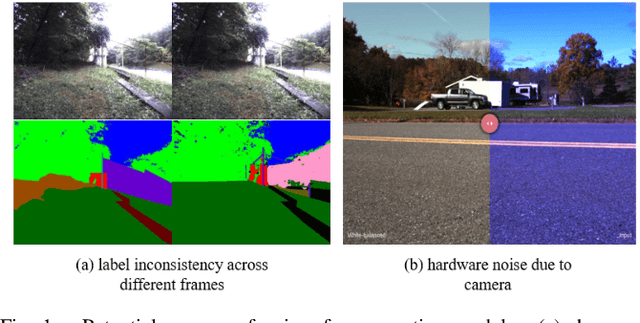

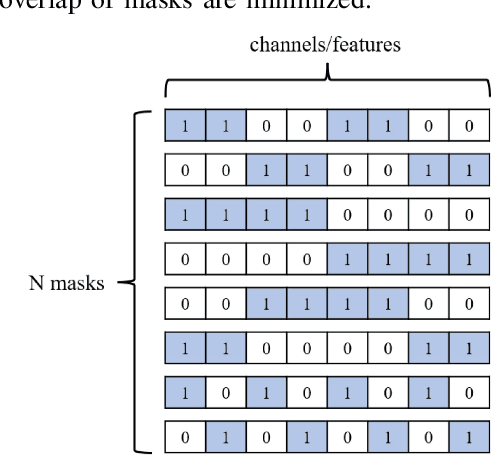

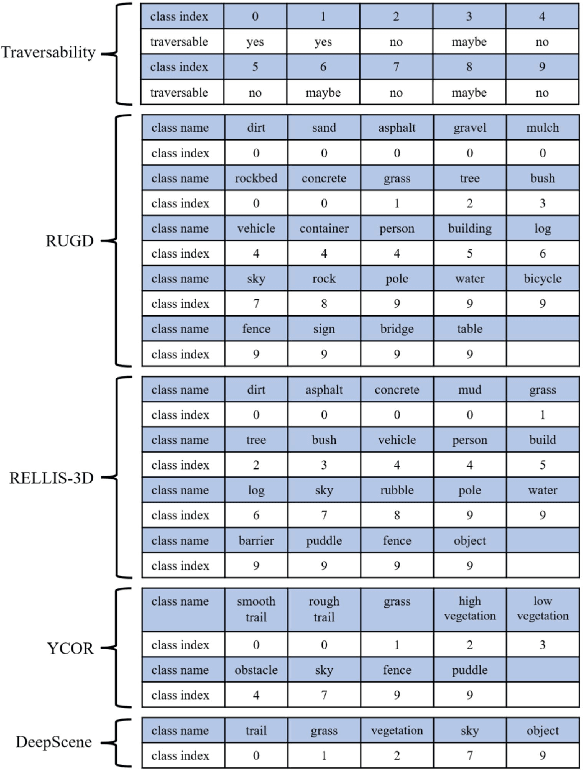

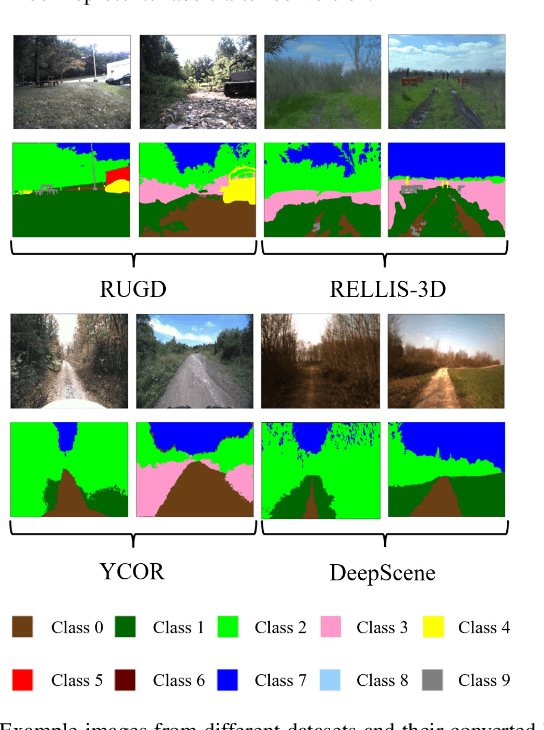

Abstract:Off-road autonomous unmanned ground vehicles (UGVs) are being developed for military and commercial use to deliver crucial supplies in remote locations, help with mapping and surveillance, and assist war-fighters in contested environments. Due to the complexity of off-road environments and the variability in terrain, lighting conditions, and diurnal and seasonal changes, the models used to perceive the environment must handle a lot of input variability. Current datasets used to train perception models for off-road autonomous navigation lack diversity in seasons, locations, semantic classes, and time of day. We test the hypothesis that a model trained on a single dataset may not generalize to other off-road navigation datasets and new locations due to input distribution drift. Additionally, we investigate how to combine multiple datasets to train a semantic segmentation-based environment perception model, and we show that training the model to capture uncertainty could improve the model performance by a significant margin. We extend the Masksembles approach for uncertainty quantification to the semantic segmentation task and compare it with Monte Carlo Dropout and standard baselines. Finally, we test the approach against data collected from a UGV platform in a new testing environment. We show that the developed perception model with uncertainty quantification can be feasibly deployed on a UGV to support online perception and navigation tasks.
