Johannes Cox

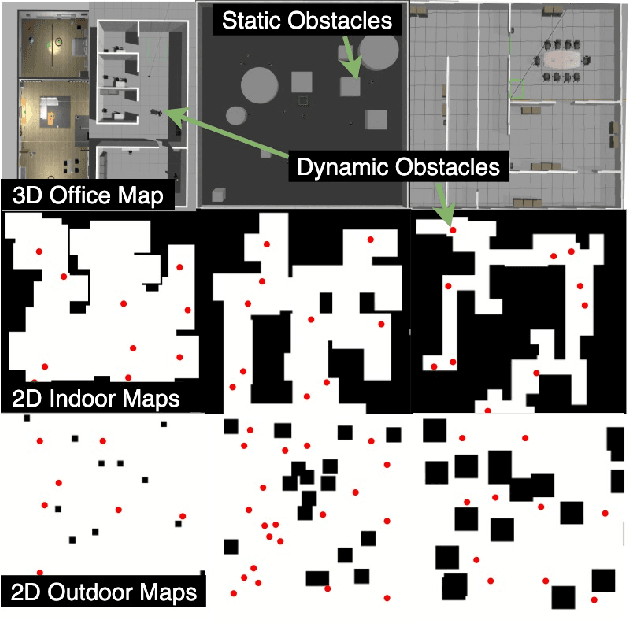

Arena-Bench: A Benchmarking Suite for Obstacle Avoidance Approaches in Highly Dynamic Environments

Jun 12, 2022

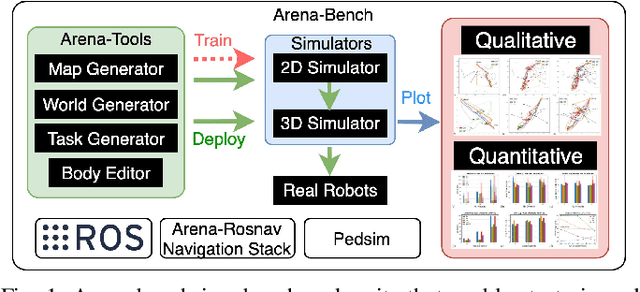

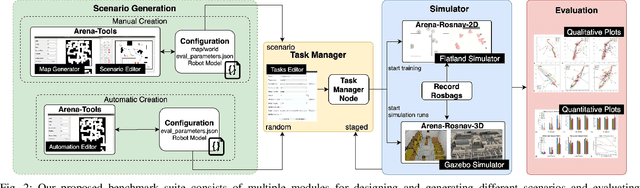

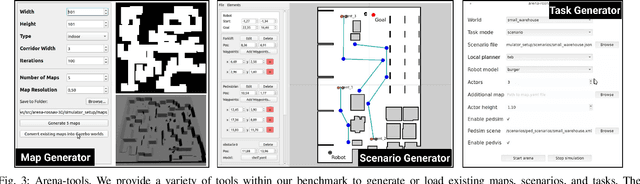

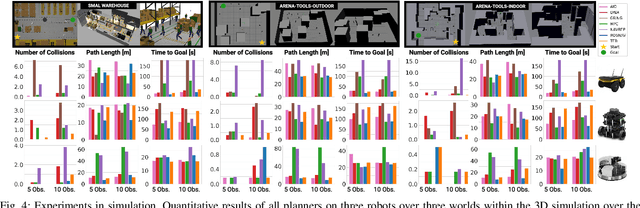

Abstract: The ability to autonomously navigate safely, especially within dynamic environments, is paramount for mobile robotics. In recent years, deep reinforcement learning (DRL) approaches have shown superior performance in dynamic obstacle avoidance. However, these learning-based approaches are often developed in specially designed simulation environments and are hard to test against conventional planning approaches. Furthermore, the integration and deployment of these approaches on real robotic platforms is not yet completely solved. In this paper, we present Arena-Bench, a benchmark suite to train, test, and evaluate navigation planners on different robotic platforms within 3D environments. It provides tools to design and generate highly dynamic evaluation worlds, scenarios, and tasks for autonomous navigation and is fully integrated into the Robot Operating System (ROS). To demonstrate the functionalities of our suite, we trained a DRL agent on our platform and compared it against a range of existing model-based and learning-based navigation approaches on a variety of relevant metrics. Finally, we deployed the approaches on real robots and demonstrated the reproducibility of the results. The code is publicly available at github.com/ignc-research/arena-bench.

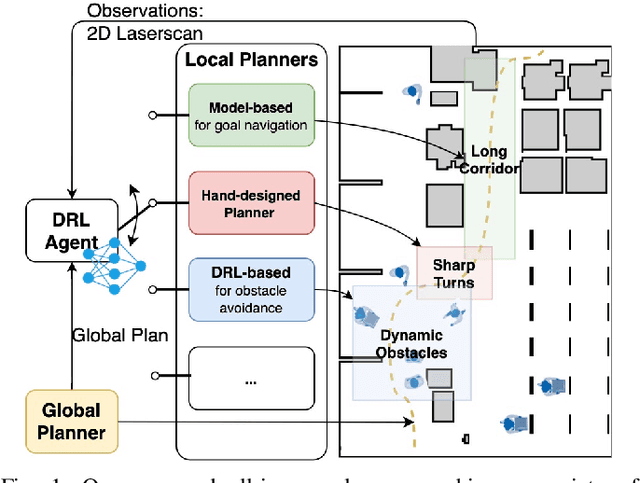

All-in-One: A DRL-based Control Switch Combining State-of-the-art Navigation Planners

Sep 23, 2021

Abstract: Autonomous navigation of mobile robots is essential in use cases such as delivery, assistance, or logistics. Although traditional planning methods are well integrated into existing navigation systems, they struggle in highly dynamic environments. On the other hand, Deep-Reinforcement-Learning-based methods show superior performance in dynamic obstacle avoidance but are not suitable for long-range navigation and struggle with local minima. In this paper, we propose a Deep-Reinforcement-Learning-based control switch, which selects between different planning paradigms based solely on sensor data observations. To this end, we develop an interface to efficiently operate multiple model-based as well as learning-based local planners, and integrate a variety of state-of-the-art planners to be selected by the control switch. Subsequently, we evaluate our approach against each planner individually and find improvements in navigation performance, especially for highly dynamic scenarios. Our planner preferred learning-based approaches in situations with a high number of obstacles while relying on the traditional model-based planners in long corridors or empty spaces.
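The control-switch idea in this abstract can be illustrated with a minimal sketch. Everything here is hypothetical and is not the paper's implementation: the planner functions, the `ControlSwitch` class, the scan layout (a flat list of range readings), and the thresholds are all assumptions. In particular, the hand-written `heuristic_selector` only stands in for the trained DRL policy that the paper uses to choose a planner.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Tuple

Velocity = Tuple[float, float]          # (linear m/s, angular rad/s)
Planner = Callable[[Sequence[float]], Velocity]


def model_based_planner(scan: Sequence[float]) -> Velocity:
    """Hypothetical stand-in for a classical local planner: drive straight."""
    return (0.5, 0.0)


def learning_based_planner(scan: Sequence[float]) -> Velocity:
    """Hypothetical stand-in for a learned planner: slow down and turn
    away from the half of the scan that contains the closest reading."""
    closest = min(range(len(scan)), key=lambda i: scan[i])
    turn = -0.5 if closest >= len(scan) / 2 else 0.5
    return (0.2, turn)


def heuristic_selector(scan: Sequence[float],
                       near: float = 1.5,
                       crowded_fraction: float = 0.2) -> str:
    """Placeholder for the DRL policy (assumption): pick the learning-based
    planner when enough range readings are closer than `near` metres."""
    close = sum(1 for r in scan if r < near)
    return "learning" if close / len(scan) >= crowded_fraction else "model"


@dataclass
class ControlSwitch:
    """Maps a sensor observation to a planner name, then runs that planner."""
    planners: Dict[str, Planner]
    selector: Callable[[Sequence[float]], str]

    def step(self, scan: Sequence[float]) -> Tuple[str, Velocity]:
        name = self.selector(scan)
        return name, self.planners[name](scan)


switch = ControlSwitch(
    planners={"model": model_based_planner, "learning": learning_based_planner},
    selector=heuristic_selector,
)
open_space = [5.0] * 8                                  # nothing nearby
crowded = [0.5, 0.6, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0]      # two close readings
print(switch.step(open_space))
print(switch.step(crowded))
```

Keeping the selector separate from the planners mirrors the interface described in the abstract: swapping the heuristic for a trained policy would not require changing the planners themselves.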

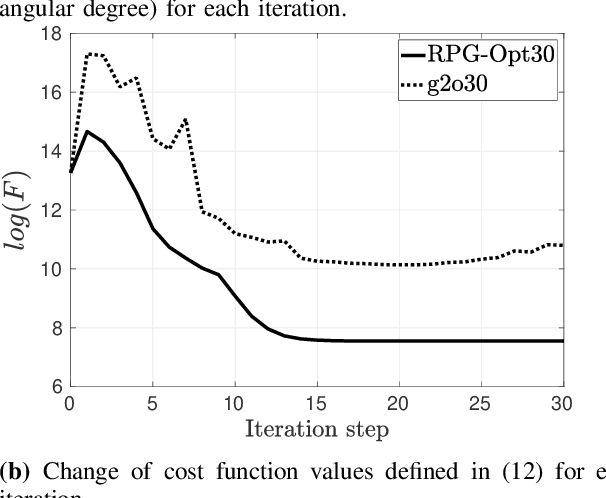

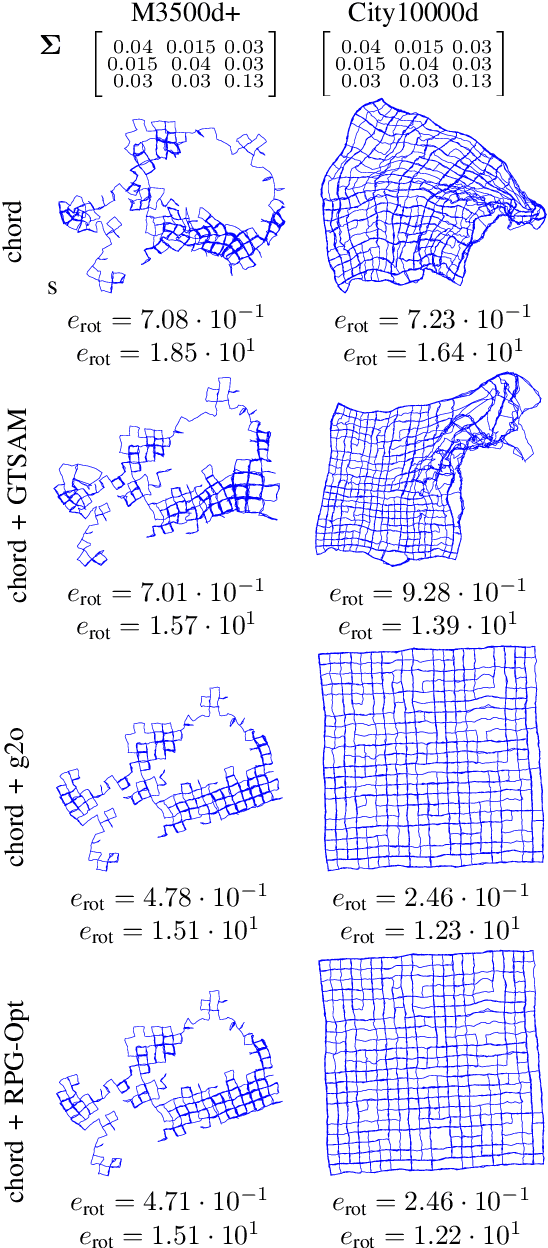

Improved Pose Graph Optimization for Planar Motions Using Riemannian Geometry on the Manifold of Dual Quaternions

Jul 31, 2019

Abstract: We present a novel Riemannian approach for planar pose graph optimization problems. By formulating the cost function based on the Riemannian metric on the manifold of dual quaternions representing planar motions, the nonlinear structure of the SE(2) group is inherently considered. To solve the on-manifold least squares problem, a Riemannian Gauss-Newton method using the exponential retraction is applied. The proposed Riemannian pose graph optimizer (RPG-Opt) is further compared with currently popular optimization frameworks using public planar pose graph datasets. Evaluations show that the proposed method is as accurate as state-of-the-art frameworks and converges more robustly under large uncertainties in odometry measurements.
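For orientation, a generic planar pose graph problem and one Gauss-Newton step with a retraction can be written as below. This is the standard textbook form on SE(2), given as a sketch only: it is not the paper's dual-quaternion cost, and the symbols ($x_i$ for poses, $z_{ij}$ for measured relative poses, $\Omega_{ij}$ for information matrices, $J$ for the stacked Jacobian of the residuals) are notation assumed here rather than taken from the abstract.

$$
\min_{x_1,\dots,x_n \in SE(2)} \; \sum_{(i,j)\in\mathcal{E}} e_{ij}^{\top}\,\Omega_{ij}\,e_{ij},
\qquad
e_{ij} = \mathrm{Log}\!\left(z_{ij}^{-1}\,x_i^{-1}\,x_j\right) \in \mathbb{R}^{3}
$$

$$
\left(J^{\top}\Omega\,J\right)\delta = -\,J^{\top}\Omega\,e,
\qquad
x_i \leftarrow x_i\,\mathrm{Exp}(\delta_i)
$$

The first line sums the weighted squared residuals over all graph edges. The second solves the linearized normal equations for a tangent-space increment $\delta$ and maps it back onto the manifold through the exponential map, which is the role the exponential retraction plays in the method described above.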
