Jens Lambrecht

Arena 3.0: Advancing Social Navigation in Collaborative and Highly Dynamic Environments

Jun 02, 2024

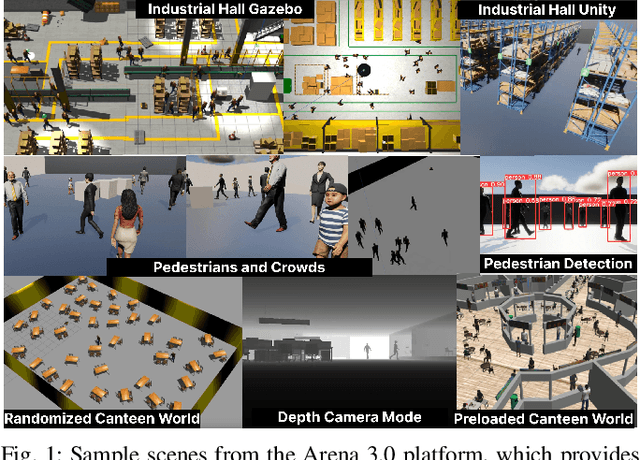

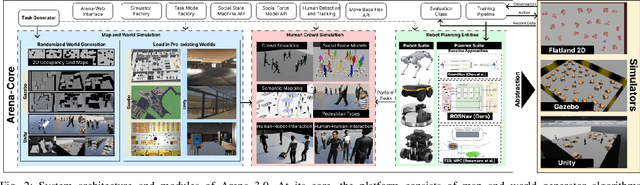

Abstract: Building upon our previous contributions, this paper introduces Arena 3.0, an extension of Arena-Bench, Arena 1.0, and Arena 2.0. Arena 3.0 is a comprehensive software stack containing multiple modules and simulation environments focusing on the development, simulation, and benchmarking of social navigation approaches in collaborative environments. We significantly enhance the realism of human behavior simulation by incorporating a diverse array of new social force models and interaction patterns, encompassing both human-human and human-robot dynamics. The platform provides a comprehensive set of new task modes designed for extensive benchmarking and testing, and it can dynamically generate realistic, human-centric environments that cater to a broad spectrum of social navigation scenarios. In addition, the platform's functionalities have been abstracted across three widely used simulators, each tailored for specific training and testing purposes. The platform's efficacy has been validated through an extensive benchmark and through user evaluations by a global community of researchers and students, who noted a substantial improvement over previous versions and expressed interest in using the platform for future research and development. Arena 3.0 is openly available at https://github.com/Arena-Rosnav.

* 11 pages, 6 figures

Mono Video-Based AI Corridor for Model-Free Detection of Collision-Relevant Obstacles

Apr 24, 2023

Abstract: The detection of previously unseen, unexpected obstacles on the road is a major challenge for automated driving systems. Unlike the detection of ordinary objects with pre-definable classes, detecting unexpected obstacles on the road cannot be resolved by upscaling the sensor technology alone (e.g., high-resolution video imagers or radar antennas, denser LiDAR scan lines). This is because unexpected obstacles vary widely in type and do not share a common appearance (e.g., lost cargo such as a suitcase or bicycle, tire fragments, a tree stem). Adding object classes, or adding "all" of these objects to a common "unexpected obstacle" class, does not scale either. In this contribution, we study the feasibility of using a deep learning video-based lane corridor (called "AI ego-corridor") to ease the challenge by inverting the problem: instead of detecting a previously unseen object, the AI ego-corridor detects that the ego-lane ahead ends. A smart ground-truth definition enables an easy feature-based classification of an abrupt end of the ego-lane. We propose two neural network designs and investigate, among other things, the potential of training with synthetic data. We evaluate our approach on a test vehicle platform. It is shown that the approach is able to detect numerous previously unseen obstacles at a distance of up to 300 m with a detection rate of 95 %.

HabitatDyn Dataset: Dynamic Object Detection to Kinematics Estimation

Apr 21, 2023

Abstract: The advancement of computer vision and machine learning has made datasets a crucial element for further research and applications. However, the creation and development of robots with advanced recognition capabilities are hindered by the lack of appropriate datasets. Existing image or video processing datasets are unable to accurately depict observations from a moving robot, and they do not contain the kinematics information necessary for robotic tasks. Synthetic data, on the other hand, are cost-effective to create and offer greater flexibility for adapting to various applications. Hence, they are widely utilized in both research and industry. In this paper, we propose the dataset HabitatDyn, which contains synthetic RGB videos, semantic labels, and depth information, as well as kinematics information. HabitatDyn was created from the perspective of a mobile robot with a moving camera, and contains 30 scenes featuring six different types of moving objects with varying velocities. To demonstrate the usability of our dataset, two existing segmentation algorithms are evaluated on it, and an approach that estimates the distance between the object and the camera is implemented on top of these segmentation methods and evaluated on the dataset. With the availability of this dataset, we aspire to foster further advancements in the field of mobile robotics, leading to more capable and intelligent robots that can navigate and interact with their environments more effectively. The code is publicly available at https://github.com/ignc-research/HabitatDyn.
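As a rough illustration of how a segmentation mask and a depth image can be combined into an object distance, the following minimal sketch takes the median depth over the masked pixels. It is not the HabitatDyn authors' implementation: the function name `estimate_object_distance`, the nested-list image layout, and the choice of the median are all assumptions made for this example, and the result is a depth along the camera axis rather than a calibrated Euclidean range.

```python
from statistics import median
from typing import Optional, Sequence


def estimate_object_distance(
    depth: Sequence[Sequence[float]],
    mask: Sequence[Sequence[int]],
    max_range: float = float("inf"),
) -> Optional[float]:
    """Return the median depth (metres) over pixels where the mask is set.

    Hypothetical helper: `depth` and `mask` are row-major images of equal
    size. Depth values <= 0 or >= max_range are treated as invalid readings.
    Returns None when the mask covers no valid depth pixel.
    """
    samples = [
        d
        for depth_row, mask_row in zip(depth, mask)
        for d, m in zip(depth_row, mask_row)
        if m and 0.0 < d < max_range
    ]
    return median(samples) if samples else None


# Toy 3x4 depth image (metres): background at 5 m, object at about 2 m,
# with one invalid (zero) depth reading inside the object mask.
depth_map = [
    [5.0, 5.0, 5.0, 5.0],
    [5.0, 2.0, 2.2, 5.0],
    [5.0, 2.1, 0.0, 5.0],
]
object_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]

distance = estimate_object_distance(depth_map, object_mask)
print(distance)
```

The median is used here because it tolerates a few mislabeled mask pixels that land on the background; a mean would be pulled toward the 5 m background values.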

Arena-Rosnav 2.0: A Development and Benchmarking Platform for Robot Navigation in Highly Dynamic Environments

Feb 20, 2023

Abstract: Following up on our previous works, in this paper, we present Arena-Rosnav 2.0, an extension of our previous works Arena-Bench and Arena-Rosnav, which adds a variety of additional modules for developing and benchmarking robotic navigation approaches. The platform is fundamentally restructured and provides unified APIs to add additional functionalities such as planning algorithms, simulators, or evaluation functionalities. We have included more realistic simulation and pedestrian behavior and provide thorough documentation to lower the entry barrier. We first evaluated our system by conducting a user study in which we asked experienced researchers as well as new practitioners and students to test our system. The feedback was mostly positive, and a high number of participants are utilizing our system for other research endeavors. Finally, we demonstrate the feasibility of our system by integrating two new simulators and a variety of state-of-the-art navigation approaches and benchmarking them against one another. The platform is openly available at https://github.com/Arena-Rosnav.

Arena-Web -- A Web-based Development and Benchmarking Platform for Autonomous Navigation Approaches

Feb 06, 2023

Abstract: In recent years, mobile robot navigation approaches have become increasingly important due to various application areas ranging from healthcare to warehouse logistics. In particular, Deep Reinforcement Learning (DRL) approaches have gained popularity for robot navigation, but they are complex to develop and not easily accessible to non-experts. Recently, efforts have been made to make these sophisticated approaches accessible to a wider audience. In this paper, we present Arena-Web, a web-based development and evaluation suite for developing, training, and testing DRL-based navigation planners for various robotic platforms and scenarios. The interface is designed to be intuitive and engaging to appeal to non-experts and make the technology accessible to a wider audience. With Arena-Web and its interface, training and developing Deep Reinforcement Learning agents is simplified and does not require writing a single line of code. The web-app is free to use and openly available under the link stated in the supplementary materials.

Holistic Deep-Reinforcement-Learning-based Training of Autonomous Navigation Systems

Feb 06, 2023

Abstract: In recent years, Deep Reinforcement Learning has emerged as a promising approach for autonomous navigation of ground vehicles and has been utilized in various areas of navigation such as cruise control, lane changing, or obstacle avoidance. However, most research works either focus on providing an end-to-end solution that trains the whole system using Deep Reinforcement Learning or focus on one specific aspect such as local motion planning. This, however, comes with a number of problems such as catastrophic forgetting, inefficient navigation behavior, and non-optimal synchronization between different entities of the navigation stack. In this paper, we propose a holistic Deep Reinforcement Learning training approach in which the training procedure involves all entities of the navigation stack. This should enhance the synchronization between, and understanding of, all entities of the navigation stack and, as a result, improve navigational performance. We trained several agents with a number of different observation spaces to study the impact of different inputs on the navigation behavior of the agent. In extensive evaluations against multiple learning-based and classic model-based navigation approaches, our proposed agent outperformed the baselines in terms of efficiency and safety, attaining shorter path lengths, fewer roundabout paths, and fewer collisions.

Deep-Reinforcement-Learning-based Path Planning for Industrial Robots using Distance Sensors as Observation

Jan 14, 2023

Abstract: Industrial robots are widely used in various manufacturing environments due to their efficiency in doing repetitive tasks such as assembly or welding. A common problem for these applications is to reach a destination without colliding with obstacles or other robot arms. Commonly used sampling-based path planning approaches such as RRT require long computation times, especially in complex environments. Furthermore, the environment in which they are employed needs to be known beforehand. When utilizing the approaches in new environments, a tedious engineering effort in setting hyperparameters is required, which is time- and cost-intensive. On the other hand, Deep Reinforcement Learning has shown remarkable results in dealing with unknown environments, generalizing to new problem instances, and solving motion planning problems efficiently. On that account, this paper proposes a Deep-Reinforcement-Learning-based motion planner for robotic manipulators. We evaluated our model against state-of-the-art sampling-based planners in several experiments. The results show the superiority of our planner in terms of path length and execution time.

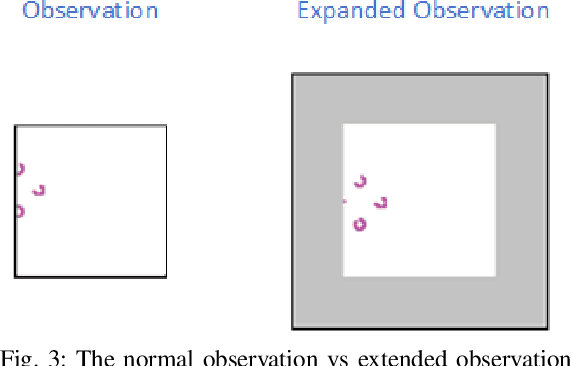

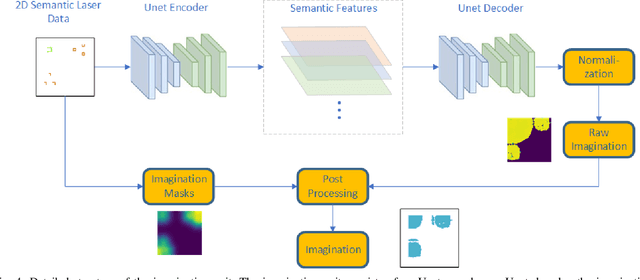

Imagination-augmented Navigation Based on 2D Laser Sensor Observations

Jun 12, 2022

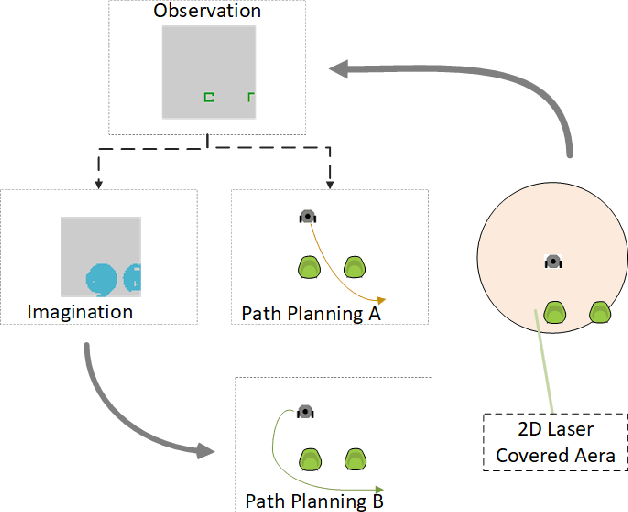

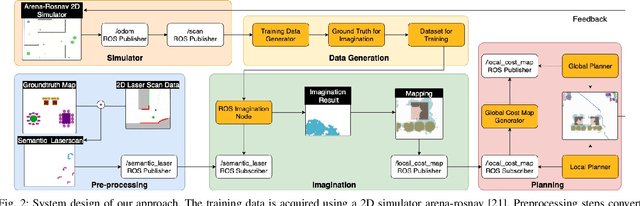

Abstract: Autonomous navigation of mobile robots is an essential task for various industries. Sensor data is crucial to ensure safe and reliable navigation. However, sensor observations are often limited by different factors. Imagination can help enhance the view and aid navigation in dangerous or unknown situations where only limited sensor observation is available. In this paper, we propose an imagination-enhanced navigation approach based on 2D semantic laser scan data. The system contains an imagination module, which can predict the entire occupied area of the object. The imagination module is trained in a supervised manner using a training dataset collected from a 2D simulator. Four different imagination models are trained, and the imagination results are evaluated. Subsequently, the imagination results are integrated into the local and global cost maps to benefit the navigation procedure. The approach is validated on three different test maps, with seven different paths for each map. The qualitative and quantitative results showed that the agent with the imagination module could generate more reliable paths without passing beneath the object, at the cost of a longer path and slower velocity.
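The cost-map integration step mentioned in the abstract can be pictured with a minimal sketch like the one below. It is an illustration under stated assumptions, not the paper's implementation: the function name `merge_imagination`, the nested-list grid layout, and the use of 254 as the "occupied" cost are choices made for this example only.

```python
from typing import List, Sequence

# Assumed cost value for cells that must not be traversed (illustrative).
LETHAL_COST = 254


def merge_imagination(
    costmap: Sequence[Sequence[int]],
    imagined_occupied: Sequence[Sequence[bool]],
    cost: int = LETHAL_COST,
) -> List[List[int]]:
    """Return a new cost map where imagined-occupied cells get at least `cost`.

    Hypothetical helper: both grids are row-major and have the same shape.
    Existing costs are never lowered, so sensor-observed obstacles are kept.
    """
    return [
        [max(c, cost) if occupied else c for c, occupied in zip(cost_row, occ_row)]
        for cost_row, occ_row in zip(costmap, imagined_occupied)
    ]


# The laser scan sees only the four legs of a table (cost 254 at the corners);
# the imagination module predicts that the whole 3x3 footprint is occupied.
observed = [
    [254, 0, 254],
    [0, 0, 0],
    [254, 0, 254],
]
imagined = [[True, True, True] for _ in range(3)]

merged = merge_imagination(observed, imagined)
print(merged)
```

With the full footprint marked as occupied, a planner working on `merged` can no longer route between the observed legs, which matches the behavior the abstract describes as avoiding paths beneath the object.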

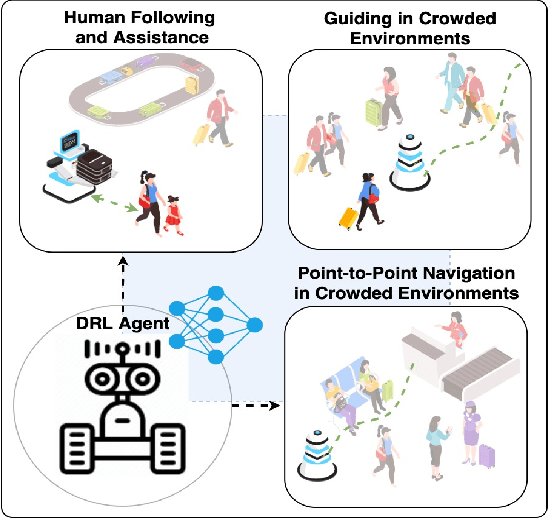

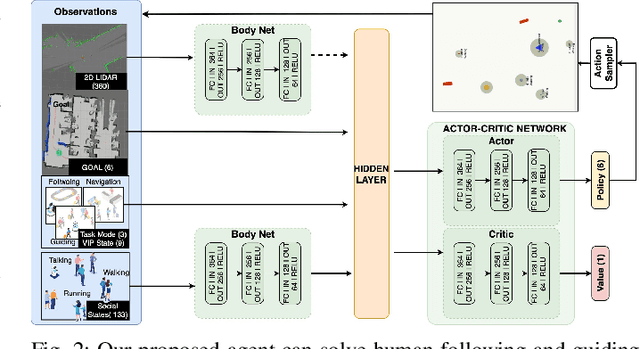

Human-Following and -guiding in Crowded Environments using Semantic Deep-Reinforcement-Learning for Mobile Service Robots

Jun 12, 2022

Abstract: Assistance robots have gained widespread attention in various industries such as logistics and human assistance. The task of guiding or following a human in a crowded environment such as an airport or train station to carry weight or goods is still an open problem. In these use cases, the robot is not only required to intelligently interact with humans, but also to navigate safely among crowds. Highly dynamic environments in particular pose a grand challenge due to the volatile behavior patterns and unpredictable movements of humans. In this paper, we propose a Deep-Reinforcement-Learning-based agent for human-guiding and -following tasks in crowded environments. To this end, we incorporate semantic information to provide the agent with high-level information like the social states of humans, safety models, and class types. We evaluate our proposed approach against a benchmark approach without semantic information and demonstrate enhanced navigational safety and robustness. Moreover, we demonstrate that the agent can learn to adapt its behavior to humans, which improves the human-robot interaction significantly.

Arena-Bench: A Benchmarking Suite for Obstacle Avoidance Approaches in Highly Dynamic Environments

Jun 12, 2022

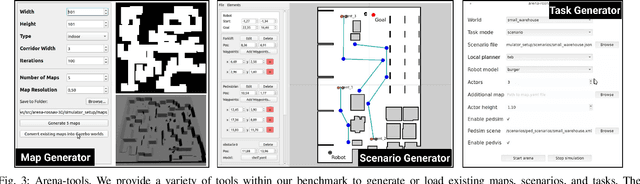

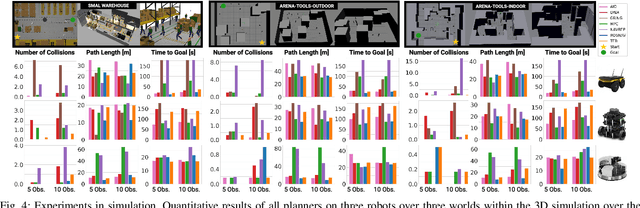

Abstract: The ability to autonomously navigate safely, especially within dynamic environments, is paramount for mobile robotics. In recent years, DRL approaches have shown superior performance in dynamic obstacle avoidance. However, these learning-based approaches are often developed in specially designed simulation environments and are hard to test against conventional planning approaches. Furthermore, the integration and deployment of these approaches into real robotic platforms are not yet completely solved. In this paper, we present Arena-Bench, a benchmark suite to train, test, and evaluate navigation planners on different robotic platforms within 3D environments. It provides tools to design and generate highly dynamic evaluation worlds, scenarios, and tasks for autonomous navigation and is fully integrated into the Robot Operating System. To demonstrate the functionalities of our suite, we trained a DRL agent on our platform and compared it against a variety of existing model-based and learning-based navigation approaches on a variety of relevant metrics. Finally, we deployed the approaches on real robots and demonstrated the reproducibility of the results. The code is publicly available at github.com/ignc-research/arena-bench.
