Teham Bhuiyan

Arena-Rosnav 2.0: A Development and Benchmarking Platform for Robot Navigation in Highly Dynamic Environments

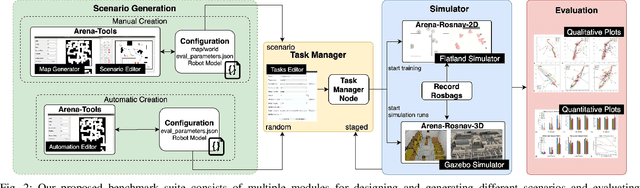

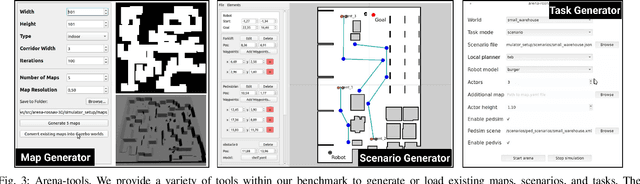

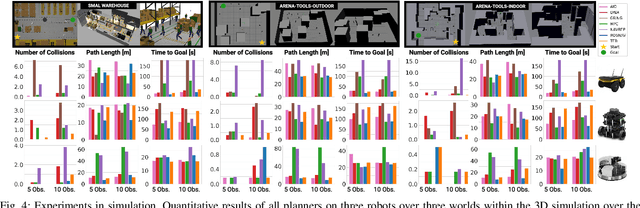

Feb 20, 2023

Abstract: Following up on our previous works, we present Arena-Rosnav 2.0, an extension of Arena-Bench and Arena-Rosnav that adds a variety of modules for developing and benchmarking robotic navigation approaches. The platform has been fundamentally restructured and provides unified APIs for adding functionalities such as planning algorithms, simulators, or evaluation tools. We have included more realistic simulation and pedestrian behavior and provide thorough documentation to lower the entry barrier. We evaluated our system by first conducting a user study in which we asked experienced researchers as well as new practitioners and students to test it. The feedback was mostly positive, and a high number of participants are using our system for other research endeavors. Finally, we demonstrate the feasibility of our system by integrating two new simulators and a variety of state-of-the-art navigation approaches and benchmarking them against one another. The platform is openly available at https://github.com/Arena-Rosnav.

Holistic Deep-Reinforcement-Learning-based Training of Autonomous Navigation Systems

Feb 06, 2023

Abstract: In recent years, Deep Reinforcement Learning has emerged as a promising approach for autonomous navigation of ground vehicles and has been utilized in various areas of navigation such as cruise control, lane changing, or obstacle avoidance. However, most research works either provide an end-to-end solution that trains the whole system using Deep Reinforcement Learning or focus on one specific aspect such as local motion planning. Both, however, come with a number of problems such as catastrophic forgetting, inefficient navigation behavior, and non-optimal synchronization between different entities of the navigation stack. In this paper, we propose a holistic Deep Reinforcement Learning training approach in which the training procedure involves all entities of the navigation stack. This should enhance the synchronization between, and understanding of, all entities of the navigation stack and, as a result, improve navigational performance. We trained several agents with a number of different observation spaces to study the impact of different inputs on the navigation behavior of the agent. In extensive evaluations against multiple learning-based and classic model-based navigation approaches, our proposed agent outperformed the baselines in terms of efficiency and safety, attaining shorter path lengths, less roundabout paths, and fewer collisions.

Deep-Reinforcement-Learning-based Path Planning for Industrial Robots using Distance Sensors as Observation

Jan 14, 2023

Abstract: Industrial robots are widely used in various manufacturing environments due to their efficiency in doing repetitive tasks such as assembly or welding. A common problem in these applications is reaching a destination without colliding with obstacles or other robot arms. Commonly used sampling-based path planning approaches such as RRT require long computation times, especially in complex environments. Furthermore, the environment in which they are employed needs to be known beforehand. When the approaches are used in new environments, hyperparameters must be tuned in a tedious engineering effort that is time- and cost-intensive. On the other hand, Deep Reinforcement Learning has shown remarkable results in dealing with unknown environments, generalizing to new problem instances, and solving motion planning problems efficiently. On that account, this paper proposes a Deep-Reinforcement-Learning-based motion planner for robotic manipulators. We evaluated our model against state-of-the-art sampling-based planners in several experiments. The results show the superiority of our planner in terms of path length and execution time.

Arena-Bench: A Benchmarking Suite for Obstacle Avoidance Approaches in Highly Dynamic Environments

Jun 12, 2022

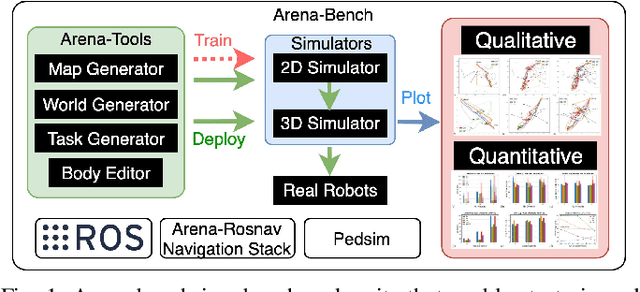

Abstract: The ability to navigate autonomously and safely, especially within dynamic environments, is paramount for mobile robotics. In recent years, DRL approaches have shown superior performance in dynamic obstacle avoidance. However, these learning-based approaches are often developed in specially designed simulation environments and are hard to test against conventional planning approaches. Furthermore, the integration and deployment of these approaches on real robotic platforms are not yet completely solved. In this paper, we present Arena-Bench, a benchmark suite to train, test, and evaluate navigation planners on different robotic platforms within 3D environments. It provides tools to design and generate highly dynamic evaluation worlds, scenarios, and tasks for autonomous navigation and is fully integrated into the Robot Operating System (ROS). To demonstrate the functionalities of our suite, we trained a DRL agent on our platform and compared it against a variety of existing model-based and learning-based navigation approaches on a variety of relevant metrics. Finally, we deployed the approaches on real robots and demonstrated the reproducibility of the results. The code is publicly available at github.com/ignc-research/arena-bench.
