Joe Ledsam

The unreasonable effectiveness of AI CADe polyp detectors to generalize to new countries

Dec 17, 2023

Abstract: $\textbf{Background and aims}$: Artificial Intelligence (AI) Computer-Aided Detection (CADe) is commonly used for polyp detection, but data seen in clinical settings can differ from model training. Few studies evaluate how well CADe detectors perform on colonoscopies from countries not seen during training, and none are able to evaluate performance without collecting expensive and time-intensive labels. $\textbf{Methods}$: We trained a CADe polyp detector on Israeli colonoscopy videos (5004 videos, 1106 hours) and evaluated it on Japanese videos (354 videos, 128 hours) by measuring the True Positive Rate (TPR) versus false alarms per minute (FAPM). We introduce a colonoscopy dissimilarity measure called "MAsked mediCal Embedding Distance" (MACE) to quantify differences between colonoscopies, without labels. We evaluated CADe on all Japan videos and on those with the highest MACE. $\textbf{Results}$: MACE correctly quantifies that narrow-band imaging (NBI) and chromoendoscopy (CE) frames are less similar to Israel data than Japan white-light frames (bootstrapped z-test, |z| > 690, p < $10^{-8}$ for both). Despite differences in the data, CADe performance on Japan colonoscopies was non-inferior to Israel ones without additional training (TPR at 0.5 FAPM: 0.957 and 0.972 for Israel and Japan; TPR at 1.0 FAPM: 0.972 and 0.989 for Israel and Japan; superiority test t > 45.2, p < $10^{-8}$). Despite the detector not being trained on NBI or CE, TPR on those subsets was non-inferior to Japan overall (non-inferiority test t > 47.3, p < $10^{-8}$, $\delta$ = 1.5% for both). $\textbf{Conclusion}$: Differences that prevent CADe detectors from performing well in non-medical settings do not degrade the performance of our AI CADe polyp detector when applied to data from a new country. MACE can help medical AI models internationalize by identifying the most "dissimilar" data on which to evaluate models.
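The abstract reports TPR at fixed false-alarm budgets (0.5 and 1.0 FAPM) but does not spell out how that operating point is computed. The sketch below is one plausible reading, not the authors' evaluation code. It assumes each ground-truth polyp has been reduced to a single detector score and each false-alarm event to a single score; the function name `tpr_at_fapm` and its inputs are hypothetical.

```python
import math

def tpr_at_fapm(polyp_scores, false_alarm_scores, minutes, target_fapm):
    """TPR at the lowest threshold that keeps false alarms per minute <= target.

    polyp_scores: one detector score per ground-truth polyp (assumed input).
    false_alarm_scores: one score per false-alarm event (assumed input).
    minutes: total video duration in minutes.
    """
    # Number of false alarms the budget allows over the whole video set.
    allowed = int(math.floor(target_fapm * minutes))
    ranked = sorted(false_alarm_scores, reverse=True)
    if allowed >= len(ranked):
        # Budget covers every false alarm, so no threshold is needed.
        threshold = -math.inf
    else:
        # Scores strictly above this value admit at most `allowed` false alarms.
        threshold = ranked[allowed]
    detected = sum(1 for s in polyp_scores if s > threshold)
    return detected / len(polyp_scores)

# Toy numbers for illustration only; they are not taken from the study.
toy_polyps = [0.9, 0.8, 0.4, 0.2]
toy_false_alarms = [0.95, 0.5, 0.3, 0.1]
tpr_half = tpr_at_fapm(toy_polyps, toy_false_alarms, minutes=4, target_fapm=0.5)
tpr_one = tpr_at_fapm(toy_polyps, toy_false_alarms, minutes=4, target_fapm=1.0)
```

Fixing the false-alarm rate rather than the threshold makes the Israel and Japan numbers comparable even if the score distributions shift between countries.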

Predicting optical coherence tomography-derived diabetic macular edema grades from fundus photographs using deep learning

Oct 18, 2018

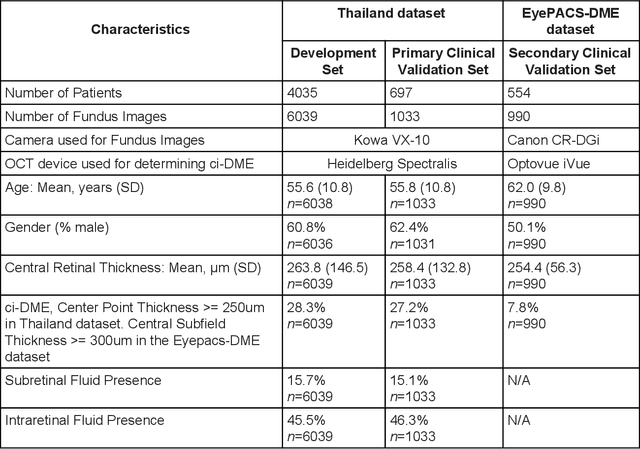

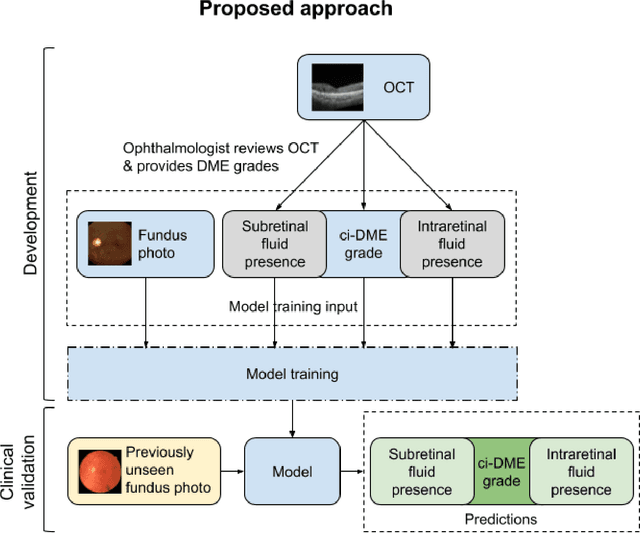

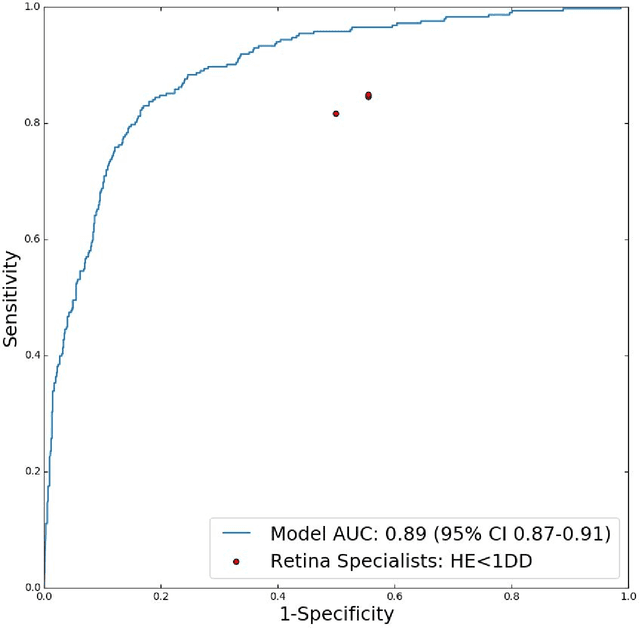

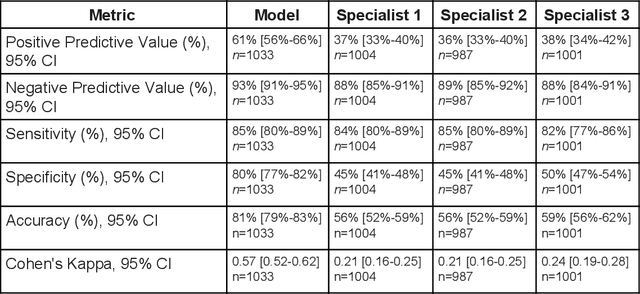

Abstract: Diabetic eye disease is one of the fastest growing causes of preventable blindness. With the advent of anti-VEGF (vascular endothelial growth factor) therapies, it has become increasingly important to detect center-involved diabetic macular edema (DME). However, center-involved DME is diagnosed using optical coherence tomography (OCT), which is not generally available at screening sites because of cost and workflow constraints. Instead, screening programs rely on the detection of hard exudates as a proxy for DME on color fundus photographs, often resulting in high false positive or false negative calls. To improve the accuracy of DME screening, we trained a deep learning model to use color fundus photographs to predict DME grades derived from OCT exams. Our "OCT-DME" model had an AUC of 0.89 (95% CI: 0.87-0.91), which corresponds to a sensitivity of 85% at a specificity of 80%. In comparison, three retina specialists had similar sensitivities (82-85%), but only half the specificity (45-50%, p<0.001 for each comparison with model). The positive predictive value (PPV) of the OCT-DME model was 61% (95% CI: 56-66%), approximately double the 36-38% achieved by the retina specialists. In addition, we used saliency and other techniques to examine how the model makes its predictions. The ability of deep learning algorithms to make clinically relevant predictions that generally require sophisticated 3D-imaging equipment from simple 2D images has broad relevance to many other applications in medical imaging.
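The abstract compares the model and the specialists on sensitivity, specificity, and PPV. The standard relation between these quantities (Bayes' rule) shows why halving specificity at similar sensitivity lowers PPV so sharply. The sketch below is illustrative only: the prevalence value is a hypothetical placeholder, since the abstract does not state the prevalence in the evaluation set, and `ppv` is a helper name introduced here.

```python
def ppv(sensitivity, specificity, prevalence):
    """Positive predictive value from sensitivity, specificity, and prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical prevalence, chosen only to illustrate the formula.
ASSUMED_PREVALENCE = 0.25

# Operating points quoted in the abstract: similar sensitivity, different specificity.
ppv_high_spec = ppv(0.85, 0.80, ASSUMED_PREVALENCE)
ppv_low_spec = ppv(0.85, 0.50, ASSUMED_PREVALENCE)
```

At a fixed prevalence, the false-positive term `(1 - specificity) * (1 - prevalence)` dominates the denominator when specificity is low, which is the mechanism behind the gap in PPV.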

Deep learning for predicting refractive error from retinal fundus images

Dec 21, 2017

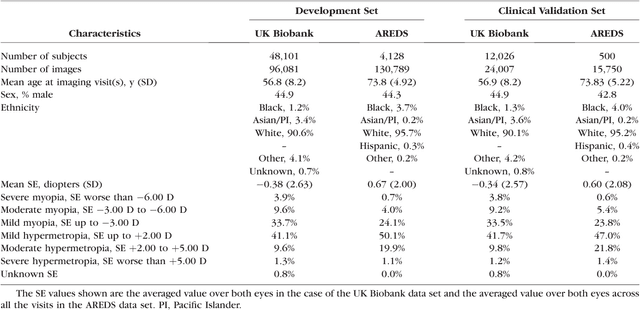

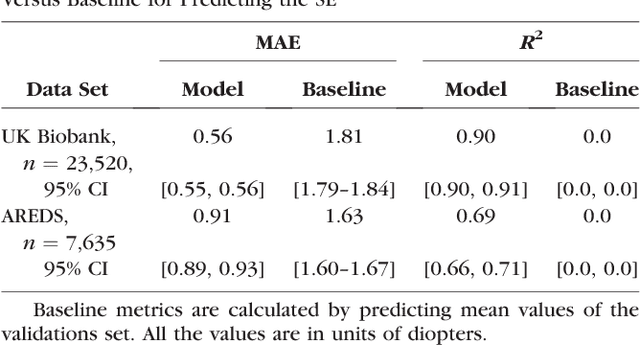

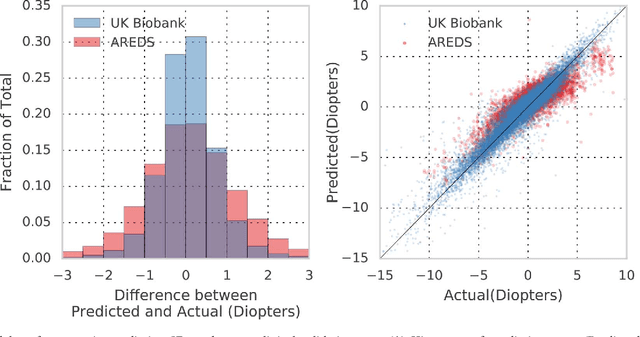

Abstract: Refractive error, one of the leading causes of visual impairment, can be corrected by simple interventions like prescribing eyeglasses. We trained a deep learning algorithm to predict refractive error from fundus photographs of participants in the UK Biobank cohort (45 degree field of view images) and the AREDS clinical trial (30 degree field of view images). Our model uses the "attention" method to identify features that are correlated with refractive error. The main outcome measure was the mean absolute error (MAE) of the algorithm's prediction compared to the refractive error obtained in the AREDS and UK Biobank. The resulting algorithm had an MAE of 0.56 diopters (95% CI: 0.55-0.56) for estimating spherical equivalent on the UK Biobank dataset and 0.91 diopters (95% CI: 0.89-0.92) for the AREDS dataset. The baseline expected MAE (obtained by simply predicting the mean of this population) was 1.81 diopters (95% CI: 1.79-1.84) for UK Biobank and 1.63 diopters (95% CI: 1.60-1.67) for AREDS. Attention maps suggested that the foveal region was one of the most important areas used by the algorithm to make this prediction, though other regions also contribute to the prediction. The ability to estimate refractive error with high accuracy from retinal fundus photos was not previously known and demonstrates that deep learning can be applied to make novel predictions from medical images. Given that several groups have recently shown that it is feasible to obtain retinal fundus photos using mobile phones and inexpensive attachments, this work may be particularly relevant in regions of the world where autorefractors may not be readily available.
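The comparison between the model's MAE and the "predict the population mean" baseline can be made concrete with a short sketch. The helper names and the toy diopter values below are hypothetical and are not drawn from UK Biobank or AREDS.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute difference between predictions and ground truth."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)

def baseline_mae(actual):
    """MAE obtained by predicting the population mean for every eye."""
    mean_value = sum(actual) / len(actual)
    return mean_absolute_error([mean_value] * len(actual), actual)

# Toy spherical-equivalent values in diopters, for illustration only.
toy_actual = [-2.0, 0.0, 1.0, 3.0]
toy_predicted = [-1.5, 0.5, 1.0, 2.0]

model_error = mean_absolute_error(toy_predicted, toy_actual)
baseline_error = baseline_mae(toy_actual)
```

A model is only informative to the extent that its MAE falls below this baseline, which is why the abstract reports both figures for each dataset.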
