Jinxiao Zhang

RS-Prune: Training-Free Data Pruning at High Ratios for Efficient Remote Sensing Diffusion Foundation Models

Dec 29, 2025

Abstract: Diffusion-based remote sensing (RS) generative foundation models are crucial for downstream tasks. However, these models rely on large amounts of globally representative data, which often contain redundancy, noise, and class imbalance, reducing training efficiency and preventing convergence. Existing RS diffusion foundation models typically aggregate multiple classification datasets or apply simplistic deduplication, overlooking the distributional requirements of generative modeling and the heterogeneity of RS imagery. To address these limitations, we propose a training-free, two-stage data pruning approach that quickly selects a high-quality subset under high pruning ratios, enabling a preliminary foundation model to converge rapidly and serve as a versatile backbone for generation, downstream fine-tuning, and other applications. Our method jointly considers local information content with global scene-level diversity and representativeness. First, an entropy-based criterion efficiently removes low-information samples. Next, leveraging RS scene classification datasets as reference benchmarks, we perform scene-aware clustering with stratified sampling to improve clustering effectiveness while reducing computational costs on large-scale unlabeled data. Finally, by balancing cluster-level uniformity and sample representativeness, the method enables fine-grained selection under high pruning ratios while preserving overall diversity and representativeness. Experiments show that, even after pruning 85% of the training data, our method significantly improves convergence and generation quality. Furthermore, diffusion foundation models trained with our method consistently achieve state-of-the-art performance across downstream tasks, including super-resolution and semantic image synthesis. This data pruning paradigm offers practical guidance for developing RS generative foundation models.

Task-Oriented Data Synthesis and Control-Rectify Sampling for Remote Sensing Semantic Segmentation

Dec 18, 2025

Abstract: With the rapid progress of controllable generation, training data synthesis has become a promising way to expand labeled datasets and alleviate manual annotation in remote sensing (RS). However, the complexity of semantic mask control and the uncertainty of sampling quality often limit the utility of synthetic data in downstream semantic segmentation tasks. To address these challenges, we propose a task-oriented data synthesis framework (TODSynth), including a Multimodal Diffusion Transformer (MM-DiT) with unified triple attention and a plug-and-play sampling strategy guided by task feedback. Built upon the powerful DiT-based generative foundation model, we systematically evaluate different control schemes, showing that a text-image-mask joint attention scheme combined with full fine-tuning of the image and mask branches significantly enhances the effectiveness of RS semantic segmentation data synthesis, particularly in few-shot and complex-scene scenarios. Furthermore, we propose a control-rectify flow matching (CRFM) method, which dynamically adjusts sampling directions guided by semantic loss during the early high-plasticity stage, mitigating the instability of generated images and bridging the gap between synthetic data and downstream segmentation tasks. Extensive experiments demonstrate that our approach consistently outperforms state-of-the-art controllable generation methods, producing more stable and task-oriented synthetic data for RS semantic segmentation.

Urban1960SatSeg: Unsupervised Semantic Segmentation of Mid-20$^{th}$ century Urban Landscapes with Satellite Imageries

Jun 12, 2025

Abstract: Historical satellite imagery, such as mid-20$^{th}$ century Keyhole data, offers rare insights into early urban development and long-term transformation. However, severe quality degradation (e.g., distortion, misalignment, and spectral scarcity) and the absence of annotations have long hindered semantic segmentation on such historical RS imagery. To bridge this gap and enhance understanding of urban development, we introduce $\textbf{Urban1960SatBench}$, an annotated segmentation dataset based on historical satellite imagery with the earliest observation time among all existing segmentation datasets, along with a benchmark framework for unsupervised segmentation tasks, $\textbf{Urban1960SatUSM}$. First, $\textbf{Urban1960SatBench}$ serves as a novel, expertly annotated semantic segmentation dataset built on mid-20$^{th}$ century Keyhole imagery, covering 1,240 km$^2$ and key urban classes (buildings, roads, farmland, water). As the earliest segmentation dataset of its kind, it provides a pioneering benchmark for historical urban understanding. Second, $\textbf{Urban1960SatUSM}$ (Unsupervised Segmentation Model) is a novel unsupervised semantic segmentation framework for historical RS imagery. It employs a confidence-aware alignment mechanism and a focal-confidence loss based on a self-supervised learning architecture, which generates robust pseudo-labels and adaptively prioritizes prediction difficulty and label reliability to improve unsupervised segmentation on noisy historical data without manual supervision. Experiments show that Urban1960SatUSM significantly outperforms existing unsupervised segmentation methods on Urban1960SatSeg for segmenting historical urban scenes, paving the way for quantitative studies of long-term urban change using modern computer vision. Our benchmark and supplementary material are available at https://github.com/Tianxiang-Hao/Urban1960SatSeg.

TianQuan-Climate: A Subseasonal-to-Seasonal Global Weather Model via Incorporate Climatology State

Apr 14, 2025

Abstract: Subseasonal forecasting serves as an important support for Sustainable Development Goals (SDGs), such as climate challenges, agricultural yield and sustainable energy production. However, subseasonal forecasting is a complex task in meteorology due to dissipating initial conditions and delayed external forces. Although AI models are increasingly pushing the boundaries of this forecasting limit, they face two major challenges: error accumulation and smoothness. To address these two challenges, we propose Climate Furnace Subseasonal-to-Seasonal (TianQuan-Climate), a novel machine learning model designed to provide global daily mean forecasts up to 45 days, covering five upper-air atmospheric variables at 13 pressure levels and two surface variables. Our proposed TianQuan-Climate has two advantages: 1) it utilizes a multi-model prediction strategy to reduce system error impacts in long-term subseasonal forecasts; 2) it incorporates a Content Fusion Module for climatological integration and extends ViT with uncertainty blocks (UD-ViT) to improve generalization by learning from uncertainty. We demonstrate the effectiveness of TianQuan-Climate on benchmarks for weather forecasting and climate projections within the 15- to 45-day range, where TianQuan-Climate outperforms existing numerical and AI methods.

Evidential Graph Contrastive Alignment for Source-Free Blending-Target Domain Adaptation

Aug 14, 2024

Abstract: In this paper, we first tackle a more realistic Domain Adaptation (DA) setting: Source-Free Blending-Target Domain Adaptation (SF-BTDA), where source domain data are inaccessible and multiple target domains are mixed without any prior domain labels. Compared to existing DA scenarios, SF-BTDA generally faces the co-existence of different label shifts in different targets, along with noisy target pseudo labels generated from the source model. We propose a new method called Evidential Contrastive Alignment (ECA) to decouple the blending target domain and alleviate the effect of noisy target pseudo labels. First, to improve the quality of pseudo target labels, we propose a calibrated evidential learning module to iteratively improve both the accuracy and certainty of the resulting model and adaptively generate high-quality pseudo target labels. Second, we design graph contrastive learning with the domain distance matrix and a confidence-uncertainty criterion to minimize the distribution gap between samples of the same class in the blended target domains, which alleviates the co-existence of different label shifts in blended targets. We construct a new benchmark based on three standard DA datasets; ECA outperforms other methods with considerable gains and achieves results comparable to those of methods that have prior access to domain labels or source data.

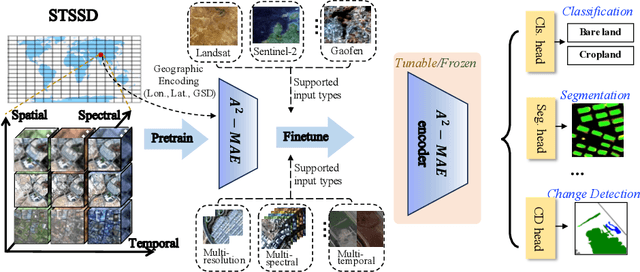

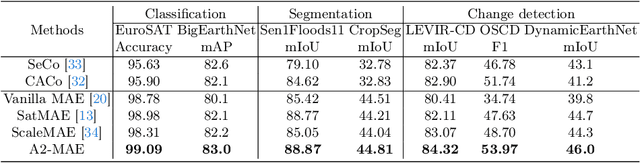

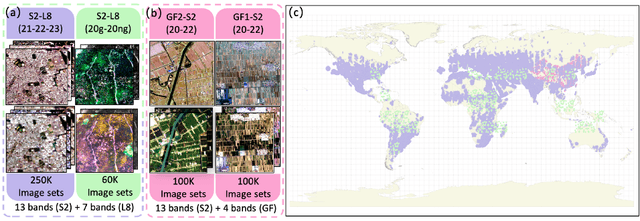

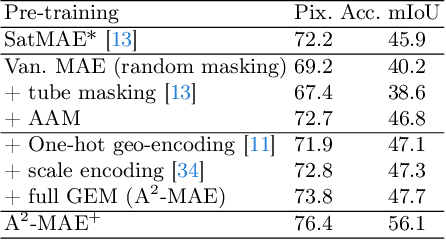

A$^{2}$-MAE: A spatial-temporal-spectral unified remote sensing pre-training method based on anchor-aware masked autoencoder

Jun 12, 2024

Abstract: Vast amounts of remote sensing (RS) data provide Earth observations across multiple dimensions, encompassing critical spatial, temporal, and spectral information which is essential for addressing global-scale challenges such as land use monitoring, disaster prevention, and environmental change mitigation. Despite various pre-training methods tailored to the characteristics of RS data, a key limitation persists: the inability to effectively integrate spatial, temporal, and spectral information within a single unified model. To unlock the potential of RS data, we construct a Spatial-Temporal-Spectral Structured Dataset (STSSD) characterized by the incorporation of multiple RS sources, diverse coverage, unified locations within image sets, and heterogeneity within images. Building upon this structured dataset, we propose an Anchor-Aware Masked AutoEncoder method (A$^{2}$-MAE), leveraging intrinsic complementary information from the different kinds of images and geo-information to reconstruct the masked patches during the pre-training phase. A$^{2}$-MAE integrates an anchor-aware masking strategy and a geographic encoding module to comprehensively exploit the properties of RS images. Specifically, the proposed anchor-aware masking strategy dynamically adapts the masking process based on the meta-information of a pre-selected anchor image, thereby facilitating the training on images captured by diverse types of RS sources within one model. Furthermore, we propose a geographic encoding method to leverage accurate spatial patterns, enhancing the model generalization capabilities for downstream applications that are generally location-related. Extensive experiments demonstrate our method achieves comprehensive improvements across various downstream tasks compared with existing RS pre-training methods, including image classification, semantic segmentation, and change detection tasks.

FUSU: A Multi-temporal-source Land Use Change Segmentation Dataset for Fine-grained Urban Semantic Understanding

May 29, 2024

Abstract: Fine urban change segmentation using multi-temporal remote sensing images is essential for understanding human-environment interactions. Despite advances in remote sensing data for urban monitoring, coarse-grained classification systems and the lack of continuous temporal observations hinder the application of deep learning to urban change analysis. To address this, we introduce FUSU, a multi-source, multi-temporal change segmentation dataset for fine-grained urban semantic understanding. FUSU features the most detailed land use classification system to date, with 17 classes and 30 billion pixels of annotations. It includes bi-temporal high-resolution satellite images with 20-50 cm ground sample distance and monthly optical and radar satellite time series, covering 847 km$^2$ across five urban areas in China. The fine-grained pixel-wise annotations and high spatial-temporal resolution data provide a robust foundation for deep learning models to understand urbanization and land use changes. To fully leverage FUSU, we propose a unified time-series architecture for both change detection and segmentation and benchmark various methods on FUSU for several tasks. Dataset and code will be available at: https://github.com/yuanshuai0914/FUSU.

Decomposing weather forecasting into advection and convection with neural networks

May 10, 2024

Abstract: Operational weather forecasting models have advanced for decades through both explicit numerical solvers and empirical physical parameterization schemes. However, the high computational costs and uncertainties of these existing schemes call for improvements through alternative machine learning methods. Previous works use a unified model to learn the dynamics and physics of the atmospheric model. In contrast, we propose a simple yet effective machine learning model that learns the horizontal movement in the dynamical core and the vertical movement in the physical parameterization separately. By replacing the advection with a graph attention network and the convection with a multi-layer perceptron, our model provides a new and efficient perspective to simulate the transition of variables in atmospheric models. We also assess the model's performance over a 5-day iterative forecast. Under the same input variables and training methods, our model outperforms existing data-driven methods with a significantly reduced number of parameters at a resolution of 5.625°. Overall, this work aims to contribute to the ongoing efforts that leverage machine learning techniques for improving both the accuracy and efficiency of global weather forecasting.

Building Bridges across Spatial and Temporal Resolutions: Reference-Based Super-Resolution via Change Priors and Conditional Diffusion Model

Mar 26, 2024

Abstract: Reference-based super-resolution (RefSR) has the potential to build bridges across spatial and temporal resolutions of remote sensing images. However, existing RefSR methods are limited by the faithfulness of content reconstruction and the effectiveness of texture transfer at large scaling factors. Conditional diffusion models have opened up new opportunities for generating realistic high-resolution images, but effectively utilizing reference images within these models remains an area for further exploration. Furthermore, content fidelity is difficult to guarantee in areas without relevant reference information. To solve these issues, we propose a change-aware diffusion model named Ref-Diff for RefSR, using land cover change priors to guide the denoising process explicitly. Specifically, we inject the priors into the denoising model to improve the utilization of reference information in unchanged areas and regulate the reconstruction of semantically relevant content in changed areas. With this powerful guidance, we decouple the semantics-guided denoising and reference texture-guided denoising processes to improve the model performance. Extensive experiments demonstrate the superior effectiveness and robustness of the proposed method compared with state-of-the-art RefSR methods in both quantitative and qualitative evaluations. The code and data are available at https://github.com/dongrunmin/RefDiff.

DeepLight: Reconstructing High-Resolution Observations of Nighttime Light With Multi-Modal Remote Sensing Data

Feb 24, 2024

Abstract: Nighttime light (NTL) remote sensing observation serves as a unique proxy for quantitatively assessing progress toward meeting a series of Sustainable Development Goals (SDGs), such as poverty estimation, urban sustainable development, and carbon emission. However, existing NTL observations often suffer from pervasive degradation and inconsistency, limiting their utility for computing the indicators defined by the SDGs. In this study, we propose a novel approach to reconstruct high-resolution NTL images using multi-modal remote sensing data. To support this research endeavor, we introduce DeepLightMD, a comprehensive dataset comprising data from five heterogeneous sensors, offering fine spatial resolution and rich spectral information at a national scale. Additionally, we present DeepLightSR, a calibration-aware method for building bridges between spatially heterogeneous modality data in multi-modality super-resolution. DeepLightSR integrates calibration-aware alignment, an auxiliary-to-main multi-modality fusion, and an auxiliary-embedded refinement to effectively address spatial heterogeneity, fuse diversely representative features, and enhance performance in $8\times$ super-resolution (SR) tasks. Extensive experiments demonstrate the superiority of DeepLightSR over 8 competing methods, as evidenced by improvements in PSNR (2.01 dB $\sim$ 13.25 dB) and PIQE (0.49 $\sim$ 9.32). Our findings underscore the practical significance of our proposed dataset and model in reconstructing high-resolution NTL data, supporting efficient and quantitative assessment of SDG progress.
