Jieun Kim

K-EXAONE Technical Report

Jan 05, 2026

Abstract: This technical report presents K-EXAONE, a large-scale multilingual language model developed by LG AI Research. K-EXAONE is built on a Mixture-of-Experts architecture with 236B total parameters, activating 23B parameters during inference. It supports a 256K-token context window and covers six languages: Korean, English, Spanish, German, Japanese, and Vietnamese. We evaluate K-EXAONE on a comprehensive benchmark suite spanning reasoning, agentic, general, Korean, and multilingual abilities. Across these evaluations, K-EXAONE demonstrates performance comparable to open-weight models of similar size. K-EXAONE, designed to advance AI for a better life, is positioned as a powerful proprietary AI foundation model for a wide range of industrial and research applications.

Image-Guided Microstructure Optimization using Diffusion Models: Validated with Li-Mn-rich Cathode Precursors

May 12, 2025

Abstract: Microstructure often dictates materials performance, yet it is rarely treated as an explicit design variable because microstructure is hard to quantify, predict, and optimize. Here, we introduce an image-centric, closed-loop framework that turns microstructural morphology into a controllable objective and demonstrate its use with Li- and Mn-rich layered oxide cathode precursors. This work presents an integrated, AI-driven framework for the predictive design and optimization of lithium-ion battery cathode precursor synthesis. The framework integrates a diffusion-based image generation model, a quantitative image analysis pipeline, and a particle swarm optimization (PSO) algorithm. By extracting key morphological descriptors such as texture, sphericity, and median particle size (D50) from SEM images, the platform accurately predicts SEM-like morphologies resulting from specific coprecipitation conditions, including reaction time-, solution concentration-, and pH-dependent structural changes. Optimization then pinpoints synthesis parameters that yield user-defined target morphologies, as experimentally validated by the close agreement between predicted and synthesized structures. This framework offers a practical strategy for data-driven materials design, enabling both forward prediction and inverse design of synthesis conditions and paving the way toward autonomous, image-guided microstructure engineering.
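The inverse-design step described in this abstract can be illustrated with a minimal particle swarm optimization sketch. Everything specific here is an assumption made for illustration: `toy_predict_descriptors` is a hypothetical linear stand-in for the diffusion model plus image analysis pipeline, and the parameter names, bounds, and PSO coefficients are not taken from the paper.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Basic global-best PSO; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # each particle's best position so far
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]  # swarm-wide best so far

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle and clamp it to the search bounds.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def toy_predict_descriptors(params):
    """Hypothetical surrogate: (reaction time in h, pH) -> (D50, sphericity)."""
    time_h, ph = params
    return [2.0 + 0.5 * time_h, 0.5 + 0.05 * ph]


# Assumed target morphology, expressed as descriptor values.
target = [7.0, 0.9]


def descriptor_loss(params):
    predicted = toy_predict_descriptors(params)
    return sum((p - t) ** 2 for p, t in zip(predicted, target))


best_params, best_loss = pso_minimize(descriptor_loss, bounds=[(1.0, 24.0), (7.0, 9.0)])
```

In a real pipeline the surrogate would be replaced by the forward model that maps synthesis conditions to descriptors, and the loss would compare descriptors extracted from generated images against the user-defined target.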

Multi-LLM Text Summarization

Dec 20, 2024

Abstract: In this work, we propose a multi-LLM summarization framework and investigate two multi-LLM strategies: centralized and decentralized. Our multi-LLM summarization framework has two fundamentally important steps at each round of conversation: generation and evaluation. These steps differ depending on whether the decentralized or the centralized strategy is used. In both strategies, k different LLMs generate diverse summaries of the text. During evaluation, however, the centralized approach uses a single LLM to evaluate the summaries and select the best one, whereas the decentralized approach uses k LLMs. Overall, we find that our multi-LLM summarization approaches significantly outperform the baselines that leverage only a single LLM by up to 3x. These results indicate the effectiveness of multi-LLM approaches for summarization.
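One round of the generation and evaluation steps might be sketched as follows. This is a minimal sketch under stated assumptions: the "models" are hypothetical stub functions rather than real LLM calls, and the decentralized evaluation is assumed to be a simple majority vote, which the abstract does not specify.

```python
from collections import Counter
from typing import Callable, List, Sequence

Generator = Callable[[str], str]                 # text -> summary
Evaluator = Callable[[str, Sequence[str]], int]  # (text, candidates) -> chosen index


def generate_candidates(text: str, generators: Sequence[Generator]) -> List[str]:
    """Generation step: each of the k models proposes a summary."""
    return [generate(text) for generate in generators]


def centralized_round(text: str, generators: Sequence[Generator],
                      judge: Evaluator) -> str:
    """Evaluation by a single model that selects the best candidate."""
    candidates = generate_candidates(text, generators)
    return candidates[judge(text, candidates)]


def decentralized_round(text: str, generators: Sequence[Generator],
                        evaluators: Sequence[Evaluator]) -> str:
    """Evaluation by k models; assumed here to be a majority vote.

    Ties go to the index that was voted for first (Counter insertion order).
    """
    candidates = generate_candidates(text, generators)
    votes = Counter(evaluate(text, candidates) for evaluate in evaluators)
    winner, _ = votes.most_common(1)[0]
    return candidates[winner]


# Hypothetical stand-ins for LLM generators.
def first_sentence(text: str) -> str:
    return text.split(". ")[0] + "."


def first_six_words(text: str) -> str:
    return " ".join(text.split()[:6])


def first_ten_chars(text: str) -> str:
    return text[:10]


# Hypothetical stand-ins for LLM evaluators.
def pick_shortest(text: str, candidates: Sequence[str]) -> int:
    return min(range(len(candidates)), key=lambda i: len(candidates[i]))


def pick_complete_sentence(text: str, candidates: Sequence[str]) -> int:
    return next(i for i, c in enumerate(candidates) if c.endswith("."))


document = "Cats sleep a lot. They also hunt at night. Many people keep cats."
models = [first_sentence, first_six_words, first_ten_chars]

central = centralized_round(document, models, judge=pick_shortest)
decentral = decentralized_round(
    document, models,
    evaluators=[pick_complete_sentence, pick_shortest, pick_complete_sentence],
)
```

The two functions share the generation step and differ only in who evaluates, which mirrors the distinction the abstract draws between the strategies.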

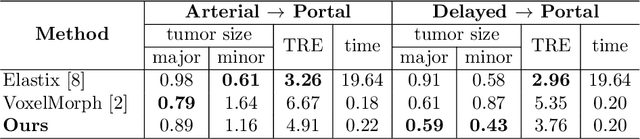

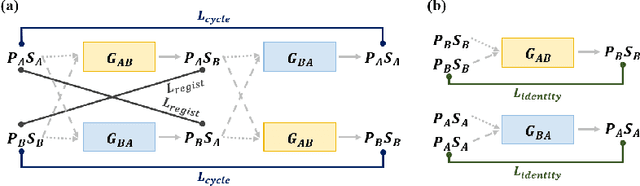

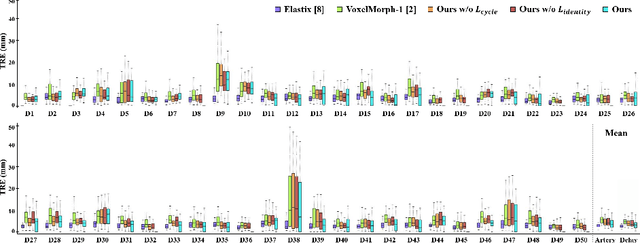

CycleMorph: Cycle Consistent Unsupervised Deformable Image Registration

Aug 13, 2020

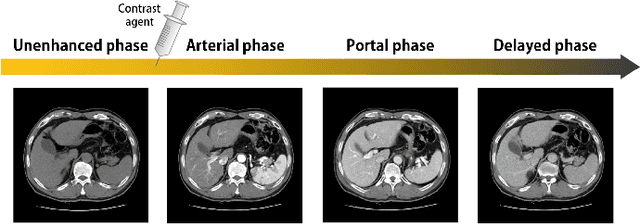

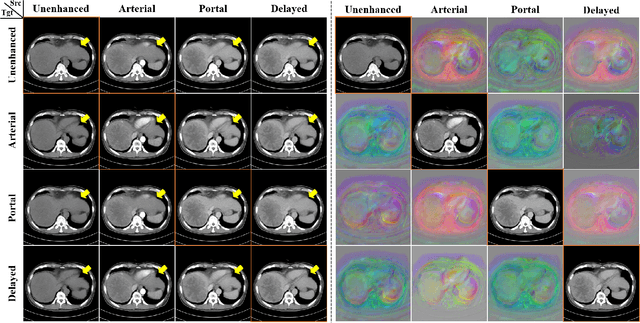

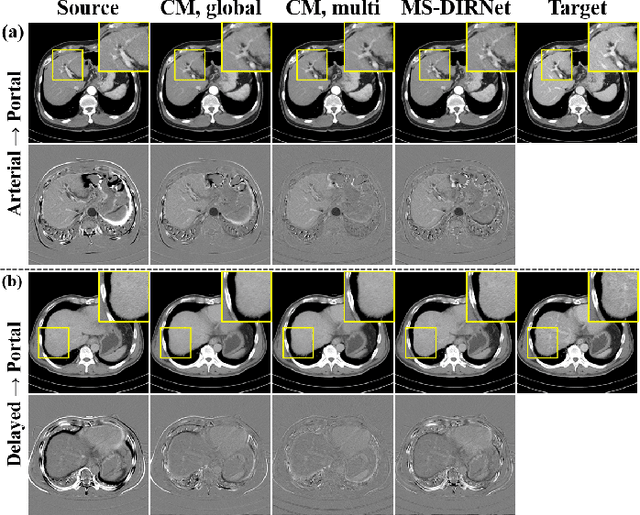

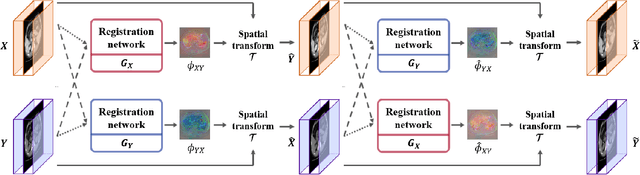

Abstract: Image registration is a fundamental task in medical image analysis. Recently, deep learning based image registration methods have been extensively investigated because they deliver excellent performance with ultra-fast computation. However, existing deep learning methods are still limited in preserving the original topology during deformation with registration vector fields. To address this issue, we present a cycle-consistent deformable image registration method. The cycle consistency enhances image registration performance by providing an implicit regularization that preserves topology during the deformation. The proposed method is flexible enough to be applied to both 2D and 3D registration problems across various applications, and it can be easily extended to a multi-scale implementation to deal with memory issues in large-volume registration. Experimental results on various datasets from medical and non-medical applications demonstrate that the proposed method provides effective and accurate registration on diverse image pairs within a few seconds. Qualitative and quantitative evaluations of the deformation fields also verify the effectiveness of the cycle consistency of the proposed method.
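The idea behind the cycle-consistency term can be shown on a toy 1D signal: deform the signal with a forward field, deform the result with a backward field, and penalize the difference from the original. This is an illustrative sketch only; the fixed displacement fields, the linear-interpolation `warp_1d` helper, and the L1 penalty are assumptions for the example, whereas the method in the abstract uses learned registration networks on 2D and 3D images.

```python
import math


def warp_1d(signal, displacement):
    """Resample signal at positions i + displacement[i] with linear interpolation."""
    n = len(signal)
    warped = []
    for i in range(n):
        # Clamp the sampling position to the valid index range.
        pos = min(max(i + displacement[i], 0.0), n - 1.0)
        left = int(math.floor(pos))
        right = min(left + 1, n - 1)
        frac = pos - left
        warped.append((1.0 - frac) * signal[left] + frac * signal[right])
    return warped


def cycle_consistency_loss(signal, field_forward, field_backward):
    """Mean absolute difference between the signal and its forward-then-backward warp."""
    moved = warp_1d(signal, field_forward)
    restored = warp_1d(moved, field_backward)
    return sum(abs(r - s) for r, s in zip(restored, signal)) / len(signal)


n = 64
# Gaussian bump centered in the domain, so border clamping has negligible effect.
bump = [math.exp(-((i - 32) ** 2) / (2 * 4.0 ** 2)) for i in range(n)]

forward = [2.0] * n            # shift sampling by +2 samples
exact_inverse = [-2.0] * n     # undoes the forward field
wrong_inverse = [-1.0] * n     # leaves a residual one-sample shift

loss_consistent = cycle_consistency_loss(bump, forward, exact_inverse)
loss_inconsistent = cycle_consistency_loss(bump, forward, wrong_inverse)
```

A pair of fields that invert each other gives a loss near zero, while a mismatched pair is penalized, which is the sense in which the term acts as a regularizer on the deformation.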

Unsupervised Deformable Image Registration Using Cycle-Consistent CNN

Jul 02, 2019

Abstract: Medical image registration is one of the key processing steps for biomedical image analysis tasks such as cancer diagnosis. Recently, deep learning based supervised and unsupervised image registration methods have been extensively studied because of their excellent performance and ultra-fast computation compared to classical approaches. In this paper, we present a novel unsupervised medical image registration method that trains a deep neural network for deformable registration of 3D volumes using cycle consistency. Thanks to the cycle consistency, the proposed deep neural networks can take diverse pairs of image data with severe deformation and register them accurately. Experimental results using multiphase liver CT images demonstrate that our method provides very precise 3D image registration within a few seconds, resulting in more accurate cancer size estimation.

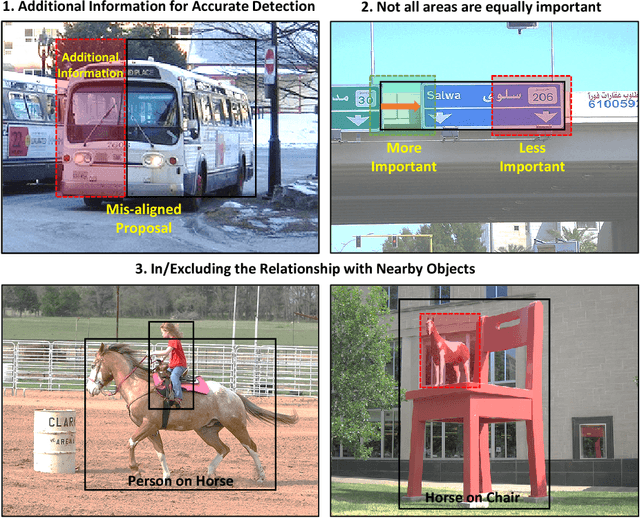

BAN: Focusing on Boundary Context for Object Detection

Nov 13, 2018

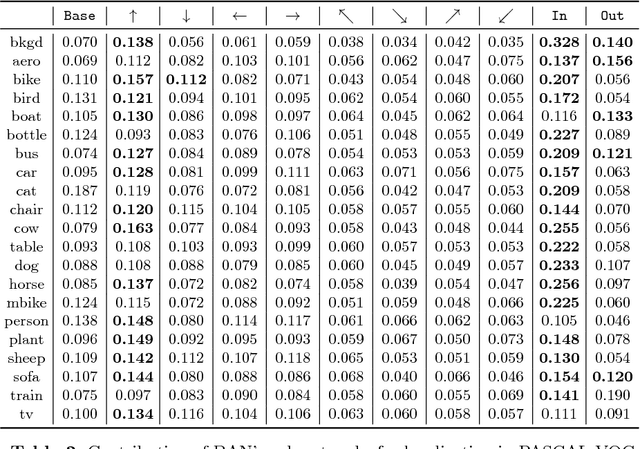

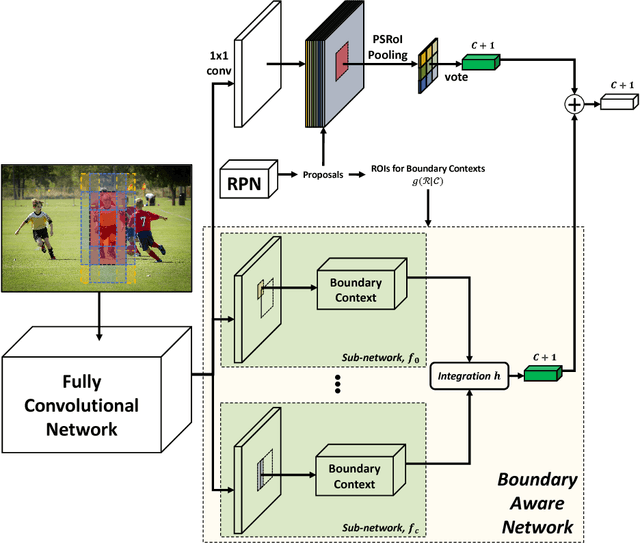

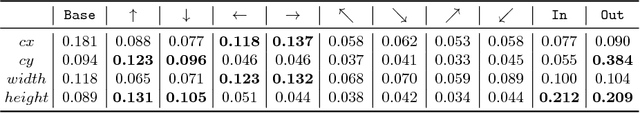

Abstract: Visual context is one of the important clues for object detection, and the context information around the boundaries of an object is especially valuable. We propose a boundary-aware network (BAN) designed to exploit visual context that includes boundary information and surroundings, which we call boundary context, and we define three types of boundary context: side, vertex, and in/out-boundary context. Our BAN consists of 10 sub-networks for the areas belonging to the boundary contexts. The detection head of BAN is defined as an ensemble of these sub-networks with different contributions depending on the sub-problem of detection. To verify our method, we visualize the activation of the sub-networks according to the boundary contexts and empirically show that the sub-networks contribute more to the related sub-problem in detection. We evaluate our method on the PASCAL VOC detection benchmark and the MS COCO dataset. The proposed method achieves a mean Average Precision (mAP) of 83.4% on PASCAL VOC and 36.9% on MS COCO. BAN allows the convolutional network to provide an additional source of context for detection and to selectively focus on the more important contexts, and it can be generally applied to many other detection methods to improve detection accuracy.
