Bong-Nam Kang

Detector With Focus: Normalizing Gradient In Image Pyramid

Sep 05, 2019

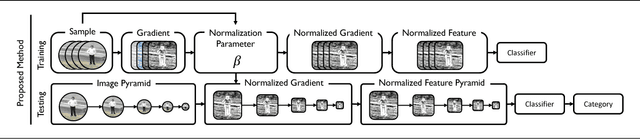

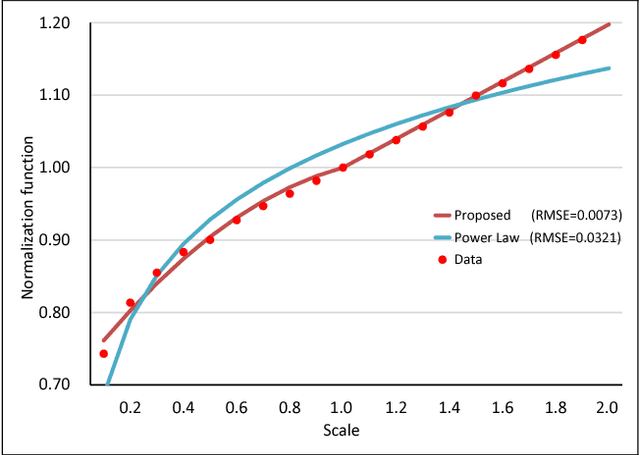

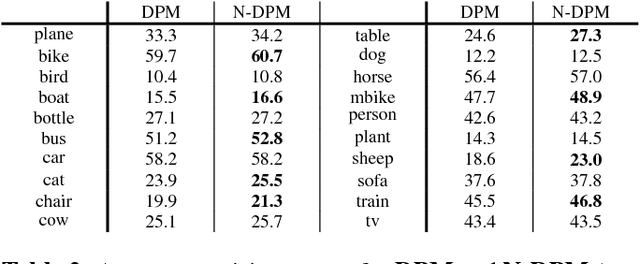

Abstract: An image pyramid can extend many object detection algorithms to handle detection at multiple scales. However, interpolation during the resampling process of an image pyramid causes gradient variation, which is the difference in gradients between the original image and the scaled images. Our key insight is that the increased variance of gradients makes it difficult for classifiers to assign categories correctly. We prove the existence of the gradient variation by formulating the ratio of gradient expectations between an original image and scaled images, then propose a simple and novel gradient normalization method to eliminate the effect of this variation. The proposed normalization method reduces the variance in an image pyramid and allows the classifier to focus on a smaller coverage. We show improvements on three different visual recognition problems: pedestrian detection, pose estimation, and object detection. The method is generally applicable to many vision algorithms based on an image pyramid with gradients.
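The gradient variation described in this abstract can be illustrated with a toy 1-D case. The sketch below is not the paper's formulation or its normalization method; it only assumes linear interpolation and finite-difference gradients, and the helper names (`resample_linear`, `mean_abs_gradient`) and the sample signal are hypothetical. It shows that upsampling by a factor of 2 halves the mean gradient magnitude, and that rescaling by the same factor (an illustrative assumption) restores it.

```python
def resample_linear(signal, factor):
    """Resample a 1-D signal by `factor` using linear interpolation."""
    n_out = int((len(signal) - 1) * factor) + 1
    out = []
    for i in range(n_out):
        x = i / factor                      # position in source coordinates
        lo = min(int(x), len(signal) - 2)   # left neighbour, clamped at the end
        t = x - lo                          # interpolation weight in [0, 1]
        out.append((1 - t) * signal[lo] + t * signal[lo + 1])
    return out


def mean_abs_gradient(signal):
    """Mean absolute finite difference between neighbouring samples."""
    diffs = [abs(b - a) for a, b in zip(signal, signal[1:])]
    return sum(diffs) / len(diffs)


factor = 2.0
original = [0.0, 4.0, 1.0, 7.0, 3.0, 8.0, 2.0, 6.0]
upsampled = resample_linear(original, factor)

# Ratio of mean gradient magnitudes between the scaled and original signals.
ratio = mean_abs_gradient(upsampled) / mean_abs_gradient(original)

# Illustrative rescaling only (an assumption, not the paper's method):
# multiplying by the scale factor brings the gradient statistic back in line.
normalized = mean_abs_gradient(upsampled) * factor
```

In this toy setting the ratio depends only on the scale factor, which is why a per-scale correction is enough here; real 2-D images and other interpolation kernels would need the ratio of expectations that the abstract refers to.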

Attentional Feature-Pair Relation Networks for Accurate Face Recognition

Aug 17, 2019

Abstract: Human face recognition is one of the most important research areas in biometrics. However, robust face recognition under drastic changes in facial pose, expression, and illumination remains a major challenge for practical applications. In this paper, we propose a novel face recognition method, called Attentional Feature-pair Relation Network (AFRN), which represents the face by the relevant pairs of local appearance block features with their attention scores. The AFRN represents the face by all possible pairs of the 9x9 local appearance block features. The importance of each pair is given by an attention map obtained from low-rank bilinear pooling, and each pair is weighted by its corresponding attention score. To increase accuracy, we select the top-K pairs of local appearance block features as relevant facial information and drop the remaining irrelevant pairs. The weighted top-K pairs are propagated to extract the joint feature-pair relation using a bilinear attention network. In experiments, we show the effectiveness of the proposed AFRN and achieve outstanding performance in the 1:1 face verification and 1:N face identification tasks compared to existing state-of-the-art methods on the challenging LFW, YTF, CALFW, CPLFW, CFP, AgeDB, IJB-A, IJB-B, and IJB-C datasets.
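The pair enumeration and top-K selection described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the AFRN implementation: the score is a plain dot product standing in for the attention map from low-rank bilinear pooling, pairs are treated as unordered, and the function names and toy feature vectors are hypothetical.

```python
import math
from itertools import combinations


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_weighted_pairs(blocks, k):
    """Return the k highest-scoring block pairs with their attention weights.

    The raw score is a dot product, a stand-in (assumption) for the
    attention map that the abstract derives from low-rank bilinear pooling.
    """
    pairs = list(combinations(range(len(blocks)), 2))
    raw = [sum(a * b for a, b in zip(blocks[i], blocks[j])) for i, j in pairs]
    attention = softmax(raw)
    ranked = sorted(zip(pairs, attention), key=lambda pa: pa[1], reverse=True)
    return ranked[:k]


# Four toy block features; a 9x9 grid would give 81 of them.
blocks = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
selected = top_k_weighted_pairs(blocks, k=2)

# Number of unordered pairs among 81 blocks (assuming unordered pairs).
num_pairs_9x9 = math.comb(81, 2)
```

With 81 blocks the number of unordered pairs is already in the thousands, which gives some intuition for why the abstract keeps only the top-K pairs rather than propagating all of them.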

Pairwise Relational Networks using Local Appearance Features for Face Recognition

Nov 15, 2018

Abstract: We propose a new face recognition method, called a pairwise relational network (PRN), which takes local appearance features around landmark points on the feature map and captures unique pairwise relations within the same identity and discriminative pairwise relations between different identities. The PRN aims to determine the facial part-relational structure from local appearance feature pairs. Because meaningful pairwise relations should be identity dependent, we add a face identity state feature, which is obtained from a long short-term memory (LSTM) network fed with the sequential local appearance features. To further improve accuracy, we combine the global appearance features with the pairwise relational feature. Experimental results on the LFW show that the PRN achieved 99.76% accuracy. On the YTF, the PRN achieved state-of-the-art accuracy (96.3%). The PRN also achieved results comparable to the state-of-the-art for both face verification and face identification tasks on the IJB-A and IJB-B. This work was published at ECCV 2018.

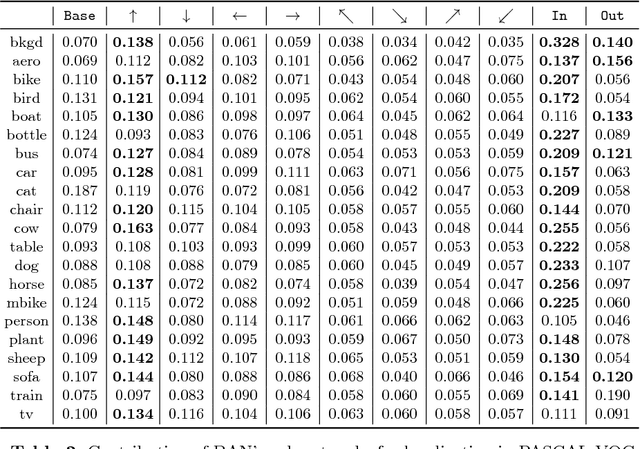

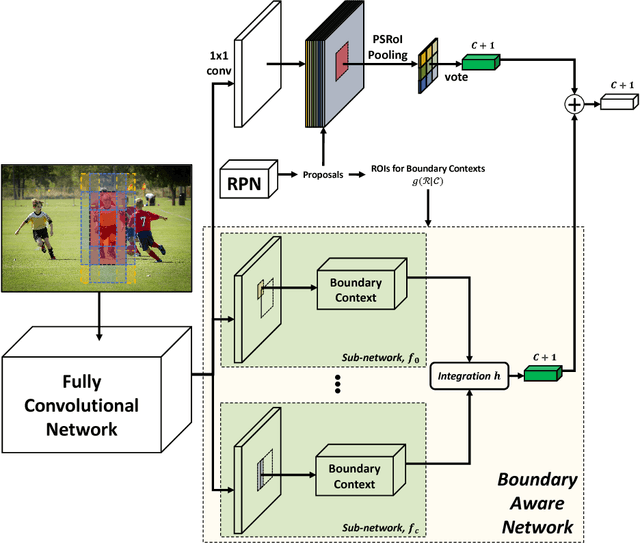

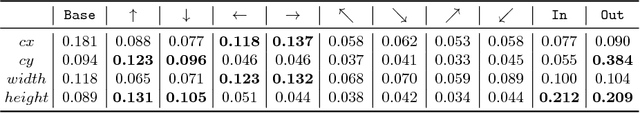

BAN: Focusing on Boundary Context for Object Detection

Nov 13, 2018

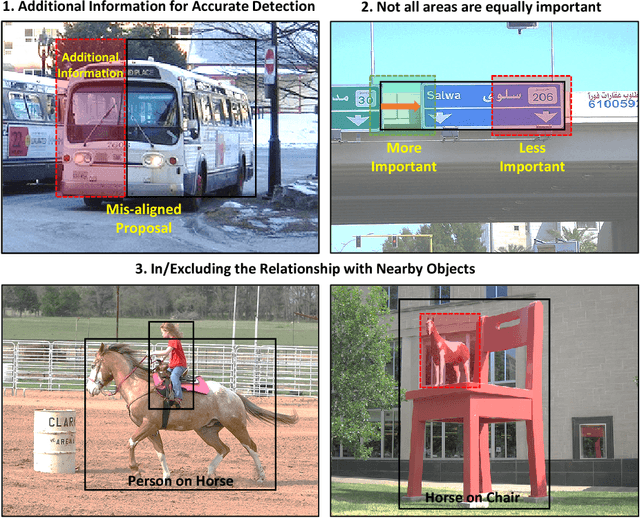

Abstract: Visual context is one of the important clues for object detection, and the context information around the boundaries of an object is especially valuable. We propose a boundary aware network (BAN) designed to exploit visual contexts that include boundary information and surroundings, which we call boundary context, and define three types of boundary context: side, vertex, and in/out-boundary context. Our BAN consists of 10 sub-networks for the areas belonging to the boundary contexts. The detection head of BAN is defined as an ensemble of these sub-networks with different contributions depending on the sub-problem of detection. To verify our method, we visualize the activation of the sub-networks according to the boundary contexts and empirically show that the sub-networks contribute more to the related sub-problem in detection. We evaluate our method on the PASCAL VOC detection benchmark and the MS COCO dataset. The proposed method achieves a mean Average Precision (mAP) of 83.4% on PASCAL VOC and 36.9% on MS COCO. BAN allows the convolutional network to provide an additional source of context for detection and to focus selectively on the more important contexts, and it can be applied to many other detection methods to improve detection accuracy.

Pairwise Relational Networks for Face Recognition

Aug 15, 2018

Abstract: With existing face recognition methods based on deep neural networks, it is difficult to know clearly what kind of features are used to discriminate the identities of face images. To investigate the effective features for face recognition, we propose a novel face recognition method, called a pairwise relational network (PRN), that obtains local appearance patches around landmark points on the feature map and captures the pairwise relation between a pair of local appearance patches. The PRN is trained to capture unique and discriminative pairwise relations among different identities. Because the existence and meaning of pairwise relations should be identity dependent, we add to the PRN a face identity state feature, which is obtained from a long short-term memory (LSTM) network fed with the sequential local appearance patches on the feature maps. To further improve the accuracy of face recognition, we combine the global appearance representation with the pairwise relational feature. Experimental results on the LFW show that the PRN using only pairwise relations achieved 99.65% accuracy and the PRN using both pairwise relations and the face identity state feature achieved 99.76% accuracy. On the YTF, both the PRN using only pairwise relations and the PRN using pairwise relations and the face identity state feature achieved state-of-the-art accuracy (95.7% and 96.3%, respectively). The PRN also achieved results comparable to the state-of-the-art for both face verification and face identification tasks on the IJB-A, and state-of-the-art results on the IJB-B.

SAN: Learning Relationship between Convolutional Features for Multi-Scale Object Detection

Aug 15, 2018

Abstract: Most recent successful methods for accurate object detection build on convolutional neural networks (CNNs). However, due to the lack of scale normalization in CNN-based detection methods, the activated channels in the feature space can be completely different depending on the scale, and this difference makes it hard for the classifier to learn from samples. We propose a Scale Aware Network (SAN) that maps the convolutional features from different scales onto a scale-invariant subspace to make CNN-based detection methods more robust to scale variation. We also construct a unique learning method that considers purely the relationship between channels, without the spatial information, for efficient learning of SAN. To show the validity of our method, we visualize how convolutional features change according to the scale through a channel activation matrix and experimentally show that SAN reduces the feature differences in the scale space. We evaluate our method on the PASCAL VOC and MS COCO datasets. We demonstrate SAN by conducting several experiments on structures and parameters. The proposed SAN can be applied to many CNN-based detection methods to improve detection accuracy with a slight increase in computing time.
