Jiayu Han

Probing for Understanding of English Verb Classes and Alternations in Large Pre-trained Language Models

Sep 11, 2022

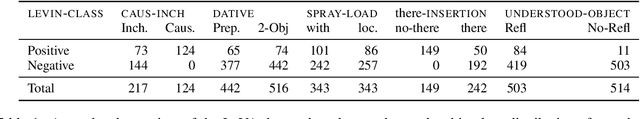

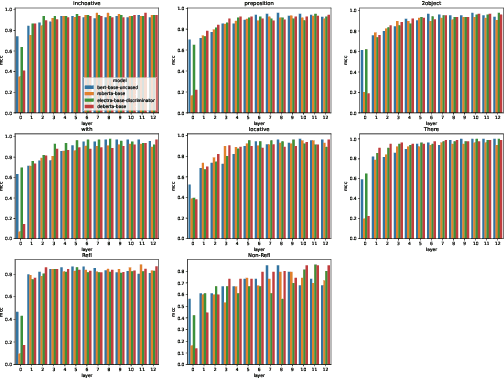

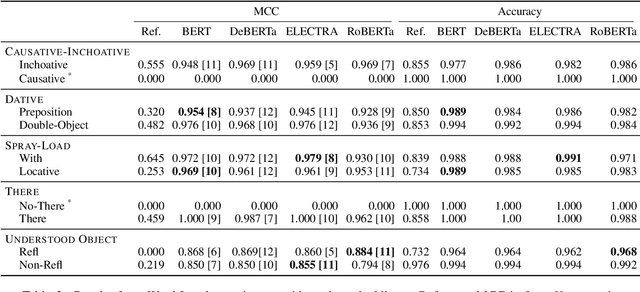

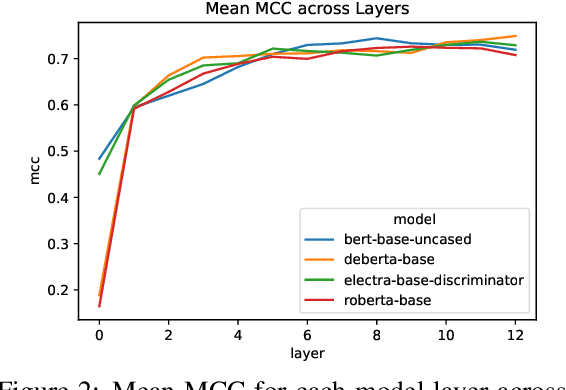

Abstract: We investigate the extent to which verb alternation classes, as described by Levin (1993), are encoded in the embeddings of Large Pre-trained Language Models (PLMs) such as BERT, RoBERTa, ELECTRA, and DeBERTa using selectively constructed diagnostic classifiers for word and sentence-level prediction tasks. We follow and expand upon the experiments of Kann et al. (2019), which aim to probe whether static embeddings encode frame-selectional properties of verbs. At both the word and sentence level, we find that contextual embeddings from PLMs not only outperform non-contextual embeddings, but achieve astonishingly high accuracies on tasks across most alternation classes. Additionally, we find evidence that the middle-to-upper layers of PLMs achieve better performance on average than the lower layers across all probing tasks.

Event-driven Two-stage Solution to Non-intrusive Load Monitoring

Jul 27, 2021

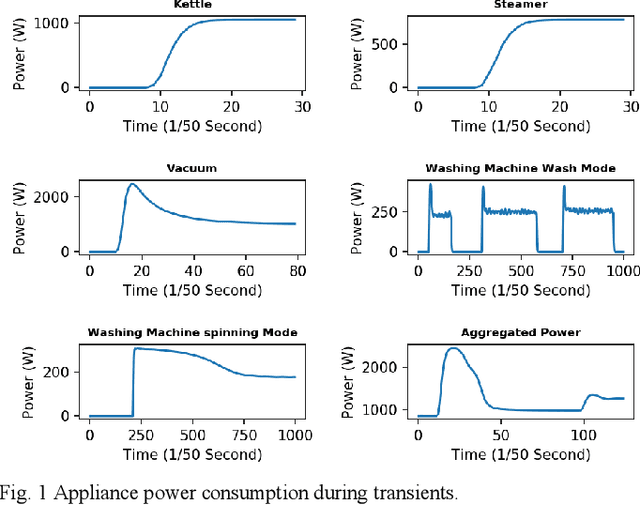

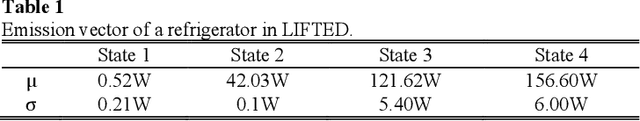

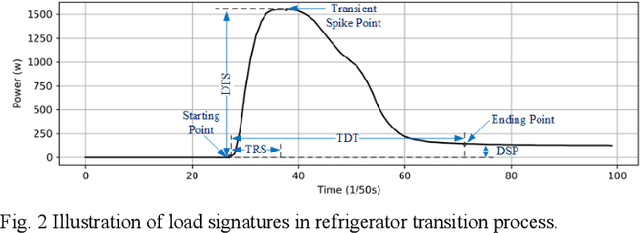

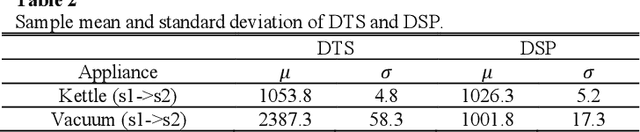

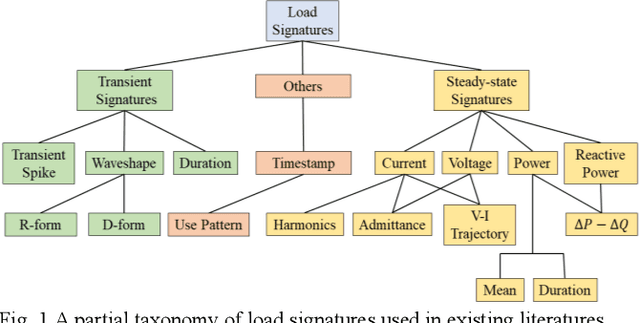

Abstract: Existing methods of non-intrusive load monitoring (NILM) in the literature generally suffer from high computational complexity and/or low accuracy in identifying working household appliances. This paper proposes an event-driven Factorial Hidden Markov model (eFHMM) for multiple appliances with multiple states in a household, aiming for low computational complexity and high load disaggregation accuracy. The proposed eFHMM reduces the computational complexity to be linear in the number of events, which makes online load disaggregation possible. Furthermore, the eFHMM is solved in two stages: the first stage identifies the state-changing appliance using transient signatures, and the second stage confirms the inferred states using steady-state signatures. The combination of transient and steady-state signatures, which are extracted from transient and steady periods segmented by detected events, enhances the uniqueness of each state transition and its associated appliance, which ensures accurate load disaggregation. The event-driven two-stage NILM solution, termed eFHMM-TS, fits naturally into an edge-cloud framework, which makes real-world application of NILM possible. The proposed eFHMM-TS method is validated on the LIFTED and synD datasets. Results demonstrate that the eFHMM-TS method outperforms other methods and can be applied in practice.

Adaptive Event Detection for Representative Load Signature Extraction

Jul 23, 2021

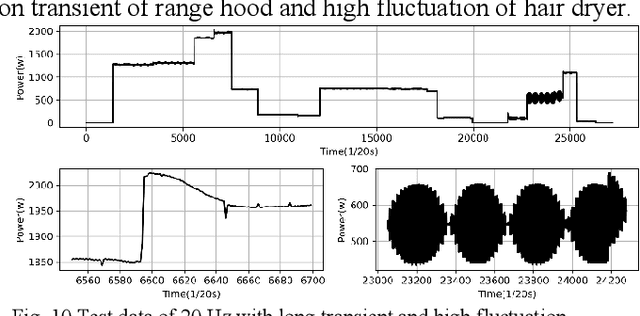

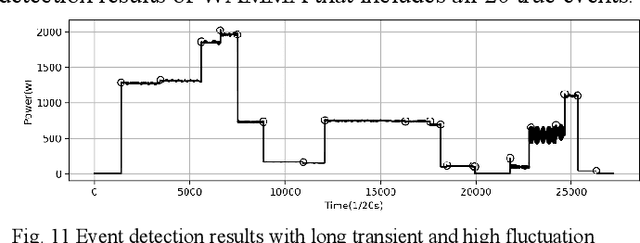

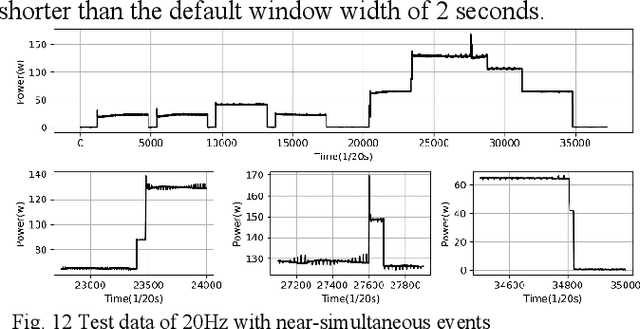

Abstract: Event detection is the first step in event-based non-intrusive load monitoring (NILM), and it can provide useful transient information to identify appliances. However, existing event detection methods with fixed parameters may fail in the face of unpredictable and complicated residential load changes such as high fluctuation, long transition, and near simultaneity. This paper proposes a dynamic time-window approach to deal with these highly complex load variations. Specifically, a window with adaptive margins, multi-timescale window screening, and adaptive threshold (WAMMA) method is proposed to detect events in aggregated home appliance load data sampled at high rates (>1 Hz). The proposed method accurately captures the transient process by adaptively tuning parameters including window width, margin width, and change threshold. Furthermore, representative transient and steady-state load signatures are extracted and, for the first time, quantified from transient and steady periods segmented by detected events. Case studies on a 20 Hz dataset, the 50 Hz LIFTED dataset, and the 60 Hz BLUED dataset show that the proposed method can robustly outperform other state-of-the-art event detection methods. This paper also shows that the extracted load signatures can improve NILM accuracy and help develop other applications such as load reconstruction to generate realistic load data for NILM research.
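
To make the idea of threshold-based event detection concrete, here is a minimal sketch in Python. It is not the WAMMA method from the abstract: it uses a fixed window width and adapts only the change threshold to local noise. The function name `detect_events` and the parameters `window`, `k`, and `min_threshold` are hypothetical and chosen purely for illustration.

```python
from statistics import mean, pstdev


def detect_events(power, window=5, k=3.0, min_threshold=20.0):
    """Return sample indices where the aggregate power level changes.

    Illustrative assumption, not the published algorithm: the window width
    is fixed, and only the threshold adapts (k times the standard deviation
    of the preceding window, with a floor of min_threshold).
    """
    events = []
    i = window
    while i + window <= len(power):
        before = power[i - window:i]
        after = power[i:i + window]
        # Noisier segments require a larger level change to count as an event.
        threshold = max(min_threshold, k * pstdev(before))
        if abs(mean(after) - mean(before)) > threshold:
            # Place the event at the largest sample-to-sample step
            # inside the trailing window.
            edge = max(range(i, i + window),
                       key=lambda j: abs(power[j] - power[j - 1]))
            events.append(edge)
            i = edge + window  # skip past this transition
        else:
            i += 1
    return events


# Synthetic trace: an appliance switches on at sample 20 and off at sample 40.
trace = [100.0] * 20 + [600.0] * 20 + [100.0] * 20
print(detect_events(trace))
```

A fixed window like this one is exactly what the abstract identifies as fragile under long transitions or near-simultaneous events, which is the motivation for adapting window and margin widths as well as the threshold.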

Distantly Supervised Relation Extraction via Recursive Hierarchy-Interactive Attention and Entity-Order Perception

May 18, 2021

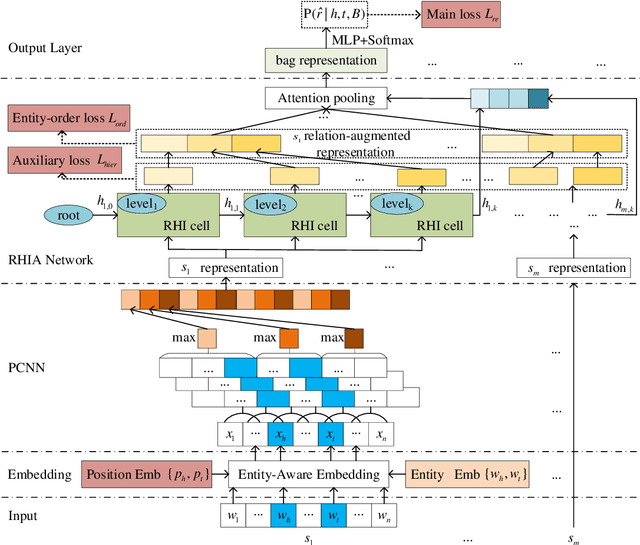

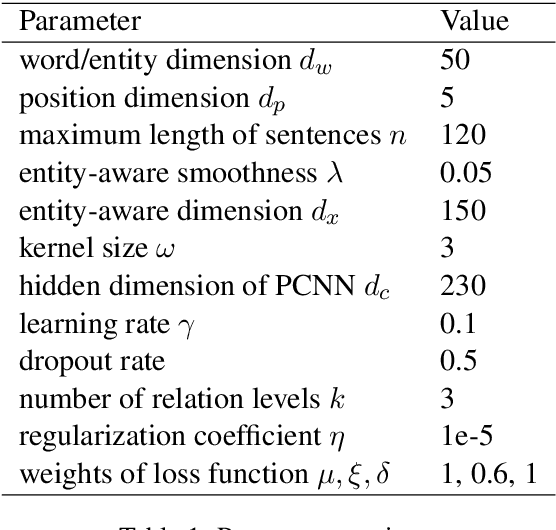

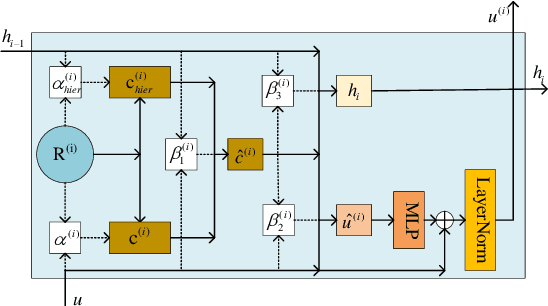

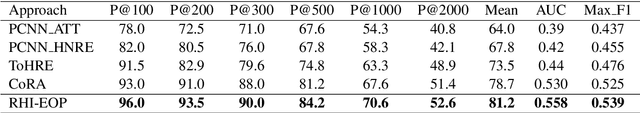

Abstract: Distantly supervised relation extraction has drawn significant attention recently. However, almost all prior works ignore the fact that, in a sentence, the order in which the two entities appear contributes to the understanding of its semantics. Furthermore, they leverage relation hierarchies but do not fully exploit the heuristic effect between relation levels, i.e., higher-level relations can give useful information to the lower ones. In this paper, we design a novel Recursive Hierarchy-Interactive Attention network (RHIA), which uses the hierarchical structure of the relation to model the interactive information between the relation levels to further handle long-tail relations. It generates relation-augmented sentence representations along hierarchical relation chains in a recursive structure. In addition, we introduce a new training objective, called Entity-Order Perception (EOP), to make the sentence encoder retain more entity appearance information. Extensive experiments are conducted on the popular New York Times (NYT) dataset. Compared to prior baselines, our approach achieves state-of-the-art performance in terms of precision-recall (P-R) curves, AUC, Top-N precision and other evaluation metrics.

JSCN: Joint Spectral Convolutional Network for Cross Domain Recommendation

Oct 18, 2019

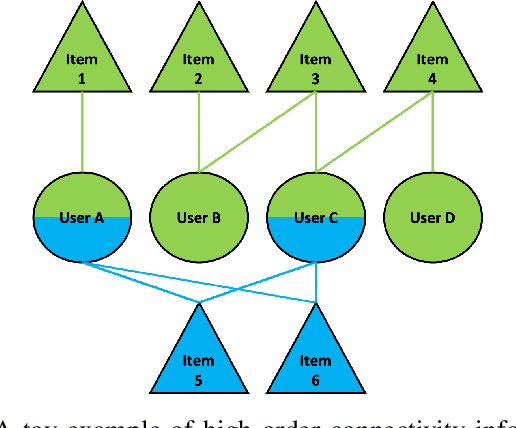

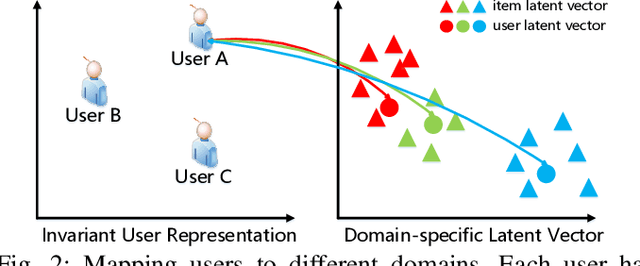

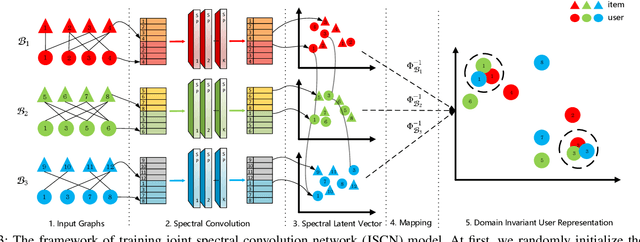

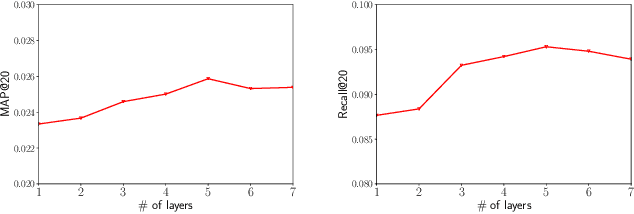

Abstract: Cross-domain recommendation can alleviate the data sparsity problem in recommender systems. To transfer knowledge from one domain to another, one can either utilize the neighborhood information or learn a direct mapping function. However, all existing methods ignore the high-order connectivity information in cross-domain recommendation and suffer from the domain-incompatibility problem. In this paper, we propose a Joint Spectral Convolutional Network (JSCN) for cross-domain recommendation. JSCN simultaneously operates multi-layer spectral convolutions on different graphs, and jointly learns a domain-invariant user representation with a domain adaptive user mapping module. As a result, the high-order comprehensive connectivity information can be extracted by the spectral convolutions, and the information can be transferred across domains with the domain-invariant user mapping. The domain adaptive user mapping module helps incompatible domains transfer knowledge between each other. Extensive experiments on 24 Amazon rating datasets show the effectiveness of JSCN in cross-domain recommendation, with a 9.2% improvement in recall and a 36.4% improvement in MAP compared with state-of-the-art methods. Our code is available online at https://github.com/JimLiu96/JSCN.
