Distantly Supervised Relation Extraction via Recursive Hierarchy-Interactive Attention and Entity-Order Perception


May 18, 2021

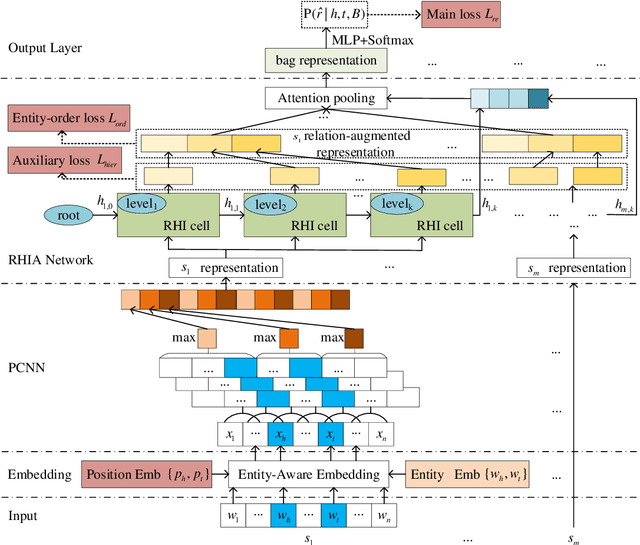

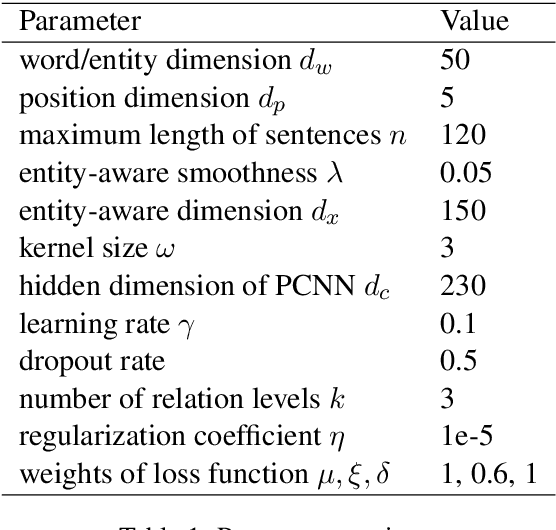

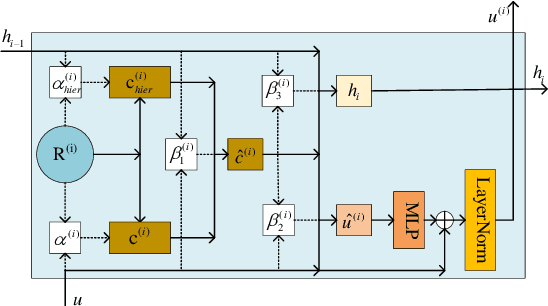

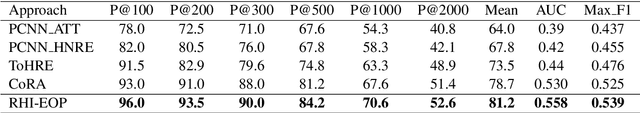

Distantly supervised relation extraction has drawn significant attention recently. However, almost all prior works ignore the fact that the order in which two entities appear in a sentence contributes to the understanding of its semantics. Furthermore, they leverage relation hierarchies but do not fully exploit the heuristic effect between relation levels, i.e., higher-level relations can provide useful information to lower-level ones. In this paper, we design a novel Recursive Hierarchy-Interactive Attention network (RHIA), which uses the hierarchical structure of relations to model the interactive information between relation levels and thereby better handle long-tail relations. It generates relation-augmented sentence representations along hierarchical relation chains in a recursive structure. In addition, we introduce a new training objective, called Entity-Order Perception (EOP), that makes the sentence encoder retain more entity appearance information. Extensive experiments are conducted on the popular New York Times (NYT) dataset. Compared to prior baselines, our approach achieves state-of-the-art performance in terms of precision-recall (P-R) curves, AUC, Top-N precision, and other evaluation metrics.
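The abstract does not say how the EOP objective is formulated, so the following is only a minimal sketch of the kind of supervision signal that entity appearance order could provide. The function name `entity_order_label`, the 0/1 label encoding, and the single-token entity mentions are all assumptions made for illustration; they are not taken from the paper.

```python
def entity_order_label(tokens, head, tail):
    """Return 0 if the head entity appears before the tail entity, else 1.

    Hypothetical encoding: the label values and the single-token entity
    mentions are assumptions for this sketch, not the paper's definition.
    """
    head_pos = tokens.index(head)  # first occurrence of the head mention
    tail_pos = tokens.index(tail)  # first occurrence of the tail mention
    return 0 if head_pos < tail_pos else 1


sentence = "Obama was born in Hawaii".split()

# Same sentence, two different (head, tail) assignments.
label_forward = entity_order_label(sentence, "Obama", "Hawaii")
label_reverse = entity_order_label(sentence, "Hawaii", "Obama")
```

A label of this kind could serve as the target of an auxiliary classifier on top of the sentence encoder, which is one plausible way to encourage the encoder to keep order information; whether the paper does this is not stated in the abstract.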
