Jianxin Zhao

Systematic Literature Review on Vehicular Collaborative Perception -- A Computer Vision Perspective

Apr 06, 2025

Abstract:The effectiveness of autonomous vehicles relies on reliable perception capabilities. Despite significant advancements in artificial intelligence and sensor fusion technologies, current single-vehicle perception systems continue to encounter limitations, notably visual occlusions and limited long-range detection capabilities. Collaborative Perception (CP), enabled by Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) communication, has emerged as a promising solution to mitigate these issues and enhance the reliability of autonomous systems. Beyond advancements in communication, the computer vision community is increasingly focusing on improving vehicular perception through collaborative approaches. However, a systematic literature review that thoroughly examines existing work and reduces subjective bias is still lacking. Such a systematic approach helps identify research gaps, recognize common trends across studies, and inform future research directions. In response, this study follows the PRISMA 2020 guidelines and includes 106 peer-reviewed articles. These publications are analyzed based on modalities, collaboration schemes, and key perception tasks. Through a comparative analysis, this review illustrates how different methods address practical issues such as pose errors, temporal latency, communication constraints, domain shifts, heterogeneity, and adversarial attacks. Furthermore, it critically examines evaluation methodologies, highlighting a misalignment between current metrics and CP's fundamental objectives. By examining all relevant topics in depth, this review offers valuable insights into challenges, opportunities, and risks, serving as a reference for advancing research in vehicular collaborative perception.

Gradient Coordination for Quantifying and Maximizing Knowledge Transference in Multi-Task Learning

Mar 10, 2023

Abstract:Multi-task learning (MTL) has been widely applied in online advertising and recommender systems. To address the negative transfer issue, recent studies have proposed optimization methods that focus on aligning gradient directions or magnitudes. However, since prior work has proven that both general and specific knowledge exist in the limited shared capacity, overemphasizing gradient alignment may crowd out task-specific knowledge, and vice versa. In this paper, we propose a transference-driven approach, CoGrad, that adaptively maximizes knowledge transference via Coordinated Gradient modification. We explicitly quantify the transference as loss reduction from one task to another, and then derive an auxiliary gradient from optimizing it. We perform the optimization by incorporating this gradient into the original task gradients, making the model automatically maximize inter-task transfer and minimize individual losses. Thus, CoGrad can harmonize general and specific knowledge to boost overall performance. In addition, we introduce an efficient approximation of the Hessian matrix, making CoGrad computationally efficient and simple to implement. Both offline and online experiments verify that CoGrad significantly outperforms previous methods.
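The abstract describes transference as the loss reduction one task causes on another. A minimal sketch of that quantity is given below, assuming a one-step lookahead definition: the drop in task j's loss after a single gradient step on task i. The quadratic toy tasks, the learning rate, and the names `transference` and `make_task` are illustrative assumptions, not taken from the paper. The sketch does not implement CoGrad's auxiliary gradient or its Hessian approximation.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def transference(
    loss_j: Callable[[Vector], float],
    grad_i: Callable[[Vector], Vector],
    theta: Vector,
    lr: float,
) -> float:
    """Loss reduction on task j after one gradient step on task i.

    Assumed definition (hypothetical, for illustration only):
        T(i -> j) = L_j(theta) - L_j(theta - lr * grad L_i(theta))
    Positive values mean task i's update helps task j.
    """
    g = grad_i(theta)
    lookahead = [t - lr * gi for t, gi in zip(theta, g)]
    return loss_j(theta) - loss_j(lookahead)


def make_task(target: Vector) -> Tuple[Callable[[Vector], float], Callable[[Vector], Vector]]:
    """Toy quadratic task: L(theta) = 0.5 * ||theta - target||^2."""

    def loss(theta: Vector) -> float:
        return 0.5 * sum((t - c) ** 2 for t, c in zip(theta, target))

    def grad(theta: Vector) -> Vector:
        return [t - c for t, c in zip(theta, target)]

    return loss, grad


# Three toy tasks sharing the parameters theta.
loss_a, grad_a = make_task([1.0, 0.0])
loss_b, grad_b = make_task([1.0, 1.0])   # partly aligned with task a
loss_c, grad_c = make_task([-1.0, 0.0])  # conflicts with task a

theta = [0.0, 0.0]
t_ab = transference(loss_b, grad_a, theta, lr=0.1)  # a's step lowers b's loss
t_ac = transference(loss_c, grad_a, theta, lr=0.1)  # a's step raises c's loss
print(f"a -> b: {t_ab:+.3f}, a -> c: {t_ac:+.3f}")
```

In this toy setting, a positive value corresponds to positive transfer and a negative value to negative transfer. A method in the spirit of the abstract would differentiate such a quantity with respect to the shared parameters, which is where second-order (Hessian) terms would arise.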

User-centric Composable Services: A New Generation of Personal Data Analytics

Nov 26, 2017

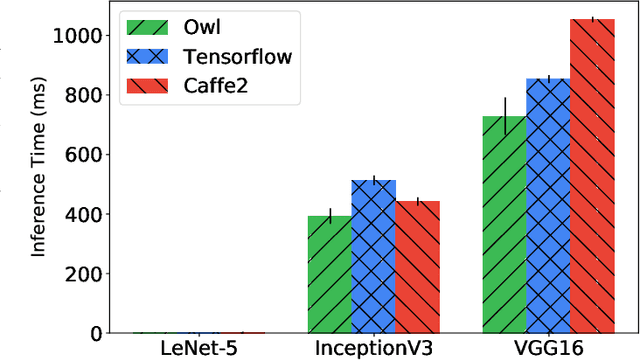

Abstract:Machine Learning (ML) techniques, such as neural networks, are widely used in today's applications. However, there is still a big gap between current ML systems and users' requirements. ML systems focus on improving the performance of models in training, while individual users care more about the response time and expressiveness of the tool. Much existing research and many products have begun to move computation towards edge devices. Based on the numerical computing system Owl, we propose to build the Zoo system to support the construction, composition, and deployment of ML models on edge and local devices.