Jiahao Qin

DCER: Dual-Stage Compression and Energy-Based Reconstruction

Feb 03, 2026

Abstract:Multimodal fusion faces two robustness challenges: noisy inputs degrade representation quality, and missing modalities cause prediction failures. We propose DCER, a unified framework addressing both challenges through dual-stage compression and energy-based reconstruction. The compression stage operates at two levels: within-modality frequency transforms (wavelet for audio, DCT for video) remove noise while preserving task-relevant patterns, and cross-modality bottleneck tokens force genuine integration rather than modality-specific shortcuts. For missing modalities, energy-based reconstruction recovers representations via gradient descent on a learned energy function, with the final energy providing intrinsic uncertainty quantification (ρ > 0.72 correlation with prediction error). Experiments on CMU-MOSI, CMU-MOSEI, and CH-SIMS demonstrate state-of-the-art performance across all benchmarks, with a U-shaped robustness pattern favoring multimodal fusion at both complete and high-missing conditions. The code will be available on GitHub.
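The idea of recovering a missing representation by gradient descent on an energy function, and reading the final energy as an uncertainty signal, can be illustrated with a toy sketch. This is not the DCER implementation: the quadratic energy, the "anchors" (hypothetical predictions of the missing representation made from each observed modality), and all function names and hyperparameters are assumptions chosen only to make the mechanism concrete.

```python
def energy(z, anchors, weights):
    """Toy quadratic energy (an assumption, not DCER's learned energy):
    weighted squared distance from candidate z to each anchor."""
    return sum(
        0.5 * w * sum((zi - ai) ** 2 for zi, ai in zip(z, a))
        for a, w in zip(anchors, weights)
    )


def energy_grad(z, anchors, weights):
    """Analytic gradient of the toy energy with respect to z."""
    return [
        sum(w * (z[i] - a[i]) for a, w in zip(anchors, weights))
        for i in range(len(z))
    ]


def reconstruct(anchors, weights, steps=200, lr=0.1):
    """Recover a missing representation by plain gradient descent.

    Returns the reconstruction and the final energy; the final energy
    stays high when the anchors disagree, so it can serve as a crude
    uncertainty score.
    """
    z = [0.0] * len(anchors[0])  # start from a zero vector
    for _ in range(steps):
        g = energy_grad(z, anchors, weights)
        z = [zi - lr * gi for zi, gi in zip(z, g)]
    return z, energy(z, anchors, weights)


# Anchors that agree give a near-zero final energy.
z_agree, e_agree = reconstruct([[1.0, 2.0], [1.0, 2.0]], [1.0, 1.0])
# Anchors that disagree leave residual energy at the minimum.
z_clash, e_clash = reconstruct([[0.0, 0.0], [2.0, 2.0]], [1.0, 1.0])
```

In this toy setting the minimizer is the weighted mean of the anchors, and the residual energy grows with their disagreement, which is the sense in which a final energy value can track reconstruction uncertainty.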

Bio-Inspired Mamba: Temporal Locality and Bioplausible Learning in Selective State Space Models

Sep 17, 2024

Abstract:This paper introduces Bio-Inspired Mamba (BIM), a novel online learning framework for selective state space models that integrates biological learning principles with the Mamba architecture. BIM combines Real-Time Recurrent Learning (RTRL) with Spike-Timing-Dependent Plasticity (STDP)-like local learning rules, addressing the challenges of temporal locality and biological plausibility in training spiking neural networks. Our approach leverages the inherent connection between backpropagation through time and STDP, offering a computationally efficient alternative that maintains the ability to capture long-range dependencies. We evaluate BIM on language modeling, speech recognition, and biomedical signal analysis tasks, demonstrating competitive performance against traditional methods while adhering to biological learning principles. Results show improved energy efficiency and potential for neuromorphic hardware implementation. BIM not only advances the field of biologically plausible machine learning but also provides insights into the mechanisms of temporal information processing in biological neural networks.
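For readers unfamiliar with STDP, the standard pair-based rule can be sketched as follows. This is the textbook form of the rule, not BIM's specific "STDP-like" update, which the abstract does not detail; the function name, amplitudes, and time constants are illustrative assumptions.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    dt = t_post - t_pre (e.g. in milliseconds). All default parameter
    values are illustrative assumptions.
    """
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


# A causal pairing strengthens the synapse, an anti-causal one weakens it.
dw_causal = stdp_delta(10.0)
dw_anticausal = stdp_delta(-10.0)
```

The update depends only on the timing of the two spikes at a synapse, which is what makes rules of this kind local in both space and time.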

Mamba-Spike: Enhancing the Mamba Architecture with a Spiking Front-End for Efficient Temporal Data Processing

Aug 04, 2024

Abstract:The field of neuromorphic computing has gained significant attention in recent years, aiming to bridge the gap between the efficiency of biological neural networks and the performance of artificial intelligence systems. This paper introduces Mamba-Spike, a novel neuromorphic architecture that integrates a spiking front-end with the Mamba backbone to achieve efficient and robust temporal data processing. The proposed approach leverages the event-driven nature of spiking neural networks (SNNs) to capture and process asynchronous, time-varying inputs, while harnessing the power of the Mamba backbone's selective state spaces and linear-time sequence modeling capabilities to model complex temporal dependencies effectively. The spiking front-end of Mamba-Spike employs biologically inspired neuron models, along with adaptive threshold and synaptic dynamics. These components enable efficient spatiotemporal feature extraction and encoding of the input data. The Mamba backbone, on the other hand, utilizes a hierarchical structure with gated recurrent units and attention mechanisms to capture long-term dependencies and selectively process relevant information. To evaluate the efficacy of the proposed architecture, a comprehensive empirical study is conducted on both neuromorphic datasets, including DVS Gesture and TIDIGITS, and standard datasets, such as Sequential MNIST and CIFAR10-DVS. The results demonstrate that Mamba-Spike consistently outperforms state-of-the-art baselines, achieving higher accuracy, lower latency, and improved energy efficiency. Moreover, the model exhibits robustness to various input perturbations and noise levels, highlighting its potential for real-world applications. The code will be available at https://github.com/ECNU-Cross-Innovation-Lab/Mamba-Spike.
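A spiking neuron with an adaptive threshold, of the general kind the abstract mentions for the front-end, might look like the following minimal sketch. It is a generic leaky integrate-and-fire model, not the Mamba-Spike code; the function name and every parameter value are assumptions for illustration.

```python
def lif_adaptive(inputs, leak=0.9, base_threshold=1.0,
                 threshold_jump=0.5, threshold_decay=0.8):
    """Leaky integrate-and-fire neuron with an adaptive threshold.

    Each spike raises the threshold by threshold_jump; the extra
    threshold then decays geometrically. Returns a 0/1 spike train.
    All parameters are illustrative assumptions.
    """
    v = 0.0      # membrane potential
    adapt = 0.0  # adaptive component of the threshold
    spikes = []
    for x in inputs:
        v = leak * v + x  # leaky integration of the input current
        if v >= base_threshold + adapt:
            spikes.append(1)
            v = 0.0                  # reset after a spike
            adapt += threshold_jump  # make the next spike harder
        else:
            spikes.append(0)
        adapt *= threshold_decay     # threshold relaxes toward baseline
    return spikes


# Constant drive: adaptation lowers the firing rate relative to a fixed threshold.
adaptive_train = lif_adaptive([0.6] * 6)
fixed_train = lif_adaptive([0.6] * 6, threshold_jump=0.0)
```

Under the same constant input, the adaptive neuron fires less often than the fixed-threshold one, which is the usual motivation for threshold adaptation: sustained input produces a sparser, more energy-frugal spike train.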

Zoom and Shift are All You Need

Jun 13, 2024

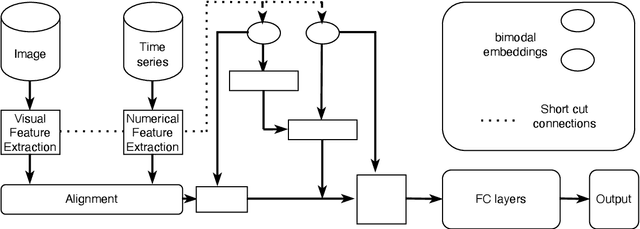

Abstract:Feature alignment serves as the primary mechanism for fusing multimodal data. We put forth a feature alignment approach that achieves full integration of multimodal information. This is accomplished via an alternating process of shifting and expanding feature representations across modalities to obtain a consistent unified representation in a joint feature space. The proposed technique can reliably capture high-level interplay between features originating from distinct modalities. Consequently, substantial gains in multimodal learning performance are attained. Additionally, we demonstrate the superiority of our approach over other prevalent multimodal fusion schemes on a range of tasks. Extensive experimental evaluation conducted on multimodal datasets comprising time series, image, and text demonstrates that our method achieves state-of-the-art results.

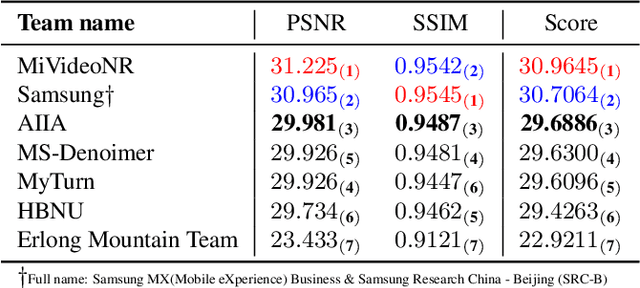

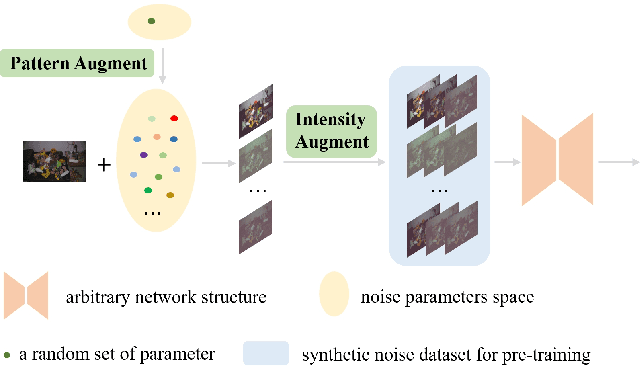

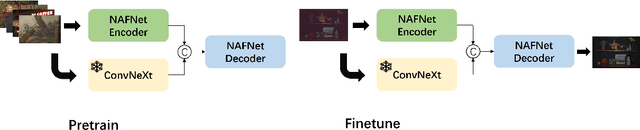

MIPI 2024 Challenge on Few-shot RAW Image Denoising: Methods and Results

Jun 11, 2024

Abstract:The increasing demand for computational photography and imaging on mobile platforms has led to the widespread development and integration of advanced image sensors with novel algorithms in camera systems. However, the scarcity of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). Building on the achievements of the previous MIPI Workshops held at ECCV 2022 and CVPR 2023, we introduce our third MIPI challenge including three tracks focusing on novel image sensors and imaging algorithms. In this paper, we summarize and review the Few-shot RAW Image Denoising track on MIPI 2024. In total, 165 participants were successfully registered, and 7 teams submitted results in the final testing phase. The developed solutions in this challenge achieved state-of-the-art performance on Few-shot RAW Image Denoising. More details of this challenge and the link to the dataset can be found at https://mipichallenge.org/MIPI2024.

Alternative Telescopic Displacement: An Efficient Multimodal Alignment Method

Jun 29, 2023

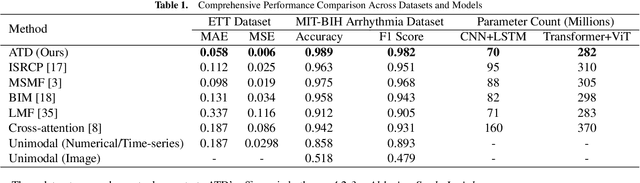

Abstract:Feature alignment is the primary means of fusing multimodal data. We propose a feature alignment method that fully fuses multimodal information, which alternately shifts and expands feature information from different modalities to have a consistent representation in a feature space. The proposed method can robustly capture high-level interactions between features of different modalities, thus significantly improving the performance of multimodal learning. We also show that the proposed method outperforms other popular multimodal schemes on multiple tasks. Experimental evaluation on the ETT and MIT-BIH-Arrhythmia datasets shows that the proposed method achieves state-of-the-art performance.
