Jeremy Charlier

XtracTree for Regulator Validation of Bagging Methods Used in Retail Banking

Apr 08, 2020

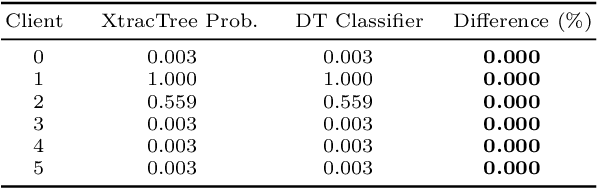

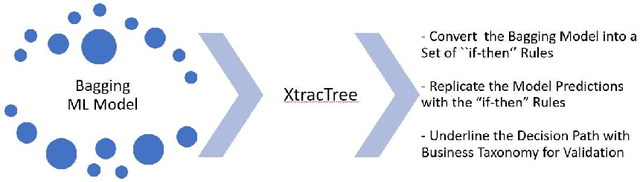

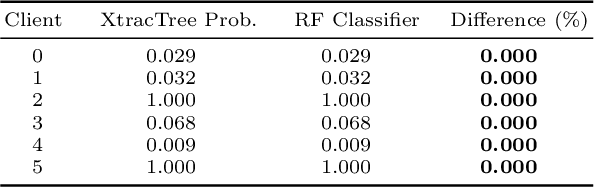

Abstract: Bootstrap aggregation, known as bagging, is one of the most popular ensemble methods used in machine learning (ML). An ensemble method is a supervised ML method that combines multiple hypotheses to form a single hypothesis used for prediction. A bagging algorithm combines multiple classifiers modelled on different sub-samples of the same data set to build one large classifier. Large retail banks now use ML algorithms, including decision trees and random forests, to optimize their retail banking activities. However, AI bank researchers face a strong challenge from their own model validation department as well as from national financial regulators. Each proposed ML model has to be validated, and clear rules for every algorithm-based decision have to be established. In this context, we propose XtracTree, an algorithm capable of effectively converting an ML bagging classifier, such as a decision tree or a random forest, into simple "if-then" rules satisfying the requirements of model validation. Our algorithm is also capable of highlighting the decision path for each individual sample or group of samples, addressing any concern from the regulators regarding ML "black-box" models. We use a public loan data set from Kaggle to illustrate the usefulness of our approach. Our experiments indicate that, using XtracTree, we are able to ensure a better understanding of our model, leading to an easier model validation by national financial regulators and the internal model validation department.
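The idea of turning a tree into "if-then" rules and tracing a sample's decision path can be sketched in a few lines. This is a minimal illustration only, not the XtracTree implementation: the toy tree, its feature names (`income`, `debt_ratio`), thresholds, and labels are all hypothetical, and the nested-dictionary layout is an assumption made for readability.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical toy tree (NOT the XtracTree data structure): internal nodes
# test `feature <= threshold`; leaves carry a class label.
TREE: Dict[str, Any] = {
    "feature": "income", "threshold": 30000.0,
    "left": {"label": "reject"},
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"label": "approve"},
        "right": {"label": "reject"},
    },
}


def extract_rules(node: Dict[str, Any], conditions: Tuple[str, ...] = ()) -> List[str]:
    """Return one 'if ... then ...' rule per leaf, via depth-first traversal."""
    if "label" in node:
        premise = " and ".join(conditions) if conditions else "True"
        return [f"if {premise} then {node['label']}"]
    f, t = node["feature"], node["threshold"]
    left = extract_rules(node["left"], conditions + (f"{f} <= {t}",))
    right = extract_rules(node["right"], conditions + (f"{f} > {t}",))
    return left + right


def decision_path(node: Dict[str, Any], sample: Dict[str, float]) -> Tuple[List[str], str]:
    """Return the conditions satisfied by `sample` and the predicted label."""
    path: List[str] = []
    while "label" not in node:
        f, t = node["feature"], node["threshold"]
        if sample[f] <= t:
            path.append(f"{f} <= {t}")
            node = node["left"]
        else:
            path.append(f"{f} > {t}")
            node = node["right"]
    return path, node["label"]


RULES = extract_rules(TREE)
for rule in RULES:
    print(rule)
```

For a forest, the same traversal would be applied to each tree in turn, with the individual predictions combined by a vote; how XtracTree itself aggregates rules is not specified in the abstract.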

From Persistent Homology to Reinforcement Learning with Applications for Retail Banking

Nov 23, 2019

Abstract: Retail banking services are one of the pillars of modern economic growth. However, the evolution of clients' habits in modern societies and the recent European regulations promoting more competition mean that retail banks will encounter serious challenges over the next few years, endangering their activities. They now face an impossible compromise: maximizing the satisfaction of their hyper-connected clients while avoiding any risk of default and remaining compliant with regulations. Advanced and novel research concepts are therefore a serious game-changer for gaining a competitive advantage. In this context, we investigate in this thesis different concepts bridging the gap between persistent homology, neural networks, recommender engines and reinforcement learning with the aim of improving the quality of retail banking services. Our contribution is threefold. First, we highlight how to overcome insufficient financial data by generating artificial data using generative models and persistent homology. Then, we present how to perform accurate financial recommendations in multiple dimensions. Finally, we underline a model-free reinforcement learning approach to determine the optimal policy of money management based on the aggregated financial transactions of the clients. Our experimental data sets, extracted from well-known institutions where the privacy and the confidentiality of the clients were not put at risk, support our contributions. In this work, we provide the motivations of our retail banking research project, describe the theory employed to improve the quality of financial services, and evaluate our methodologies quantitatively and qualitatively for each of the proposed research scenarios.

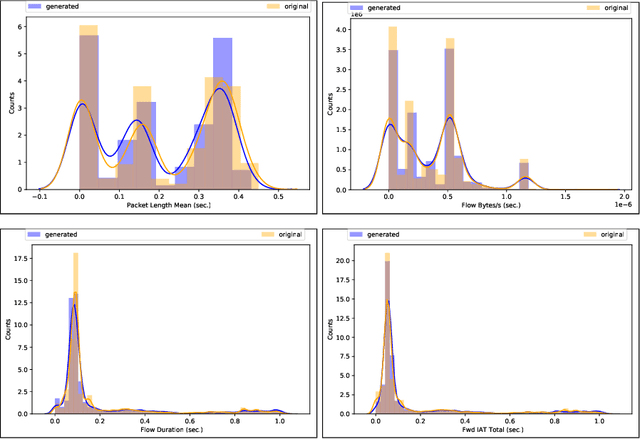

SynGAN: Towards Generating Synthetic Network Attacks using GANs

Aug 26, 2019

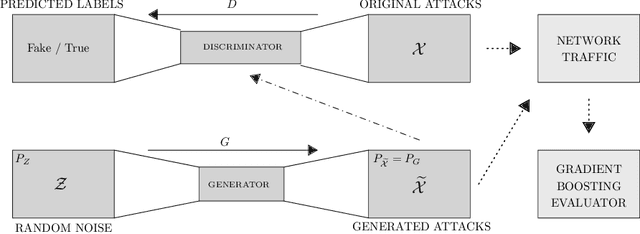

Abstract: Rapid digital transformation without security considerations has resulted in the rise of global-scale cyberattacks. The first line of defense against these attacks is Network Intrusion Detection Systems (NIDS). Once deployed, however, these systems work as black boxes with a high rate of false positives and no measurable effectiveness. There is a need to continuously test and improve these systems by emulating real-world network attack mutations. We present SynGAN, a framework that generates adversarial network attacks using Generative Adversarial Networks (GANs). SynGAN generates malicious packet flow mutations using real attack traffic, which can improve NIDS attack detection rates. As a first step, we compare two public datasets, NSL-KDD and CICIDS2017, for generating synthetic Distributed Denial of Service (DDoS) network attacks. We evaluate the attack quality (real vs. synthetic) using a gradient boosting classifier.

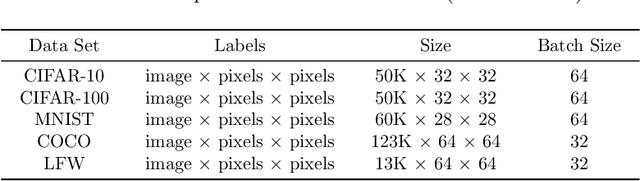

VecHGrad for solving accurately complex tensor decomposition

May 24, 2019

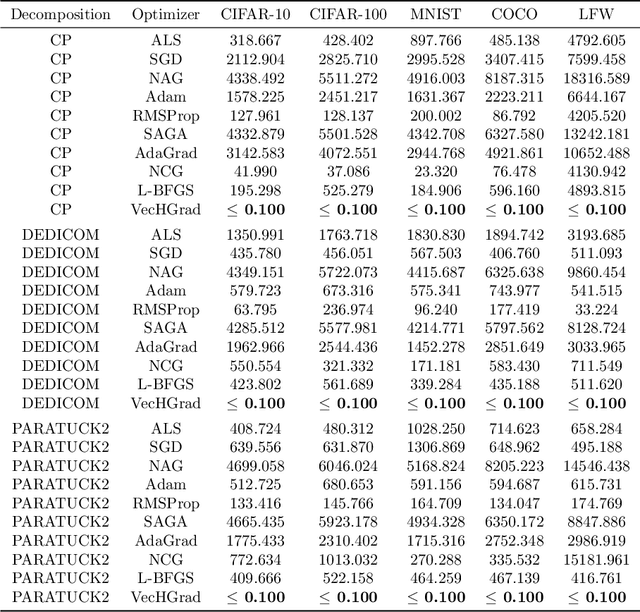

Abstract: Tensor decompositions, a collection of factorization techniques for multidimensional arrays, are among the most general and powerful tools for scientific analysis. However, because of their increasing size, today's data sets require more complex tensor decompositions involving factorization with multiple matrices and diagonal tensors, such as DEDICOM or PARATUCK2. Traditional tensor resolution algorithms such as Stochastic Gradient Descent (SGD), Non-linear Conjugate Gradient descent (NCG) or Alternating Least Squares (ALS) cannot be easily applied to complex tensor decompositions or often lead to poor accuracy at convergence. We propose a new resolution algorithm, called VecHGrad, for accurate and efficient stochastic resolution over all existing tensor decompositions, specifically designed for complex decompositions. VecHGrad relies on the gradient, the Hessian-vector product and an adaptive line search to ensure convergence during optimization. Our experiments on five real-world data sets with state-of-the-art deep learning gradient optimization models show that VecHGrad is capable of converging considerably faster because of its superior theoretical convergence rate per step. VecHGrad is therefore also relevant as a deep learning optimizer. The experiments are performed for various tensor decompositions including CP, DEDICOM and PARATUCK2. Although it involves a slightly more complex update rule, VecHGrad's runtime is similar in practice to that of gradient methods such as SGD, Adam or RMSProp.
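A Hessian-vector product can be obtained without ever forming the Hessian, which is what makes second-order information affordable at scale. The sketch below shows one common way to do this, a central finite difference of the gradient. It is an illustrative assumption, not the VecHGrad update rule: the function name, the `eps` step size, and the test function are all hypothetical.

```python
from typing import Callable, List

Vector = List[float]


def hessian_vector_product(grad: Callable[[Vector], Vector],
                           x: Vector, v: Vector, eps: float = 1e-5) -> Vector:
    """Approximate H(x) @ v by a central difference of the gradient:
    H v ~= (grad(x + eps*v) - grad(x - eps*v)) / (2*eps)."""
    x_plus = [xi + eps * vi for xi, vi in zip(x, v)]
    x_minus = [xi - eps * vi for xi, vi in zip(x, v)]
    g_plus, g_minus = grad(x_plus), grad(x_minus)
    return [(gp - gm) / (2.0 * eps) for gp, gm in zip(g_plus, g_minus)]


def grad_f(p: Vector) -> Vector:
    """Gradient of the toy function f(x, y) = x^2 + 3xy + 2y^2,
    whose Hessian is the constant matrix [[2, 3], [3, 4]]."""
    x, y = p
    return [2.0 * x + 3.0 * y, 3.0 * x + 4.0 * y]


# H @ [1, 2] for the Hessian above is [2 + 6, 3 + 8].
HV = hessian_vector_product(grad_f, [1.0, -1.0], [1.0, 2.0])
print(HV)
```

Only two gradient evaluations are needed per product, so the cost stays in the same order as a first-order step.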

MQLV: Modified Q-Learning for Vasicek Model

May 24, 2019

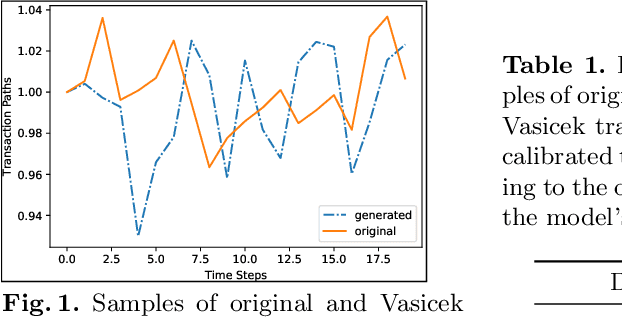

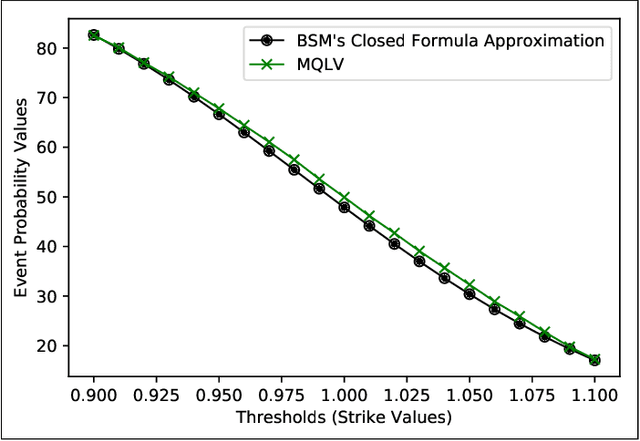

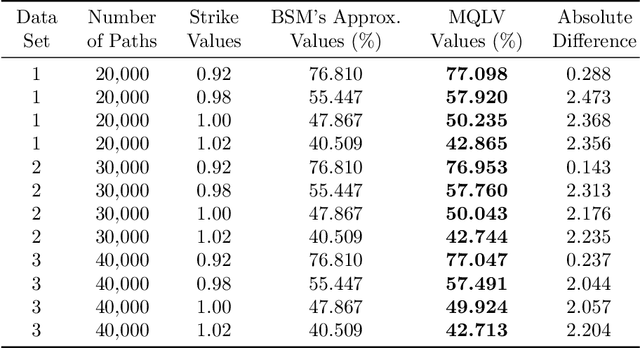

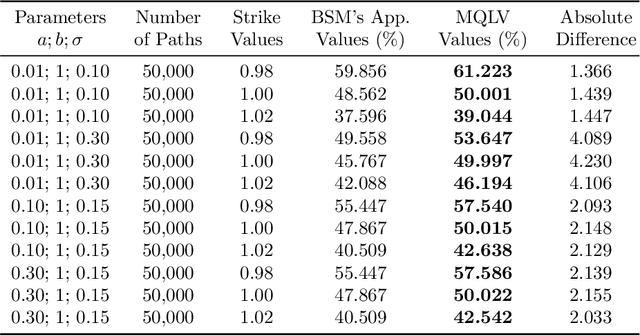

Abstract: In a reinforcement learning approach, an optimal value function is learned across a set of actions, or decisions, that leads to a set of states giving different rewards, with the objective of maximizing the overall reward. A policy assigns to each state-action pair an expected return. We call an optimal policy a policy for which the value function is optimal. QLBS, Q-Learner in the Black-Scholes(-Merton) Worlds, applies reinforcement learning concepts, notably the popular Q-learning algorithm, to the financial stochastic model described by Black, Scholes and Merton. However, QLBS is specifically optimized for the geometric Brownian motion and the pricing of vanilla options. Consequently, it suffers from the traditional over-estimation of the Q-values, reflected by an over-estimation of the vanilla option prices. Furthermore, its range of application is limited to vanilla option pricing within the financial markets. We propose MQLV, Modified Q-Learner for the Vasicek model, a new reinforcement learning approach that limits the over-estimation of the Q-values observed in QLBS and extends the simulation to mean-reverting stochastic diffusion processes. Additionally, MQLV uses a digital function to estimate the future probability of an event, thus widening the scope of the financial application to any other domain involving time series. Our experiments underline the potential of MQLV on generated Monte Carlo simulations that are particularly representative of retail banking time series. In particular, MQLV is able to determine the optimal policy of money management based on the aggregated financial transactions of the clients, unlocking new frontiers to establish personalized credit card limits or loans. Finally, MQLV is the first methodology compatible with the Vasicek model capable of event probability estimation, targeting the simulation of event probabilities in retail banking.
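A mean-reverting diffusion of the Vasicek type, dr = a(b - r)dt + sigma dW, can be simulated with a simple Euler-Maruyama scheme. The sketch below illustrates only that process, not MQLV itself; the function name, the parameter names (`a` for the reversion speed, `b` for the long-run mean, `sigma` for the volatility) and the numeric values are assumptions chosen for the example.

```python
import math
import random
from typing import List


def simulate_vasicek(r0: float, a: float, b: float, sigma: float,
                     dt: float, n_steps: int, seed: int = 0) -> List[float]:
    """Euler-Maruyama path of dr = a*(b - r)*dt + sigma*dW.

    Returns n_steps + 1 values, starting at r0.
    """
    rng = random.Random(seed)  # local generator, so runs are reproducible
    path = [r0]
    for _ in range(n_steps):
        r = path[-1]
        drift = a * (b - r) * dt                              # pull towards b
        shock = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)   # Brownian increment
        path.append(r + drift + shock)
    return path


# With sigma = 0 the path is deterministic and decays towards b.
noiseless = simulate_vasicek(r0=0.0, a=1.0, b=1.0, sigma=0.0, dt=0.1, n_steps=200)
noisy = simulate_vasicek(r0=0.0, a=1.0, b=1.0, sigma=0.2, dt=0.1, n_steps=200)
print(noiseless[-1], noisy[-1])
```

Monte Carlo experiments of the kind the abstract mentions would repeat such a simulation over many seeds.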

PHom-WAE: Persitent Homology for Wasserstein Auto-Encoders

May 24, 2019

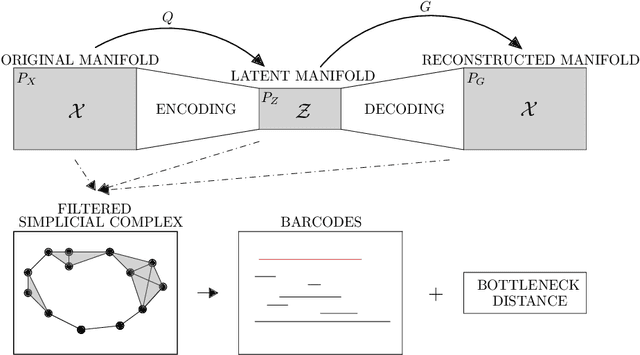

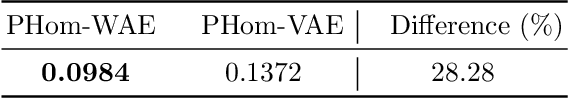

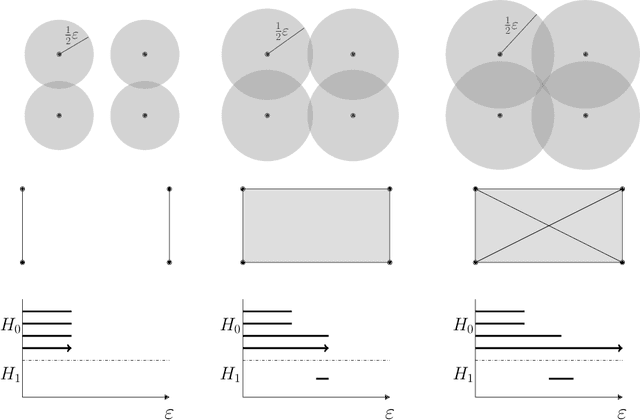

Abstract: Auto-encoders are among the most popular neural network architectures for dimension reduction. They are composed of two parts: the encoder, which maps the model distribution to a latent manifold, and the decoder, which maps the latent manifold to a reconstructed distribution. However, auto-encoders are known to provoke chaotically scattered data distributions in the latent manifold, resulting in an incomplete reconstructed distribution. Current distance measures fail to detect this problem because they are not able to acknowledge the shape of the data manifolds, i.e. their topological features, and the scale at which the manifolds should be analyzed. We propose Persistent Homology for Wasserstein Auto-Encoders, called PHom-WAE, a new methodology to assess and measure the data distribution of a generative model. PHom-WAE minimizes the Wasserstein distance between the true distribution and the reconstructed distribution and uses persistent homology, the study of the topological features of a space at different spatial resolutions, to compare the nature of the latent manifold and the reconstructed distribution. Our experiments underline the potential of persistent homology for Wasserstein Auto-Encoders in comparison to Variational Auto-Encoders, another type of generative model. The experiments are conducted on a real-world data set that is particularly challenging for traditional distance measures and auto-encoders. PHom-WAE is the first methodology to propose a topological distance measure, the bottleneck distance, for Wasserstein Auto-Encoders used to compare decoded samples of high quality in the context of credit card transactions.

PHom-GeM: Persistent Homology for Generative Models

May 23, 2019

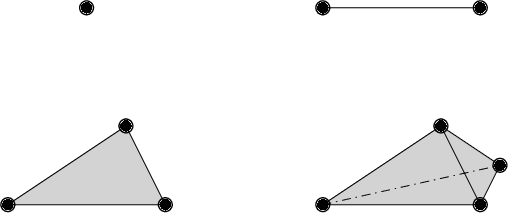

Abstract: Generative neural network models, including Generative Adversarial Networks (GANs) and Auto-Encoders (AEs), are among the most popular neural network models for generating adversarial data. The GAN model is composed of a generator that produces synthetic data and a discriminator that discriminates between the generator's output and the true data. An AE consists of an encoder, which maps the model distribution to a latent manifold, and a decoder, which maps the latent manifold to a reconstructed distribution. However, generative models are known to provoke chaotically scattered reconstructed distributions during their training and, consequently, incomplete generated adversarial distributions. Current distance measures fail to address this problem because they are not able to acknowledge the shape of the data manifold, i.e. its topological features, and the scale at which the manifold should be analyzed. We propose Persistent Homology for Generative Models, PHom-GeM, a new methodology to assess and measure the distribution of a generative model. PHom-GeM minimizes an objective function between the true and the reconstructed distributions and uses persistent homology, the study of the topological features of a space at different spatial resolutions, to compare the nature of the true and the generated distributions. Our experiments underline the potential of persistent homology for Wasserstein GAN in comparison to Wasserstein AE and Variational AE. The experiments are conducted on a real-world data set that is particularly challenging for traditional distance measures and generative neural network models. PHom-GeM is the first methodology to propose a topological distance measure, the bottleneck distance, for generative models used to compare adversarial samples in the context of credit card transactions.

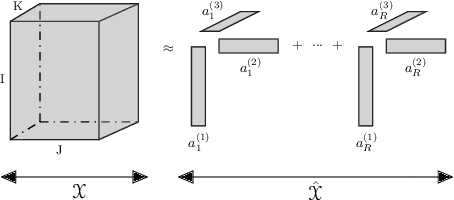

Predicting Sparse Clients' Actions with CPOPT-Net in the Banking Environment

May 23, 2019

Abstract: The digital revolution of the banking system, together with evolving European regulations, has pushed the major banking actors to innovate through new uses of their clients' digital information. Given highly sparse client activities, we propose CPOPT-Net, an algorithm that combines the CP canonical tensor decomposition, a multidimensional matrix decomposition that factorizes a tensor as the sum of rank-one tensors, and neural networks. CPOPT-Net efficiently removes sparse information with a gradient-based resolution while relying on neural networks for time series predictions. Our experiments show that CPOPT-Net is capable of performing accurate predictions of the clients' actions in the context of personalized recommendation. CPOPT-Net is the first algorithm to use non-linear conjugate gradient tensor resolution with neural networks to propose predictions of financial activities on a public data set.
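The statement that CP factorizes a tensor as a sum of rank-one tensors can be made concrete for a three-way tensor: each entry is X[i][j][k] = sum over r of A[i][r] * B[j][r] * C[k][r]. The sketch below only rebuilds a tensor from given factors; it does not fit them and is not CPOPT-Net. The factor matrices `A`, `B`, `C` and their values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Tensor3 = List[List[List[float]]]


def cp_reconstruct(A: Matrix, B: Matrix, C: Matrix) -> Tensor3:
    """Rebuild a 3-way tensor from CP factors.

    Column r of A, B and C defines one rank-one tensor (their outer
    product); the result is the sum of these rank-one terms.
    """
    rank = len(A[0])
    return [[[sum(A[i][r] * B[j][r] * C[k][r] for r in range(rank))
              for k in range(len(C))]
             for j in range(len(B))]
            for i in range(len(A))]


# Hypothetical rank-2 factors for a 2 x 3 x 2 tensor.
A = [[1.0, 0.0], [0.0, 2.0]]
B = [[1.0, 1.0], [0.0, 1.0], [2.0, 0.0]]
C = [[1.0, 3.0], [1.0, 0.0]]
X = cp_reconstruct(A, B, C)
print(X)
```

A fitting procedure would search for the factors that minimize the reconstruction error against an observed tensor.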

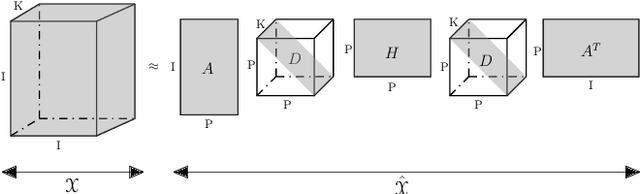

User-Device Authentication in Mobile Banking using APHEN for Paratuck2 Tensor Decomposition

May 23, 2019

Abstract: New European financial regulations such as PSD2 are changing retail banking services. Notably, the monitoring of personal expenses is now open to institutions other than retail banks. Nonetheless, retail banks are looking to leverage user-device authentication on mobile banking applications to enhance personal financial advertisement. To address the profiling of the authentication, we rely on tensor decomposition, a higher-dimensional analogue of matrix decomposition. We use Paratuck2, which expresses a tensor as a multiplication of matrices and diagonal tensors, because of the imbalance between the number of users and devices. We highlight why Paratuck2 is more appropriate in this case than the popular CP tensor decomposition, which decomposes a tensor as a sum of rank-one tensors. However, computing Paratuck2 is computationally intensive. We propose a new APproximate HEssian-based Newton resolution algorithm, APHEN, capable of solving Paratuck2 more accurately and faster than the other popular approaches based on alternating least squares or gradient descent. The results of Paratuck2 are used for the prediction of users' authentication with neural networks. We apply our method to the concrete case of targeting clients for financial advertising campaigns based on the authentication events generated by mobile banking applications.
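For reference, the Paratuck2 model is usually written slice by slice, as below. The symbols follow common notation in the tensor decomposition literature and are not taken from the abstract, so they should be read as an assumption about the form used in the paper.

$$
X_k \approx A \, D_k^{A} \, H \, D_k^{B} \, B^{\top}, \qquad k = 1, \dots, K
$$

Here $X_k \in \mathbb{R}^{I \times J}$ is the $k$-th frontal slice of the tensor, $A \in \mathbb{R}^{I \times P}$ and $B \in \mathbb{R}^{J \times Q}$ are factor matrices, $H \in \mathbb{R}^{P \times Q}$ captures the interactions between the two sets of latent components, and $D_k^{A} \in \mathbb{R}^{P \times P}$, $D_k^{B} \in \mathbb{R}^{Q \times Q}$ are diagonal matrices (the slices of the diagonal tensors). Because $P$ and $Q$ may differ, the two modes can use different numbers of latent components, which fits the imbalance between users and devices described above.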
