Jen-Tang Lu

Multimodal Volume-Aware Detection and Segmentation for Brain Metastases Radiosurgery

Aug 15, 2019

Abstract: Stereotactic radiosurgery (SRS), which delivers high doses of radiation to small targets in one or a few fractions, has become a standard of care for brain metastases. While very effective, SRS currently requires labor-intensive manual delineation of tumors. In this work, we present a deep learning approach for automated detection and segmentation of brain metastases using multimodal imaging and ensemble neural networks. To handle small and multiple brain metastases, we further propose a volume-aware Dice loss, which uses lesion-size information to optimize model performance. This work surpasses current benchmark levels and demonstrates a reliable AI-assisted system for SRS treatment planning for multiple brain metastases.
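The abstract does not give the formula for the volume-aware Dice loss, so the following is only a minimal sketch of one plausible reading: a soft Dice loss in which each lesion's voxels are weighted by the inverse of that lesion's volume, so that missing a small metastasis costs about as much as missing a large one. The function name `volume_aware_dice_loss`, the integer `lesion_labels` input (0 for background, k > 0 for the k-th lesion), and the mean-volume normalization are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def volume_aware_dice_loss(prob, lesion_labels, eps=1e-6):
    """Soft Dice loss with per-lesion inverse-volume weights (illustrative only).

    prob          : predicted foreground probabilities in [0, 1]
    lesion_labels : integer array of the same shape, 0 = background,
                    k > 0 = voxels of the k-th ground-truth lesion
    """
    prob = np.asarray(prob, dtype=float)
    lesion_labels = np.asarray(lesion_labels)
    target = (lesion_labels > 0).astype(float)

    # Background voxels keep weight 1. A lesion of average size also gets
    # weight 1; smaller lesions get proportionally larger weights.
    weights = np.ones_like(prob)
    lesion_ids = [k for k in np.unique(lesion_labels) if k != 0]
    if lesion_ids:
        volumes = {k: int((lesion_labels == k).sum()) for k in lesion_ids}
        mean_volume = sum(volumes.values()) / len(volumes)
        for k, volume in volumes.items():
            weights[lesion_labels == k] = mean_volume / volume

    intersection = np.sum(weights * prob * target)
    denominator = np.sum(weights * prob) + np.sum(weights * target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)
```

With one 8-voxel lesion and one 1-voxel lesion, a prediction that finds only the large lesion is penalized far more under this weighting than under plain Dice, which is the behavior the abstract attributes to using lesion-size information.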

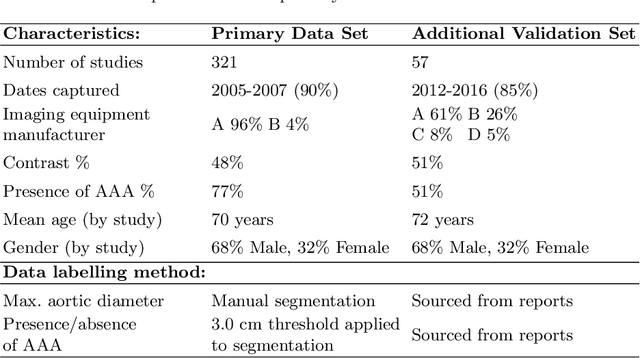

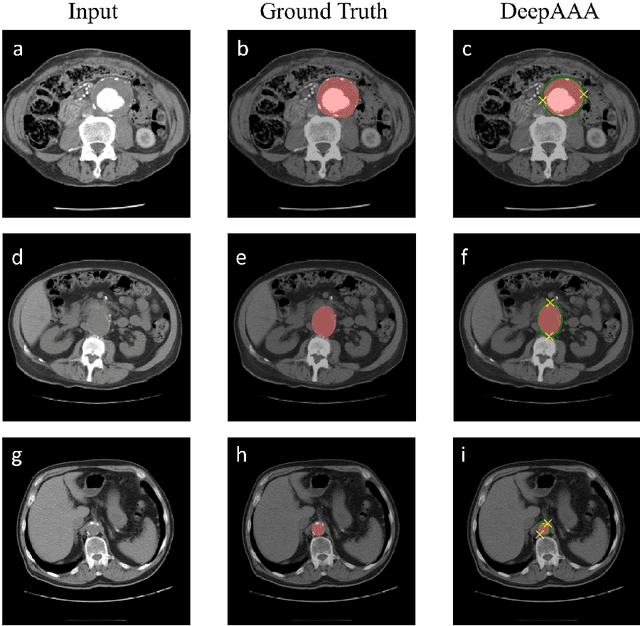

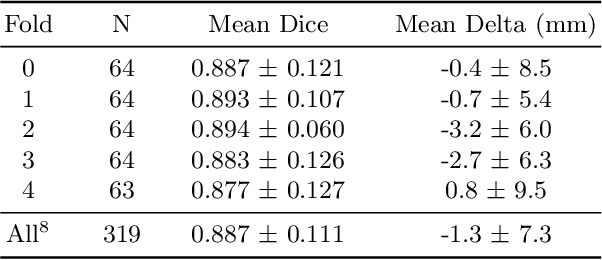

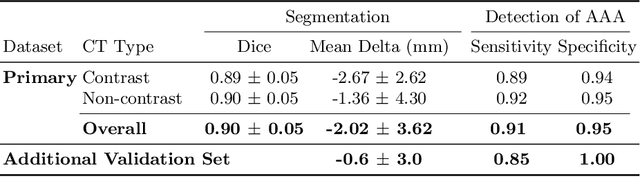

DeepAAA: clinically applicable and generalizable detection of abdominal aortic aneurysm using deep learning

Jul 04, 2019

Abstract: We propose a deep learning-based technique for detection and quantification of abdominal aortic aneurysms (AAAs). The condition, which leads to more than 10,000 deaths per year in the United States, is asymptomatic, often detected incidentally, and often missed by radiologists. Our model architecture is a modified 3D U-Net combined with ellipse fitting that performs aorta segmentation and AAA detection. The study uses 321 abdominal-pelvic CT examinations performed by the Massachusetts General Hospital Department of Radiology for training and validation. The model is then further tested for generalizability on a separate set of 57 examinations whose patient demographics and acquisition characteristics differ from those of the original dataset. DeepAAA achieves high performance on both sets of data (sensitivity/specificity of 0.91/0.95 and 0.85/1.0, respectively), works on both contrast and non-contrast CT scans, and handles image volumes with varying numbers of images. We find that DeepAAA exceeds literature-reported performance of radiologists on incidental AAA detection. It is expected that the model can serve as an effective background detector in routine CT examinations to prevent incidental AAAs from being missed.
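The abstract pairs segmentation with ellipse fitting but does not describe how the fit is done. As a rough sketch under stated assumptions, the example below fits an ellipse to each axial slice of a binary aorta mask using second-order moments (for a filled ellipse, the variance along a semi-axis of length a is a²/4) and flags a scan when the largest per-slice minor axis reaches a threshold. The helper names, the choice of the minor axis, and the 30 mm default (a commonly used clinical cutoff for AAA) are assumptions, not details taken from the paper.

```python
import numpy as np

def ellipse_axes_mm(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Return (major, minor) full axis lengths in mm of a moment-fitted ellipse."""
    rows, cols = np.nonzero(mask)
    if rows.size < 3:
        return 0.0, 0.0
    coords = np.stack(
        [rows * pixel_spacing_mm[0], cols * pixel_spacing_mm[1]], axis=1
    )
    # Eigenvalues of the coordinate covariance are the variances along the
    # ellipse axes; a filled ellipse with semi-axis a has variance a**2 / 4,
    # so the full axis length is 4 * sqrt(variance).
    eigvals = np.linalg.eigvalsh(np.cov(coords, rowvar=False))
    minor, major = 4.0 * np.sqrt(np.maximum(eigvals, 0.0))
    return float(major), float(minor)

def flag_aneurysm(aorta_masks, pixel_spacing_mm=(1.0, 1.0), threshold_mm=30.0):
    """Flag a scan if any slice's fitted minor axis reaches the threshold.

    aorta_masks: iterable of 2D binary masks, one per axial slice (assumed
    to be the output of a segmentation model). The minor axis is used here
    because an oblique cut through the vessel elongates the major axis.
    """
    max_diameter = max(
        (ellipse_axes_mm(m, pixel_spacing_mm)[1] for m in aorta_masks),
        default=0.0,
    )
    return max_diameter >= threshold_mm, max_diameter
```

A production pipeline would also need to handle slices where the mask contains several disconnected components, which this sketch ignores.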

DeepSPINE: Automated Lumbar Vertebral Segmentation, Disc-level Designation, and Spinal Stenosis Grading Using Deep Learning

Jul 26, 2018

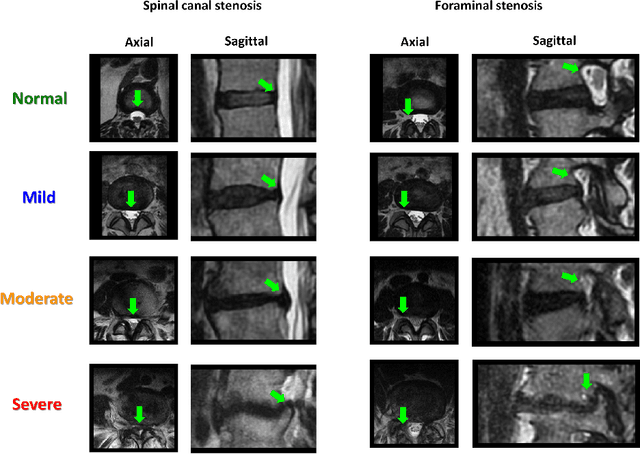

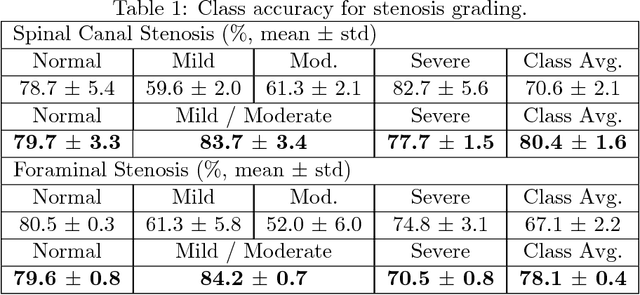

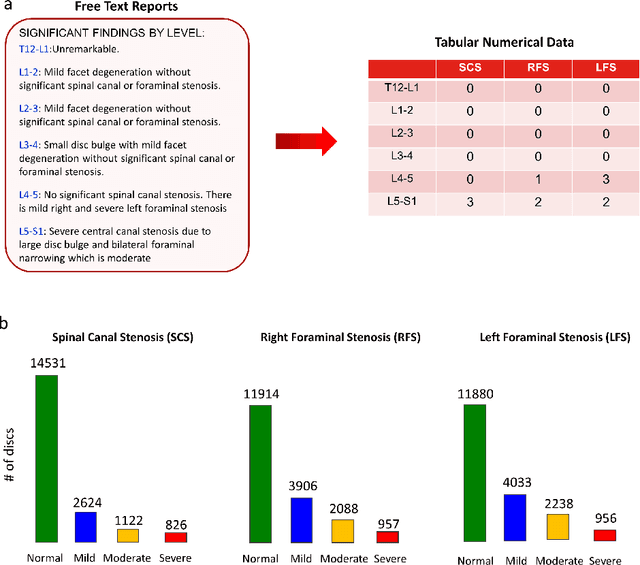

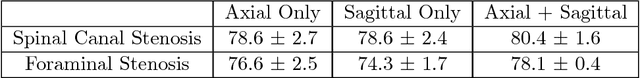

Abstract: The high prevalence of spinal stenosis results in a large volume of MRI examinations, yet interpretation can be time-consuming, with high inter-reader variability even among the most specialized radiologists. In this paper, we develop an efficient methodology to leverage the subject-matter expertise stored in large-scale archival reporting and image data for a deep-learning approach to fully automated lumbar spinal stenosis grading. Specifically, we introduce three major contributions: (1) a natural-language-processing scheme to extract level-by-level ground-truth labels from free-text radiology reports for the various types and grades of spinal stenosis, (2) accurate vertebral segmentation and disc-level localization using a U-Net architecture combined with a spine-curve fitting method, and (3) a multi-input, multi-task, and multi-class convolutional neural network to perform central canal and foraminal stenosis grading on both axial and sagittal imaging series inputs, with the extracted report-derived labels applied to corresponding imaging level segments. This study uses a large dataset of 22,796 disc levels extracted from 4,075 patients. We achieve state-of-the-art performance on lumbar spinal stenosis classification and expect the technique will increase both radiology workflow efficiency and the perceived value of radiology reports for referring clinicians and patients.
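The first contribution, extracting level-by-level labels from free-text reports, can be illustrated with a small rule-based sketch. The abstract does not describe the actual NLP scheme, so the regular expressions, the four-grade vocabulary, the one-level-per-sentence assumption, and the sample report wording below are all hypothetical.

```python
import re

# Hypothetical patterns: a disc level such as "L4-L5" or "L5-S1", and a
# graded finding such as "moderate central canal stenosis".
LEVEL = re.compile(r"\b(L[1-5]-(?:L[2-5]|S1))\b", re.IGNORECASE)
GRADE = re.compile(
    r"\b(no|mild|moderate|severe)\s+(central canal|foraminal)\s+stenosis",
    re.IGNORECASE,
)

def extract_labels(report):
    """Map (disc level, stenosis type) to a grade, one sentence at a time.

    Assumes each sentence mentions at most one disc level and that findings
    in the sentence refer to that level.
    """
    labels = {}
    for sentence in re.split(r"(?<=[.;])\s+|\n+", report):
        level = LEVEL.search(sentence)
        if level is None:
            continue
        for grade, kind in GRADE.findall(sentence):
            labels[(level.group(1).upper(), kind.lower())] = grade.lower()
    return labels

example_report = (
    "L3-L4: No central canal stenosis. "
    "L4-L5: Moderate central canal stenosis and mild foraminal stenosis.\n"
    "L5-S1: severe foraminal stenosis."
)
example_labels = extract_labels(example_report)
```

Real reports vary far more in phrasing (negation, laterality, ranges of levels), so a rule set like this would only be a starting point for the kind of label extraction the abstract describes.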
