Jean-Marc Andreoli

GOAL: A Generalist Combinatorial Optimization Agent Learner

Jun 21, 2024

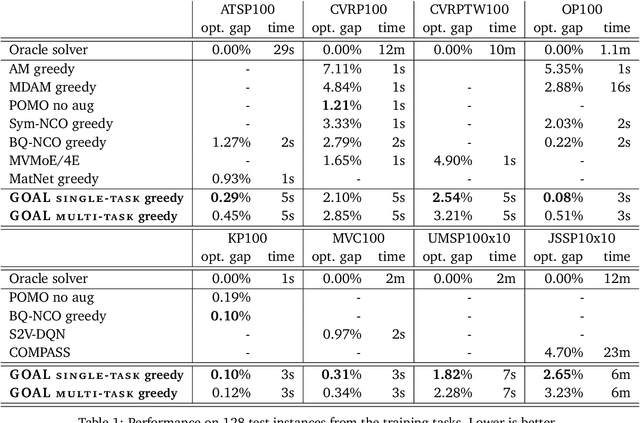

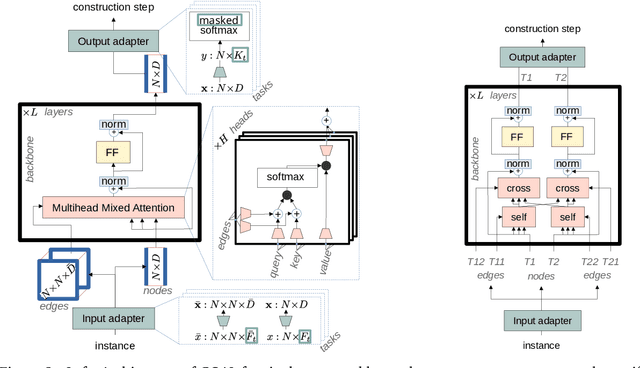

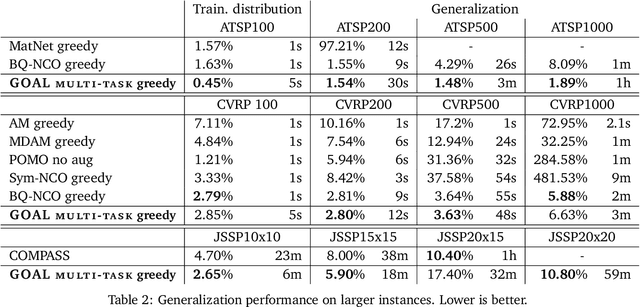

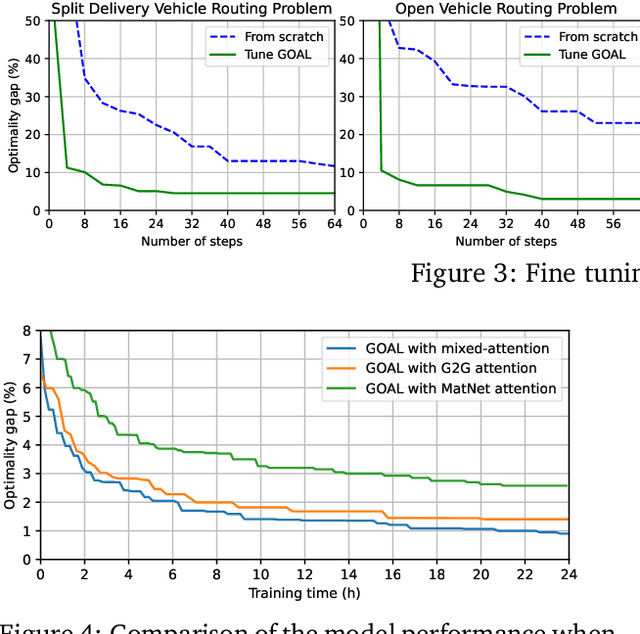

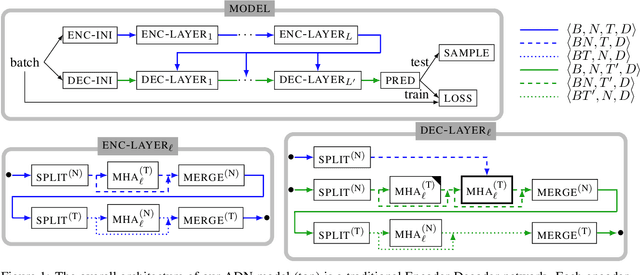

Abstract: Machine Learning-based heuristics have recently shown impressive performance in solving a variety of hard combinatorial optimization problems (COPs). However, they generally rely on a separate neural model, specialized and trained for each single problem. Any variation of a problem requires adjustment of its model and re-training from scratch. In this paper, we propose GOAL (for Generalist combinatorial Optimization Agent Learning), a generalist model capable of efficiently solving multiple COPs and which can be fine-tuned to solve new COPs. GOAL consists of a single backbone plus light-weight problem-specific adapters, mostly for input and output processing. The backbone is based on a new form of mixed-attention blocks which make it possible to handle problems defined on graphs with arbitrary combinations of node, edge and instance-level features. Additionally, problems which involve heterogeneous nodes or edges, such as in multi-partite graphs, are handled through a novel multi-type transformer architecture, where the attention blocks are duplicated to attend only to the relevant combination of types while relying on the same shared parameters. We train GOAL on a set of routing, scheduling and classic graph problems and show that it is only slightly inferior to the specialized baselines while being the first multi-task model that solves a variety of COPs. Finally, we showcase the strong transfer learning capacity of GOAL by fine-tuning or learning the adapters for new problems, with only a few shots and little data.

BQ-NCO: Bisimulation Quotienting for Generalizable Neural Combinatorial Optimization

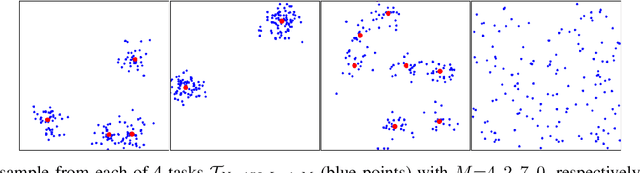

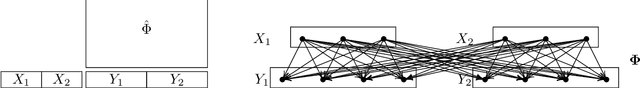

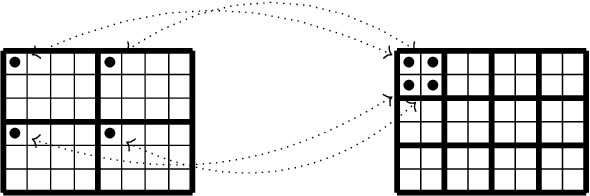

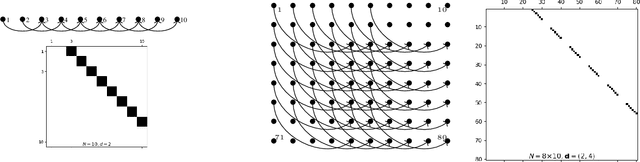

Jan 12, 2023

Abstract: Despite the success of Neural Combinatorial Optimization methods for end-to-end heuristic learning, out-of-distribution generalization remains a challenge. In this paper, we present a novel formulation of combinatorial optimization (CO) problems as Markov Decision Processes (MDPs) that effectively leverages symmetries of the CO problems to improve out-of-distribution robustness. Starting from the standard MDP formulation of constructive heuristics, we introduce a generic transformation based on bisimulation quotienting (BQ) in MDPs. This transformation reduces the state space by accounting for the intrinsic symmetries of the CO problem and makes the MDP easier to solve. We illustrate our approach on the Traveling Salesman, Capacitated Vehicle Routing and Knapsack Problems. We present a BQ reformulation of these problems and introduce a simple attention-based policy network that we train by imitation of (near) optimal solutions for small instances from a single distribution. We obtain new state-of-the-art generalization results for instances with up to 1000 nodes from synthetic and realistic benchmarks that vary both in size and node distributions.

On the Generalization of Neural Combinatorial Optimization Heuristics

Jun 01, 2022

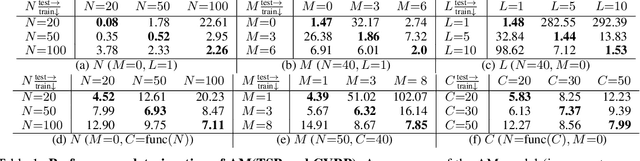

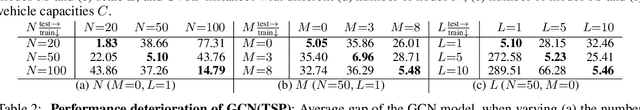

Abstract: Neural Combinatorial Optimization approaches have recently leveraged the expressiveness and flexibility of deep neural networks to learn efficient heuristics for hard Combinatorial Optimization (CO) problems. However, most of the current methods lack generalization: for a given CO problem, heuristics which are trained on instances with certain characteristics underperform when tested on instances with different characteristics. While some previous works have focused on varying the properties of the training instances, we postulate that a one-size-fits-all model is out of reach. Instead, we formalize solving a CO problem over a given instance distribution as a separate learning task and investigate meta-learning techniques to learn a model on a variety of tasks, in order to optimize its capacity to adapt to new tasks. Through extensive experiments, on two CO problems, using both synthetic and realistic instances, we show that our proposed meta-learning approach significantly improves the generalization of two state-of-the-art models.

Structured Time Series Prediction without Structural Prior

Feb 07, 2022

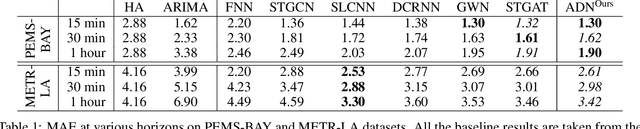

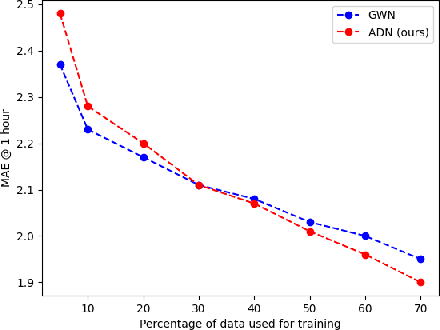

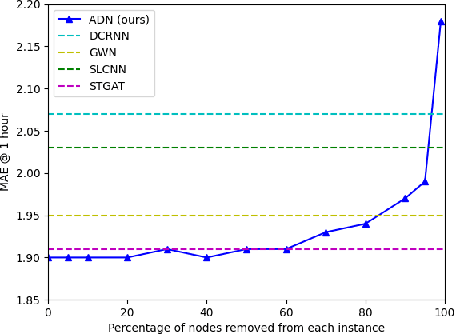

Abstract: Time series prediction is a widespread and well studied problem with applications in many domains (medical, geoscience, network analysis, finance, econometrics etc.). In the case of multivariate time series, the key to good performance is to properly capture the dependencies between the variates. Often, these variates are structured, i.e. they are localised in an abstract space, usually representing an aspect of the physical world, and prediction amounts to a form of diffusion of the information across that space over time. Several neural network models of diffusion have been proposed in the literature. However, most of the existing proposals rely on some a priori knowledge of the structure of the space, usually in the form of a graph weighing the pairwise diffusion capacity of its points. We argue that this piece of information can often be dispensed with, since data already contains the diffusion capacity information, and in a more reliable form than that obtained from the usually largely hand-crafted graphs. We propose instead a fully data-driven model which does not rely on such a graph, nor any other prior structural information. We conduct a first set of experiments to measure the impact on performance of a structural prior, as used in baseline models, and show that, except at very low data levels, it remains negligible, and beyond a threshold, it may even become detrimental. We then investigate, through a second set of experiments, the capacity of our model in two respects: treatment of missing data and domain adaptation.

Simple and Effective Balance of Contrastive Losses

Dec 22, 2021

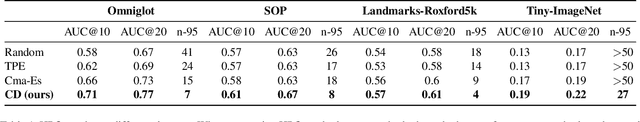

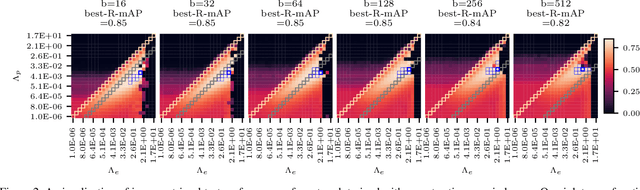

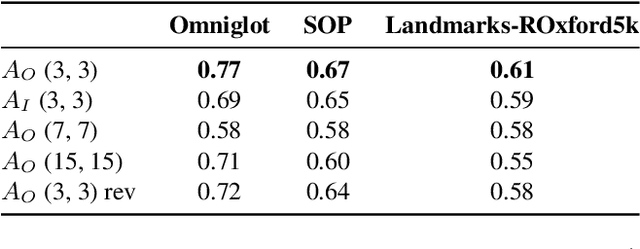

Abstract: Contrastive losses have long been a key ingredient of deep metric learning and are now becoming more popular due to the success of self-supervised learning. Recent research has shown the benefit of decomposing such losses into two sub-losses which act in a complementary way when learning the representation network: a positive term and an entropy term. Although the overall loss is thus defined as a combination of two terms, the balance of these two terms is often hidden behind implementation details and is largely ignored and sub-optimal in practice. In this work, we approach the balance of contrastive losses as a hyper-parameter optimization problem, and propose a coordinate descent-based search method that efficiently finds the hyper-parameters that optimize evaluation performance. In the process, we extend existing balance analyses to the contrastive margin loss, include batch size in the balance, and explain how to aggregate loss elements from the batch to maintain near-optimal performance over a larger range of batch sizes. Extensive experiments with benchmarks from deep metric learning and self-supervised learning show that optimal hyper-parameters are found faster with our method than with other common search methods.
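To make the two-term decomposition mentioned in this abstract concrete, here is a minimal sketch for a single anchor. It assumes an InfoNCE-style loss, which is one common contrastive loss and not necessarily the exact formulation studied in the paper. The function name `balanced_contrastive_loss` and the parameters `temperature` and `weight` are hypothetical names chosen for illustration.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def balanced_contrastive_loss(anchor, positive, negatives,
                              temperature=0.1, weight=1.0):
    """Return (positive_term, entropy_term, total) for one anchor.

    Assumption: InfoNCE-style loss. `weight` is a hypothetical
    balance hyper-parameter; weight=1.0 recovers plain InfoNCE.
    """
    pos_sim = dot(anchor, positive) / temperature
    sims = [pos_sim] + [dot(anchor, n) / temperature for n in negatives]

    # Positive term: rewards high similarity between anchor and positive.
    positive_term = -pos_sim

    # Entropy-like term: log-sum-exp over all candidates, computed
    # stably by subtracting the maximum before exponentiating.
    m = max(sims)
    entropy_term = m + math.log(sum(math.exp(s - m) for s in sims))

    total = positive_term + weight * entropy_term
    return positive_term, entropy_term, total


if __name__ == "__main__":
    pos, ent, total = balanced_contrastive_loss(
        [1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]], temperature=1.0
    )
    print(pos, ent, total)
```

With `weight=1.0` the total equals the negative log-softmax of the positive pair, so the balance between the two terms is implicit. Exposing `weight` explicitly is the kind of hyper-parameter a search method could tune.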

Distributional Reinforcement Learning for Energy-Based Sequential Models

Dec 18, 2019

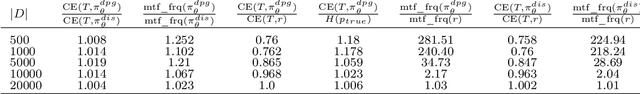

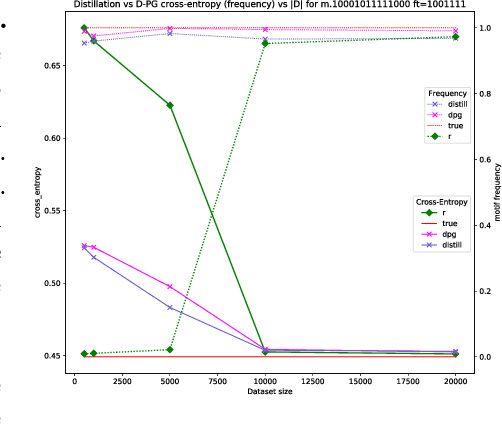

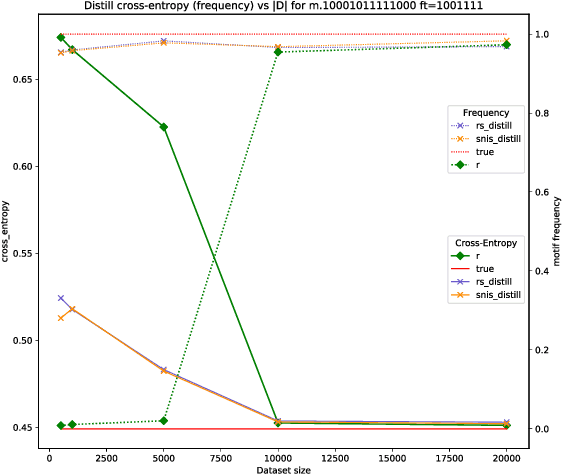

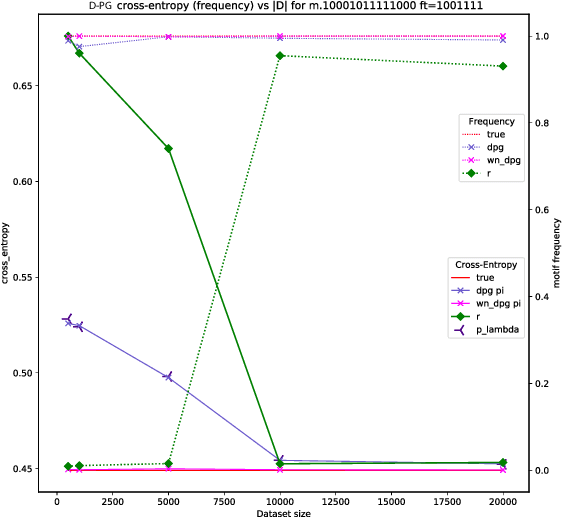

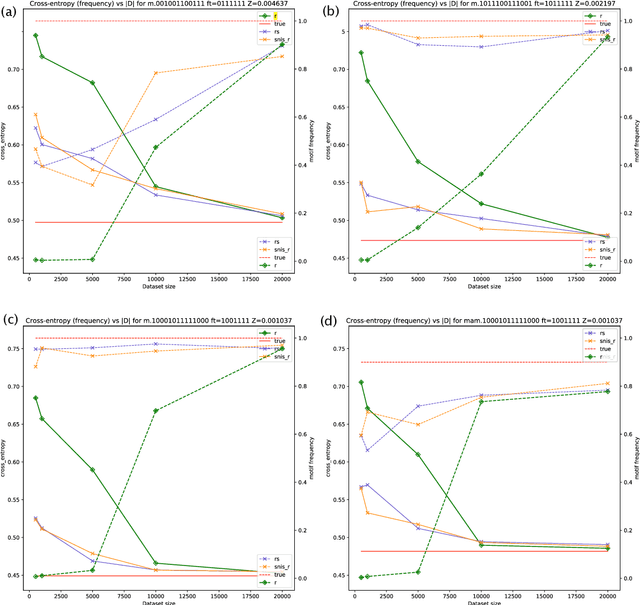

Abstract: Global Autoregressive Models (GAMs) are a recent proposal [Parshakova et al., CoNLL 2019] for exploiting global properties of sequences for data-efficient learning of seq2seq models. In the first phase of training, an Energy-Based model (EBM) over sequences is derived. This EBM has high representational power, but is unnormalized and cannot be directly exploited for sampling. To address this issue, [Parshakova et al., CoNLL 2019] proposes a distillation technique, which can only be applied under limited conditions. By relating this problem to Policy Gradient techniques in RL, but in a *distributional* rather than *optimization* perspective, we propose a general approach applicable to any sequential EBM. Its effectiveness is illustrated on GAM-based experiments.

Global Autoregressive Models for Data-Efficient Sequence Learning

Sep 19, 2019

Abstract: Standard autoregressive seq2seq models are easily trained by max-likelihood, but tend to show poor results under small-data conditions. We introduce a class of seq2seq models, GAMs (Global Autoregressive Models), which combine an autoregressive component with a log-linear component, allowing the use of global *a priori* features to compensate for lack of data. We train these models in two steps. In the first step, we obtain an *unnormalized* GAM that maximizes the likelihood of the data, but is improper for fast inference or evaluation. In the second step, we use this GAM to train (by distillation) a second autoregressive model that approximates the *normalized* distribution associated with the GAM, and can be used for fast inference and evaluation. Our experiments focus on language modelling under synthetic conditions and show a strong perplexity reduction from using the second autoregressive model over the standard one.
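The combination of an autoregressive component with a log-linear component described in this abstract can be sketched as follows. The notation is chosen here for illustration and may differ from the paper's: $r(x)$ stands for the autoregressive model, $\phi(x)$ for a vector of global features, and $\lambda$ for the log-linear weights.

```latex
% Unnormalized GAM: autoregressive factor times log-linear factor
\tilde{P}_\lambda(x) = r(x)\,\exp\big(\langle \lambda, \phi(x) \rangle\big)

% Normalized distribution: requires a sum over all sequences x'
P_\lambda(x) = \frac{\tilde{P}_\lambda(x)}{Z_\lambda},
\qquad
Z_\lambda = \sum_{x'} r(x')\,\exp\big(\langle \lambda, \phi(x') \rangle\big)
```

Under this reading, the partition function $Z_\lambda$ ranges over all sequences, which is why the unnormalized model is unsuitable for fast inference and a second autoregressive model is trained to approximate $P_\lambda$.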

Convolution is outer product

May 03, 2019

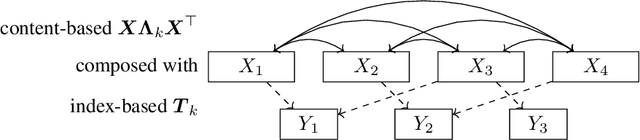

Abstract: The inner product operation between tensors is the cornerstone of deep neural network architectures, directly inherited from linear algebra. There is a striking contrast between the uniqueness of this basic construct and the extreme diversity of high level constructs which have been invented to address various application domains. This paper is interested in an intermediate construct, convolution, and its corollary, attention, which have become ubiquitous in many applications, but are still presented in an ad-hoc fashion depending on the application context. We first identify the common problem addressed by most existing forms of convolution, and show how the solution to that problem naturally involves another very generic operation of linear algebra, the outer product between tensors. We then proceed to show that attention is a form of convolution, called "content based" convolution, hence amenable to the generic formulation based on the outer product. The reader looking for yet another architecture yielding better performance results on a specific task is in for some disappointment. The reader aiming at a better, more grounded understanding of familiar concepts may find food for thought.

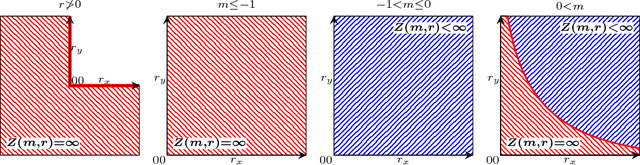

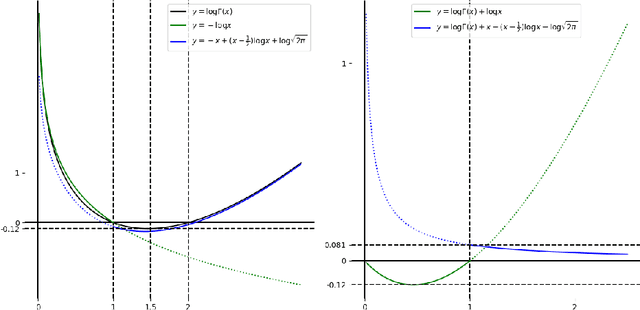

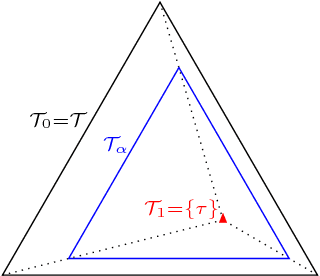

A conjugate prior for the Dirichlet distribution

Nov 13, 2018

Abstract: This note investigates a conjugate class for the Dirichlet distribution class in the exponential family.
