GOAL: A Generalist Combinatorial Optimization Agent Learner

Paper and Code

Jun 21, 2024

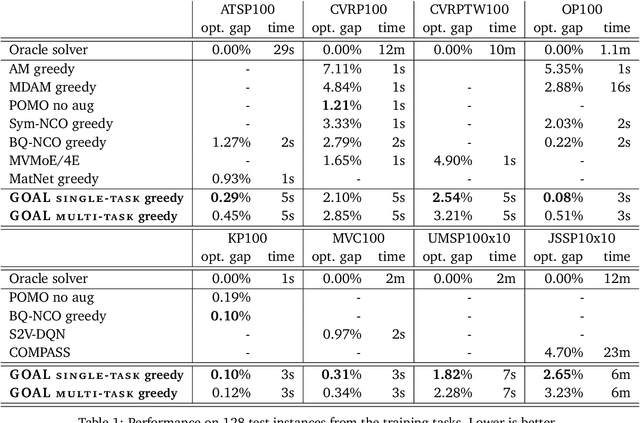

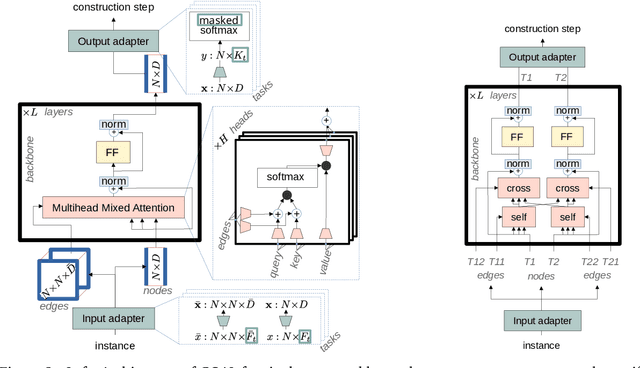

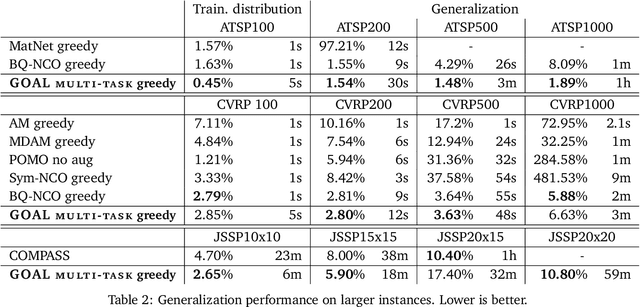

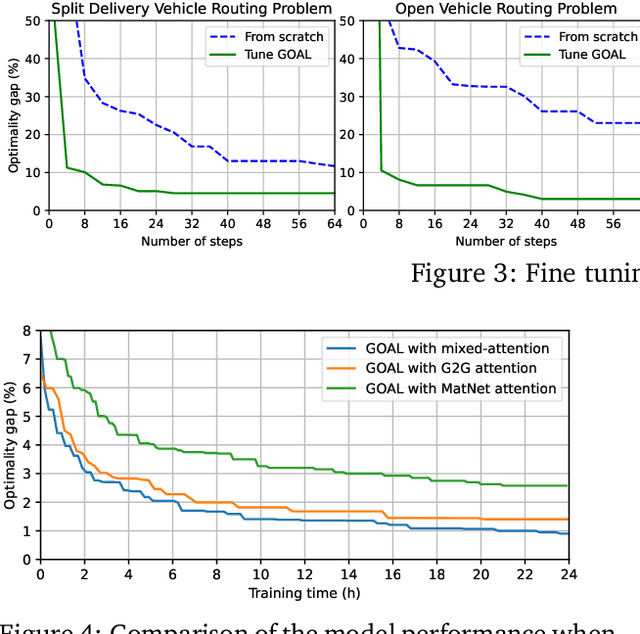

Machine learning-based heuristics have recently shown impressive performance in solving a variety of hard combinatorial optimization problems (COPs). However, they generally rely on a separate neural model, specialized and trained for each individual problem. Any variation of a problem requires adjusting its model and re-training it from scratch. In this paper, we propose GOAL (for Generalist combinatorial Optimization Agent Learning), a generalist model capable of efficiently solving multiple COPs and which can be fine-tuned to solve new COPs. GOAL consists of a single backbone plus light-weight problem-specific adapters, mostly for input and output processing. The backbone is based on a new form of mixed-attention blocks which allow it to handle problems defined on graphs with arbitrary combinations of node, edge and instance-level features. Additionally, problems which involve heterogeneous nodes or edges, such as in multi-partite graphs, are handled through a novel multi-type transformer architecture, where the attention blocks are duplicated to attend only to the relevant combination of types while relying on the same shared parameters. We train GOAL on a set of routing, scheduling and classic graph problems and show that it is only slightly inferior to the specialized baselines while being the first multi-task model that solves a variety of COPs. Finally, we showcase the strong transfer learning capacity of GOAL by fine-tuning or learning the adapters for new problems, with only a few shots and little data.
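To make the "single backbone plus light-weight adapters" idea concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the class names, the embedding width `D`, the feature counts, and the use of a plain additive edge bias in place of the paper's mixed-attention blocks are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16  # hypothetical shared embedding width


def make_linear(n_in, n_out):
    """Random weight matrix standing in for a trained linear layer."""
    return rng.normal(scale=n_in ** -0.5, size=(n_in, n_out))


class Backbone:
    """Shared across all problems: one self-attention layer (illustrative only)."""

    def __init__(self, d):
        self.wq, self.wk, self.wv = (make_linear(d, d) for _ in range(3))

    def __call__(self, h, edge_bias):
        # h: (n, d) node embeddings; edge_bias: (n, n) scalar derived from edge features.
        # Assumption: edge information enters as an additive bias on the attention scores.
        scores = (h @ self.wq) @ (h @ self.wk).T / np.sqrt(h.shape[1]) + edge_bias
        scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
        attn = np.exp(scores)
        attn /= attn.sum(axis=1, keepdims=True)
        return h + attn @ (h @ self.wv)  # residual connection


class Adapter:
    """Per-problem input and output projections (the only problem-specific weights)."""

    def __init__(self, n_node_feats, d):
        self.w_in = make_linear(n_node_feats, d)
        self.w_out = make_linear(d, 1)


def node_scores(backbone, adapter, node_feats, edge_bias):
    h = node_feats @ adapter.w_in        # problem-specific input adapter
    h = backbone(h, edge_bias)           # shared backbone
    return (h @ adapter.w_out).ravel()   # problem-specific output adapter


backbone = Backbone(D)
# Hypothetical problems with different node-feature counts sharing one backbone.
adapters = {"routing": Adapter(2, D), "scheduling": Adapter(3, D)}

coords = rng.random((5, 2))
dist = np.linalg.norm(coords[:, None] - coords[None, :], axis=-1)
routing_scores = node_scores(backbone, adapters["routing"], coords, -dist)

jobs = rng.random((7, 3))
scheduling_scores = node_scores(backbone, adapters["scheduling"], jobs, np.zeros((7, 7)))
```

In this sketch, supporting a new problem means adding one more `Adapter` entry while the `Backbone` weights are reused, which mirrors the transfer setting the abstract describes.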
