Jean-Francois Chamberland

Department of Electrical and Computer Engineering, Texas A&M University

Approximate Message Passing for Multi-Preamble Detection in OTFS Random Access

Sep 04, 2025

Abstract:This article addresses the problem of multiple preamble detection in random access systems based on orthogonal time frequency space (OTFS) signaling. This challenge is formulated as a structured sparse recovery problem in the complex domain. To tackle it, the authors propose a new approximate message passing (AMP) algorithm that enforces double sparsity: the sparse selection of preambles and the inherent sparsity of OTFS signals in the delay-Doppler domain. From an algorithmic standpoint, the non-separable complex sparsity constraint necessitates a careful derivation and leads to the design of a novel AMP denoiser. Simulation results demonstrate that the proposed method achieves robust detection performance and delivers significant gains over state-of-the-art techniques.

Camera-Based Localization and Enhanced Normalized Mutual Information

Dec 20, 2024

Abstract:Robust and fine localization algorithms are crucial for autonomous driving. For the production of such vehicles as a commodity, affordable sensing solutions and reliable localization algorithms must be designed. This work considers scenarios where the sensor data comes from images captured by an inexpensive camera mounted on the vehicle and where the vehicle contains a fine global map. Such localization algorithms typically involve finding the section in the global map that best matches the captured image. In harsh environments, both the global map and the captured image can be noisy. Because of physical constraints on camera placement, the image captured by the camera can be viewed as a noisy, perspective-transformed version of the road in the global map. Thus, an optimal algorithm should take into account the unequal noise power in various regions of the captured image, and the intrinsic uncertainty in the global map due to environmental variations. This article briefly reviews two matching methods: (i) standard inner product (SIP) and (ii) normalized mutual information (NMI). It then proposes novel and principled modifications to improve the performance of these algorithms significantly in noisy environments. These enhancements are inspired by the physical constraints associated with autonomous vehicles. They are grounded in statistical signal processing and, in some contexts, are provably better. Numerical simulations demonstrate the effectiveness of such modifications.
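For readers unfamiliar with the two baseline matching scores named in this abstract, the following is a minimal sketch, not the paper's enhanced method. It assumes grayscale patches stored as NumPy arrays of equal size, a histogram-based entropy estimate, and the common definition NMI = (H(A) + H(B)) / H(A, B); the function names and the `bins` parameter are illustrative choices, not taken from the paper.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in bits of a probability vector (zero cells ignored)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def standard_inner_product(a, b):
    """SIP score: plain inner product of the two patches."""
    return float(np.sum(a * b))

def normalized_mutual_information(a, b, bins=16):
    """NMI = (H(A) + H(B)) / H(A, B), estimated from a joint histogram.

    Assumes the patches are not both constant, so that H(A, B) > 0.
    """
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()          # joint intensity distribution
    px = pxy.sum(axis=1)               # marginal of patch a
    py = pxy.sum(axis=0)               # marginal of patch b
    return (entropy(px) + entropy(py)) / entropy(pxy.ravel())
```

Under this definition the score ranges from 1 (independent intensities) to 2 (one patch fully determines the other), so a localizer would pick the map section that maximizes it.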

Multi-User SR-LDPC Codes via Coded Demixing with Applications to Cell-Free Systems

Feb 10, 2024

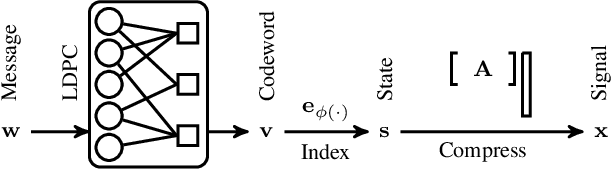

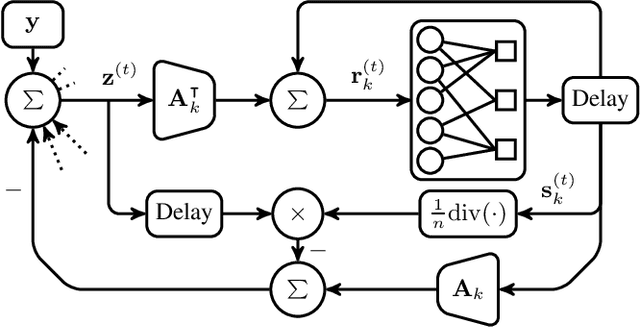

Abstract:Novel sparse regression LDPC (SR-LDPC) codes exhibit excellent performance over additive white Gaussian noise (AWGN) channels in part due to their natural provision of shaping gains. Though SR-LDPC-like codes have been considered within the context of single-user error correction and massive random access, they are yet to be examined as candidates for coordinated multi-user communication scenarios. This article explores this gap in the literature and demonstrates that SR-LDPC codes, when combined with coded demixing techniques, offer a new framework for efficient non-orthogonal multiple access (NOMA) in the context of coordinated multi-user communication channels. The ensuing communication scheme is referred to as MU-SR-LDPC coding. Empirical evidence suggests that, for a fixed SNR, MU-SR-LDPC coding can achieve a target bit error rate (BER) at a higher sum rate than orthogonal multiple access (OMA) techniques such as time division multiple access (TDMA) and frequency division multiple access (FDMA). Importantly, MU-SR-LDPC codes enable a pragmatic solution path for user-centric cell-free communication systems with (local) joint decoding. Results are supported by numerical simulations.

Transformers are Efficient In-Context Estimators for Wireless Communication

Nov 01, 2023

Abstract:Pre-trained transformers can perform in-context learning, where they adapt to a new task using only a small number of prompts without any explicit model optimization. Inspired by this attribute, we propose a novel approach, called in-context estimation, for the canonical communication problem of estimating transmitted symbols from received symbols. A communication channel is essentially a noisy function that maps transmitted symbols to received symbols, and this function can be represented by an unknown parameter whose statistics depend on an (also unknown) latent context. Conventional approaches ignore this hierarchical structure and simply attempt to use known transmissions, called pilots, to perform a least-squares estimate of the channel parameter, which is then used to estimate successive, unknown transmitted symbols. We make the basic connection that transformers show excellent contextual sequence completion with a few prompts, and so they should be able to implicitly determine the latent context from pilot symbols to perform end-to-end in-context estimation of transmitted symbols. Furthermore, the transformer should use information efficiently, i.e., it should utilize any pilots received to attain the best possible symbol estimates. Through extensive simulations, we show that in-context estimation not only significantly outperforms standard approaches, but also achieves the same performance as an estimator with perfect knowledge of the latent context within a few context examples. Thus, we make a strong case that transformers are efficient in-context estimators in the communication setting.
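The conventional pilot-based baseline described in this abstract can be sketched as follows. This is an illustrative example only: it assumes a scalar flat-fading model y = h x + n and nearest-neighbor detection over a known constellation, neither of which is specified in the abstract, and the function names are hypothetical.

```python
import numpy as np

def ls_channel_estimate(pilots_tx, pilots_rx):
    """Least-squares estimate of a scalar channel h from known pilots.

    Minimizes sum |y_i - h x_i|^2, giving h_hat = (x^H y) / (x^H x).
    """
    return np.vdot(pilots_tx, pilots_rx) / np.vdot(pilots_tx, pilots_tx)

def detect_symbols(received, h_hat, constellation):
    """Equalize with h_hat, then map each sample to the nearest constellation point."""
    equalized = received / h_hat
    distances = np.abs(equalized[:, None] - constellation[None, :])
    return constellation[np.argmin(distances, axis=1)]
```

Note that this baseline treats h as a free parameter and ignores any prior induced by the latent context, which is the hierarchical structure the in-context approach is meant to exploit.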

LLMZip: Lossless Text Compression using Large Language Models

Jun 26, 2023

Abstract:We provide new estimates of an asymptotic upper bound on the entropy of English using the large language model LLaMA-7B as a predictor for the next token given a window of past tokens. This estimate is significantly smaller than currently available estimates in \cite{cover1978convergent}, \cite{lutati2023focus}. A natural byproduct is an algorithm for lossless compression of English text which combines the prediction from the large language model with a lossless compression scheme. Preliminary results from limited experiments suggest that our scheme outperforms state-of-the-art text compression schemes such as BSC, ZPAQ, and paq8h.
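The link between a next-token predictor and compressed size can be illustrated with a small sketch. If the predictor assigns probability p_i to the token that actually occurs at step i, an ideal entropy coder (for example, arithmetic coding) needs roughly the sum of -log2 p_i bits. The code below uses a toy adaptive character-frequency model as a stand-in predictor; it is an assumption made for illustration and is neither LLaMA-7B nor the scheme from the paper.

```python
import math
from collections import Counter

def ideal_code_length_bits(probabilities):
    """Bits an ideal entropy coder spends: sum of -log2 p over observed symbols."""
    return -sum(math.log2(p) for p in probabilities)

def adaptive_unigram_probs(text, alphabet):
    """Toy stand-in predictor (hypothetical): Laplace-smoothed running frequencies.

    Returns the probability the model assigned to each character just
    before seeing it, so a decoder could reproduce the same predictions.
    """
    counts = Counter({symbol: 1 for symbol in alphabet})
    total = len(alphabet)
    probs = []
    for ch in text:
        probs.append(counts[ch] / total)
        counts[ch] += 1
        total += 1
    return probs

def bits_per_character(text, alphabet):
    """Average ideal code length; a better predictor lowers this number."""
    return ideal_code_length_bits(adaptive_unigram_probs(text, alphabet)) / len(text)
```

Replacing the toy model with a stronger predictor lowers the assigned code length, which is the mechanism behind both the entropy bound and the compression gains the abstract reports.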

Scalable Cell-Free Massive MIMO Unsourced Random Access System

Apr 12, 2023

Abstract:Cell-Free Massive MIMO systems aim to expand the coverage area of wireless networks by replacing a single high-performance Access Point (AP) with multiple small, distributed APs connected to a Central Processing Unit (CPU) through a fronthaul. Another novel wireless approach, known as the unsourced random access (URA) paradigm, enables a large number of devices to communicate concurrently on the uplink. This article considers a quasi-static Rayleigh fading channel paired to a scalable cell-free system, wherein a small number of receive antennas in the distributed APs serve devices equipped with a single antenna each. The goal of the study is to extend previous URA results to more realistic channels by examining the performance of a scalable cell-free system. To achieve this goal, we propose a coding scheme that adapts the URA paradigm to various cell-free scenarios. Empirical evidence suggests that using a cell-free architecture can improve the performance of a URA system, especially when taking into account large-scale attenuation and fading.

PolarAir: A Compressed Sensing Scheme for Over-the-Air Federated Learning

Jan 24, 2023

Abstract:We explore a scheme that enables the training of a deep neural network in a Federated Learning configuration over an additive white Gaussian noise channel. The goal is to create a low complexity, linear compression strategy, called PolarAir, that reduces the size of the gradient at the user side to lower the number of channel uses needed to transmit it. The suggested approach belongs to the family of compressed sensing techniques, yet it constructs the sensing matrix and the recovery procedure using multiple access techniques. Simulations show that it can reduce the number of channel uses by ~30% when compared to conveying the gradient without compression. The main advantage of the proposed scheme over other schemes in the literature is its low time complexity. We also investigate the behavior of gradient updates and the performance of PolarAir throughout the training process to obtain insight on how best to construct this compression scheme based on compressed sensing.

Safe Exploration for Constrained Reinforcement Learning with Provable Guarantees

Dec 01, 2021

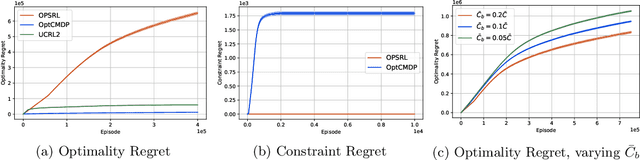

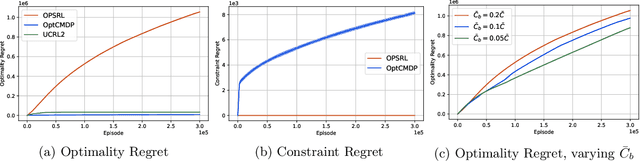

Abstract:We consider the problem of learning an episodic safe control policy that minimizes an objective function, while satisfying necessary safety constraints -- both during learning and deployment. We formulate this safety constrained reinforcement learning (RL) problem using the framework of a finite-horizon Constrained Markov Decision Process (CMDP) with an unknown transition probability function. Here, we model the safety requirements as constraints on the expected cumulative costs that must be satisfied during all episodes of learning. We propose a model-based safe RL algorithm that we call the Optimistic-Pessimistic Safe Reinforcement Learning (OPSRL) algorithm, and show that it achieves an $\tilde{\mathcal{O}}(S^{2}\sqrt{A H^{7}K}/ (\bar{C} - \bar{C}_{b}))$ cumulative regret without violating the safety constraints during learning, where $S$ is the number of states, $A$ is the number of actions, $H$ is the horizon length, $K$ is the number of learning episodes, and $(\bar{C} - \bar{C}_{b})$ is the safety gap, i.e., the difference between the constraint value and the cost of a known safe baseline policy. The scaling as $\tilde{\mathcal{O}}(\sqrt{K})$ is the same as the traditional approach where constraints may be violated during learning, which means that our algorithm suffers no additional regret in spite of providing a safety guarantee. Our key idea is to use an optimistic exploration approach with pessimistic constraint enforcement for learning the policy. This approach simultaneously incentivizes the exploration of unknown states while imposing a penalty for visiting states that are likely to cause violation of safety constraints. We validate our algorithm by evaluating its performance on benchmark problems against conventional approaches.
