Jawadul H. Bappy

Pose Guided Fashion Image Synthesis Using Deep Generative Model

Jun 17, 2019

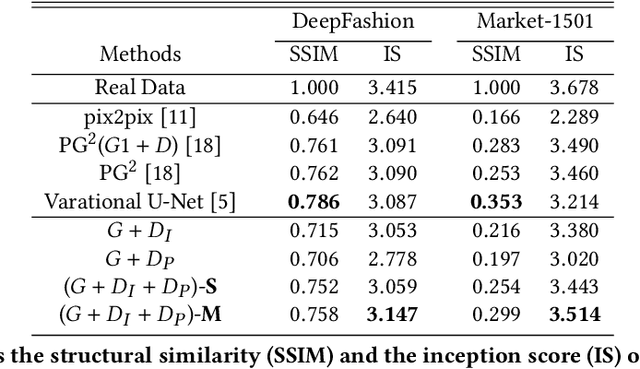

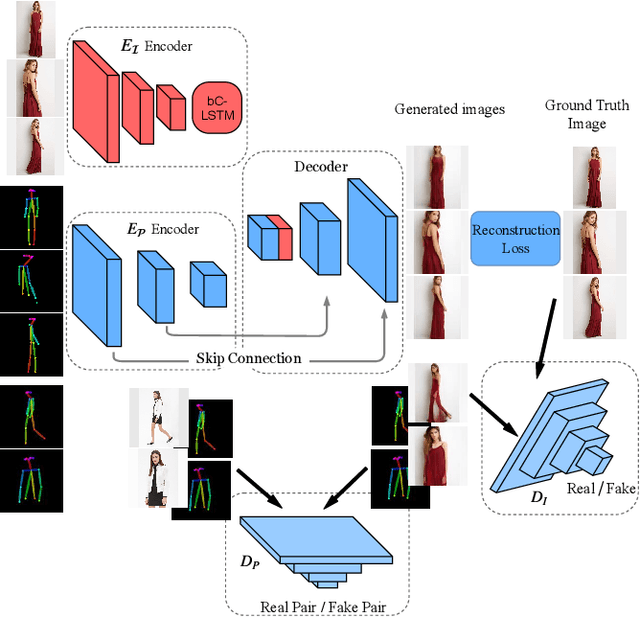

Abstract: Generating a photorealistic image with an intended human pose is a promising yet challenging research topic for many applications such as smart photo editing, movie making, virtual try-on, and fashion display. In this paper, we present a novel deep generative model to transfer an image of a person from a given pose to a new pose while keeping the fashion items consistent. To formulate the framework, we employ one generator and two discriminators for image synthesis. The generator includes an image encoder, a pose encoder, and a decoder. The two encoders provide a good representation of visual and geometrical context, which the decoder uses to generate a photorealistic image. Unlike existing pose-guided image generation models, we exploit two discriminators to guide the synthesis process: one discriminator differentiates between generated images and real images (training samples), and the other verifies the consistency of appearance between a target pose and a generated image. We perform end-to-end training of the network to learn the parameters through back-propagation given ground-truth images. The proposed generative model is capable of synthesizing a photorealistic image of a person given a target pose. We demonstrate our results through rigorous experiments on two data sets, both quantitatively and qualitatively.
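The abstract does not state the training objective, so the following is only a minimal sketch of how a generator could be scored by two discriminators at once. It assumes the standard non-saturating GAN loss; the function name and the arguments `p_real` (the realism discriminator's output) and `p_consistent` (the pose-consistency discriminator's output) are hypothetical and not taken from the paper.

```python
import math


def generator_adversarial_loss(p_real, p_consistent, eps=1e-12):
    """Sum of two non-saturating adversarial terms for one generated image.

    p_real:       probability (0..1) that the realism discriminator assigns
                  to "this generated image is a real training sample".
    p_consistent: probability (0..1) that the consistency discriminator
                  assigns to "this image matches the target pose".
    Both names are assumptions made for illustration.
    """
    # Clamp to avoid log(0) when a discriminator is fully confident.
    p_real = min(max(p_real, eps), 1.0 - eps)
    p_consistent = min(max(p_consistent, eps), 1.0 - eps)
    # The generator is rewarded when both discriminators are fooled.
    return -math.log(p_real) - math.log(p_consistent)
```

Under this assumed loss, the generator is penalized if either discriminator rejects the output, so an image that looks realistic but ignores the target pose still receives a high loss.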

Detecting GAN generated Fake Images using Co-occurrence Matrices

Mar 15, 2019

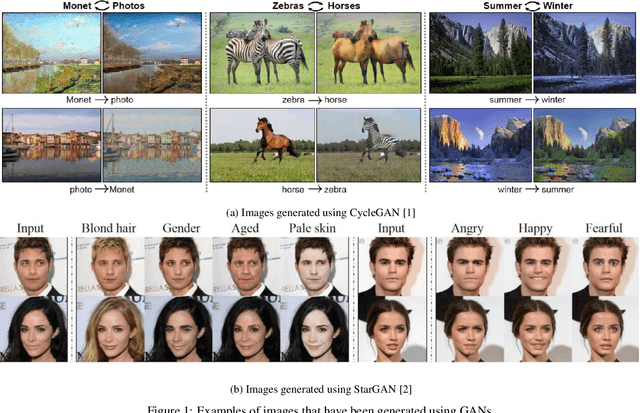

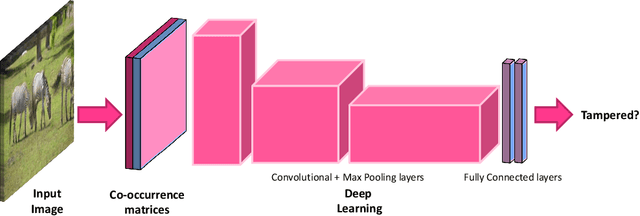

Abstract: The advent of Generative Adversarial Networks (GANs) has brought about completely novel ways of transforming and manipulating pixels in digital images. GAN-based techniques such as image-to-image translation, DeepFakes, and other automated methods have become increasingly popular for creating fake images. In this paper, we propose a novel approach to detect GAN-generated fake images using a combination of co-occurrence matrices and deep learning. We extract co-occurrence matrices on three color channels in the pixel domain and train a model using a deep convolutional neural network (CNN) framework. Experimental results on two diverse and challenging GAN datasets comprising more than 56,000 images based on unpaired image-to-image translations (CycleGAN [1]) and facial attributes/expressions (StarGAN [2]) show that our approach is promising and achieves more than 99% classification accuracy on both datasets. Further, our approach also generalizes well and achieves good results when trained on one dataset and tested on the other.
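As a rough illustration of the feature extraction step, the sketch below computes one co-occurrence matrix per color channel in plain Python. The pixel offset (horizontal neighbor by default), the number of gray levels, and the function names are assumptions; the abstract does not specify them, and the CNN that would consume these matrices is not shown.

```python
def cooccurrence_matrix(channel, levels=256, offset=(0, 1)):
    """Count how often value i is followed by value j at the given offset.

    channel: 2D list of integer intensities in [0, levels).
    offset:  (row_step, col_step); (0, 1) pairs each pixel with its
             right-hand neighbor (an assumed choice).
    """
    d_row, d_col = offset
    rows, cols = len(channel), len(channel[0])
    matrix = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + d_row, c + d_col
            # Skip pairs whose second pixel falls outside the image.
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[channel[r][c]][channel[r2][c2]] += 1
    return matrix


def rgb_cooccurrence(image, levels=256, offset=(0, 1)):
    """Return three matrices (R, G, B) for an image of (r, g, b) tuples."""
    result = []
    for k in range(3):
        channel = [[pixel[k] for pixel in row] for row in image]
        result.append(cooccurrence_matrix(channel, levels, offset))
    return result
```

With 8-bit images each matrix is 256 x 256, so the three matrices can be stacked into a 256 x 256 x 3 tensor and treated as a CNN input.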

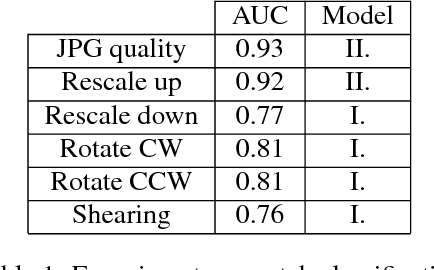

Hybrid LSTM and Encoder-Decoder Architecture for Detection of Image Forgeries

Mar 06, 2019

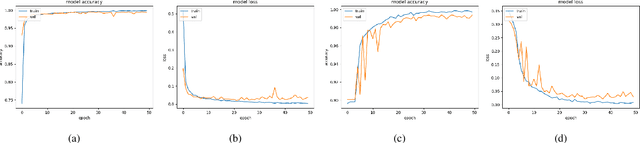

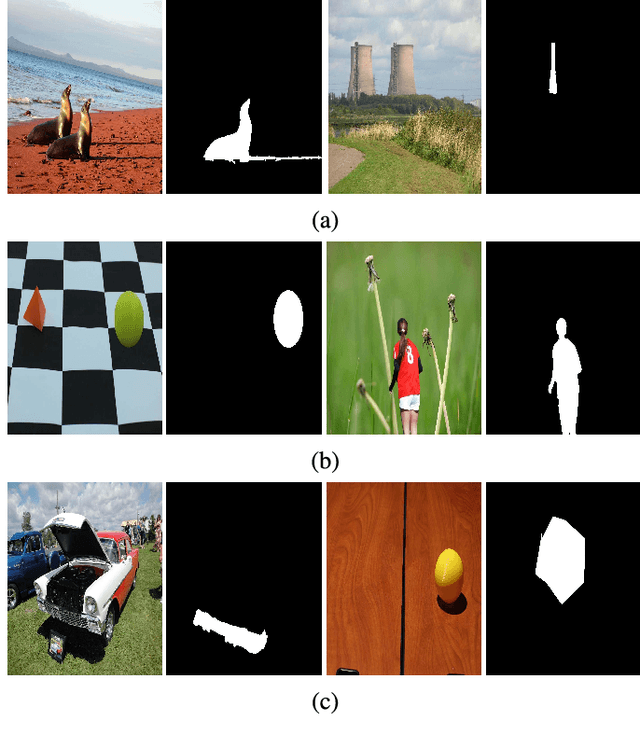

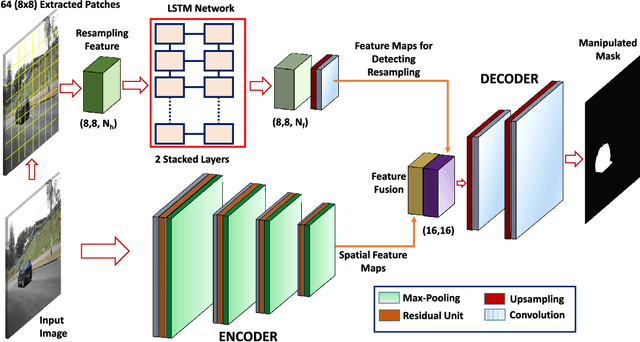

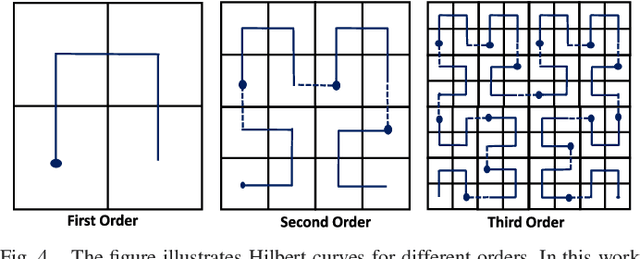

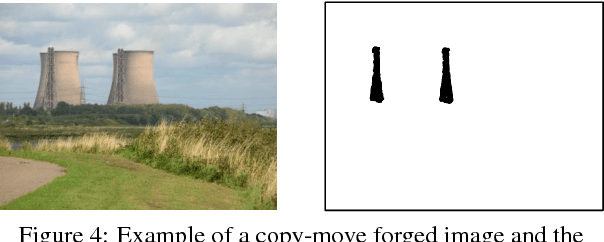

Abstract: With advanced image journaling tools, one can easily alter the semantic meaning of an image by exploiting manipulation techniques such as copy-clone, object splicing, and removal, which mislead viewers. Identifying these manipulations, however, is very challenging because manipulated regions are not visually apparent. This paper proposes a high-confidence manipulation localization architecture that utilizes resampling features, Long Short-Term Memory (LSTM) cells, and an encoder-decoder network to segment out manipulated regions from non-manipulated ones. Resampling features are used to capture artifacts like JPEG quality loss, upsampling, downsampling, rotation, and shearing. The proposed network exploits larger receptive fields (spatial maps) and frequency-domain correlation to analyze the discriminative characteristics between manipulated and non-manipulated regions by incorporating an encoder and an LSTM network. Finally, the decoder network learns the mapping from low-resolution feature maps to pixel-wise predictions for image tamper localization. With the predicted mask provided by the final (softmax) layer of the proposed architecture, end-to-end training is performed to learn the network parameters through back-propagation using ground-truth masks. Furthermore, a large image splicing dataset is introduced to guide the training process. The proposed method is capable of localizing image manipulations at the pixel level with high precision, which is demonstrated through rigorous experimentation on three diverse datasets.
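The final step described above, a softmax layer producing a pixel-wise mask that is trained against a ground-truth mask, can be sketched in plain Python as follows. This covers only the output stage; the encoder, LSTM, and decoder are not reproduced. The two-class layout `(pristine, manipulated)`, the cross-entropy loss, and the function names are assumptions for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a short list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_mask(logit_map):
    """Label each pixel 1 (manipulated) or 0 (pristine).

    logit_map[r][c] is an assumed pair (logit_pristine, logit_manipulated).
    """
    return [[1 if softmax(list(px))[1] > 0.5 else 0 for px in row]
            for row in logit_map]


def mean_cross_entropy(logit_map, truth_mask):
    """Average per-pixel cross-entropy against a ground-truth 0/1 mask."""
    total, count = 0.0, 0
    for logit_row, truth_row in zip(logit_map, truth_mask):
        for px, label in zip(logit_row, truth_row):
            total -= math.log(softmax(list(px))[label])
            count += 1
    return total / count
```

In a real network the gradient of this loss would be propagated back through the decoder, LSTM, and encoder, which is what makes the training end-to-end.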

Boosting Image Forgery Detection using Resampling Features and Copy-move analysis

Feb 19, 2018

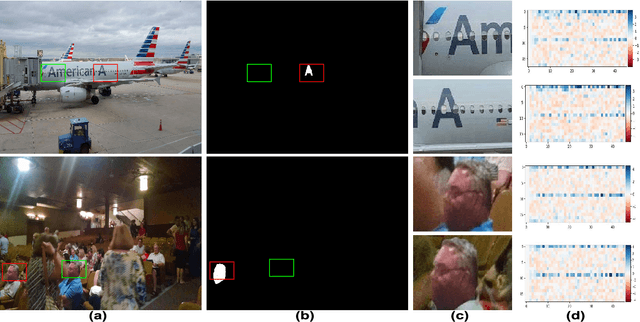

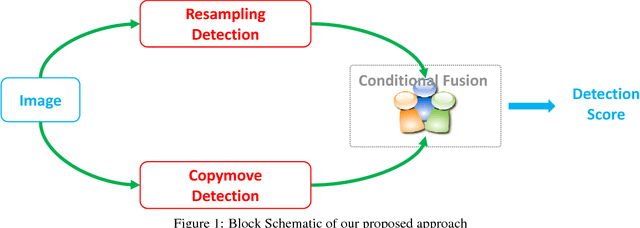

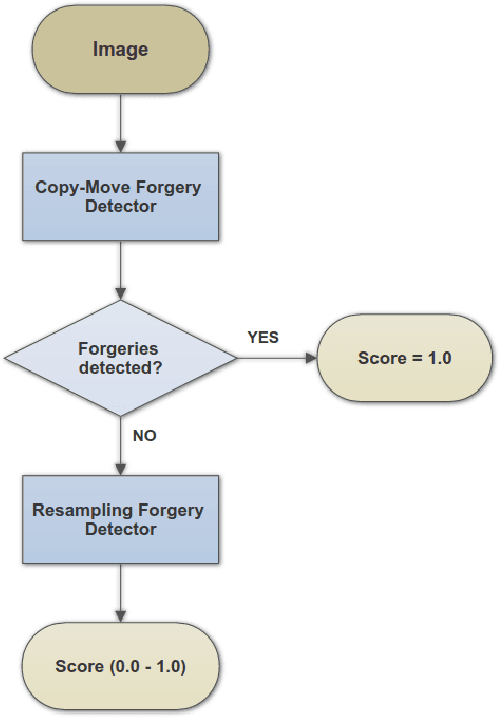

Abstract: Realistic image forgeries involve a combination of splicing, resampling, cloning, region removal, and other methods. While resampling detection algorithms are effective in detecting splicing and resampling, copy-move detection algorithms excel in detecting cloning and region removal. In this paper, we combine these complementary approaches in a way that boosts the overall accuracy of image manipulation detection. We use the copy-move detection method as a pre-filtering step and pass those images that are classified as untampered to a deep learning based resampling detection framework. Experimental results on various datasets, including the 2017 NIST Nimble Challenge Evaluation dataset comprising nearly 10,000 pristine and tampered images, show that there is a consistent increase of 8%-10% in detection rates when the copy-move algorithm is combined with different resampling detection algorithms.
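The two-stage cascade described above can be sketched as a small function. Both detectors are passed in as hypothetical callables that return `True` for a tampered image; their internals are not part of this sketch, and the function name is an assumption.

```python
def cascade_detect(image, copy_move_detector, resampling_detector):
    """Flag an image as tampered using a two-stage cascade.

    Stage 1: the copy-move detector acts as a pre-filter.
    Stage 2: only images that stage 1 classifies as untampered are
             passed on to the resampling detector.
    Both detectors are hypothetical callables: image -> bool.
    """
    if copy_move_detector(image):
        return True
    return resampling_detector(image)
```

Because the second stage only ever sees images that the first stage passed as clean, the cascade can add detections but never removes one made by the copy-move step.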

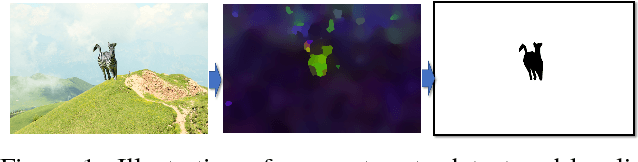

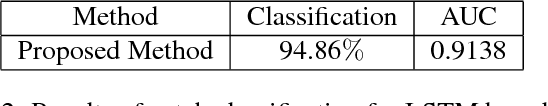

Detection and Localization of Image Forgeries using Resampling Features and Deep Learning

Jul 03, 2017

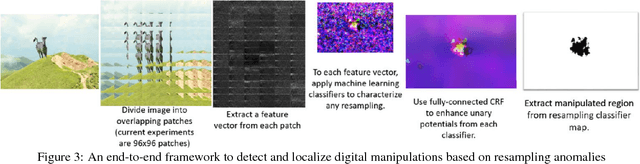

Abstract: Resampling is an important signature of manipulated images. In this paper, we propose two methods to detect and localize image manipulations based on a combination of resampling features and deep learning. In the first method, the Radon transform of resampling features is computed on overlapping image patches. Deep learning classifiers and a Gaussian conditional random field model are then used to create a heatmap. Tampered regions are located using a Random Walker segmentation method. In the second method, resampling features computed on overlapping image patches are passed through a long short-term memory (LSTM) based network for classification and localization. We compare the detection and localization performance of the two methods. Our experimental results show that both techniques are effective in detecting and localizing digital image forgeries.
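Both methods operate on overlapping image patches. The sketch below shows one simple way to enumerate such patches and turn per-patch scores into a pixel-level heatmap. It substitutes plain averaging of overlapping scores for the Gaussian conditional random field mentioned in the abstract, and `score_patch` is a hypothetical stand-in for the classifier; patch size and stride are also assumptions.

```python
def patch_origins(height, width, patch, stride):
    """Top-left corners of all patches that fit fully inside the image."""
    return [(top, left)
            for top in range(0, height - patch + 1, stride)
            for left in range(0, width - patch + 1, stride)]


def heatmap_from_patch_scores(height, width, patch, stride, score_patch):
    """Average overlapping patch scores into a per-pixel heatmap.

    score_patch(top, left) is a hypothetical callable returning a
    tamper score in [0, 1] for the patch at that origin.
    """
    totals = [[0.0] * width for _ in range(height)]
    counts = [[0] * width for _ in range(height)]
    for top, left in patch_origins(height, width, patch, stride):
        score = score_patch(top, left)
        for r in range(top, top + patch):
            for c in range(left, left + patch):
                totals[r][c] += score
                counts[r][c] += 1
    # Pixels not covered by any patch keep a score of 0.0.
    return [[totals[r][c] / counts[r][c] if counts[r][c] else 0.0
             for c in range(width)]
            for r in range(height)]
```

A stride smaller than the patch size produces the overlap; each pixel's heat value is then the mean score of every patch that covers it.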
