Lakshmanan Nataraj

CIMGEN: Controlled Image Manipulation by Finetuning Pretrained Generative Models on Limited Data

Jan 23, 2024

Abstract:Content creation and image editing can benefit from flexible user controls. A common intermediate representation for conditional image generation is a semantic map, which carries information about the objects present in the image. Modifying a semantic map is much easier than modifying raw RGB pixels: one can take a semantic map and selectively insert, remove, or replace objects in it. The method proposed in this paper takes in the modified semantic map and alters the original image in accordance with the modified map. The method leverages traditional pre-trained image-to-image translation GANs, such as CycleGAN or Pix2Pix GAN, that are fine-tuned on a limited dataset of reference images associated with the semantic maps. We discuss the qualitative and quantitative performance of our technique to illustrate its capacity and possible applications in the fields of image forgery and image editing. We also demonstrate the effectiveness of the proposed image forgery technique in thwarting the numerous deep learning-based image forensic techniques, highlighting the urgent need to develop robust and generalizable image forensic tools in the fight against the spread of fake media.

MalGrid: Visualization Of Binary Features In Large Malware Corpora

Nov 04, 2022

Abstract:The number of malware is constantly on the rise. Though most new malware are modifications of existing ones, their sheer number is quite overwhelming. In this paper, we present a novel system to visualize and map millions of malware to points in a 2-dimensional (2D) spatial grid. This enables visualizing relationships within large malware datasets that can be used to develop triage solutions to screen different malware rapidly and provide situational awareness. Our approach links two visualizations within an interactive display. Our first view is a spatial point-based visualization of similarity among the samples based on a reduced dimensional projection of binary feature representations of malware. Our second spatial grid-based view provides a better insight into similarities and differences between selected malware samples in terms of the binary-based visual representations they share. We also provide a case study where the effect of packing on the malware data is correlated with the complexity of the packing algorithm.
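As a rough illustration of the first view, the sketch below projects per-sample feature vectors to 2D and snaps each point to a grid cell. The abstract does not say which projection is used; PCA via SVD is an assumption made here, and `project_2d`, `to_grid`, and the grid size are hypothetical names and values chosen for the example.

```python
import numpy as np

def project_2d(features: np.ndarray) -> np.ndarray:
    """Project (n_samples, n_features) vectors onto their top two principal axes.

    PCA is an assumed stand-in for the reduced-dimensional projection.
    """
    centered = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

def to_grid(points: np.ndarray, size: int = 8) -> np.ndarray:
    """Quantize 2D points to integer (row, col) cells of a size x size grid."""
    lo = points.min(axis=0)
    hi = points.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    cells = np.floor((points - lo) / span * size).astype(int)
    return np.clip(cells, 0, size - 1)  # the maximum lands in the last cell

# Random vectors standing in for binary feature representations of samples.
rng = np.random.default_rng(0)
features = rng.random((100, 32))
points = project_2d(features)
cells = to_grid(points, size=8)
```

Samples that land in the same cell are candidates for side-by-side inspection in a grid-style view.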

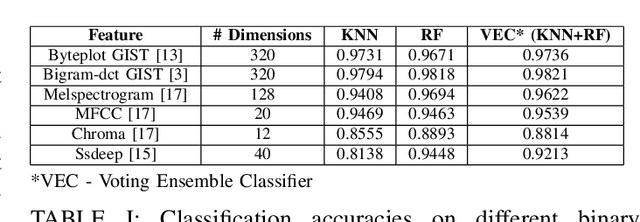

OMD: Orthogonal Malware Detection Using Audio, Image, and Static Features

Nov 08, 2021

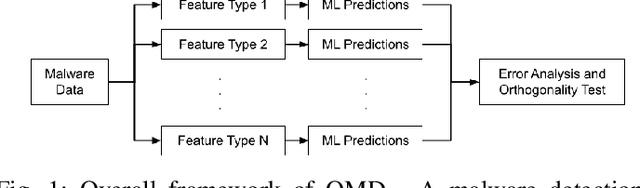

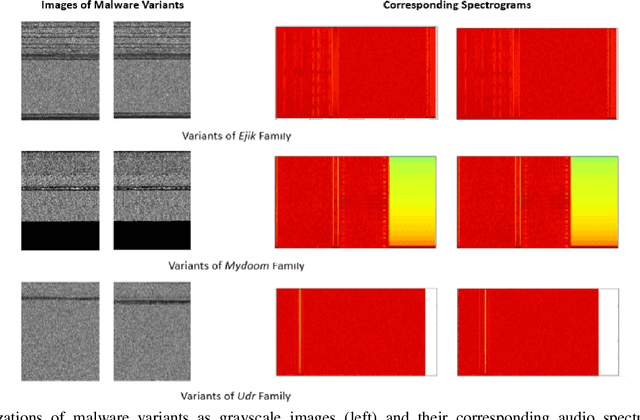

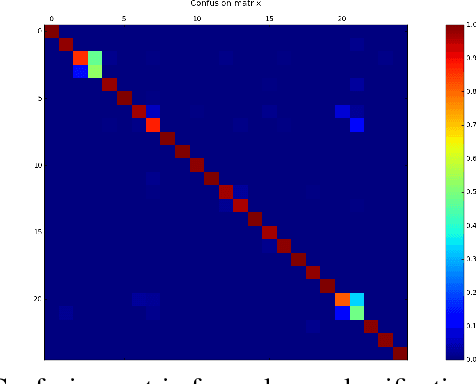

Abstract:With the growing number of malware and cyber attacks, there is a need for "orthogonal" cyber defense approaches, which are complementary to existing methods by detecting unique malware samples that are not predicted by other methods. In this paper, we propose a novel and orthogonal malware detection (OMD) approach to identify malware using a combination of audio descriptors, image similarity descriptors and other static/statistical features. First, we show how audio descriptors are effective in classifying malware families when the malware binaries are represented as audio signals. Then, we show that the predictions made on the audio descriptors are orthogonal to the predictions made on image similarity descriptors and other static features. Further, we develop a framework for error analysis and a metric to quantify how orthogonal a new feature set (or type) is with respect to other feature sets. This allows us to add new features and detection methods to our overall framework. Experimental results on malware datasets show that our approach provides a robust framework for orthogonal malware detection.

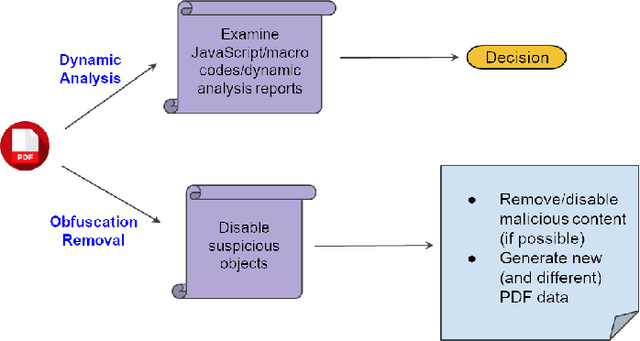

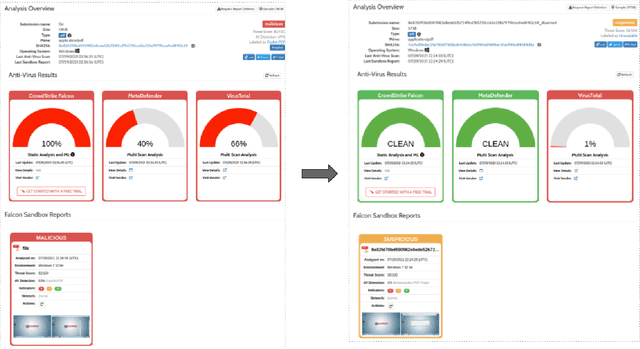

HAPSSA: Holistic Approach to PDF Malware Detection Using Signal and Statistical Analysis

Nov 08, 2021

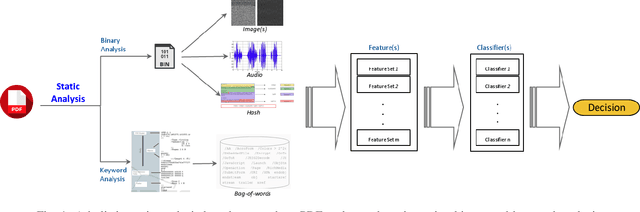

Abstract:Malicious PDF documents present a serious threat to various security organizations that require modern threat intelligence platforms to effectively analyze and characterize the identity and behavior of PDF malware. State-of-the-art approaches use machine learning (ML) to learn features that characterize PDF malware. However, ML models are often susceptible to evasion attacks, in which an adversary obfuscates the malware code to avoid being detected by an Antivirus. In this paper, we derive a simple yet effective holistic approach to PDF malware detection that leverages signal and statistical analysis of malware binaries. This includes combining orthogonal feature space models from various static and dynamic malware detection methods to enable generalized robustness when faced with code obfuscations. Using a dataset of nearly 30,000 PDF files containing both malware and benign samples, we show that our holistic approach maintains a high detection rate (99.92%) of PDF malware and even detects new malicious files created by simple methods that remove the obfuscation conducted by malware authors to hide their malware, which are undetected by most antiviruses.

Seam Carving Detection and Localization using Two-Stage Deep Neural Networks

Sep 04, 2021

Abstract:Seam carving is a method to resize an image in a content aware fashion. However, this method can also be used to carve out objects from images. In this paper, we propose a two-step method to detect and localize seam carved images. First, we build a detector to detect small patches in an image that has been seam carved. Next, we compute a heatmap on an image based on the patch detector's output. Using these heatmaps, we build another detector to detect if a whole image is seam carved or not. Our experimental results show that our approach is effective in detecting and localizing seam carved images.
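The two-step structure described above can be sketched as follows. This only shows how patch scores become a heatmap that feeds an image-level decision; `toy_patch_score` and the mean-threshold rule are placeholders assumed for illustration, not the trained detectors from the paper.

```python
import numpy as np
from typing import Callable

def patch_heatmap(img: np.ndarray,
                  score_patch: Callable[[np.ndarray], float],
                  patch: int = 16,
                  stride: int = 16) -> np.ndarray:
    """Stage 1: score each patch of a grayscale image and collect a heatmap."""
    h, w = img.shape
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heat = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            y, x = r * stride, c * stride
            heat[r, c] = score_patch(img[y:y + patch, x:x + patch])
    return heat

def toy_patch_score(p: np.ndarray) -> float:
    """Placeholder scorer in [0, 1]; a real system would use a trained model."""
    grad = np.abs(np.diff(p.astype(np.float64), axis=1)).mean()
    return min(1.0, float(grad) / 255.0)

def image_level_decision(heat: np.ndarray, threshold: float = 0.5) -> bool:
    """Stage 2 placeholder: flag the image if the mean patch score is high."""
    return bool(heat.mean() > threshold)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(64, 96), dtype=np.uint8)
heat = patch_heatmap(img, toy_patch_score)
flagged = image_level_decision(heat)
```

Keeping the heatmap as an explicit intermediate is what allows localization: high-scoring cells point to the regions that drove the image-level decision.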

SeeTheSeams: Localized Detection of Seam Carving based Image Forgery in Satellite Imagery

Aug 28, 2021

Abstract:Seam carving is a popular technique for content aware image retargeting. It can be used to deliberately manipulate images, for example, change the GPS locations of a building or insert/remove roads in a satellite image. This paper proposes a novel approach for detecting and localizing seams in such images. While there are methods to detect seam carving based manipulations, this is the first time that robust localization and detection of seam carving forgery is made possible. We also propose a seam localization score (SLS) metric to evaluate the effectiveness of localization. The proposed method is evaluated extensively on a large collection of images from different sources, demonstrating a high level of detection and localization performance across these datasets. The datasets curated during this work will be released to the public.

Holistic Image Manipulation Detection using Pixel Co-occurrence Matrices

Apr 12, 2021

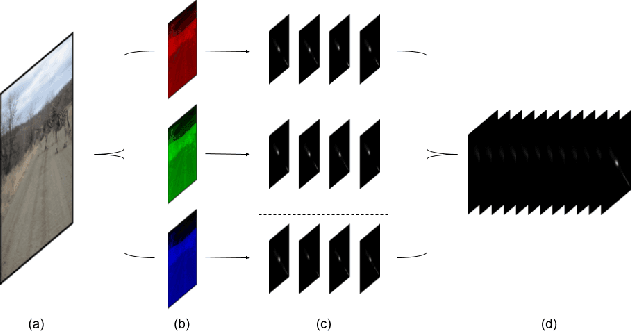

Abstract:Digital image forensics aims to detect images that have been digitally manipulated. Realistic image forgeries involve a combination of splicing, resampling, region removal, smoothing and other manipulation methods. While most detection methods in literature focus on detecting a particular type of manipulation, it is challenging to identify doctored images that involve a host of manipulations. In this paper, we propose a novel approach to holistically detect tampered images using a combination of pixel co-occurrence matrices and deep learning. We extract horizontal and vertical co-occurrence matrices on three color channels in the pixel domain and train a model using a deep convolutional neural network (CNN) framework. Our method is agnostic to the type of manipulation and classifies an image as tampered or untampered. We train and validate our model on a dataset of more than 86,000 images. Experimental results show that our approach is promising and achieves more than 0.99 area under the curve (AUC) evaluation metric on the training and validation subsets. Further, our approach also generalizes well and achieves around 0.81 AUC on an unseen test dataset comprising more than 19,740 images released as part of the Media Forensics Challenge (MFC) 2020. Our score was highest among all other teams that participated in the challenge, at the time of announcement of the challenge results.
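A minimal sketch of the feature extraction step, assuming 8-bit RGB input and immediately adjacent pixel pairs: for each color channel it counts how often each pair of intensity values occurs horizontally and vertically, giving six 256 x 256 matrices. The function names and the stacking order are assumptions for illustration; the abstract does not specify them.

```python
import numpy as np

def cooccurrence(channel: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Count pairs (value at (y, x), value at (y + dy, x + dx)) for dy, dx >= 0."""
    h, w = channel.shape
    src = channel[: h - dy, : w - dx].astype(np.intp).ravel()
    dst = channel[dy:, dx:].astype(np.intp).ravel()
    mat = np.zeros((256, 256), dtype=np.int64)
    np.add.at(mat, (src, dst), 1)  # unbuffered add handles repeated pairs
    return mat

def image_cooccurrence(img: np.ndarray) -> np.ndarray:
    """Stack horizontal and vertical matrices per channel: shape (6, 256, 256)."""
    mats = []
    for c in range(3):
        mats.append(cooccurrence(img[..., c], 0, 1))  # right-hand neighbor
        mats.append(cooccurrence(img[..., c], 1, 0))  # neighbor below
    return np.stack(mats)

# Random image standing in for a real photograph.
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(32, 48, 3), dtype=np.uint8)
features = image_cooccurrence(img)
```

The stacked tensor is the kind of input a CNN classifier could consume; the network architecture itself is not shown here.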

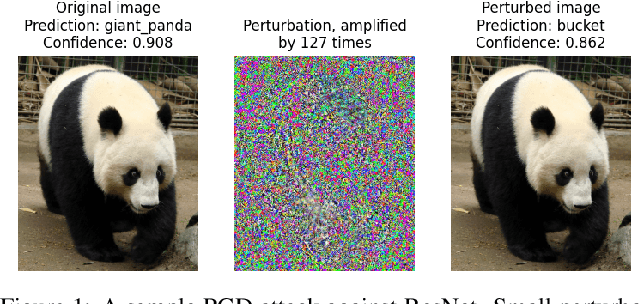

Attribution of Gradient Based Adversarial Attacks for Reverse Engineering of Deceptions

Mar 19, 2021

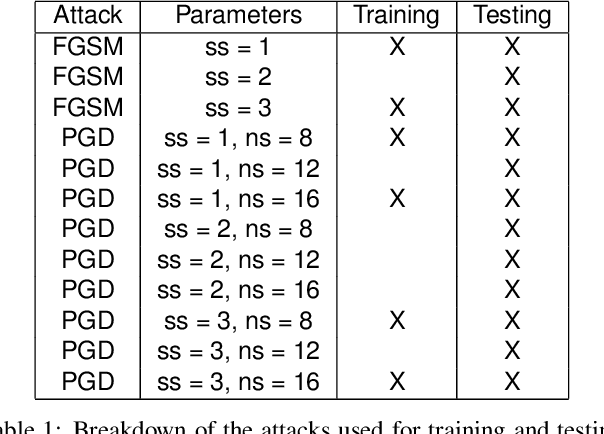

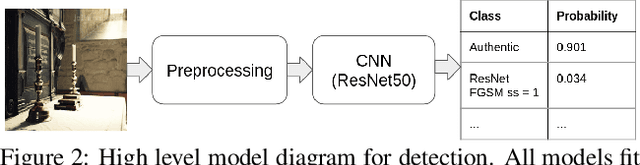

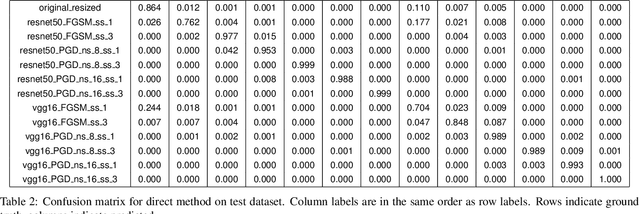

Abstract:Machine Learning (ML) algorithms are susceptible to adversarial attacks and deception both during training and deployment. Automatic reverse engineering of the toolchains behind these adversarial machine learning attacks will aid in recovering the tools and processes used in these attacks. In this paper, we present two techniques that support automated identification and attribution of adversarial ML attack toolchains using Co-occurrence Pixel statistics and Laplacian Residuals. Our experiments show that the proposed techniques can identify parameters used to generate adversarial samples. To the best of our knowledge, this is the first approach to attribute gradient based adversarial attacks and estimate their parameters. Source code and data are available at: https://github.com/michael-goebel/ei_red

Malware Detection Using Frequency Domain-Based Image Visualization and Deep Learning

Jan 26, 2021

Abstract:We propose a novel method to detect and visualize malware through image classification. The executable binaries are represented as grayscale images obtained from the count of N-grams (N=2) of bytes in the Discrete Cosine Transform (DCT) domain and a neural network is trained for malware detection. A shallow neural network is trained for classification, and its accuracy is compared with deep-network architectures such as ResNet that are trained using transfer learning. Neither dis-assembly nor behavioral analysis of malware is required for these methods. Motivated by the visual similarity of these images for different malware families, we compare our deep neural network models with standard image features like GIST descriptors to evaluate the performance. A joint feature measure is proposed to combine different features using error analysis to get an accurate ensemble model for improved classification performance. A new dataset called MaleX which contains around 1 million malware and benign Windows executable samples is created for large-scale malware detection and classification experiments. Experimental results are quite promising with 96% binary classification accuracy on MaleX. The proposed model is also able to generalize well on larger unseen malware samples and the results compare favorably with state-of-the-art static analysis-based malware detection algorithms.
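The representation described above can be sketched as: count byte bigrams into a 256 x 256 matrix, apply a 2D DCT, and scale the result to a grayscale image. The orthonormal DCT-II and the log-magnitude scaling are assumptions made for this example, since the abstract does not give the exact normalization, and the function names are hypothetical.

```python
import numpy as np

def bigram_counts(data: bytes) -> np.ndarray:
    """Count consecutive byte pairs (N-grams with N=2) into a 256 x 256 matrix."""
    b = np.frombuffer(data, dtype=np.uint8).astype(np.intp)
    counts = np.zeros((256, 256), dtype=np.float64)
    np.add.at(counts, (b[:-1], b[1:]), 1)
    return counts

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)
    return m

def bigram_dct_image(data: bytes) -> np.ndarray:
    """Bigram counts -> separable 2D DCT -> 8-bit grayscale image."""
    d = dct_matrix(256)
    coeffs = d @ bigram_counts(data) @ d.T
    mag = np.log1p(np.abs(coeffs))  # assumed scaling, for visibility only
    peak = mag.max()
    if peak == 0:
        return np.zeros((256, 256), dtype=np.uint8)
    return (255 * mag / peak).astype(np.uint8)

# Arbitrary bytes standing in for the contents of an executable.
sample = bytes(range(256)) * 4
counts = bigram_counts(sample)
image = bigram_dct_image(sample)
```

Because the image size is fixed at 256 x 256 regardless of file length, binaries of any size map to inputs of the same shape for a classifier.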

Detection, Attribution and Localization of GAN Generated Images

Jul 20, 2020

Abstract:Recent advances in Generative Adversarial Networks (GANs) have led to the creation of realistic-looking digital images that pose a major challenge to their detection by humans or computers. GANs are used in a wide range of tasks, from modifying small attributes of an image (StarGAN [14]) and transferring attributes between image pairs (CycleGAN [91]) to generating entirely new images (ProGAN [36], StyleGAN [37], SPADE/GauGAN [64]). In this paper, we propose a novel approach to detect, attribute and localize GAN generated images that combines image features with deep learning methods. For every image, co-occurrence matrices are computed on neighborhood pixels of RGB channels in different directions (horizontal, vertical and diagonal). A deep learning network is then trained on these features to detect, attribute and localize these GAN generated/manipulated images. A large scale evaluation of our approach on 5 GAN datasets comprising over 2.76 million images (ProGAN, StarGAN, CycleGAN, StyleGAN and SPADE/GauGAN) shows promising results in detecting GAN generated images.
