Javad Amirian

Upgrading Pepper Robot's Social Interaction with Advanced Hardware and Perception Enhancements

Sep 02, 2024

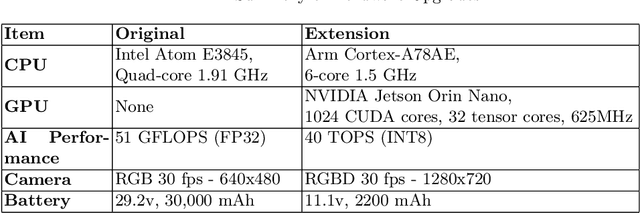

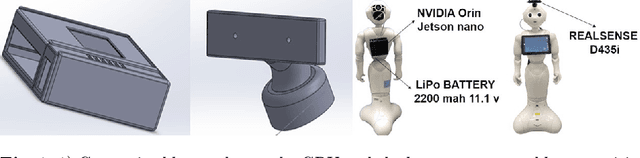

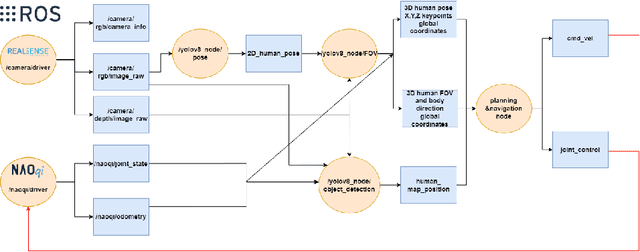

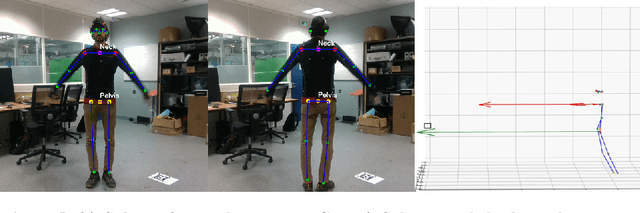

Abstract: In this paper, we propose hardware and software enhancements for the Pepper robot to improve its human-robot interaction capabilities. This includes the integration of an NVIDIA Jetson GPU to enhance computational capabilities and execute real-time algorithms, and a RealSense D435i camera to capture depth images, as well as the computer vision algorithms to detect and localize the humans around the robot and estimate their body orientation and gaze direction. The new stack is implemented on ROS and runs on the extended Pepper hardware, and the communication with the robot's firmware is done through the NAOqi ROS driver API. We have also collected a MoCap dataset of human activities in a controlled environment, together with the corresponding RGB-D data, to validate the proposed perception algorithms.
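As a rough illustration of what estimating body orientation from localized people can involve, the sketch below derives a yaw angle from two shoulder positions projected onto the ground plane. This is not taken from the paper: the function name `body_yaw_from_shoulders`, the use of shoulder keypoints, and the frame convention (x forward, y to the left) are all assumptions made for the example.

```python
import math


def body_yaw_from_shoulders(left_shoulder, right_shoulder):
    """Return an assumed body yaw (radians) from ground-plane shoulder positions.

    Both arguments are (x, y) tuples in a frame where x points forward and
    y points left (an assumption for this sketch, not the paper's convention).
    """
    # Vector pointing from the right shoulder to the left shoulder.
    dx = left_shoulder[0] - right_shoulder[0]
    dy = left_shoulder[1] - right_shoulder[1]
    if dx == 0.0 and dy == 0.0:
        raise ValueError("shoulder positions coincide; yaw is undefined")
    # The facing direction is the shoulder vector rotated by -90 degrees,
    # i.e. (dy, -dx); its angle is the yaw.
    return math.atan2(-dx, dy)


# A person whose left shoulder is at +y relative to the right one faces +x.
yaw_forward = body_yaw_from_shoulders((1.0, 0.2), (1.0, -0.2))
# A person whose left shoulder is at -x relative to the right one faces +y.
yaw_left = body_yaw_from_shoulders((-0.2, 1.0), (0.2, 1.0))
```

In practice the shoulder positions would come from a depth-aware keypoint detector, and the estimate would need filtering over time; both are outside the scope of this sketch.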

Legibot: Generating Legible Motions for Service Robots Using Cost-Based Local Planners

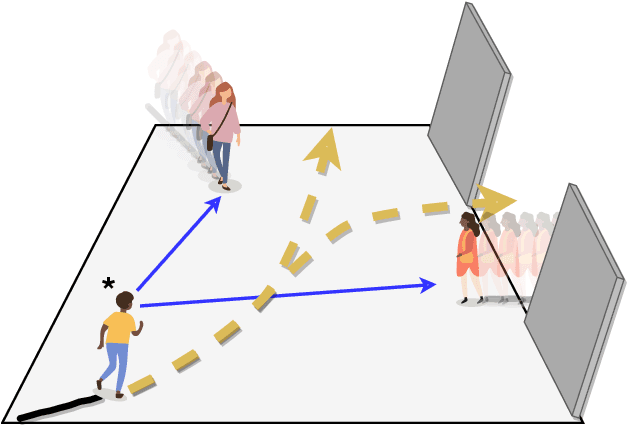

Apr 07, 2024

Abstract: With the increasing presence of social robots in various environments and applications, there is an increasing need for these robots to exhibit socially-compliant behaviors. Legible motion, characterized by the ability of a robot to clearly and quickly convey intentions and goals to the individuals in its vicinity, through its motion, holds significant importance in this context. This will improve the overall user experience and acceptance of robots in human environments. In this paper, we introduce a novel approach to incorporate legibility into local motion planning for mobile robots. This can enable robots to generate legible motions in real-time and dynamic environments. To demonstrate the effectiveness of our proposed methodology, we also provide a robotic stack designed for deploying legibility-aware motion planning in a social robot, by integrating perception and localization components.

AggNet: Learning to Aggregate Faces for Group Membership Verification

Jun 17, 2022

Abstract: In some face recognition applications, we are interested in verifying whether an individual is a member of a group, without revealing their identity. Some existing methods propose a mechanism for quantizing precomputed face descriptors into discrete embeddings and aggregating them into one group representation. However, this mechanism is only optimized for a given closed set of individuals and needs to learn the group representations from scratch every time the groups are changed. In this paper, we propose a deep architecture that jointly learns face descriptors and the aggregation mechanism for better end-to-end performance. The system can be applied to new groups with individuals never seen before, and the scheme easily manages new memberships or membership endings. We show through experiments on multiple large-scale wild-face datasets that the proposed method leads to higher verification performance compared to other baselines.
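To make the idea of a single group representation concrete, here is a minimal sketch of a hand-crafted aggregation: member descriptors are L2-normalized and summed, and a query is scored by its inner product with that sum. It is not the learned architecture proposed in the paper, and it omits the quantization step mentioned above; the function names and the threshold value are assumptions chosen for illustration.

```python
import math


def l2_normalize(vector):
    """Scale a descriptor to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def aggregate_group(descriptors):
    """Sum the L2-normalized member descriptors into one group vector."""
    if not descriptors:
        raise ValueError("a group needs at least one descriptor")
    normalized = [l2_normalize(d) for d in descriptors]
    dim = len(normalized[0])
    return [sum(d[i] for d in normalized) for i in range(dim)]


def membership_score(group_vector, query):
    """Inner product between the group vector and the normalized query."""
    q = l2_normalize(query)
    return sum(g * x for g, x in zip(group_vector, q))


def is_member(group_vector, query, threshold=0.5):
    """Accept the query if its score reaches the (assumed) threshold."""
    return membership_score(group_vector, query) >= threshold


# Toy 3-D descriptors standing in for real face embeddings.
group = aggregate_group([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
accepted = is_member(group, [2.0, 0.0, 0.0])   # aligned with a member
rejected = is_member(group, [0.0, 0.0, 3.0])   # orthogonal to all members
```

With a fixed sum like this, adding or removing a member means adding or subtracting one normalized descriptor; the abstract's point is that learning the descriptors and the aggregation jointly should perform better than such a fixed rule.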

What we see and What we don't see: Imputing Occluded Crowd Structures from Robot Sensing

Sep 17, 2021

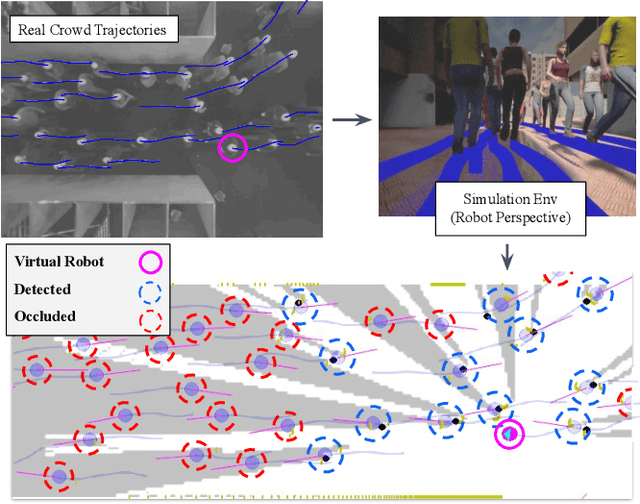

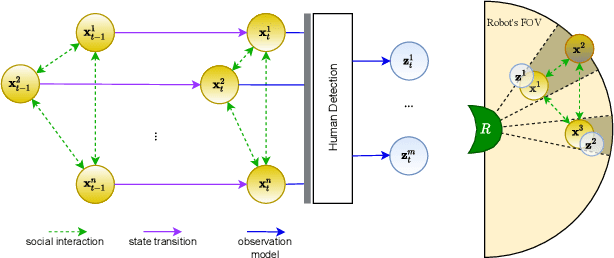

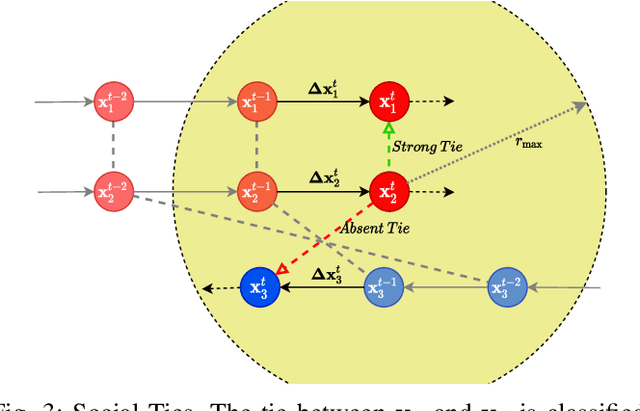

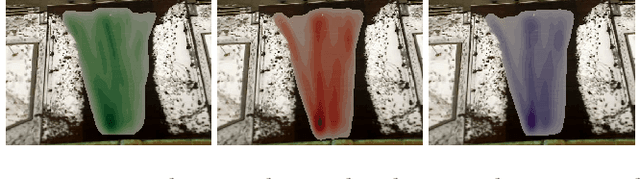

Abstract: We consider the navigation of mobile robots in crowded environments, for which onboard sensing of the crowd is typically limited by occlusions. We address the problem of inferring the human occupancy in the space around the robot, in blind spots, beyond the range of its sensing capabilities. This problem is rather unexplored despite its important impact on the efficiency and safety of robot crowd navigation, which requires the estimation and the prediction of the crowd state around the robot. In this work, we propose the first solution to sample predictions of possible human presence based on the state of a smaller set of sensed people around the robot as well as previous observations of the crowd activity.

OpenTraj: Assessing Prediction Complexity in Human Trajectories Datasets

Oct 02, 2020

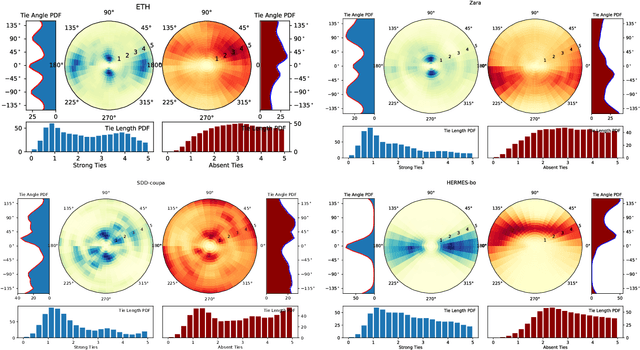

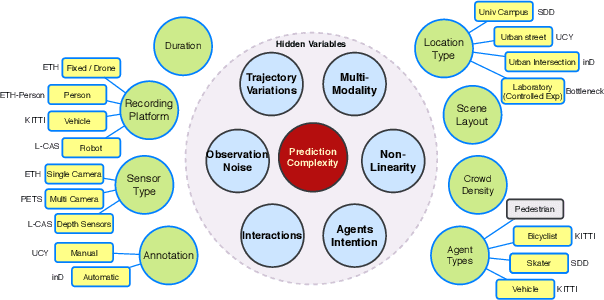

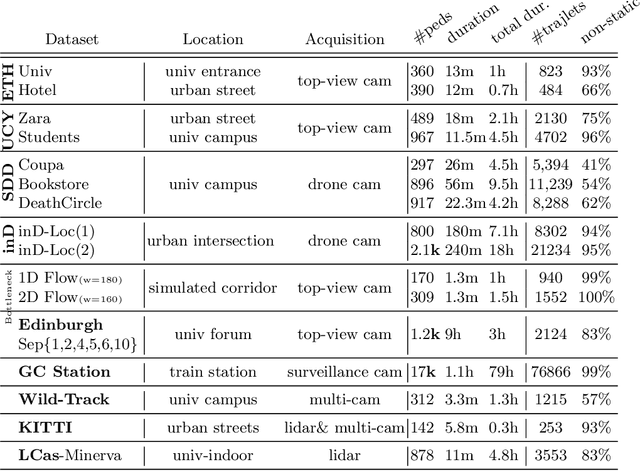

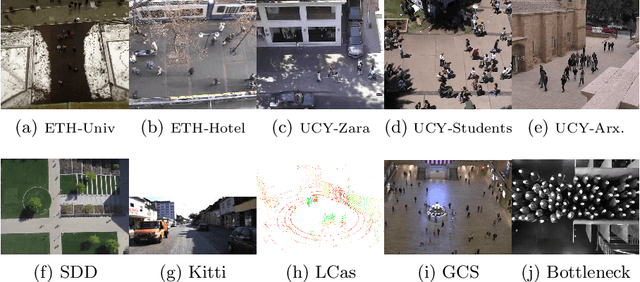

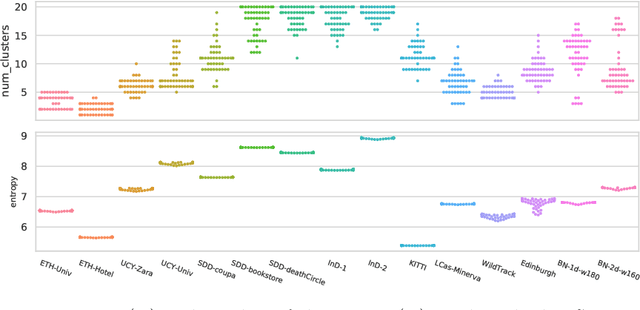

Abstract: Human Trajectory Prediction (HTP) has gained much momentum in recent years and many solutions have been proposed to solve it. Since proper benchmarking is a key issue for comparing methods, this paper addresses the question of evaluating how complex a given dataset is with respect to the prediction problem. For assessing a dataset's complexity, we define a series of indicators around three concepts: trajectory predictability, trajectory regularity, and context complexity. We compare the most common datasets used in HTP in the light of these indicators and discuss what this may imply for the benchmarking of HTP algorithms. Our source code is released on

Data-Driven Crowd Simulation with Generative Adversarial Networks

May 23, 2019

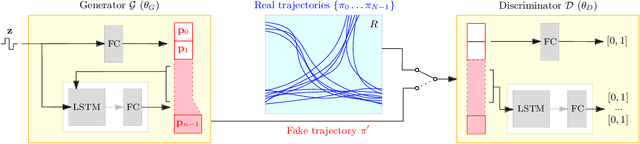

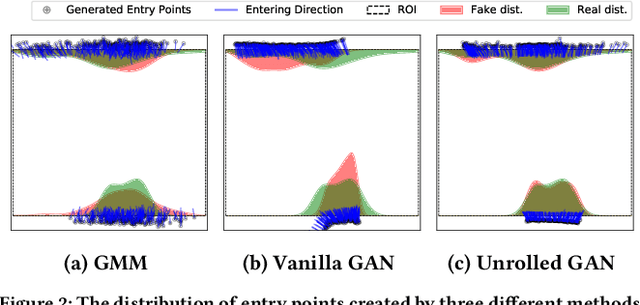

Abstract: This paper presents a novel data-driven crowd simulation method that can mimic the observed traffic of pedestrians in a given environment. Given a set of observed trajectories, we use a recent form of neural networks, Generative Adversarial Networks (GANs), to learn the properties of this set and generate new trajectories with similar properties. We define a way for simulated pedestrians (agents) to follow such a trajectory while handling local collision avoidance. As such, the system can generate a crowd that behaves similarly to observations, while still enabling real-time interactions between agents. Via experiments with real-world data, we show that our simulated trajectories preserve the statistical properties of their input. Our method simulates crowds in real time that resemble existing crowds, while also allowing insertion of extra agents, combination with other simulation methods, and user interaction.

Social Ways: Learning Multi-Modal Distributions of Pedestrian Trajectories with GANs

Apr 24, 2019

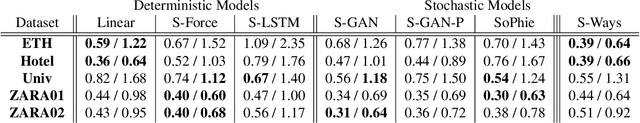

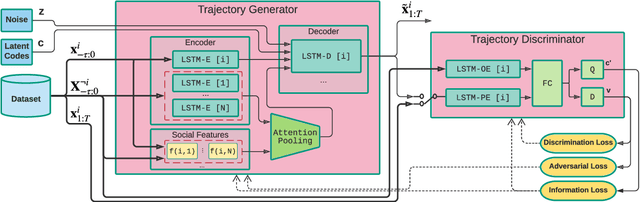

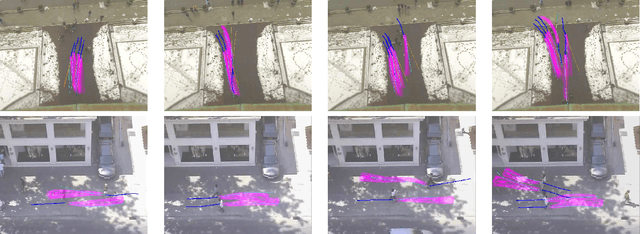

Abstract: This paper proposes a novel approach for predicting the motion of pedestrians interacting with others. It uses a Generative Adversarial Network (GAN) to sample plausible predictions for any agent in the scene. As GANs are very susceptible to mode collapsing and dropping, we show that the recently proposed Info-GAN allows dramatic improvements in multi-modal pedestrian trajectory prediction to avoid these issues. Unlike some previous works, we also left out the L2 loss in training the generator because, although it speeds up convergence, it causes serious mode collapse. We show through experiments on real and synthetic data that the proposed method generates more diverse samples and preserves the modes of the predictive distribution. In particular, to support this claim, we have designed a toy example dataset of trajectories that can be used to assess the performance of different methods in preserving the predictive distribution modes.
