Jason Ziglar

Lidar Panoptic Segmentation and Tracking without Bells and Whistles

Oct 19, 2023

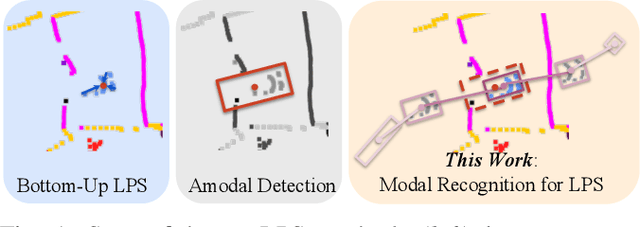

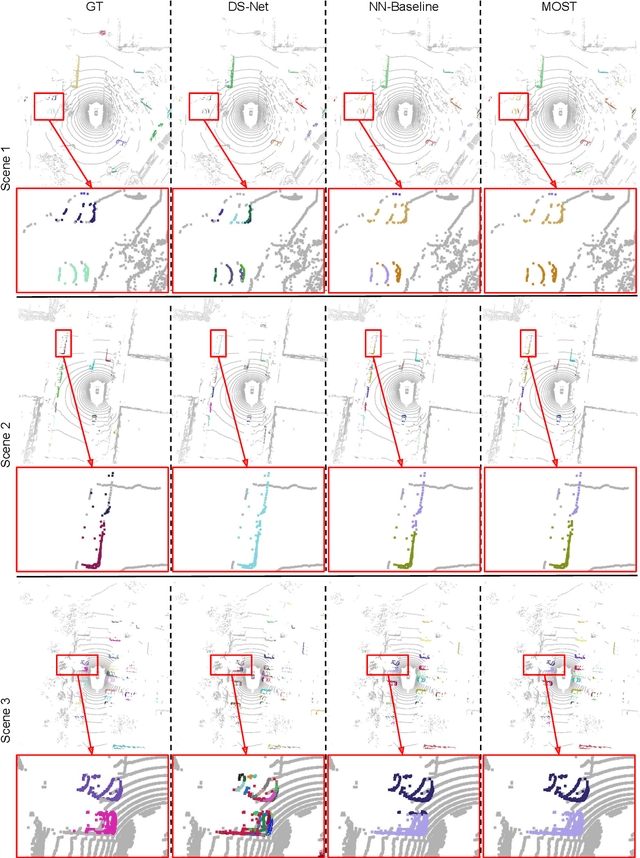

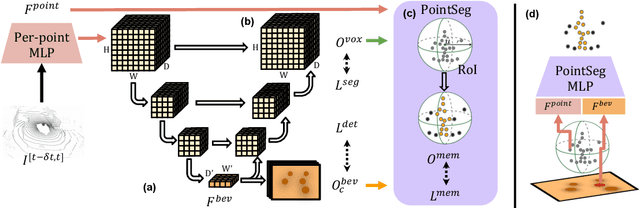

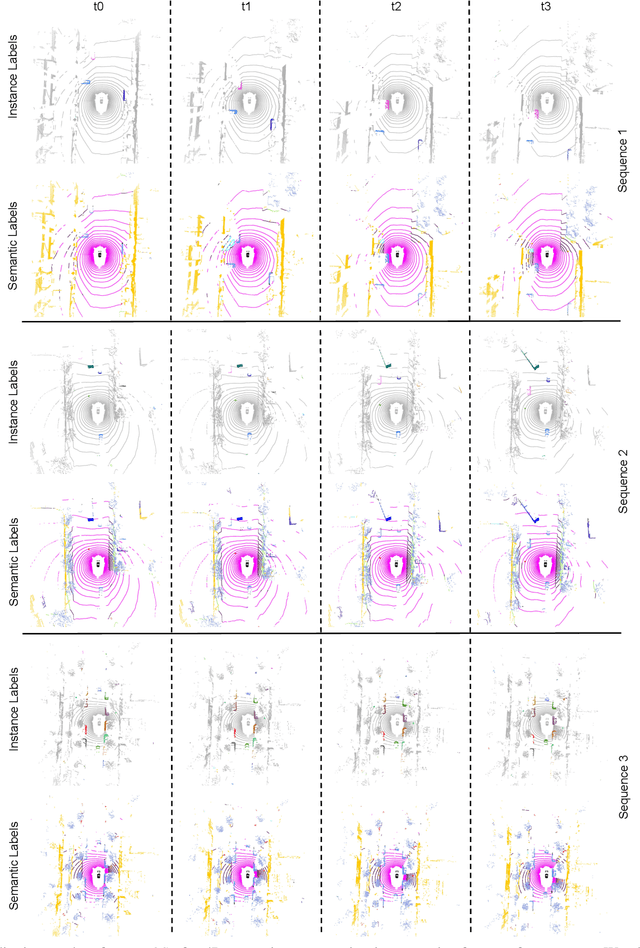

Abstract: State-of-the-art lidar panoptic segmentation (LPS) methods follow a bottom-up, segmentation-centric approach: they build upon semantic segmentation networks and use clustering to obtain object instances. In this paper, we rethink this approach and propose a surprisingly simple yet effective detection-centric network for both LPS and tracking. Our network is modular by design and optimized for all aspects of both the panoptic segmentation and tracking tasks. One of the core components of our network is the object instance detection branch, which we train using point-level (modal) annotations, as available in segmentation-centric datasets. In the absence of amodal (cuboid) annotations, we regress modal centroids and object extent using trajectory-level supervision, which provides information about object size that cannot be inferred from single scans due to occlusions and the sparse nature of lidar data. We obtain fine-grained instance segments by learning to associate lidar points with detected centroids. We evaluate our method on several 3D/4D LPS benchmarks and observe that our model establishes a new state of the art among open-sourced models, outperforming recent query-based models.
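To make the final association step concrete, here is a minimal sketch that assigns each lidar point to a detected centroid. It is not the paper's method: the abstract says the association is learned, whereas this sketch swaps in a plain nearest-centroid rule with a distance cutoff. The function name `associate_points` and the `max_dist` parameter are hypothetical and chosen only for illustration.

```python
import math


def associate_points(points, centroids, max_dist=2.0):
    """Label each point with the index of its nearest centroid.

    Assumption: a nearest-neighbour rule stands in for the learned
    association described in the abstract. Points farther than
    `max_dist` from every centroid get the label -1 (no instance).
    """
    labels = []
    for p in points:
        best_idx, best_dist = -1, max_dist
        for i, c in enumerate(centroids):
            d = math.dist(p, c)  # Euclidean distance (Python 3.8+)
            if d <= best_dist:
                best_idx, best_dist = i, d
        labels.append(best_idx)
    return labels


# Two detected centroids and three points; the last point is far from both.
points = [(0.1, 0.0, 0.0), (4.9, 5.2, 0.0), (20.0, 20.0, 0.0)]
centroids = [(0.0, 0.0, 0.0), (5.0, 5.0, 0.0)]
labels = associate_points(points, centroids)
```

A learned association can take semantic features and predicted object extent into account, which a fixed distance cutoff cannot; the sketch only shows the shape of the input and output.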

What You See is What You Get: Exploiting Visibility for 3D Object Detection

Dec 10, 2019

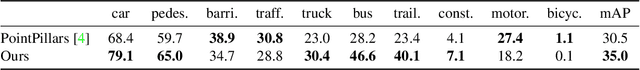

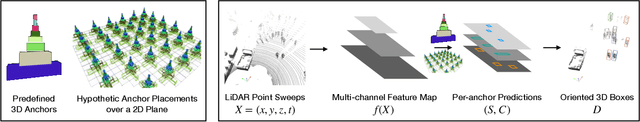

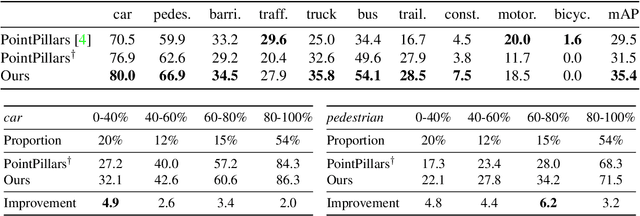

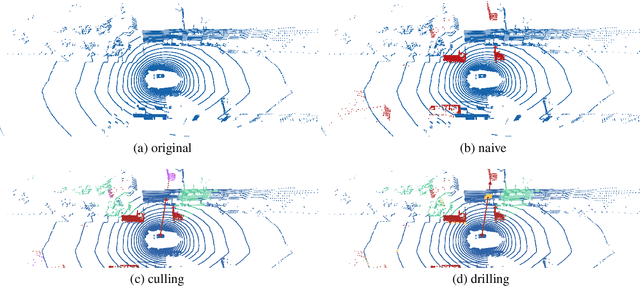

Abstract: Recent advances in 3D sensing have created unique challenges for computer vision. One fundamental challenge is finding a good representation for 3D sensor data. Most popular representations (such as PointNet) are proposed in the context of processing truly 3D data (e.g. points sampled from mesh models), ignoring the fact that 3D sensor data such as a LiDAR sweep is in fact 2.5D. We argue that representing 2.5D data as collections of (x, y, z) points fundamentally destroys hidden information about freespace. In this paper, we demonstrate that such knowledge can be efficiently recovered through 3D raycasting and readily incorporated into batch-based gradient learning. We describe a simple approach to augmenting voxel-based networks with visibility: we add a voxelized visibility map as an additional input stream. In addition, we show that visibility can be combined with two crucial modifications common to state-of-the-art 3D detectors: synthetic data augmentation of virtual objects and temporal aggregation of LiDAR sweeps over multiple time frames. On the NuScenes 3D detection benchmark, we show that adding a stream for visibility input significantly improves the overall detection accuracy of a state-of-the-art 3D detector.
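The idea of recovering freespace by raycasting can be illustrated with a small sketch. It is not the paper's implementation: it approximates raycasting by sampling each sensor ray at a fixed step rather than using an exact voxel traversal, and the names `visibility_map`, `voxel_size`, and `step` are hypothetical. Voxels a ray passes through are marked free, the voxel containing the return is marked occupied, and every voxel absent from the result is unknown.

```python
import math

UNKNOWN, FREE, OCCUPIED = 0, 1, 2


def visibility_map(origin, points, voxel_size=1.0, step=0.25):
    """Return {voxel_index: state} for voxels observed by the sensor rays.

    Assumption: fixed-step sampling along each ray approximates an exact
    voxel traversal; `step` should be smaller than `voxel_size`.
    """
    def voxel_of(p):
        return tuple(math.floor(c / voxel_size) for c in p)

    states = {}
    for p in points:
        length = math.dist(origin, p)
        n_steps = int(length / step)
        for k in range(n_steps):
            t = k * step / length
            sample = tuple(o + t * (q - o) for o, q in zip(origin, p))
            # Mark as free only if no return has already occupied the voxel.
            states.setdefault(voxel_of(sample), FREE)
        # The voxel containing the lidar return is occupied.
        states[voxel_of(p)] = OCCUPIED
    return states


# One ray along the x axis, from the sensor to a return 3 m away.
vis = visibility_map(origin=(0.5, 0.5, 0.5), points=[(3.5, 0.5, 0.5)])
behind = vis.get((4, 0, 0), UNKNOWN)  # voxel behind the return was never observed
```

The distinction between free and unknown voxels is exactly what a bare list of (x, y, z) returns discards: the voxel behind the return is unobserved, not empty.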

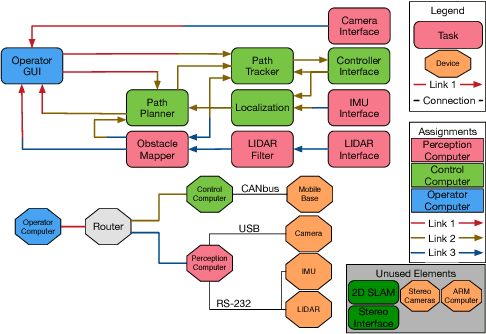

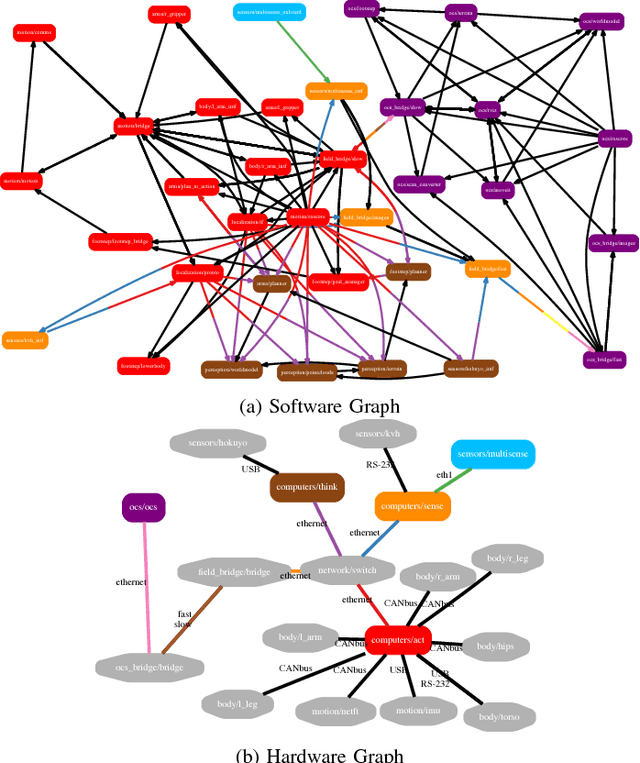

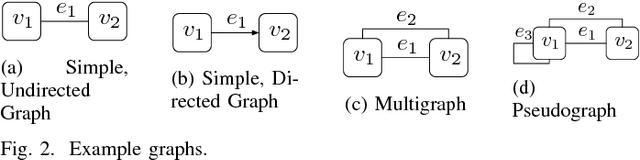

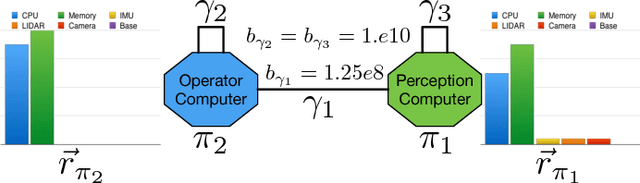

Context-Aware System Synthesis, Task Assignment, and Routing

Aug 25, 2017

Abstract: The design and organization of complex robotic systems traditionally requires laborious trial-and-error processes to ensure both hardware and software components are correctly connected with the resources necessary for computation. This paper presents a novel generalization of the quadratic assignment and routing problem, introducing formalisms for selecting components and interconnections to synthesize a complete system capable of providing some user-defined functionality. By introducing mission context, functional requirements, and modularity directly into the assignment problem, we derive a solution where components are automatically selected and then organized into an optimal hardware and software interconnection structure, all while respecting restrictions on component viability and required functionality. The ability to generate *complete* functional systems directly from individual components reduces manual design effort by allowing for a guided exploration of the design space. Additionally, our formulation increases resiliency by quantifying resource margins and enabling adaptation of system structure in response to changing environments or hardware or software failures. The proposed formulation is cast as an integer linear program which is provably $\mathcal{NP}$-hard. Two case studies are developed and analyzed to highlight the expressiveness and complexity of problems that can be addressed by this approach: the first explores the iterative development of a ground-based search-and-rescue robot in a variety of mission contexts, while the second explores the large-scale, complex design of a humanoid disaster robot for the DARPA Robotics Challenge. Numerical simulations quantify real-world performance and demonstrate tractable time complexity for the scale of problems encountered in many modern robotic systems.
