Jani Käpylä

DepthTrack: Unveiling the Power of RGBD Tracking

Aug 31, 2021

Abstract: RGBD (RGB plus depth) object tracking is gaining momentum as RGBD sensors have become popular in many application fields such as robotics. However, the best RGBD trackers are extensions of the state-of-the-art deep RGB trackers. They are trained with RGB data, and the depth channel is used as a sidekick for subtleties such as occlusion detection. This can be explained by the fact that there are no sufficiently large RGBD datasets to 1) train deep depth trackers and 2) challenge RGB trackers with sequences for which the depth cue is essential. This work introduces a new RGBD tracking dataset, DepthTrack, that has twice as many sequences (200) and scene types (40) as the largest existing dataset, and three times as many objects (90). In addition, the average length of the sequences (1473), the number of deformable objects (16) and the number of annotated tracking attributes (15) have been increased. Furthermore, by running the SotA RGB and RGBD trackers on DepthTrack, we propose a new RGBD tracking baseline, namely DeT, which reveals that deep RGBD tracking indeed benefits from genuine training data. The code and dataset are available at https://github.com/xiaozai/DeT

A Benchmark for Temporal Color Constancy

Mar 08, 2020

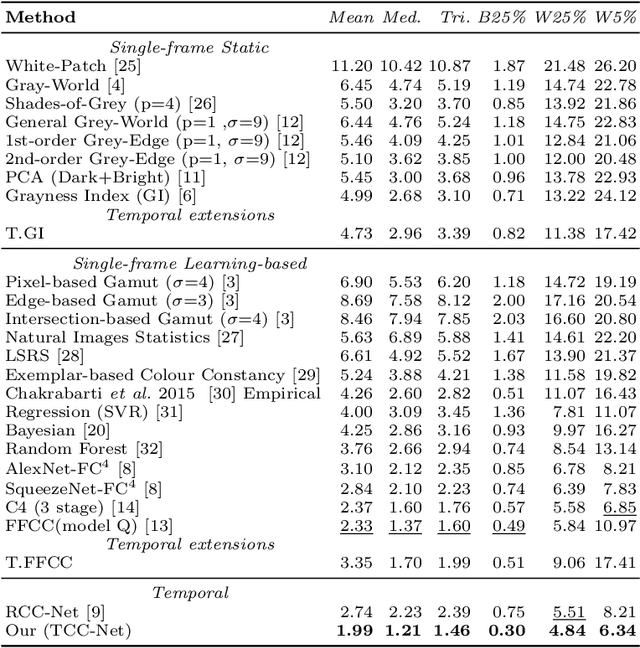

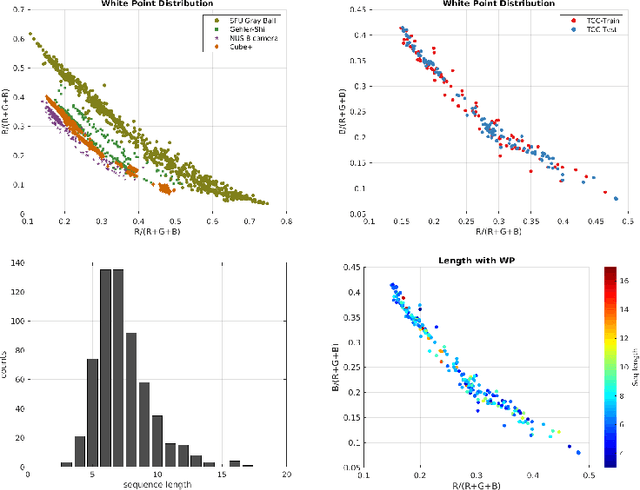

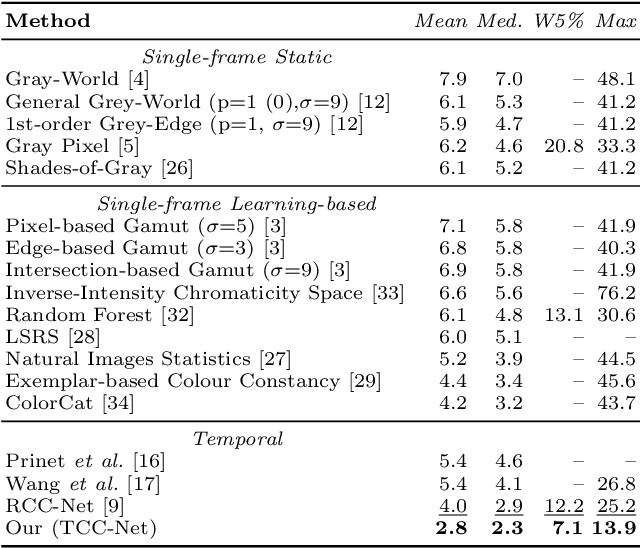

Abstract: Temporal Color Constancy (CC) is a recently proposed approach that challenges the conventional single-frame color constancy. The conventional approach is to use a single frame, the shot frame, to estimate the scene illumination color. In temporal CC, multiple frames from the viewfinder sequence are used to estimate the color. However, there are no realistic large-scale temporal color constancy datasets for method evaluation. In this work, a new temporal CC benchmark is introduced. The benchmark comprises (1) 600 real-world sequences recorded with a high-resolution mobile phone camera, (2) a fixed train-test split which ensures consistent evaluation, and (3) a baseline method which achieves high accuracy in the new benchmark and the dataset used in previous works. Results for more than 20 well-known color constancy methods, including recent state-of-the-art methods, are reported in our experiments.

CDTB: A Color and Depth Visual Object Tracking Dataset and Benchmark

Jul 01, 2019

Abstract: A long-term visual object tracking performance evaluation methodology and a benchmark are proposed. Performance measures are designed by following a long-term tracking definition to maximize the analysis probing strength. The new measures outperform existing ones in interpretation potential and in better distinguishing between different tracking behaviors. We show that these measures generalize the short-term performance measures, thus linking the two tracking problems. Furthermore, the new measures are highly robust to temporal annotation sparsity and allow annotation of sequences hundreds of times longer than in the current datasets without increasing manual annotation labor. A new challenging dataset of carefully selected sequences with many target disappearances is proposed. A new tracking taxonomy is proposed to position trackers on the short-term/long-term spectrum. The benchmark contains an extensive evaluation of the largest number of long-term trackers and a comparison to state-of-the-art short-term trackers. We analyze the influence of tracking architecture implementations on long-term performance and explore various re-detection strategies as well as the influence of visual model update strategies on long-term tracking drift. The methodology is integrated in the VOT toolkit to automate experimental analysis and benchmarking and to facilitate future development of long-term trackers.
