Jan Leusmann

Eliciting Understandable Architectonic Gestures for Robotic Furniture through Co-Design Improvisation

Jan 03, 2025

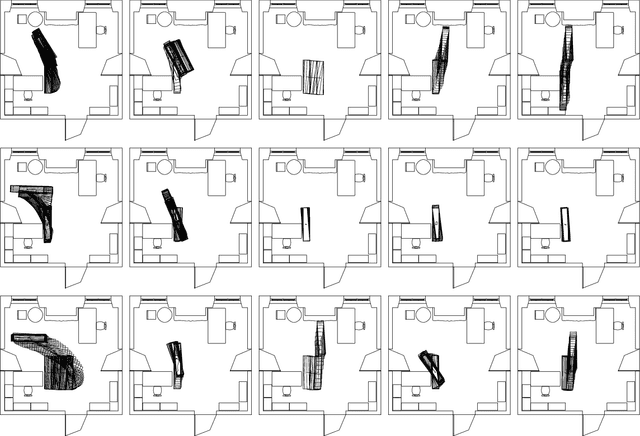

Abstract: The vision of adaptive architecture proposes that robotic technologies could enable interior spaces to physically transform in a bidirectional interaction with occupants. Yet, it is still unknown how this interaction could unfold in an understandable way. Inspired by HRI studies where robotic furniture gestured intents to occupants by deliberately positioning or moving in space, we hypothesise that adaptive architecture could also convey intents through gestures performed by a mobile robotic partition. To explore this design space, we invited 15 multidisciplinary experts to join co-design improvisation sessions, where they manually manoeuvred a deactivated robotic partition to design gestures conveying six architectural intents that varied in purpose and urgency. Using a gesture elicitation method alongside motion-tracking data, a Laban-based questionnaire, and thematic analysis, we identified 20 unique gestural strategies. Through categorisation, we introduced architectonic gestures as a novel strategy for robotic furniture to convey intent by indexically leveraging its spatial impact, complementing the established deictic and emblematic gestures. Our study thus represents an exploratory step toward making the autonomous gestures of adaptive architecture more legible. By understanding how robotic gestures are interpreted based not only on their motion but also on their spatial impact, we contribute to bridging HRI with Human-Building Interaction research.

An Approach to Elicit Human-Understandable Robot Expressions to Support Human-Robot Interaction

Oct 01, 2024

Abstract: Understanding the intentions of robots is essential for natural and seamless human-robot collaboration. Ensuring that robots have means for non-verbal communication is a basis for intuitive and implicit interaction. For this, we contribute an approach to elicit and design human-understandable robot expressions. We outline the approach in the context of non-humanoid robots. We paired human mimicking and enactment with research from gesture elicitation in two phases: first, to elicit expressions, and second, to ensure they are understandable. We present an example application through two studies (N=16 and N=260) of our approach to elicit expressions for a simple 6-DoF robotic arm. We show that it enabled us to design robot expressions that signal curiosity and interest in getting attention. Our main contribution is an approach to generate and validate understandable expressions for robots, enabling more natural human-robot interaction.

Understanding the Uncertainty Loop of Human-Robot Interaction

Mar 14, 2023

Abstract: Recently, the field of Human-Robot Interaction has gained popularity due to the wide range of ways in which robots can support humans during daily tasks. One form of supportive robot is the socially assistive robot, which is specifically built for communicating with humans, e.g., as a service robot or personal companion. As they understand humans through artificial intelligence, these robots will at some point make wrong assumptions about the human's current state and give an unexpected response. In human-human conversations, unexpected responses happen frequently. However, it is currently unclear how such robots should act if they understand that the human did not expect their response, or whether they should show the uncertainty of their response in the first place. For this, we explore the different forms of potential uncertainties during human-robot conversations and how humanoids can communicate these uncertainties through verbal and non-verbal cues.
