Jan Kronenberger

Confidence Calibration for Object Detection and Segmentation

Mar 02, 2022

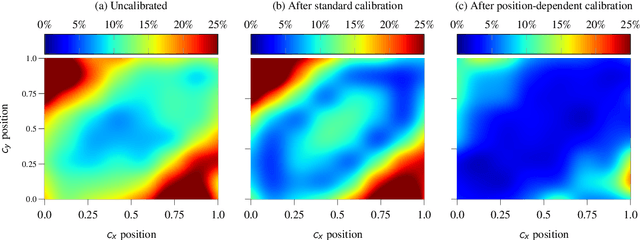

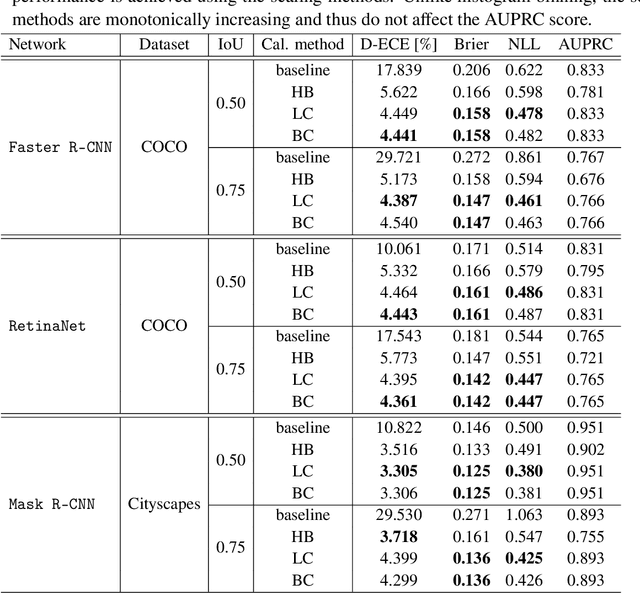

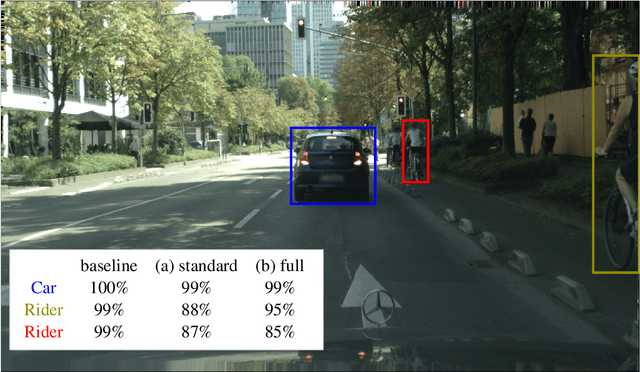

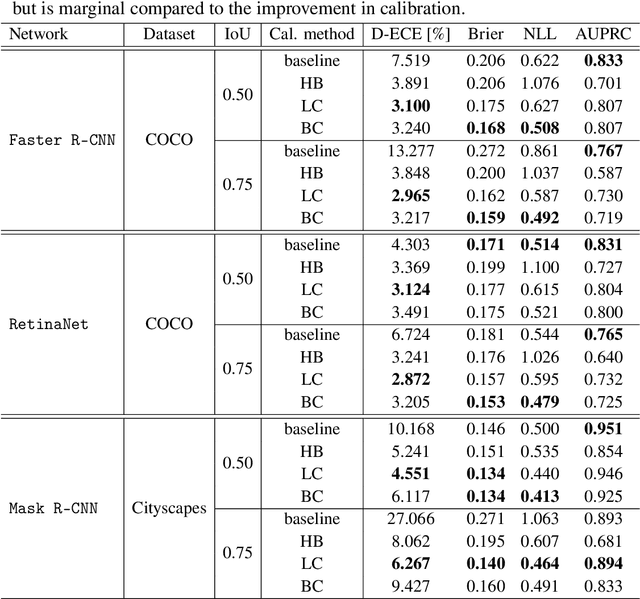

Abstract: Calibrated confidence estimates obtained from neural networks are crucial, particularly for safety-critical applications such as autonomous driving or medical image diagnosis. However, although the task of confidence calibration has been investigated on classification problems, thorough investigations on object detection and segmentation problems are still missing. Therefore, we focus on the investigation of confidence calibration for object detection and segmentation models in this chapter. We introduce the concept of multivariate confidence calibration, an extension of well-known calibration methods to the task of object detection and segmentation. This allows for an extended confidence calibration that is also aware of additional features such as bounding box/pixel position, shape information, etc. Furthermore, we extend the expected calibration error (ECE) to measure miscalibration of object detection and segmentation models. We examine several network architectures on MS COCO as well as on Cityscapes and show that object detection and instance segmentation models in particular are intrinsically miscalibrated given the introduced definition of calibration. Using our proposed calibration methods, we have been able to improve calibration so that it also has a positive impact on the quality of segmentation masks.

Bayesian Confidence Calibration for Epistemic Uncertainty Modelling

Sep 21, 2021

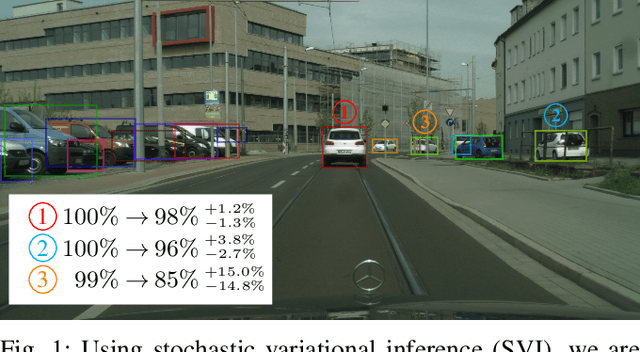

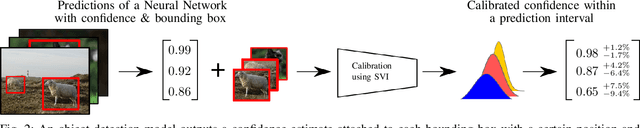

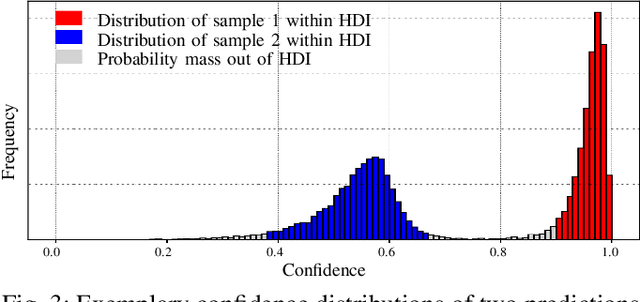

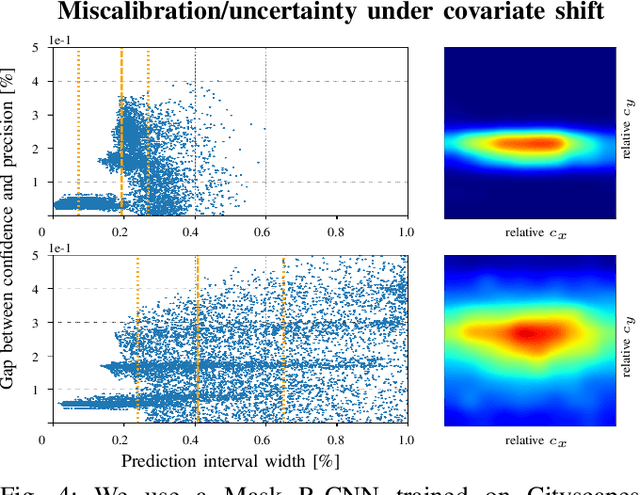

Abstract: Modern neural networks have been found to be miscalibrated in terms of confidence calibration, i.e., their predicted confidence scores do not reflect the observed accuracy or precision. Recent work has introduced methods for post-hoc confidence calibration for classification as well as for object detection to address this issue. Especially in safety-critical applications, it is crucial to obtain a reliable self-assessment of a model. But what if the calibration method itself is uncertain, e.g., due to an insufficient knowledge base? We introduce Bayesian confidence calibration - a framework to obtain calibrated confidence estimates in conjunction with an uncertainty of the calibration method. Commonly, Bayesian neural networks (BNN) are used to indicate a network's uncertainty about a certain prediction. BNNs are interpreted as neural networks that use distributions instead of weights for inference. We transfer this idea of using distributions to confidence calibration. For this purpose, we use stochastic variational inference to build a calibration mapping that outputs a probability distribution rather than a single calibrated estimate. Using this approach, we achieve state-of-the-art calibration performance for object detection calibration. Finally, we show that this additional type of uncertainty can be used as a sufficient criterion for covariate shift detection. All code is open source and available at https://github.com/EFS-OpenSource/calibration-framework.
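To make the notion of miscalibration above concrete, here is a minimal sketch of the standard binned expected calibration error for classification. It is an illustration under our own assumptions, not code from the linked repository: the function name `expected_calibration_error` and its parameters are hypothetical, and equal-width confidence bins are assumed.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted mean of |accuracy - mean confidence| per bin.

    Hypothetical helper for illustration only.
    confidences: predicted scores in [0, 1]
    correct:     1 if the prediction was right, else 0
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Assign each sample to an equal-width bin using the interior edges;
    # the clip keeps every index inside [0, n_bins - 1].
    bin_ids = np.clip(np.digitize(confidences, edges[1:-1]), 0, n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if not mask.any():
            continue  # empty bins contribute nothing
        gap = abs(correct[mask].mean() - confidences[mask].mean())
        ece += mask.mean() * gap  # mask.mean() is the fraction of samples in the bin
    return ece
```

A model that reports a confidence of 0.9 but is right only three times out of four has a gap of 0.15 in that bin; a perfectly calibrated model would score 0.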

Inspect, Understand, Overcome: A Survey of Practical Methods for AI Safety

Apr 29, 2021

Abstract: The use of deep neural networks (DNNs) in safety-critical applications like mobile health and autonomous driving is challenging due to numerous model-inherent shortcomings. These shortcomings are diverse and range from a lack of generalization, through insufficient interpretability, to problems with malicious inputs. Cyber-physical systems employing DNNs are therefore likely to suffer from safety concerns. In recent years, a zoo of state-of-the-art techniques aiming to address these safety concerns has emerged. This work provides a structured and broad overview of them. We first identify categories of insufficiencies and then describe research activities aiming at their detection, quantification, or mitigation. Our paper addresses both machine learning experts and safety engineers: the former might profit from the broad range of machine learning topics covered and discussions on limitations of recent methods. The latter might gain insights into the specifics of modern ML methods. We moreover hope that our contribution fuels discussions on desiderata for ML systems and strategies on how to propel existing approaches accordingly.

Dependency Decomposition and a Reject Option for Explainable Models

Dec 11, 2020

Abstract: Deploying machine learning models in safety-related domains (e.g. autonomous driving, medical diagnosis) demands approaches that are explainable, robust against adversarial attacks, and aware of the model uncertainty. Recent deep learning models perform extremely well in various inference tasks, but the black-box nature of these approaches leads to a weakness regarding the three requirements mentioned above. Recent advances offer methods to visualize features, describe attribution of the input (e.g. heatmaps), provide textual explanations, or reduce dimensionality. However, are explanations for classification tasks dependent or are they independent of each other? For instance, is the shape of an object dependent on the color? What is the effect of using the predicted class for generating explanations and vice versa? In the context of explainable deep learning models, we present the first analysis of dependencies regarding the probability distribution over the desired image classification outputs and the explaining variables (e.g. attributes, texts, heatmaps). Therefore, we perform an Explanation Dependency Decomposition (EDD). We analyze the implications of the different dependencies and propose two ways of generating the explanation. Finally, we use the explanation to verify (accept or reject) the prediction.

Multivariate Confidence Calibration for Object Detection

Apr 28, 2020

Abstract: Unbiased confidence estimates of neural networks are crucial, especially for safety-critical applications. Many methods have been developed to calibrate biased confidence estimates. Though there is a variety of methods for classification, the field of object detection has not been addressed yet. Therefore, we present a novel framework to measure and calibrate biased (or miscalibrated) confidence estimates of object detection methods. The main difference to related work in the field of classifier calibration is that we also use additional information of the regression output of an object detector for calibration. Our approach allows, for the first time, to obtain calibrated confidence estimates with respect to image location and box scale. In addition, we propose a new measure to evaluate miscalibration of object detectors. Finally, we show that our developed methods outperform state-of-the-art calibration models for the task of object detection and provide reliable confidence estimates across different locations and scales.
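The idea of measuring miscalibration with respect to box position and scale, and not only confidence, can be sketched as a binning over several features at once. The snippet below is an illustrative assumption, not the measure defined in the paper: the function name `detection_calibration_error`, the equal-width bins, and the requirement that all features are scaled to [0, 1] are ours.

```python
import numpy as np

def detection_calibration_error(features, matched, n_bins=5):
    """Binned calibration error over confidence plus extra box features.

    Hypothetical helper for illustration only.
    features: (N, K) array scaled to [0, 1]; column 0 is the confidence,
              further columns are e.g. relative box center or box size
    matched:  (N,) array, 1 if the detection matched a ground-truth object
    """
    features = np.asarray(features, dtype=float)
    matched = np.asarray(matched, dtype=float)
    n, k = features.shape
    # Equal-width bin index per dimension; a value of exactly 1.0 goes to the last bin.
    idx = np.minimum((features * n_bins).astype(int), n_bins - 1)
    # Collapse the K-dimensional bin index into one integer id per detection.
    flat = np.ravel_multi_index(tuple(idx.T), (n_bins,) * k)
    error = 0.0
    for b in np.unique(flat):
        mask = flat == b
        precision = matched[mask].mean()       # observed precision in this bin
        mean_conf = features[mask, 0].mean()   # mean predicted confidence in this bin
        error += mask.sum() / n * abs(precision - mean_conf)
    return error

# Toy data: the same confidence everywhere, but detections on the left side
# of the image (x = 0.1) are always right and those on the right (x = 0.9) never are.
feats = np.array([[0.8, 0.1], [0.8, 0.1], [0.8, 0.9], [0.8, 0.9]])
hits = np.array([1, 1, 0, 0])
confidence_only = detection_calibration_error(feats[:, :1], hits)
position_aware = detection_calibration_error(feats, hits)
```

In this toy case the confidence-only error is 0.3, while the position-aware error is 0.5: binning by location exposes a miscalibration that averaging over the whole image partly hides.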
