James O'Reilly

Feature-level and Model-level Audiovisual Fusion for Emotion Recognition in the Wild

Jun 06, 2019

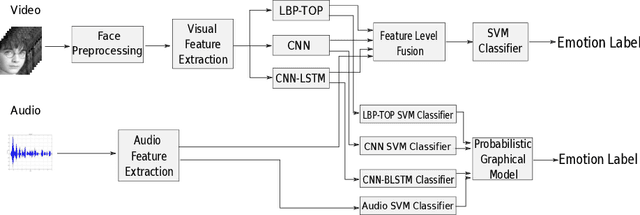

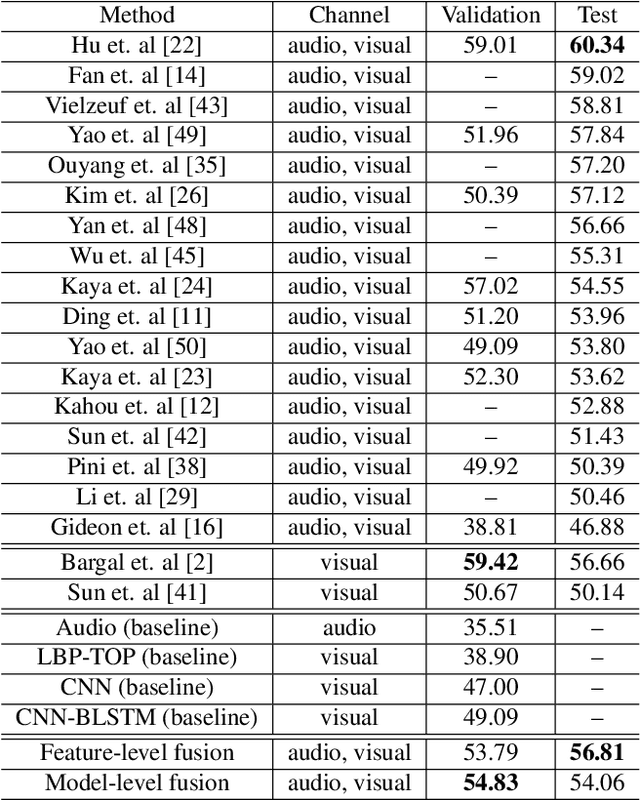

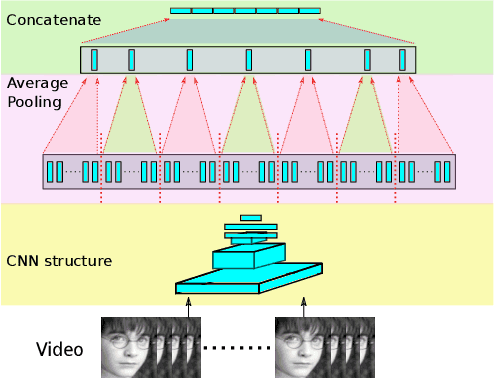

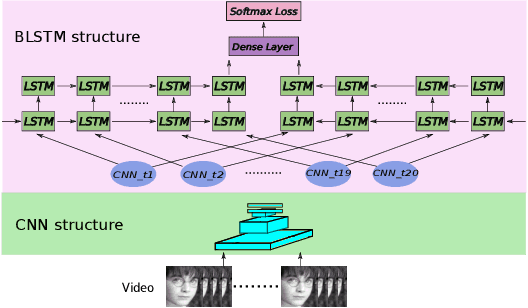

Abstract: Emotion recognition plays an important role in human-computer interaction (HCI) and has been extensively studied for decades. Although tremendous improvements have been achieved for posed expressions, recognizing human emotions in "close-to-real-world" environments remains a challenge. In this paper, we proposed two strategies to fuse information extracted from different modalities, i.e., audio and visual. Specifically, we utilized LBP-TOP, an ensemble of CNNs, and a bi-directional LSTM (BLSTM) to extract features from the visual channel, and the OpenSmile toolkit to extract features from the audio channel. Two kinds of fusion methods, i.e., feature-level fusion and model-level fusion, were developed to utilize the information extracted from the two channels. Experimental results on the EmotiW2018 AFEW dataset have shown that the proposed fusion methods significantly outperform the baseline methods and achieve better or at least comparable performance compared with the state-of-the-art methods. Model-level fusion performs better when one of the channels fails completely.
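The difference between the two fusion strategies can be illustrated with a minimal sketch. The abstract does not describe the mechanics, so everything here is an assumption: feature-level fusion is shown as plain concatenation of per-modality feature vectors, and model-level fusion is stood in for by a weighted average of class posteriors from two separately trained models. The function names, the `audio_weight` parameter, and the toy numbers are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def feature_level_fusion(audio_feat, visual_feat):
    """Assumed form: concatenate the two feature vectors into one
    joint vector that a single classifier would consume."""
    return list(audio_feat) + list(visual_feat)


def model_level_fusion(audio_probs, visual_probs, audio_weight=0.5):
    """Assumed form: weighted average of the class posteriors produced
    by one audio model and one visual model."""
    w = audio_weight
    return [w * a + (1.0 - w) * v for a, v in zip(audio_probs, visual_probs)]


# Toy inputs (hypothetical values, not from the paper).
audio_feat = [0.2, -1.0, 0.5]
visual_feat = [1.5, 0.3]
joint = feature_level_fusion(audio_feat, visual_feat)

audio_probs = softmax([0.0, 0.0, 0.0])    # an uninformative audio channel
visual_probs = softmax([2.0, 0.5, -1.0])  # a confident visual channel
fused = model_level_fusion(audio_probs, visual_probs, audio_weight=0.2)
predicted = max(range(len(fused)), key=fused.__getitem__)
```

In this toy setup the uninformative audio posterior only flattens the fused distribution and the visual prediction survives, which is one plausible reading of why combining separate models can be more robust when a channel fails.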

Identity-Free Facial Expression Recognition using conditional Generative Adversarial Network

Mar 19, 2019

Abstract: In this paper, we proposed a novel Identity-free conditional Generative Adversarial Network (IF-GAN) to explicitly reduce inter-subject variations for facial expression recognition. Specifically, for any given input face image, a conditional generative model was developed to transform an average neutral face, which is calculated from various subjects showing neutral expressions, to an average expressive face with the same expression as the input image. Since the transformed images have the same synthetic "average" identity, they differ from each other only in their expressions and thus can be used for identity-free expression classification. In this work, an end-to-end system was developed to perform expression transformation and expression recognition in the IF-GAN framework. Experimental results on three facial expression datasets have demonstrated that the proposed IF-GAN outperforms the baseline CNN model and achieves comparable or better performance compared with the state-of-the-art methods for facial expression recognition.

Probabilistic Attribute Tree in Convolutional Neural Networks for Facial Expression Recognition

Dec 17, 2018

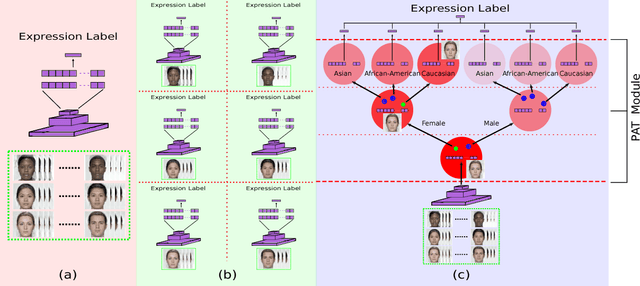

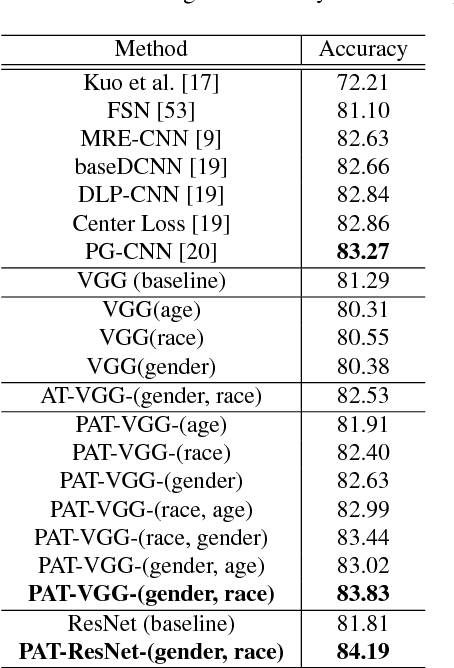

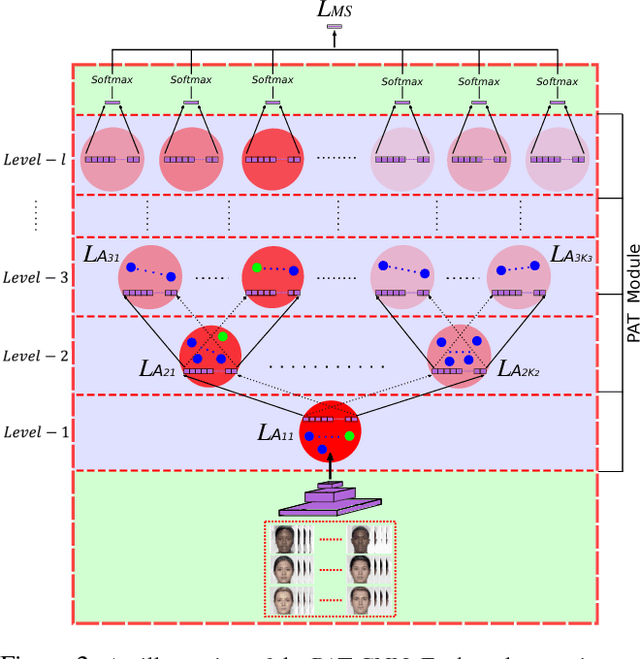

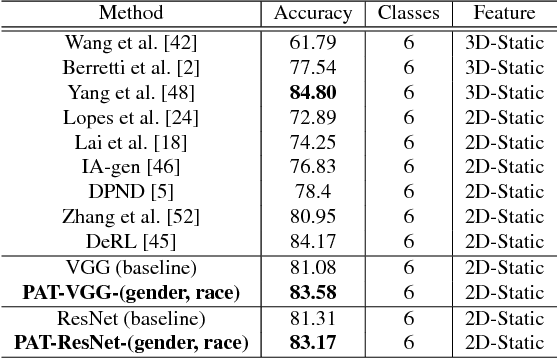

Abstract: In this paper, we proposed a novel Probabilistic Attribute Tree-CNN (PAT-CNN) to explicitly deal with the large intra-class variations caused by identity-related attributes, e.g., age, race, and gender. Specifically, a novel PAT module with an associated PAT loss was proposed to learn features in a hierarchical tree structure organized according to attributes, where the final features are less affected by the attributes. Then, expression-related features are extracted from leaf nodes. Samples are probabilistically assigned to tree nodes at different levels such that expression-related features can be learned from all samples weighted by probabilities. We further proposed a semi-supervised strategy to learn the PAT-CNN from limited attribute-annotated samples to make the best use of available data. Experimental results on five facial expression datasets have demonstrated that the proposed PAT-CNN outperforms the baseline models by explicitly modeling attributes. More impressively, the PAT-CNN using a single model achieves the best performance for faces in the wild on the SFEW dataset, compared with the state-of-the-art methods using an ensemble of hundreds of CNNs.
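The idea of assigning a sample to tree nodes probabilistically, so that every leaf learns from it with some weight, can be sketched as follows. This is an illustration under strong assumptions, not the PAT loss itself: each tree level is assumed to split on one attribute with its own probability distribution, levels are treated as independent, and a leaf's weight is the product of the branch probabilities along its path. The function names and numbers are hypothetical.

```python
from itertools import product


def leaf_probabilities(level_probs):
    """Map each root-to-leaf path to the probability that a sample
    belongs there, assuming independent per-level branch probabilities."""
    leaves = {}
    branch_ranges = [range(len(p)) for p in level_probs]
    for path in product(*branch_ranges):
        prob = 1.0
        for level, branch in enumerate(path):
            prob *= level_probs[level][branch]
        leaves[path] = prob
    return leaves


def weighted_loss(leaf_losses, leaves):
    """Combine per-leaf losses for one sample, weighting each leaf by
    the probability that the sample belongs to it."""
    return sum(leaves[path] * leaf_losses[path] for path in leaves)


# Two-level toy tree: level 0 has two branches, level 1 has two branches.
level_probs = [[0.5, 0.5], [0.25, 0.75]]
leaves = leaf_probabilities(level_probs)

# Hypothetical per-leaf expression losses for a single sample.
leaf_losses = {path: 2.0 for path in leaves}
total = weighted_loss(leaf_losses, leaves)
```

Because the leaf weights sum to one, a sample whose attributes are uncertain still contributes to every leaf instead of being discarded or forced into a single branch.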

Optimizing Filter Size in Convolutional Neural Networks for Facial Action Unit Recognition

Nov 22, 2017

Abstract: Recognizing facial action units (AUs) during spontaneous facial displays is a challenging problem. Most recently, Convolutional Neural Networks (CNNs) have shown promise for facial AU recognition, where predefined and fixed convolution filter sizes are employed. To achieve the best performance, the optimal filter size is often found empirically by conducting extensive experimental validation. Such a process incurs an expensive training cost, especially as the network becomes deeper. This paper proposes a novel Optimized Filter Size CNN (OFS-CNN), where the filter sizes and weights of all convolutional layers are learned simultaneously from the training data. Specifically, the filter size is defined as a continuous variable, which is optimized by minimizing the training loss. Experimental results on two AU-coded spontaneous databases have shown that the proposed OFS-CNN is capable of estimating the optimal filter size for varying image resolutions and outperforms traditional CNNs with the best filter size obtained by exhaustive search. The OFS-CNN also beats the CNN using multiple filter sizes and, more importantly, is much more efficient during testing with the proposed forward-backward propagation algorithm.

Island Loss for Learning Discriminative Features in Facial Expression Recognition

Oct 23, 2017

Abstract: Over the past few years, Convolutional Neural Networks (CNNs) have shown promise on facial expression recognition. However, the performance degrades dramatically under real-world settings due to variations introduced by subtle facial appearance changes, head pose variations, illumination changes, and occlusions. In this paper, a novel island loss (IL) is proposed to enhance the discriminative power of the deeply learned features. Specifically, the IL is designed to reduce the intra-class variations while simultaneously enlarging the inter-class differences. Experimental results on four benchmark expression databases have demonstrated that the CNN with the proposed island loss (IL-CNN) outperforms the baseline CNN models with either traditional softmax loss or the center loss and achieves comparable or better performance compared with the state-of-the-art methods for facial expression recognition.
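A loss with the two stated goals, compact classes and well-separated classes, can be sketched as a center-loss term plus a penalty on the pairwise cosine similarity between class centers. The abstract does not give the formula, so the exact form below, the weight `lam`, and all function names are assumptions made for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def center_term(features, labels, centers):
    """Intra-class part: half the squared distance between each
    feature vector and the center of its own class."""
    total = 0.0
    for x, y in zip(features, labels):
        c = centers[y]
        total += 0.5 * sum((a - b) ** 2 for a, b in zip(x, c))
    return total


def separation_term(centers):
    """Inter-class part: (cosine similarity + 1) summed over every
    ordered pair of distinct centers; zero when centers point in
    opposite directions, largest when they coincide in direction."""
    total = 0.0
    for j, cj in enumerate(centers):
        for k, ck in enumerate(centers):
            if j != k:
                total += dot(cj, ck) / (norm(cj) * norm(ck)) + 1.0
    return total


def island_style_loss(features, labels, centers, lam=1.0):
    """Assumed combination: center term plus lam times separation term."""
    return center_term(features, labels, centers) + lam * separation_term(centers)


# Toy 2-D example with two classes (hypothetical values).
features = [[1.0, 0.0], [-1.0, 0.0]]
labels = [0, 1]
opposite_centers = [[1.0, 0.0], [-1.0, 0.0]]
orthogonal_centers = [[1.0, 0.0], [0.0, 1.0]]

loss_opposite = island_style_loss(features, labels, opposite_centers)
sep_orthogonal = separation_term(orthogonal_centers)
```

In practice such a term would be added to the softmax loss and the centers updated during training; the sketch only evaluates the penalty for fixed centers.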
