Jae-Woo Choi

External Large Foundation Model: How to Efficiently Serve Trillions of Parameters for Online Ads Recommendation

Feb 26, 2025

Abstract: Ads recommendation is a prominent service of online advertising systems and has been actively studied. Recent studies indicate that scaling up the recommendation model and advancing its design can bring significant performance improvements. However, as model scale grows, such studies diverge increasingly from industrial practice because they often neglect two fundamental challenges in industrial-scale applications. First, training and inference budgets are restricted for the model to be served; exceeding them may incur latency and impair user experience. Second, large volumes of data arrive in a streaming mode with dynamically shifting data distributions, as new users/ads join and existing users/ads leave the system. We propose the External Large Foundation Model (ExFM) framework to address these overlooked challenges. Specifically, we develop external distillation and a data augmentation system (DAS) to control the computational cost of training/inference while maintaining high performance. We design the teacher as a foundation model (FM) that can serve multiple students as vertical models (VMs) to amortize its building cost. We propose Auxiliary Head and Student Adapter to mitigate the data distribution gap between FM and VMs caused by the streaming data issue. Comprehensive experiments on internal industrial-scale applications and public datasets demonstrate significant performance gains by ExFM.
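The abstract does not spell out how the student consumes the teacher's output, so the following is only a minimal sketch of one plausible arrangement: a student with a main head trained on ground-truth labels and a separate auxiliary head trained on the teacher's predictions. The function names, the binary cross-entropy objective, and the `aux_weight` parameter are all assumptions made for illustration, not the ExFM implementation.

```python
import math

def bce(pred, target, eps=1e-7):
    """Binary cross-entropy between a predicted probability and a (possibly soft) target."""
    pred = min(max(pred, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))

def student_loss(main_pred, aux_pred, label, teacher_pred, aux_weight=0.5):
    """Hypothetical combined loss for one example.

    main_pred    -- student's main-head probability (scored against the true label)
    aux_pred     -- student's auxiliary-head probability (scored against the teacher)
    label        -- ground-truth click label, 0.0 or 1.0
    teacher_pred -- foundation model's predicted probability (soft target)
    aux_weight   -- assumed weighting of the distillation term
    """
    label_loss = bce(main_pred, label)
    distill_loss = bce(aux_pred, teacher_pred)
    return label_loss + aux_weight * distill_loss

loss = student_loss(main_pred=0.5, aux_pred=0.5, label=1.0, teacher_pred=0.5)
```

Keeping the teacher's signal on its own head means a stale or biased teacher prediction does not directly pull on the head that is served, which is one way to read the abstract's goal of mitigating the FM-to-VM distribution gap.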

LoTa-Bench: Benchmarking Language-oriented Task Planners for Embodied Agents

Feb 13, 2024

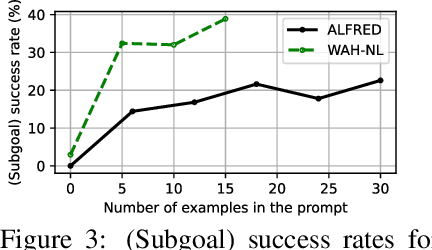

Abstract: Large language models (LLMs) have recently received considerable attention as alternative solutions for task planning. However, comparing the performance of language-oriented task planners is difficult, and the effects of factors such as pre-trained model selection and prompt construction have not been explored in detail. To address this, we propose a benchmark system for automatically quantifying the performance of task planning for home-service embodied agents. Task planners are tested on two pairs of datasets and simulators: 1) ALFRED and AI2-THOR, 2) an extension of Watch-And-Help and VirtualHome. Using the proposed benchmark system, we perform extensive experiments with LLMs and prompts, and explore several enhancements of the baseline planner. We expect that the proposed benchmark tool will accelerate the development of language-oriented task planners.

Style Similarity as Feedback for Product Design

May 25, 2021

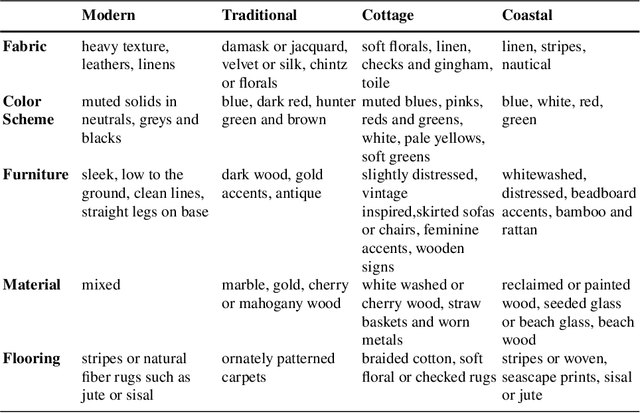

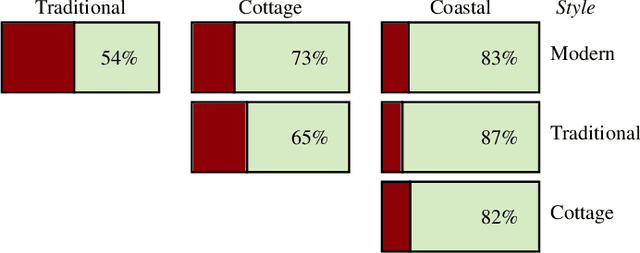

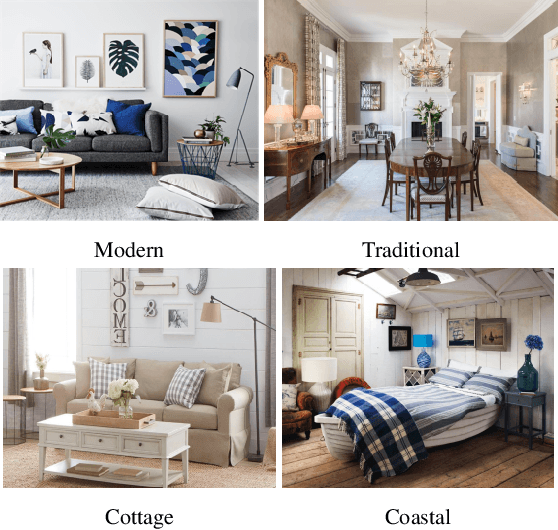

Abstract: Matching and recommending products is beneficial for both customers and companies. With the rapid increase in home goods e-commerce, there is an increasing demand for quantitative methods for providing such recommendations for millions of products. This approach is facilitated largely by online stores such as Amazon and Wayfair, in which the goal is to maximize overall sales. Instead of focusing on overall sales, we take a product design perspective, employing big-data analysis to determine the design qualities of a highly recommended product. Specifically, we focus on the visual style compatibility of such products. We build on previous work that implemented a style-based similarity metric for thousands of furniture products. Using analysis and visualization, we extract attributes of furniture products that are highly compatible style-wise. We propose a designer-in-the-loop workflow that mirrors methods of displaying similar products to consumers browsing e-commerce websites. Our findings are useful when designing new products, since they provide insight into which furniture will be strongly compatible across multiple styles and hence more likely to be recommended.

* 15 pages, 9 figures, interdisciplinary book chapter on using computer vision and style similarity for industrial design

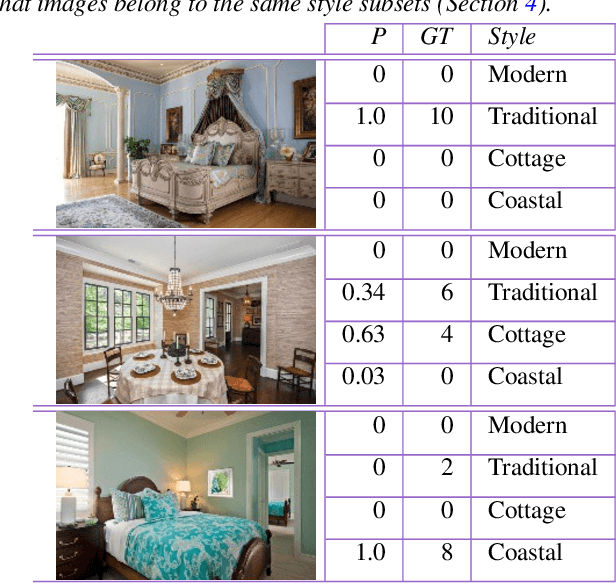

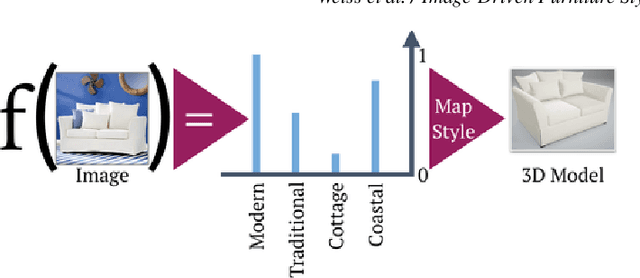

Image-Driven Furniture Style for Interactive 3D Scene Modeling

Oct 20, 2020

Abstract: Creating realistic styled spaces is a complex task that involves design know-how about which furniture pieces go well together. Interior style follows abstract rules involving color, geometry and other visual elements. Following such rules, users manually select similar-style items from large repositories of 3D furniture models, a process which is both laborious and time-consuming. We propose a method for fast-tracking style-similarity tasks by learning furniture style compatibility from interior scene images. Such images contain more style information than images depicting a single piece of furniture. To understand style, we train a deep learning network on a classification task. Based on image embeddings extracted from our network, we measure the stylistic compatibility of furniture. We demonstrate our method with several 3D model style-compatibility results, and with an interactive system for modeling style-consistent scenes.
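The abstract says compatibility is measured from image embeddings but does not name the metric. As a hedged illustration, the sketch below assumes cosine similarity between embedding vectors and ranks a small catalog against a query item. The helper names, item names, and three-dimensional embeddings are made up for the example; real embeddings would come from the trained network.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as having no style signal
    return dot / (norm_a * norm_b)

def rank_by_style(query, catalog):
    """Return catalog item names sorted from most to least similar to the query."""
    scored = [(cosine_similarity(query, emb), name) for name, emb in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored]

# Made-up embeddings for illustration only.
catalog = {
    "oak_chair": [0.9, 0.1, 0.0],
    "steel_lamp": [0.0, 0.2, 0.9],
    "pine_table": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]
ranking = rank_by_style(query, catalog)
```

In an interactive scene-modeling tool, a ranking like this could drive the suggestion list shown when a user selects a piece already placed in the scene.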
