Jacob Schrum

Evolving Flying Machines in Minecraft Using Quality Diversity

Feb 01, 2023

Abstract:Minecraft is a great testbed for human creativity that has inspired the design of various structures and even functioning machines, including flying machines. EvoCraft is an API for programmatically generating structures in Minecraft, but the initial work in this domain was not capable of evolving flying machines. This paper applies fitness-based evolution and quality diversity search in order to evolve flying machines. Although fitness alone can occasionally produce flying machines, thanks in part to a more sophisticated fitness function than was used previously, the quality diversity algorithm MAP-Elites is capable of discovering flying machines much more reliably, at least when an appropriate behavior characterization is used to guide the search for diverse solutions.
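
The MAP-Elites idea mentioned above can be illustrated with a minimal sketch. This is not the paper's Minecraft setup: the genome, the fitness function, and the two-dimensional behavior characterization below are toy stand-ins chosen only so the loop runs on its own, and every name (`map_elites`, `evaluate`, `random_genome`, `mutate`) is hypothetical.

```python
import random

def map_elites(evaluate, random_genome, mutate, iterations=2000, warmup=100, seed=0):
    """Minimal MAP-Elites loop: keep the best genome found for each behavior bin."""
    rng = random.Random(seed)
    archive = {}  # behavior bin (tuple) -> (fitness, genome)
    for i in range(iterations):
        if archive and i >= warmup:
            # Pick a random elite from the archive and vary it.
            parent = rng.choice(list(archive.values()))[1]
            child = mutate(parent, rng)
        else:
            # Seed the archive with random genomes first.
            child = random_genome(rng)
        fitness, bin_key = evaluate(child)
        # A child survives if its bin is empty or it beats the current elite.
        if bin_key not in archive or fitness > archive[bin_key][0]:
            archive[bin_key] = (fitness, child)
    return archive

# Toy stand-in domain (assumption): 4 real-valued genes in [0, 1].
def random_genome(rng):
    return [rng.random() for _ in range(4)]

def mutate(genome, rng):
    return [min(1.0, max(0.0, g + rng.gauss(0, 0.1))) for g in genome]

def evaluate(genome):
    # Fitness: closeness of every gene to 0.5 (higher is better, max 0).
    fitness = -sum((g - 0.5) ** 2 for g in genome)
    # Behavior characterization: first two genes discretized into a 5x5 grid.
    bin_key = (min(4, int(genome[0] * 5)), min(4, int(genome[1] * 5)))
    return fitness, bin_key

archive = map_elites(evaluate, random_genome, mutate)
print(len(archive), "of 25 bins filled")
```

The choice of `bin_key` plays the role of the behavior characterization: changing it changes which kinds of diversity the archive preserves, which is the factor the abstract identifies as important.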

Hybrid Encoding For Generating Large Scale Game Level Patterns With Local Variations Using a GAN

May 27, 2021

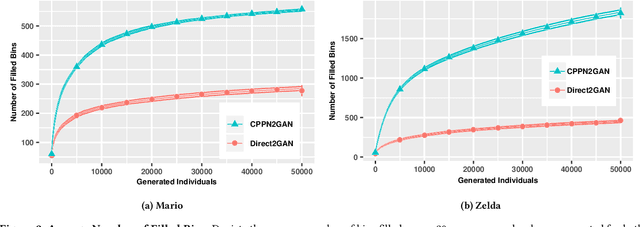

Abstract:Generative Adversarial Networks (GANs) are a powerful indirect genotype-to-phenotype mapping for evolutionary search, but they have limitations. In particular, GAN output does not scale to arbitrary dimensions, and there is no obvious way to combine GAN outputs into a cohesive whole, which would be useful in many areas, such as video game level generation. Game levels often consist of several segments, sometimes repeated directly or with variation, organized into an engaging pattern. Such patterns can be produced with Compositional Pattern Producing Networks (CPPNs). Specifically, a CPPN can define latent vector GAN inputs as a function of geometry, which provides a way to organize level segments output by a GAN into a complete level. However, a collection of latent vectors can also be evolved directly, to produce more chaotic levels. Here, we propose a new hybrid approach that evolves CPPNs first, but allows the latent vectors to evolve later, and combines the benefits of both approaches. These approaches are evaluated in Super Mario Bros. and The Legend of Zelda. We previously demonstrated via divergent search (MAP-Elites) that CPPNs better cover the space of possible levels than directly evolved levels. Here, we show that the hybrid approach can cover areas that neither of the other methods can and achieves comparable or superior QD scores.

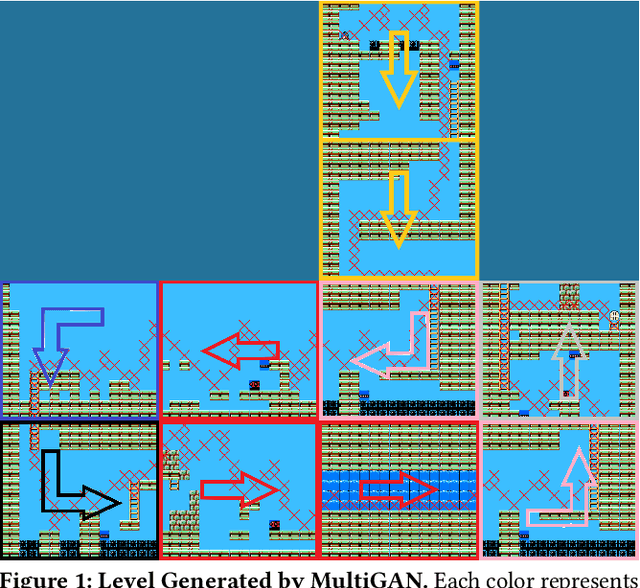

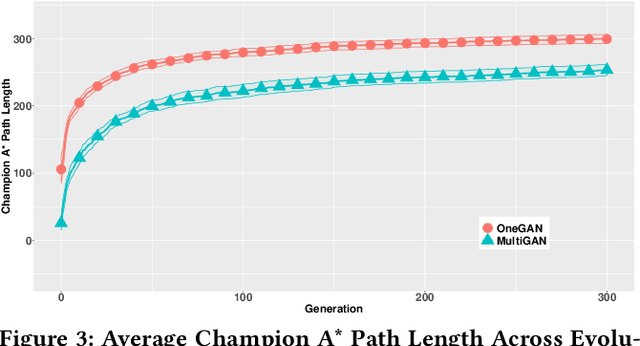

Using Multiple Generative Adversarial Networks to Build Better-Connected Levels for Mega Man

Jan 30, 2021

Abstract:Generative Adversarial Networks (GANs) can generate levels for a variety of games. This paper focuses on combining GAN-generated segments in a snaking pattern to create levels for Mega Man. Adjacent segments in such levels can be orthogonally adjacent in any direction, meaning that an otherwise fine segment might impose a barrier between its neighbor depending on what sorts of segments in the training set are being most closely emulated: horizontal, vertical, or corner segments. To pick appropriate segments, multiple GANs were trained on different types of segments to ensure better flow between segments. Flow was further improved by evolving the latent vectors for the segments being joined in the level to maximize the length of the level's solution path. Using multiple GANs to represent different types of segments results in significantly longer solution paths than using one GAN for all segment types, and a human subject study verifies that these levels are more fun and have more human-like design than levels produced by one GAN.

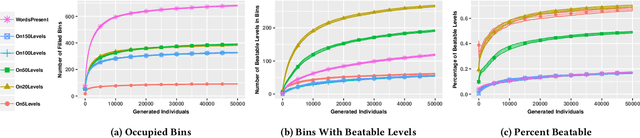

Illuminating the Space of Beatable Lode Runner Levels Produced By Various Generative Adversarial Networks

Jan 19, 2021

Abstract:Generative Adversarial Networks (GANs) are capable of generating convincing imitations of elements from a training set, but the distribution of elements in the training set affects the difficulty of properly training the GAN and the quality of the outputs it produces. This paper looks at six different GANs trained on different subsets of data from the game Lode Runner. The quality diversity algorithm MAP-Elites was used to explore the set of quality levels that could be produced by each GAN, where quality was defined as being beatable and having the longest solution path possible. Interestingly, a GAN trained on only 20 levels generated the largest set of diverse beatable levels while a GAN trained on 150 levels generated the smallest set of diverse beatable levels, thus challenging the notion that more is always better when training GANs.

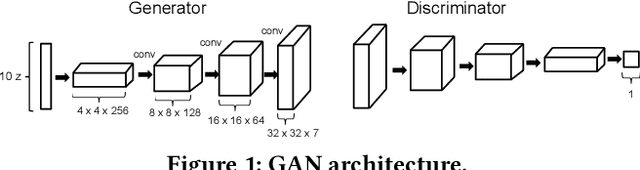

CPPN2GAN: Combining Compositional Pattern Producing Networks and GANs for Large-scale Pattern Generation

Apr 03, 2020

Abstract:Generative Adversarial Networks (GANs) are proving to be a powerful indirect genotype-to-phenotype mapping for evolutionary search, but they have limitations. In particular, GAN output does not scale to arbitrary dimensions, and there is no obvious way of combining multiple GAN outputs into a cohesive whole, which would be useful in many areas, such as the generation of video game levels. Game levels often consist of several segments, sometimes repeated directly or with variation, organized into an engaging pattern. Such patterns can be produced with Compositional Pattern Producing Networks (CPPNs). Specifically, a CPPN can define latent vector GAN inputs as a function of geometry, which provides a way to organize level segments output by a GAN into a complete level. This new CPPN2GAN approach is validated in both Super Mario Bros. and The Legend of Zelda. Specifically, divergent search via MAP-Elites demonstrates that CPPN2GAN can better cover the space of possible levels. The layouts of the resulting levels are also more cohesive and aesthetically consistent.
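
As a rough illustration of a CPPN defining latent vector GAN inputs as a function of geometry, the sketch below queries a function once per segment position and hands each resulting vector to a generator. Everything here is an assumption made for illustration: `toy_cppn` is a fixed hand-written function rather than an evolved network, `stub_generator` stands in for a trained GAN, and the latent size of 3 is far smaller than a real model would use.

```python
import math

LATENT_DIM = 3  # assumed for the sketch; real GAN latent vectors are larger

def toy_cppn(x, y):
    """Hypothetical fixed CPPN: composes simple functions of segment coordinates."""
    r = math.sqrt(x * x + y * y)
    return [math.sin(3.0 * x), math.exp(-(y * y)), math.tanh(x + y - r)]

def latent_grid(cppn, cols, rows):
    """Query the CPPN once per segment position, with coordinates scaled to [-1, 1]."""
    def scale(i, n):
        return 0.0 if n == 1 else 2.0 * i / (n - 1) - 1.0
    return [[cppn(scale(c, cols), scale(r, rows)) for c in range(cols)]
            for r in range(rows)]

def stub_generator(z):
    """Placeholder for a trained GAN generator: just labels a segment by its latent vector."""
    return "seg(" + ", ".join(f"{v:+.2f}" for v in z) + ")"

# A 4x2 level layout: one latent vector, and so one generated segment, per cell.
grid = latent_grid(toy_cppn, cols=4, rows=2)
level = [[stub_generator(z) for z in row] for row in grid]
for row in level:
    print(" ".join(row))
```

Because neighboring cells receive nearby coordinates, a smooth CPPN yields latent vectors that vary gradually across the layout, and the same CPPN can be queried at any grid size.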

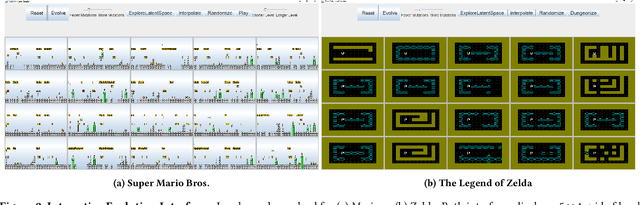

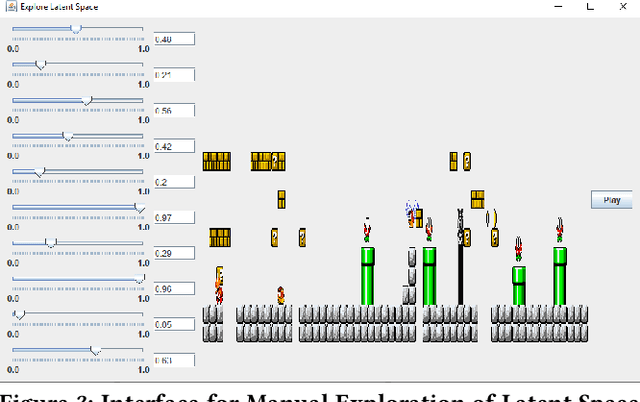

Interactive Evolution and Exploration Within Latent Level-Design Space of Generative Adversarial Networks

Mar 31, 2020

Abstract:Generative Adversarial Networks (GANs) are an emerging form of indirect encoding. The GAN is trained to induce a latent space on training data, and a real-valued evolutionary algorithm can search that latent space. Such Latent Variable Evolution (LVE) has recently been applied to game levels. However, it is hard for objective scores to capture level features that are appealing to players. Therefore, this paper introduces a tool for interactive LVE of tile-based levels for games. The tool also allows for direct exploration of the latent dimensions, and allows users to play discovered levels. The tool works for a variety of GAN models trained for both Super Mario Bros. and The Legend of Zelda, and is easily generalizable to other games. A user study shows that both the evolution and latent space exploration features are appreciated, with a slight preference for direct exploration, but combining these features allows users to discover even better levels. User feedback also indicates how this system could eventually grow into a commercial design tool, with the addition of a few enhancements.

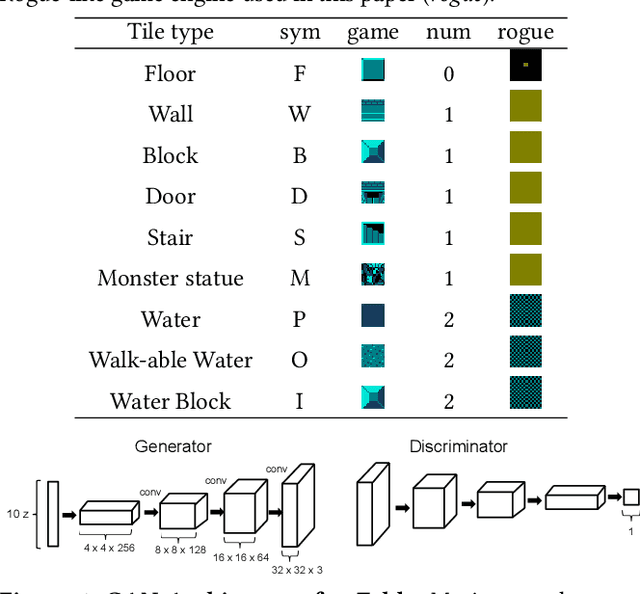

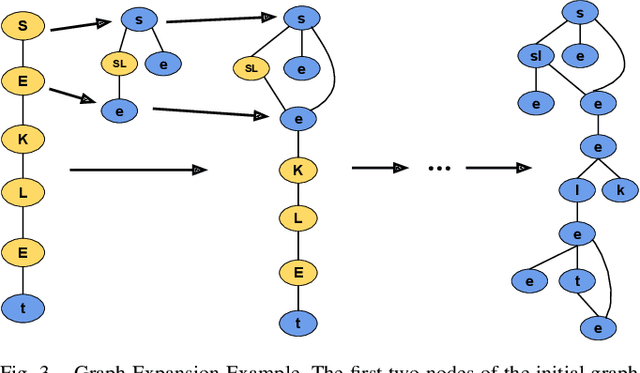

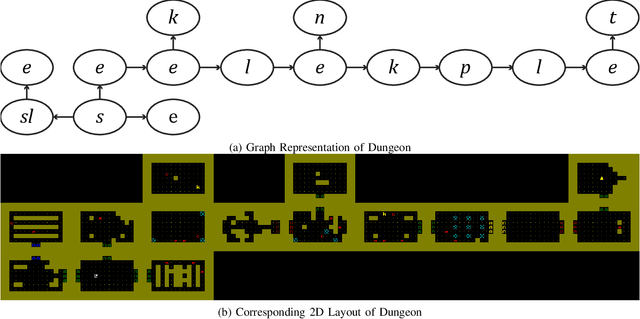

Generative Adversarial Network Rooms in Generative Graph Grammar Dungeons for The Legend of Zelda

Jan 14, 2020

Abstract:Generative Adversarial Networks (GANs) have demonstrated their ability to learn patterns in data and produce new exemplars similar to, but different from, their training set in several domains, including video games. However, GANs have a fixed output size, so creating levels of arbitrary size for a dungeon crawling game is difficult. GANs also have trouble encoding semantic requirements that make levels interesting and playable. This paper combines a GAN approach to generating individual rooms with a graph grammar approach to combining rooms into a dungeon. The GAN captures design principles of individual rooms, but the graph grammar organizes rooms into a global layout with a sequence of obstacles determined by a designer. Room data from The Legend of Zelda is used to train the GAN. This approach is validated by a user study, showing that GAN dungeons are as enjoyable to play as a level from the original game and as levels generated with a graph grammar alone. However, GAN dungeons have rooms considered more complex, and plain graph grammar dungeons are considered least complex and challenging. Only the GAN approach creates an extensive supply of both layouts and rooms, where rooms span across the spectrum of those seen in the training set to new creations merging design principles from multiple rooms.

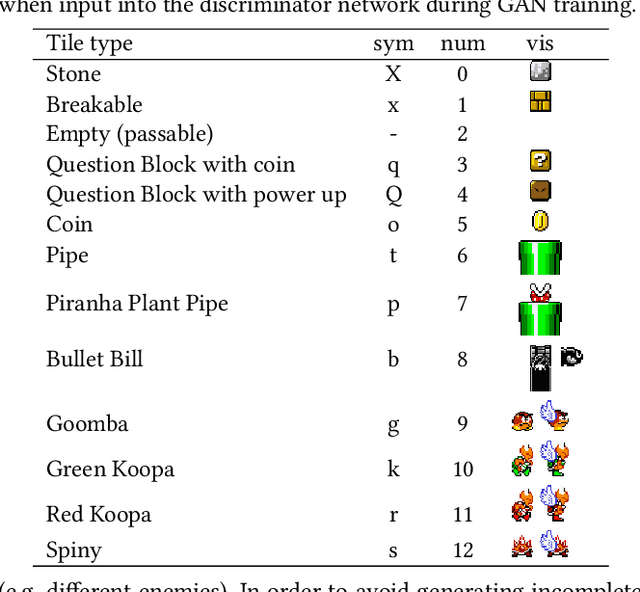

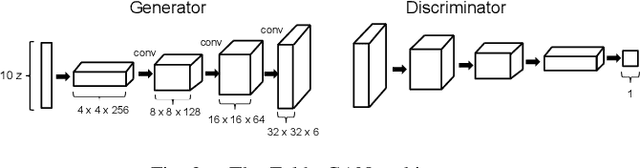

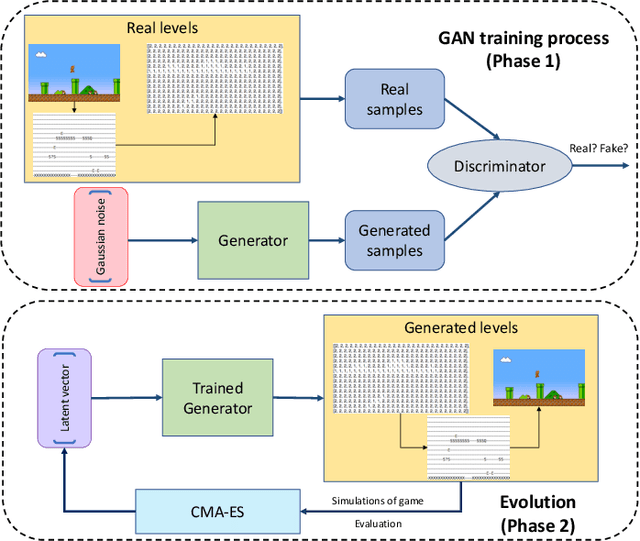

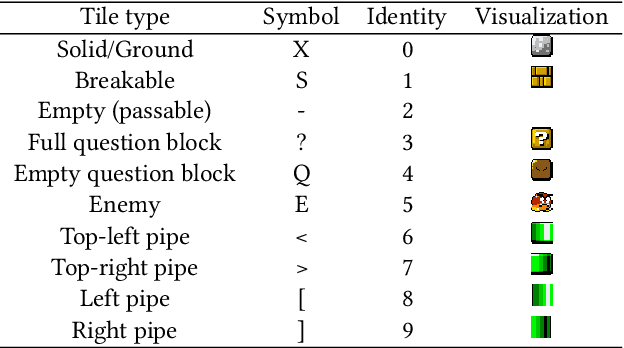

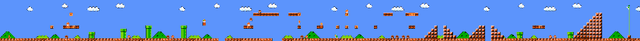

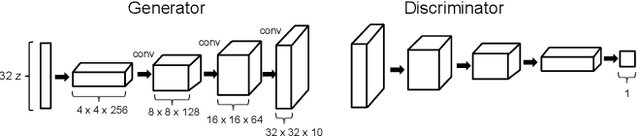

Evolving Mario Levels in the Latent Space of a Deep Convolutional Generative Adversarial Network

May 02, 2018

Abstract:Generative Adversarial Networks (GANs) are a machine learning approach capable of generating novel example outputs across a space of provided training examples. Procedural Content Generation (PCG) of levels for video games could benefit from such models, especially for games where there is a pre-existing corpus of levels to emulate. This paper trains a GAN to generate levels for Super Mario Bros. using a level from the Video Game Level Corpus. The approach successfully generates a variety of levels similar to one in the original corpus, but is further improved by application of the Covariance Matrix Adaptation Evolution Strategy (CMA-ES). Specifically, various fitness functions are used to discover levels within the latent space of the GAN that maximize desired properties. Simple static properties are optimized, such as a given distribution of tile types. Additionally, the champion A* agent from the 2009 Mario AI competition is used to assess whether a level is playable, and how many jumping actions are required to beat it. These fitness functions allow for the discovery of levels that exist within the space of examples designed by experts, and also guide the search towards levels that fulfill one or more specified objectives.
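
Searching a GAN's latent space against a static tile-distribution fitness can be sketched as follows. Note the substitutions: the paper uses CMA-ES, whereas this sketch uses a much simpler (1+lambda) evolution strategy so that it needs only the standard library, and `stub_generator` is a hypothetical placeholder for a trained GAN. The target fraction, latent size, and clamping range are likewise assumptions.

```python
import random

def stub_generator(z):
    """Placeholder for a trained GAN: thresholds each latent value into a tile."""
    return ["X" if v > 0 else "-" for v in z]

def fitness(z, target_solid_fraction=0.25):
    """Static fitness: negative distance from a desired fraction of solid tiles."""
    tiles = stub_generator(z)
    return -abs(tiles.count("X") / len(tiles) - target_solid_fraction)

def evolve_latent(dim=16, offspring=8, generations=50, sigma=0.3, seed=0):
    """(1+lambda) evolution strategy over latent vectors clamped to [-1, 1]."""
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in range(dim)]
    best_fit = fitness(best)
    for _ in range(generations):
        # Create lambda mutated copies of the current parent.
        children = [
            [max(-1.0, min(1.0, v + rng.gauss(0, sigma))) for v in best]
            for _ in range(offspring)
        ]
        # The best child replaces the parent only if it is at least as fit.
        top = max(children, key=fitness)
        if fitness(top) >= best_fit:
            best, best_fit = top, fitness(top)
    return best, best_fit

best, best_fit = evolve_latent()
print("".join(stub_generator(best)), best_fit)
```

An agent-based fitness, such as whether a level is beatable, would slot in by replacing `fitness` with a function that runs a simulation on the generated level.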

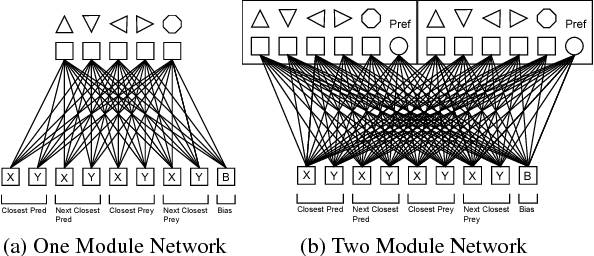

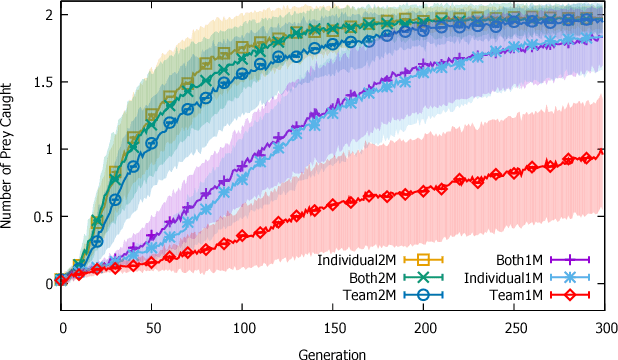

Balancing Selection Pressures, Multiple Objectives, and Neural Modularity to Coevolve Cooperative Agent Behavior

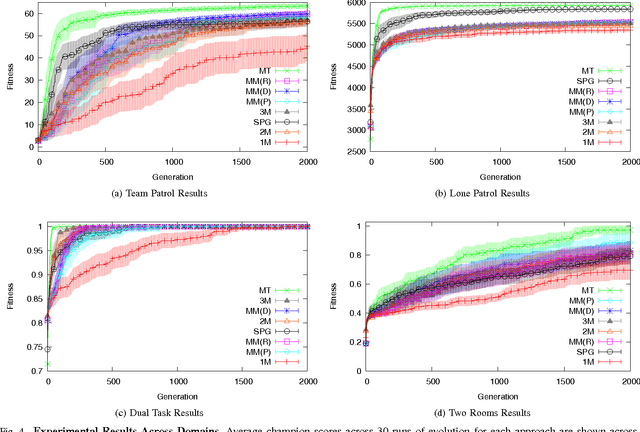

Mar 24, 2017

Abstract:Previous research using evolutionary computation in Multi-Agent Systems indicates that assigning fitness based on team vs. individual behavior has a strong impact on the ability of evolved teams of artificial agents to exhibit teamwork in challenging tasks. However, such research only made use of single-objective evolution. In contrast, when a multiobjective evolutionary algorithm is used, populations can be subject to individual-level objectives, team-level objectives, or combinations of the two. This paper explores the performance of cooperatively coevolved teams of agents controlled by artificial neural networks subject to these types of objectives. Specifically, predator agents are evolved to capture scripted prey agents in a torus-shaped grid world. Because of the tension between individual and team behaviors, multiple modes of behavior can be useful, and thus the effect of modular neural networks is also explored. Results demonstrate that fitness rewarding individual behavior is superior to fitness rewarding team behavior, despite being applied to a cooperative task. However, the use of networks with multiple modules allows predators to discover intelligent behavior, regardless of which type of objectives are used.

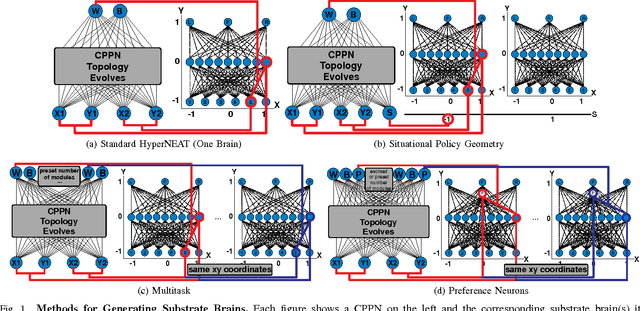

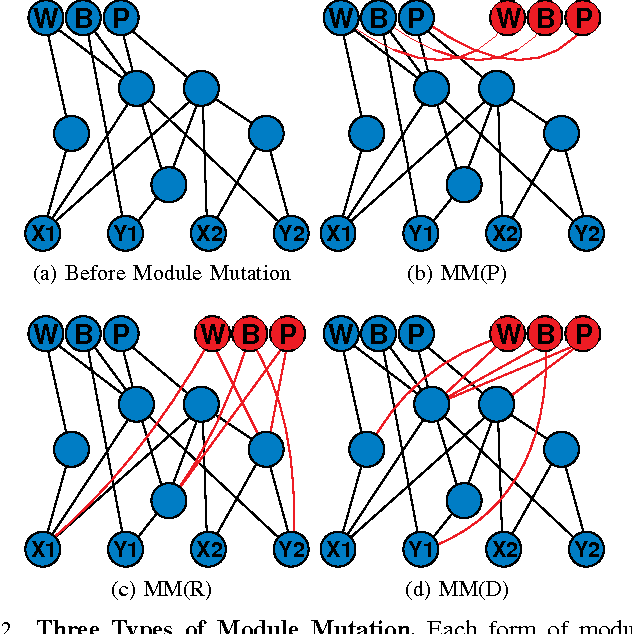

Using Indirect Encoding of Multiple Brains to Produce Multimodal Behavior

Apr 26, 2016

Abstract:An important challenge in neuroevolution is to evolve complex neural networks with multiple modes of behavior. Indirect encodings can potentially answer this challenge. Yet in practice, indirect encodings do not yield effective multimodal controllers. Thus, this paper introduces novel multimodal extensions to HyperNEAT, a popular indirect encoding. A previous multimodal HyperNEAT approach called situational policy geometry assumes that multiple brains benefit from being embedded within an explicit geometric space. However, experiments here illustrate that this assumption unnecessarily constrains evolution, resulting in lower performance. Specifically, this paper introduces HyperNEAT extensions for evolving many brains without assuming geometric relationships between them. The resulting Multi-Brain HyperNEAT can exploit human-specified task divisions to decide when each brain controls the agent, or can automatically discover when brains should be used, by means of preference neurons. A further extension called module mutation allows evolution to discover the number of brains, enabling multimodal behavior with even less expert knowledge. Experiments in several multimodal domains highlight that multi-brain approaches are more effective than HyperNEAT without multimodal extensions, and show that brains without a geometric relation to each other outperform situational policy geometry. The conclusion is that Multi-Brain HyperNEAT provides several promising techniques for evolving complex multimodal behavior.
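
One plausible reading of arbitration by preference neurons is sketched below: each brain emits its policy outputs plus one extra preference value, and the brain with the highest preference controls the agent on that time step. This is an assumption about the mechanism, not a reproduction of Multi-Brain HyperNEAT; the function name `select_brain` and the example numbers are hypothetical.

```python
def select_brain(brain_outputs):
    """Return (index, policy outputs) of the brain with the highest preference value.

    brain_outputs: list of (policy_outputs, preference) pairs, one per brain.
    Ties go to the earliest brain in the list.
    """
    best = max(range(len(brain_outputs)), key=lambda i: brain_outputs[i][1])
    return best, brain_outputs[best][0]

# Two brains evaluated on the same sensor input (values made up for illustration).
outputs = [([0.2, -0.7], 0.1), ([0.9, 0.4], 0.8)]
chosen, action = select_brain(outputs)
print(chosen, action)
```

With a human-specified task division, the `max` over preferences would instead be replaced by a fixed rule mapping the current situation to a brain index.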
