Kirby Steckel

Hybrid Encoding For Generating Large Scale Game Level Patterns With Local Variations Using a GAN

May 27, 2021

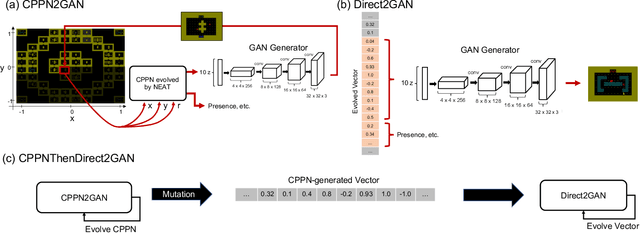

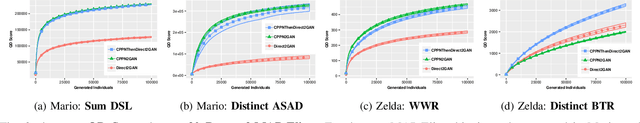

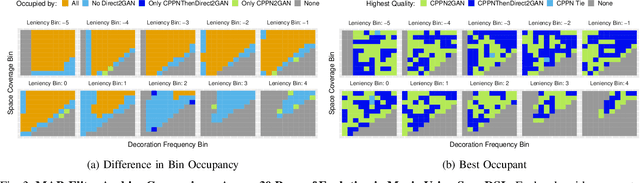

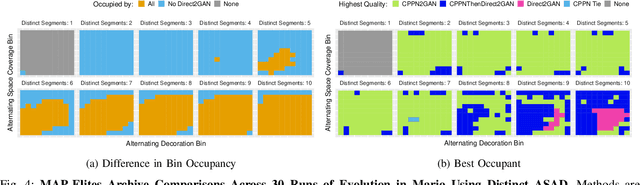

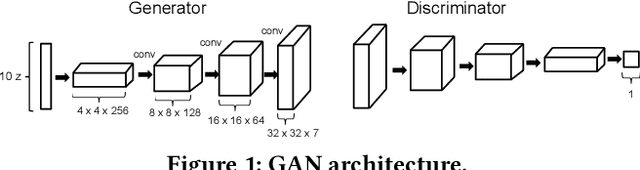

Abstract:Generative Adversarial Networks (GANs) are a powerful indirect genotype-to-phenotype mapping for evolutionary search, but they have limitations. In particular, GAN output does not scale to arbitrary dimensions, and there is no obvious way to combine GAN outputs into a cohesive whole, which would be useful in many areas, such as video game level generation. Game levels often consist of several segments, sometimes repeated directly or with variation, organized into an engaging pattern. Such patterns can be produced with Compositional Pattern Producing Networks (CPPNs). Specifically, a CPPN can define latent vector GAN inputs as a function of geometry, which provides a way to organize level segments output by a GAN into a complete level. However, a collection of latent vectors can also be evolved directly, to produce more chaotic levels. Here, we propose a new hybrid approach that evolves CPPNs first, but allows the latent vectors to evolve later, and combines the benefits of both approaches. These approaches are evaluated in Super Mario Bros. and The Legend of Zelda. We previously demonstrated via divergent search (MAP-Elites) that CPPNs better cover the space of possible levels than directly evolved levels. Here, we show that the hybrid approach can cover areas that neither of the other methods can and achieves comparable or superior QD scores.

Illuminating the Space of Beatable Lode Runner Levels Produced By Various Generative Adversarial Networks

Jan 19, 2021

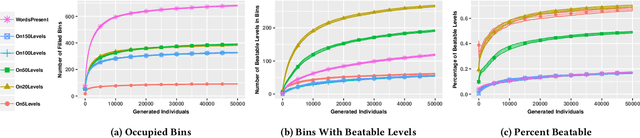

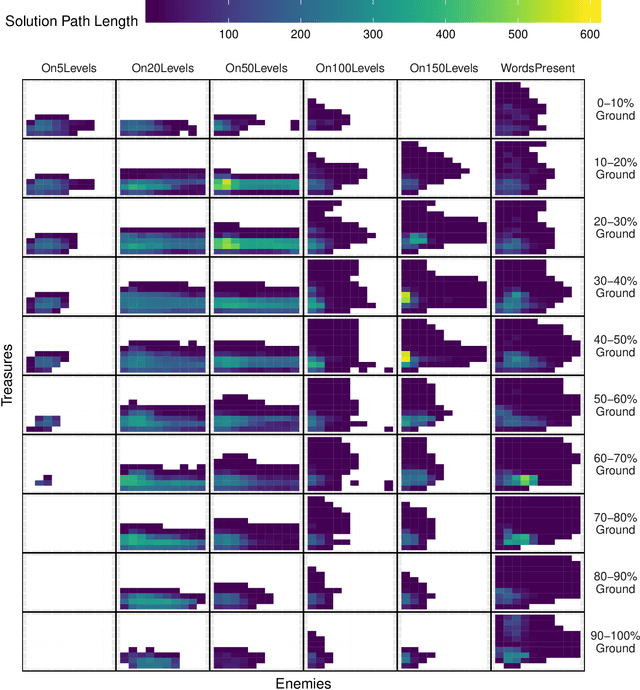

Abstract:Generative Adversarial Networks (GANs) are capable of generating convincing imitations of elements from a training set, but the distribution of elements in the training set affects to difficulty of properly training the GAN and the quality of the outputs it produces. This paper looks at six different GANs trained on different subsets of data from the game Lode Runner. The quality diversity algorithm MAP-Elites was used to explore the set of quality levels that could be produced by each GAN, where quality was defined as being beatable and having the longest solution path possible. Interestingly, a GAN trained on only 20 levels generated the largest set of diverse beatable levels while a GAN trained on 150 levels generated the smallest set of diverse beatable levels, thus challenging the notion that more is always better when training GANs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge