Jack Norfleet

Airway Skill Assessment with Spatiotemporal Attention Mechanisms Using Human Gaze

Jun 24, 2025

Abstract:Airway management skills are critical in emergency medicine and are typically assessed through subjective evaluation, often failing to gauge competency in real-world scenarios. This paper proposes a machine learning-based approach for assessing airway skills, specifically endotracheal intubation (ETI), using human gaze data and video recordings. The proposed system leverages an attention mechanism guided by the human gaze to enhance the recognition of successful and unsuccessful ETI procedures. Visual masks were created from gaze points to guide the model in focusing on task-relevant areas, reducing irrelevant features. An autoencoder network extracts features from the videos, while an attention module generates attention from the visual masks, and a classifier outputs a classification score. This method, the first to use human gaze for ETI, demonstrates improved accuracy and efficiency over traditional methods. The integration of human gaze data not only enhances model performance but also offers a robust, objective assessment tool for clinical skills, particularly in high-stress environments such as military settings. The results show improvements in prediction accuracy, sensitivity, and trustworthiness, highlighting the potential for this approach to improve clinical training and patient outcomes in emergency medicine.

* 13 pages, 6 figures, 14 equations
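The abstract above says visual masks are built from gaze points and used to focus the model on task-relevant areas. As a rough illustration only, the sketch below turns pixel gaze coordinates into a soft mask and multiplies it into a feature map. The Gaussian shape, the `sigma` value, and the names `gaze_mask` and `apply_mask` are assumptions made for this example; the abstract does not say how the paper builds or applies its masks.

```python
import numpy as np


def gaze_mask(gaze_points, height, width, sigma=20.0):
    """Build a soft mask in [0, 1] from (x, y) gaze points given in pixels.

    Assumption: each gaze point contributes an isotropic Gaussian bump and
    overlapping bumps are combined with a pixel-wise maximum.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    mask = np.zeros((height, width), dtype=np.float64)
    for gx, gy in gaze_points:
        bump = np.exp(-((xs - gx) ** 2 + (ys - gy) ** 2) / (2.0 * sigma ** 2))
        mask = np.maximum(mask, bump)
    return mask


def apply_mask(features, mask):
    """Weight a (C, H, W) feature map by an (H, W) mask via broadcasting."""
    return features * mask[None, :, :]


# Hypothetical usage: one gaze point at x=10, y=5 on a 20x30 frame.
mask = gaze_mask([(10, 5)], height=20, width=30)
weighted = apply_mask(np.ones((3, 20, 30)), mask)
```

Using a maximum rather than a sum keeps the mask bounded by 1 no matter how many gaze points fall close together, which is one simple way to avoid renormalizing.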

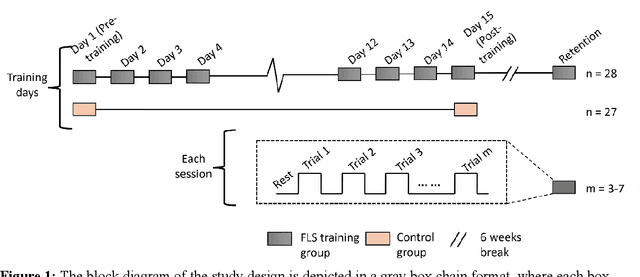

Beyond Performance Scores: Directed Functional Connectivity as a Brain-Based Biomarker for Motor Skill Learning and Retention

Feb 20, 2025

Abstract:Motor skill acquisition in fields like surgery, robotics, and sports involves learning complex task sequences through extensive training. Traditional performance metrics, like execution time and error rates, offer limited insight as they fail to capture the neural mechanisms underlying skill learning and retention. This study introduces directed functional connectivity (dFC), derived from electroencephalography (EEG), as a novel brain-based biomarker for assessing motor skill learning and retention. For the first time, dFC is applied as a biomarker to map the stages of the Fitts and Posner motor learning model, offering new insights into the neural mechanisms underlying skill acquisition and retention. Unlike traditional measures, it captures both the strength and direction of neural information flow, providing a comprehensive understanding of neural adaptations across different learning stages. The analysis demonstrates that dFC can effectively identify and track the progression through various stages of the Fitts and Posner model. Furthermore, its stability over a six-week washout period highlights its utility in monitoring long-term retention. No significant changes in dFC were observed in a control group, confirming that the observed neural adaptations were specific to training and not due to external factors. By offering a granular view of the learning process at the group and individual levels, dFC facilitates the development of personalized, targeted training protocols aimed at enhancing outcomes in fields where precision and long-term retention are critical, such as surgical education. These findings underscore the value of dFC as a robust biomarker that complements traditional performance metrics, providing a deeper understanding of motor skill learning and retention.

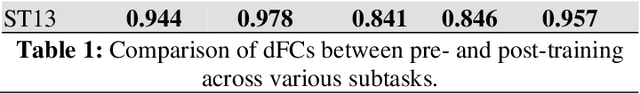

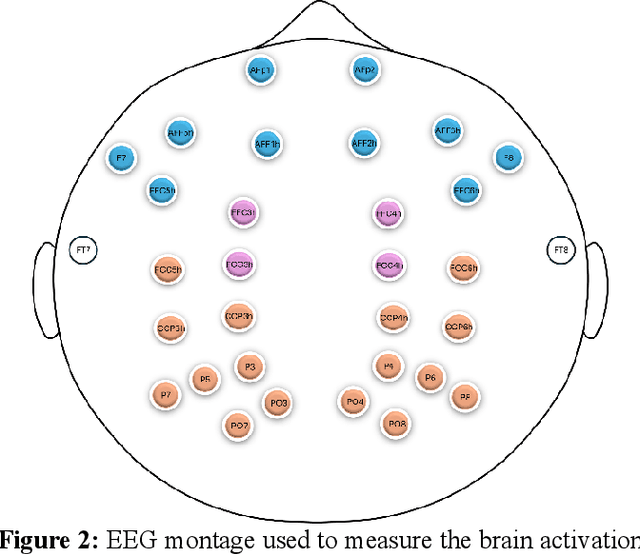

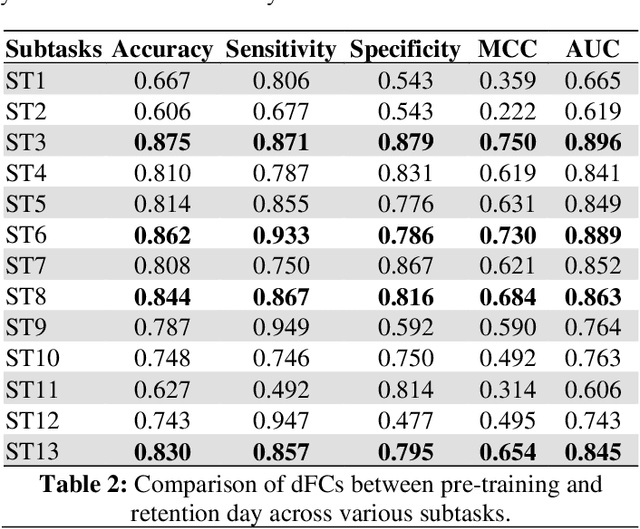

Dynamic directed functional connectivity as a neural biomarker for objective motor skill assessment

Feb 19, 2025

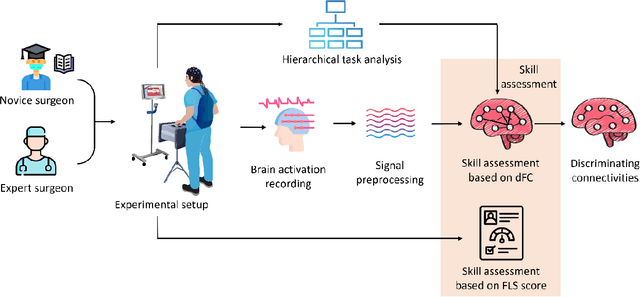

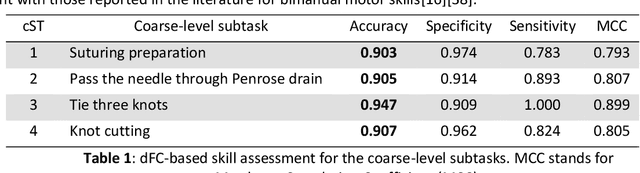

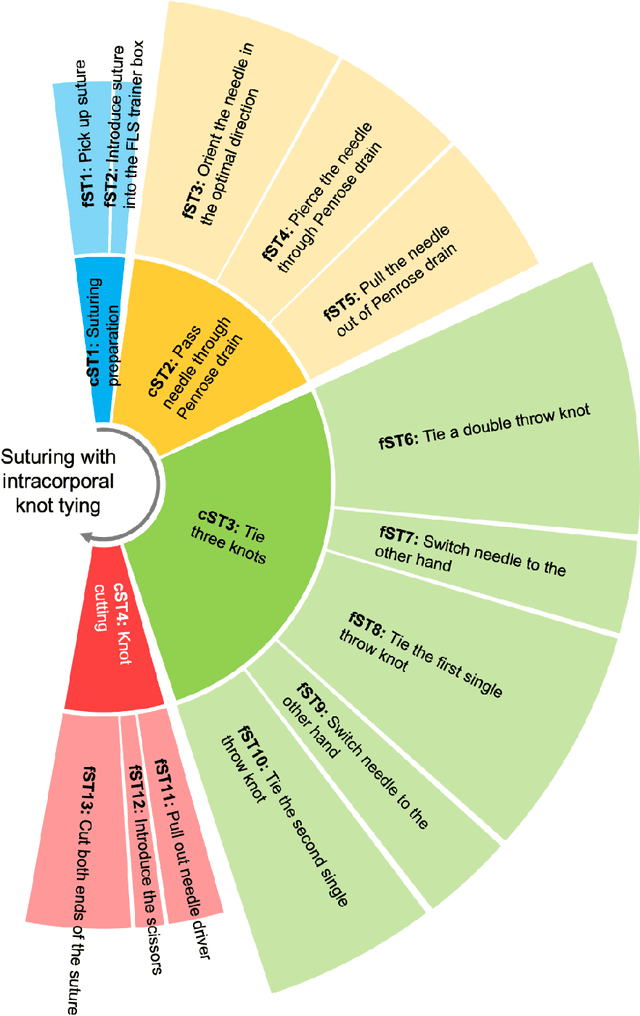

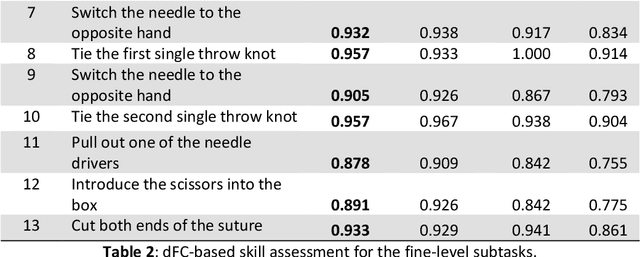

Abstract:Objective motor skill assessment plays a critical role in fields such as surgery, where proficiency is vital for certification and patient safety. Existing assessment methods, however, rely heavily on subjective human judgment, which introduces bias and limits reproducibility. While recent efforts have leveraged kinematic data and neural imaging to provide more objective evaluations, these approaches often overlook the dynamic neural mechanisms that differentiate expert and novice performance. This study proposes a novel method for motor skill assessment based on dynamic directed functional connectivity (dFC) as a neural biomarker. By using electroencephalography (EEG) to capture brain dynamics and employing an attention-based Long Short-Term Memory (LSTM) model for non-linear Granger causality analysis, we compute dFC among key brain regions involved in psychomotor tasks. Coupled with hierarchical task analysis (HTA), our approach enables subtask-level evaluation of motor skills, offering detailed insights into neural coordination that underpins expert proficiency. A convolutional neural network (CNN) is then used to classify skill levels, achieving greater accuracy and specificity than established performance metrics in laparoscopic surgery. This methodology provides a reliable, objective framework for assessing motor skills, contributing to the development of tailored training protocols and enhancing the certification process.
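To make the idea of directed connectivity concrete, the sketch below computes a classical linear Granger causality index between two signals. This is a deliberately simpler stand-in: the abstract describes a non-linear estimate obtained with an attention-based LSTM, which is not reproduced here. The function names, the model order, and the synthetic signals are all assumptions for illustration.

```python
import numpy as np


def lagged(x, order):
    """Stack columns x[t-1], ..., x[t-order] for t = order .. len(x) - 1."""
    n = len(x)
    return np.column_stack([x[order - k : n - k] for k in range(1, order + 1)])


def granger_index(source, target, order=2):
    """Linear Granger index ln(var_restricted / var_full) for source -> target.

    The restricted model predicts the target from its own past; the full
    model also sees the past of the source. A larger index means the
    source's past helps more in predicting the target.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    y = target[order:]
    own = lagged(target, order)
    both = np.hstack([own, lagged(source, order)])

    def residual_variance(regressors):
        design = np.column_stack([np.ones(len(y)), regressors])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        return np.mean((y - design @ coef) ** 2)

    return np.log(residual_variance(own) / residual_variance(both))


# Synthetic example (not EEG): y is driven by the previous sample of x.
rng = np.random.default_rng(0)
x = rng.standard_normal(500)
y = np.zeros(500)
y[1:] = 0.8 * x[:-1] + 0.1 * rng.standard_normal(499)

forward = granger_index(x, y)   # x -> y
backward = granger_index(y, x)  # y -> x
```

Because the index is computed separately for each direction, the pair `(forward, backward)` captures both the strength and the direction of influence, which is the property that distinguishes directed from undirected connectivity.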

Deep Learning for Video-Based Assessment of Endotracheal Intubation Skills

Apr 17, 2024

Abstract:Endotracheal intubation (ETI) is an emergency procedure performed in civilian and combat casualty care settings to establish an airway. Objective and automated assessment of ETI skills is essential for the training and certification of healthcare providers. However, the current approach is based on manual feedback by an expert, which is subjective, time- and resource-intensive, and prone to poor inter-rater reliability and halo effects. This work proposes a framework to evaluate ETI skills using single and multi-view videos. The framework consists of two stages. First, a 2D convolutional autoencoder (AE) and a pre-trained self-supervision network extract features from videos. Second, a 1D convolutional network enhanced with a cross-view attention module takes the features from the AE as input and outputs predictions for skill evaluation. The ETI datasets were collected in two phases. In the first phase, ETI is performed by two subject cohorts: Experts and Novices. In the second phase, novice subjects perform ETI under time pressure, and the outcome is either Successful or Unsuccessful. A third dataset of videos from a single head-mounted camera for Experts and Novices is also analyzed. The study achieved an accuracy of 100% in identifying Expert/Novice trials in the initial phase. In the second phase, the model showed 85% accuracy in classifying Successful/Unsuccessful procedures. Using head-mounted cameras alone, the model showed 96% accuracy on Expert and Novice classification while maintaining an accuracy of 85% on classifying Successful and Unsuccessful procedures. In addition, GradCAMs are presented to explain the differences between Expert and Novice behavior and Successful and Unsuccessful trials. The approach offers a reliable and objective method for automated assessment of ETI skills.
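The second stage above combines per-view features through a cross-view attention module, but the abstract gives no details of that module. The sketch below shows one generic way attention can fuse features from several camera views: a softmax over views, computed per time step from scaled dot-product scores against a query vector. The `fuse_views` function, the query vector, and the array shapes are hypothetical and are not taken from the paper.

```python
import numpy as np


def softmax(z, axis):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def fuse_views(features, query):
    """Fuse multi-view features with attention over the view axis.

    features: array of shape (V, T, D) for V views, T time steps, D channels.
    query:    array of shape (D,), standing in for a learned parameter.
    Returns the fused (T, D) features and the (V, T) attention weights.
    """
    scores = features @ query / np.sqrt(features.shape[-1])  # (V, T)
    weights = softmax(scores, axis=0)                         # sums to 1 over views
    fused = (weights[..., None] * features).sum(axis=0)       # (T, D)
    return fused, weights


# Hypothetical usage with random features for 3 views.
rng = np.random.default_rng(0)
features = rng.standard_normal((3, 10, 8))
query = rng.standard_normal(8)
fused, weights = fuse_views(features, query)
```

With a zero query every view receives equal weight, so the fusion reduces to a plain average over views; a trained query would instead favor whichever view is most informative at each time step.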

A deep learning model for burn depth classification using ultrasound imaging

Mar 29, 2022

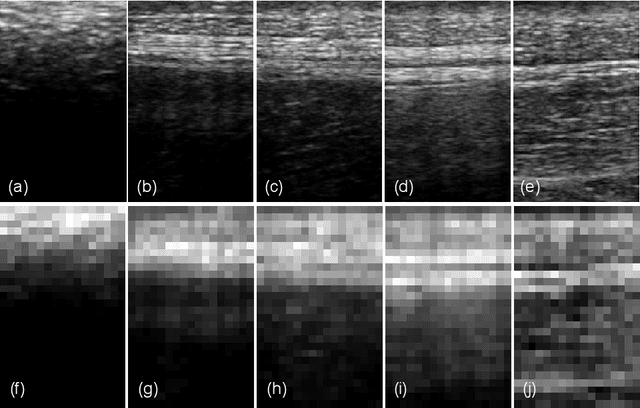

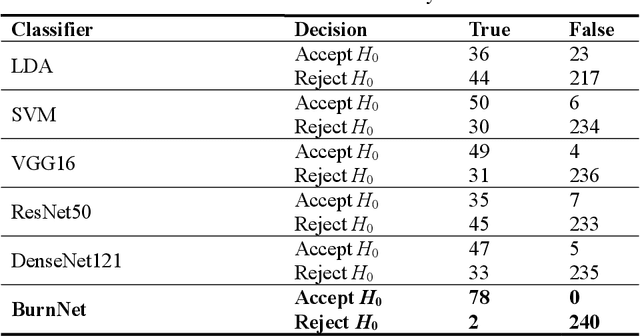

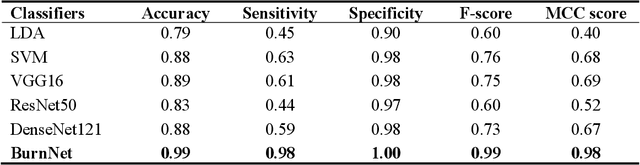

Abstract:Identification of burn depth with sufficient accuracy is a challenging problem. This paper presents a deep convolutional neural network to classify burn depth based on altered tissue morphology of burned skin manifested as texture patterns in the ultrasound images. The network first learns a low-dimensional manifold of the unburned skin images using an encoder-decoder architecture that reconstructs it from ultrasound images of burned skin. The encoder is then re-trained to classify burn depths. The encoder-decoder network is trained using a dataset composed of B-mode ultrasound images of unburned and burned ex vivo porcine skin samples. The classifier is developed using B-mode images of burned in situ skin samples obtained from freshly euthanized postmortem pigs. The performance metrics obtained from 20-fold cross-validation show that the model can identify deep-partial thickness burns, which are the most difficult to diagnose clinically, with 99% accuracy, 98% sensitivity, and 100% specificity. The diagnostic accuracy of the classifier is further illustrated by the high area under the curve values of 0.99 and 0.95, respectively, for the receiver operating characteristic and precision-recall curves. A post hoc explanation indicates that the classifier activates the discriminative textural features in the B-mode images for burn classification. The proposed model has the potential for clinical utility in assisting the clinical assessment of burn depths using a widely available clinical imaging device.
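For readers less familiar with the reported metrics, the sketch below computes accuracy, sensitivity, and specificity for a binary classifier from true and predicted labels using the standard definitions. The labels are made up for illustration and have no connection to the study's data; `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, sensitivity, and specificity for 0/1 labels.

    sensitivity = TP / (TP + FN), specificity = TN / (TN + FP).
    A ratio with a zero denominator is reported as NaN.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Made-up labels: 1 = positive class, 0 = negative class.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 0, 1, 1]
metrics = binary_metrics(y_true, y_pred)
```

In a k-fold setting such as the 20-fold cross-validation mentioned above, these quantities would typically be computed per fold on the held-out samples and then averaged or pooled.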

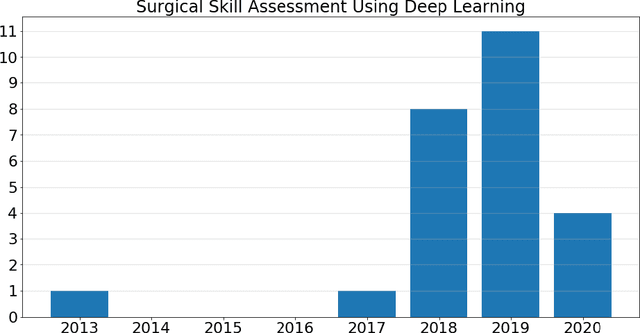

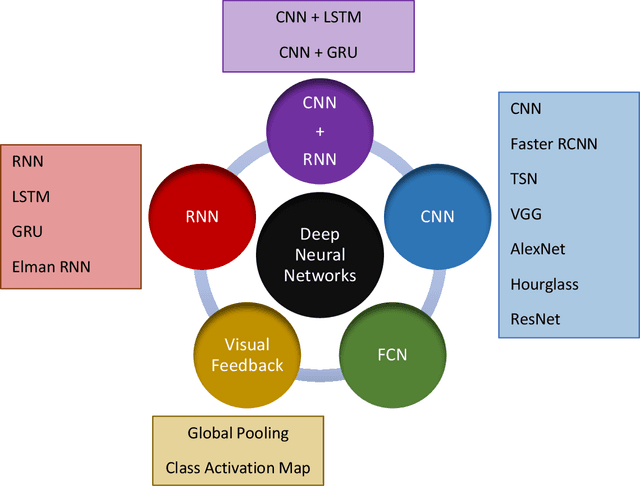

Deep Neural Networks for the Assessment of Surgical Skills: A Systematic Review

Mar 03, 2021

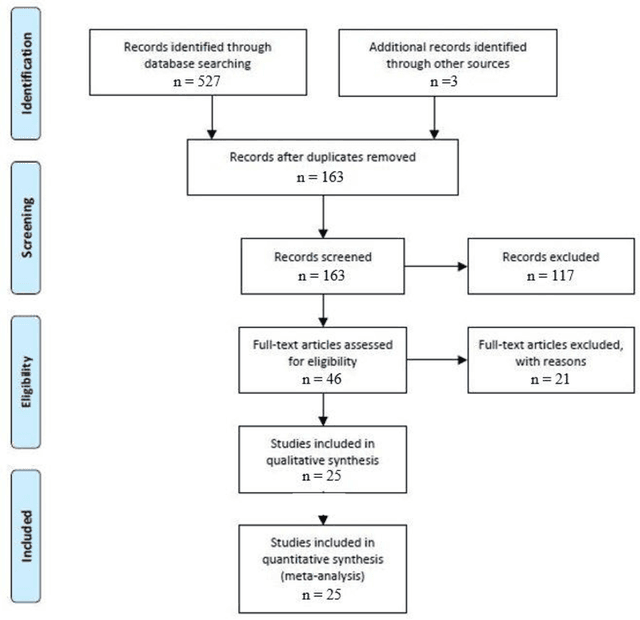

Abstract:Surgical training in medical school residency programs has followed the apprenticeship model. The learning and assessment process is inherently subjective and time-consuming. Thus, there is a need for objective methods to assess surgical skills. Here, we use the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines to systematically survey the literature on the use of Deep Neural Networks for automated and objective surgical skill assessment, with a focus on kinematic data as putative markers of surgical competency. There is considerable recent interest in deep neural networks (DNN) due to the availability of powerful algorithms, multiple datasets, some of which are publicly available, as well as efficient computational hardware to train and host them. We have reviewed 530 papers, of which we selected 25 for this systematic review. Based on this review, we concluded that DNNs are powerful tools for automated, objective surgical skill assessment using both kinematic and video data. The field would benefit from large, publicly available, annotated datasets that are representative of the surgical trainee and expert demographics and multimodal data beyond kinematics and videos.
