Rahul Rahul

Beyond Performance Scores: Directed Functional Connectivity as a Brain-Based Biomarker for Motor Skill Learning and Retention

Feb 20, 2025

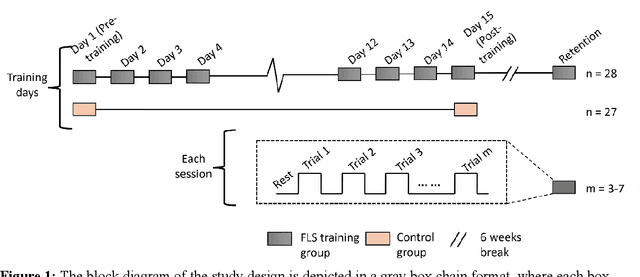

Abstract: Motor skill acquisition in fields like surgery, robotics, and sports involves learning complex task sequences through extensive training. Traditional performance metrics, like execution time and error rates, offer limited insight as they fail to capture the neural mechanisms underlying skill learning and retention. This study introduces directed functional connectivity (dFC), derived from electroencephalography (EEG), as a novel brain-based biomarker for assessing motor skill learning and retention. For the first time, dFC is applied as a biomarker to map the stages of the Fitts and Posner motor learning model, offering new insights into the neural mechanisms underlying skill acquisition and retention. Unlike traditional measures, it captures both the strength and direction of neural information flow, providing a comprehensive understanding of neural adaptations across different learning stages. The analysis demonstrates that dFC can effectively identify and track the progression through various stages of the Fitts and Posner model. Furthermore, its stability over a six-week washout period highlights its utility in monitoring long-term retention. No significant changes in dFC were observed in a control group, confirming that the observed neural adaptations were specific to training and not due to external factors. By offering a granular view of the learning process at the group and individual levels, dFC facilitates the development of personalized, targeted training protocols aimed at enhancing outcomes in fields where precision and long-term retention are critical, such as surgical education. These findings underscore the value of dFC as a robust biomarker that complements traditional performance metrics, providing a deeper understanding of motor skill learning and retention.

Dynamic directed functional connectivity as a neural biomarker for objective motor skill assessment

Feb 19, 2025

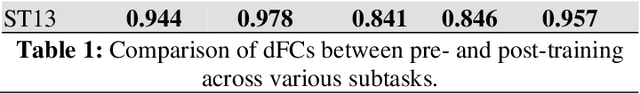

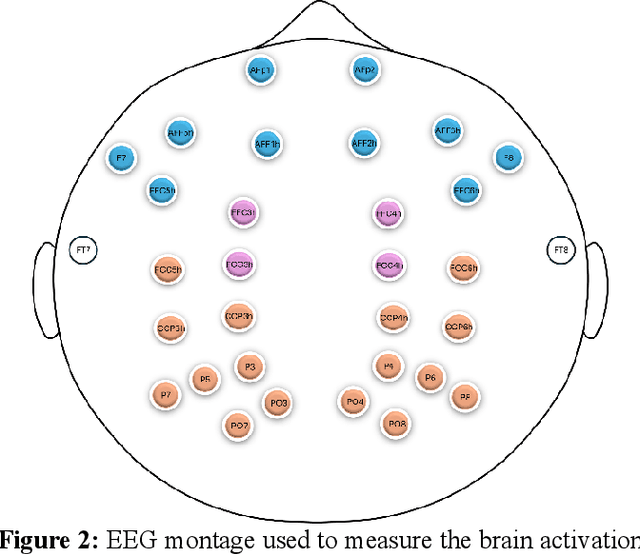

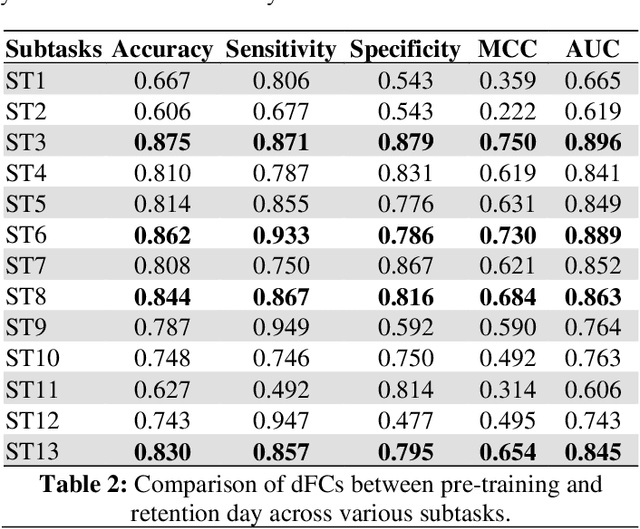

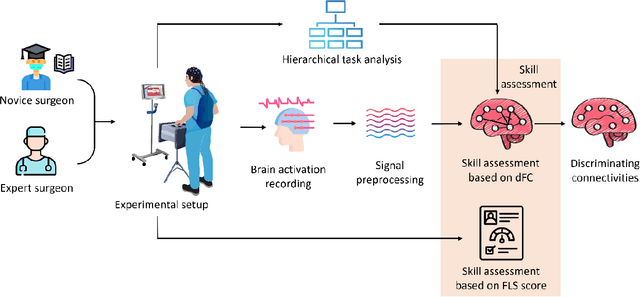

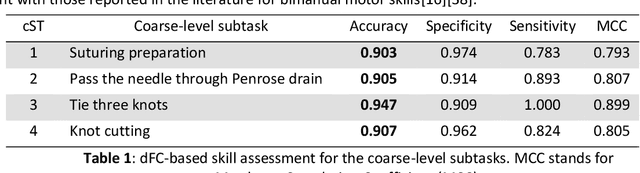

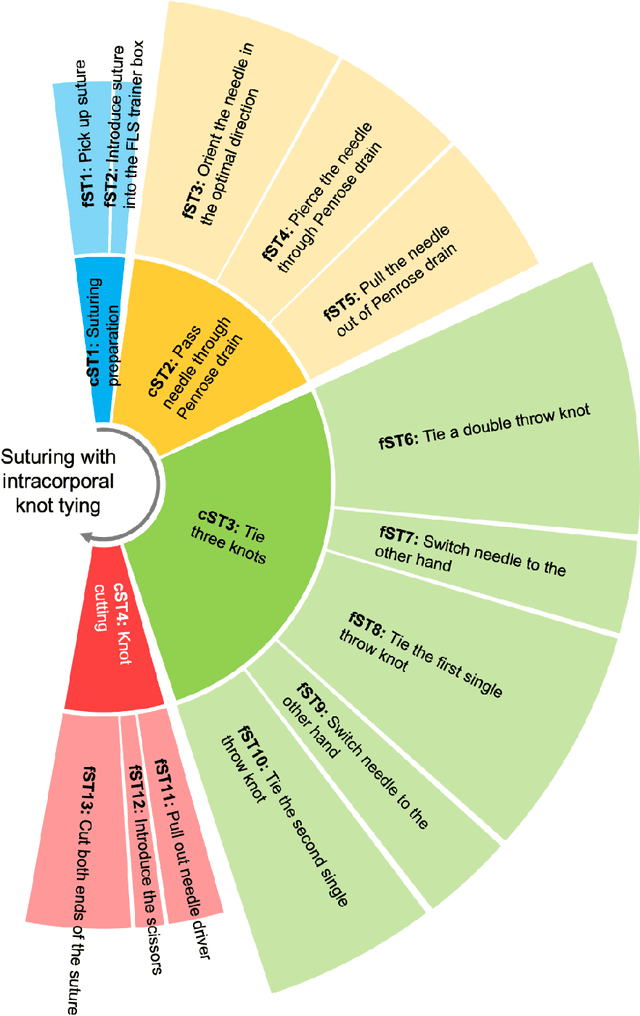

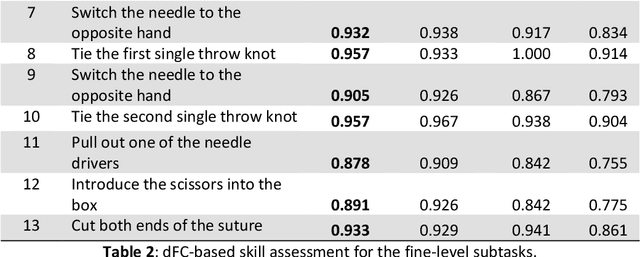

Abstract: Objective motor skill assessment plays a critical role in fields such as surgery, where proficiency is vital for certification and patient safety. Existing assessment methods, however, rely heavily on subjective human judgment, which introduces bias and limits reproducibility. While recent efforts have leveraged kinematic data and neural imaging to provide more objective evaluations, these approaches often overlook the dynamic neural mechanisms that differentiate expert and novice performance. This study proposes a novel method for motor skill assessment based on dynamic directed functional connectivity (dFC) as a neural biomarker. By using electroencephalography (EEG) to capture brain dynamics and employing an attention-based Long Short-Term Memory (LSTM) model for non-linear Granger causality analysis, we compute dFC among key brain regions involved in psychomotor tasks. Coupled with hierarchical task analysis (HTA), our approach enables subtask-level evaluation of motor skills, offering detailed insights into neural coordination that underpins expert proficiency. A convolutional neural network (CNN) is then used to classify skill levels, achieving greater accuracy and specificity than established performance metrics in laparoscopic surgery. This methodology provides a reliable, objective framework for assessing motor skills, contributing to the development of tailored training protocols and enhancing the certification process.
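To make the idea of directed connectivity concrete, here is a minimal sketch of classical linear Granger causality with a single lag, written in plain Python. This is a simplified stand-in and not the attention-based LSTM method the abstract describes: the lag order, the simulated signals, and all function names (`granger_index`, `_rss_one`, `_rss_two`) are assumptions made for illustration only.

```python
import math
import random


def _rss_one(y, a):
    """Residual sum of squares when y is regressed on one regressor a."""
    beta = sum(ai * yi for ai, yi in zip(a, y)) / sum(ai * ai for ai in a)
    return sum((yi - beta * ai) ** 2 for ai, yi in zip(a, y))


def _rss_two(y, a, b):
    """Residual sum of squares when y is regressed on a and b (2x2 normal equations)."""
    saa = sum(ai * ai for ai in a)
    sbb = sum(bi * bi for bi in b)
    sab = sum(ai * bi for ai, bi in zip(a, b))
    say = sum(ai * yi for ai, yi in zip(a, y))
    sby = sum(bi * yi for bi, yi in zip(b, y))
    det = saa * sbb - sab * sab
    beta_a = (say * sbb - sby * sab) / det
    beta_b = (sby * saa - say * sab) / det
    return sum((yi - beta_a * ai - beta_b * bi) ** 2 for ai, bi, yi in zip(a, b, y))


def granger_index(source, target):
    """ln(RSS_restricted / RSS_full) for lag-1 models of the target signal.

    The restricted model uses only the target's own past; the full model
    adds the source's past. A larger value means the source's past improves
    the prediction of the target, i.e. stronger source -> target influence.
    """
    def center(series):
        mean = sum(series) / len(series)
        return [value - mean for value in series]

    src, tgt = center(source), center(target)
    present, own_past, src_past = tgt[1:], tgt[:-1], src[:-1]
    return math.log(_rss_one(present, own_past) / _rss_two(present, own_past, src_past))


# Hypothetical two-channel simulation: x drives y, but y does not drive x.
rng = random.Random(0)
x, y = [0.0], [0.0]
for _ in range(500):
    x_next = 0.5 * x[-1] + rng.gauss(0, 1)
    y_next = 0.5 * y[-1] + 0.8 * x[-1] + rng.gauss(0, 1)
    x.append(x_next)
    y.append(y_next)

gc_x_to_y = granger_index(x, y)  # expected to be clearly positive
gc_y_to_x = granger_index(y, x)  # expected to be close to zero
print(f"x -> y: {gc_x_to_y:.3f}, y -> x: {gc_y_to_x:.3f}")
```

The asymmetry between the two indices is what makes the measure directed: swapping source and target gives a different value, unlike correlation-based connectivity.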

Deep Learning for Video-Based Assessment of Endotracheal Intubation Skills

Apr 17, 2024

Abstract: Endotracheal intubation (ETI) is an emergency procedure performed in civilian and combat casualty care settings to establish an airway. Objective and automated assessment of ETI skills is essential for the training and certification of healthcare providers. However, the current approach is based on manual feedback by an expert, which is subjective, time- and resource-intensive, and prone to poor inter-rater reliability and halo effects. This work proposes a framework to evaluate ETI skills using single and multi-view videos. The framework consists of two stages. First, a 2D convolutional autoencoder (AE) and a pre-trained self-supervision network extract features from videos. Second, a 1D convolutional network enhanced with a cross-view attention module takes the features from the AE as input and outputs predictions for skill evaluation. The ETI datasets were collected in two phases. In the first phase, ETI is performed by two subject cohorts: Experts and Novices. In the second phase, novice subjects perform ETI under time pressure, and the outcome is either Successful or Unsuccessful. A third dataset of videos from a single head-mounted camera for Experts and Novices is also analyzed. The study achieved an accuracy of 100% in identifying Expert/Novice trials in the initial phase. In the second phase, the model showed 85% accuracy in classifying Successful/Unsuccessful procedures. Using head-mounted cameras alone, the model showed a 96% accuracy on Expert and Novice classification while maintaining an accuracy of 85% on classifying Successful and Unsuccessful procedures. In addition, GradCAMs are presented to explain the differences between Expert and Novice behavior and Successful and Unsuccessful trials. The approach offers a reliable and objective method for automated assessment of ETI skills.
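The abstract does not specify how its cross-view attention module is built. As a rough illustration of the general idea only, the sketch below applies plain scaled dot-product attention so that per-frame features from one camera view attend over features from a second view. The function names, the absence of learned projections, and the toy feature values are all assumptions, not the paper's design.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_view_attention(queries, keys_values):
    """Fuse two views: each query frame becomes a weighted mix of the other view's frames.

    queries:      list of feature vectors from view A (one per frame)
    keys_values:  list of feature vectors from view B, used as both keys and values
    """
    dim = len(queries[0])
    fused = []
    for q in queries:
        # Similarity of this view-A frame to every view-B frame.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys_values]
        weights = softmax(scores)
        # Weighted average of view-B features.
        fused.append([sum(w * v[j] for w, v in zip(weights, keys_values)) for j in range(dim)])
    return fused


# Hypothetical 2-frame, 2-dimensional features for two camera views.
view_a = [[1.0, 0.0], [0.0, 1.0]]
view_b = [[10.0, 0.0], [0.0, 10.0]]
fused = cross_view_attention(view_a, view_b)
print(fused)
```

In a trained model the queries, keys, and values would normally pass through learned linear projections first; they are omitted here to keep the sketch self-contained.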

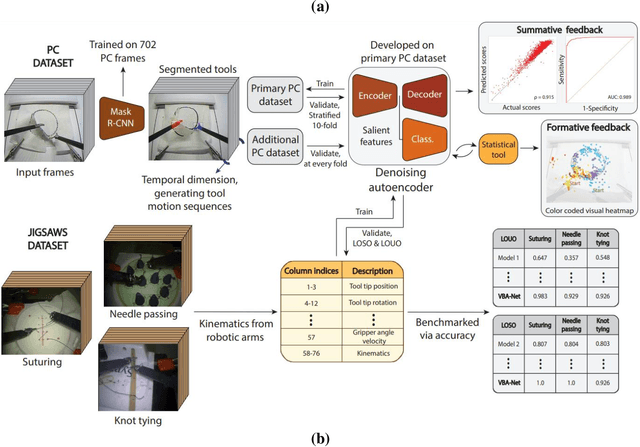

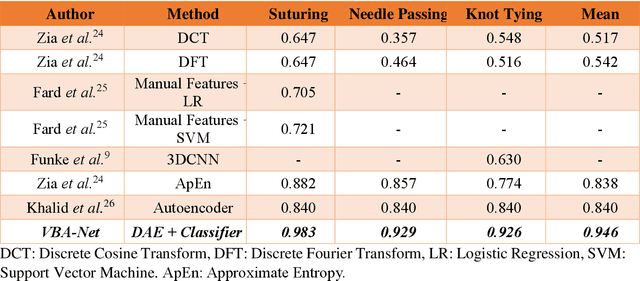

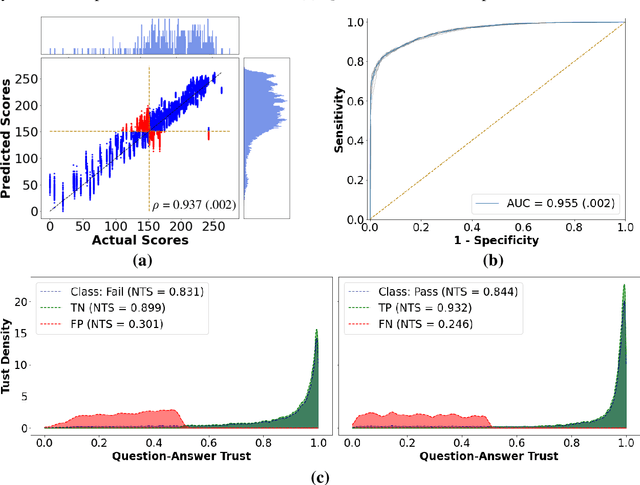

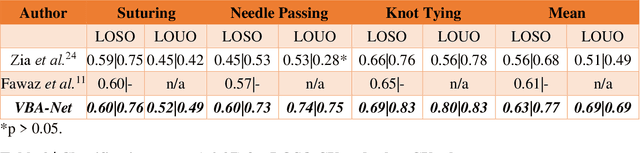

Video-based Formative and Summative Assessment of Surgical Tasks using Deep Learning

Mar 17, 2022

Abstract: To ensure satisfactory clinical outcomes, surgical skill assessment must be objective, time-efficient, and preferably automated, none of which is currently achievable. Video-based assessment (VBA) is being deployed in intraoperative and simulation settings to evaluate technical skill execution. However, VBA remains manual and time-intensive and prone to subjective interpretation and poor inter-rater reliability. Herein, we propose a deep learning (DL) model that can automatically and objectively provide a high-stakes summative assessment of surgical skill execution based on video feeds and low-stakes formative assessment to guide surgical skill acquisition. Formative assessment is generated using heatmaps of visual features that correlate with surgical performance. Hence, the DL model paves the way to the quantitative and reproducible evaluation of surgical tasks from videos with the potential for broad dissemination in surgical training, certification, and credentialing.
