Basiel Makled

A deep learning model for burn depth classification using ultrasound imaging

Mar 29, 2022

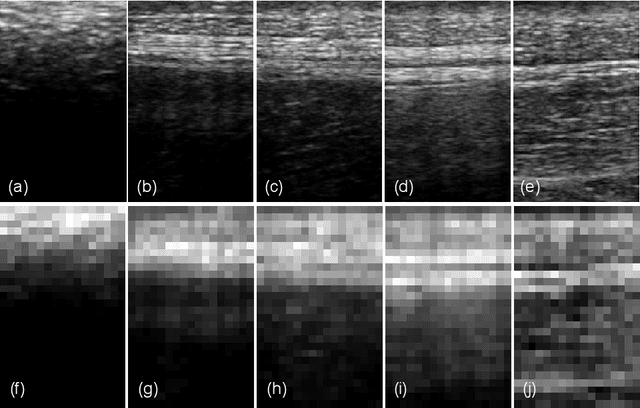

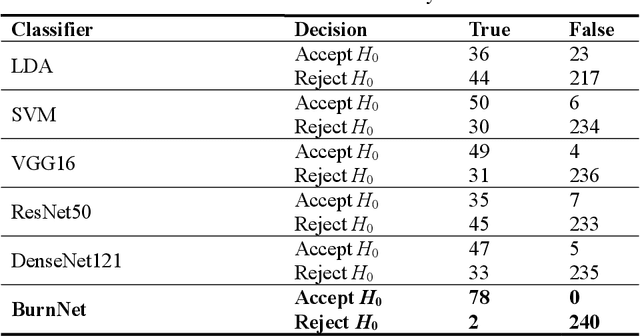

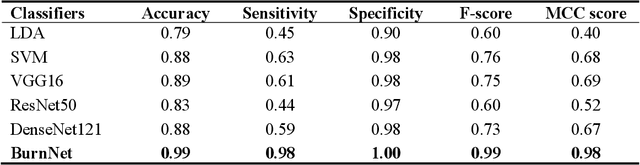

Abstract: Identification of burn depth with sufficient accuracy is a challenging problem. This paper presents a deep convolutional neural network to classify burn depth based on altered tissue morphology of burned skin manifested as texture patterns in the ultrasound images. The network first learns a low-dimensional manifold of the unburned skin images using an encoder-decoder architecture that reconstructs it from ultrasound images of burned skin. The encoder is then re-trained to classify burn depths. The encoder-decoder network is trained using a dataset composed of B-mode ultrasound images of unburned and burned ex vivo porcine skin samples. The classifier is developed using B-mode images of burned in situ skin samples obtained from freshly euthanized postmortem pigs. The performance metrics obtained from 20-fold cross-validation show that the model can identify deep partial-thickness burns, which are the most difficult to diagnose clinically, with 99% accuracy, 98% sensitivity, and 100% specificity. The diagnostic accuracy of the classifier is further illustrated by the high area under the curve values of 0.99 and 0.95, respectively, for the receiver operating characteristic and precision-recall curves. A post hoc explanation indicates that the classifier activates the discriminative textural features in the B-mode images for burn classification. The proposed model has the potential to assist the clinical assessment of burn depths using a widely available imaging device.
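For readers unfamiliar with the metrics quoted in the abstract, the following is a minimal sketch of how accuracy, sensitivity, and specificity follow from one-vs-rest confusion counts. The function name `binary_metrics` and the counts are hypothetical, chosen only so the arithmetic lands on percentages like those quoted; they are not the paper's data or code.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float, float]:
    """Return (accuracy, sensitivity, specificity) from confusion counts.

    tp/fn: positive-class samples predicted correctly / missed.
    tn/fp: negative-class samples predicted correctly / falsely flagged.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total       # fraction of all samples classified correctly
    sensitivity = tp / (tp + fn)       # true positive rate (recall)
    specificity = tn / (tn + fp)       # true negative rate
    return accuracy, sensitivity, specificity


# Hypothetical counts for illustration only (NOT taken from the paper).
acc, sens, spec = binary_metrics(tp=49, fp=0, tn=50, fn=1)
print(f"accuracy={acc:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

In a k-fold cross-validation setting, such metrics would typically be computed per fold and then averaged; how the authors aggregated their folds is not stated in the abstract.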

Deep Neural Networks for the Assessment of Surgical Skills: A Systematic Review

Mar 03, 2021

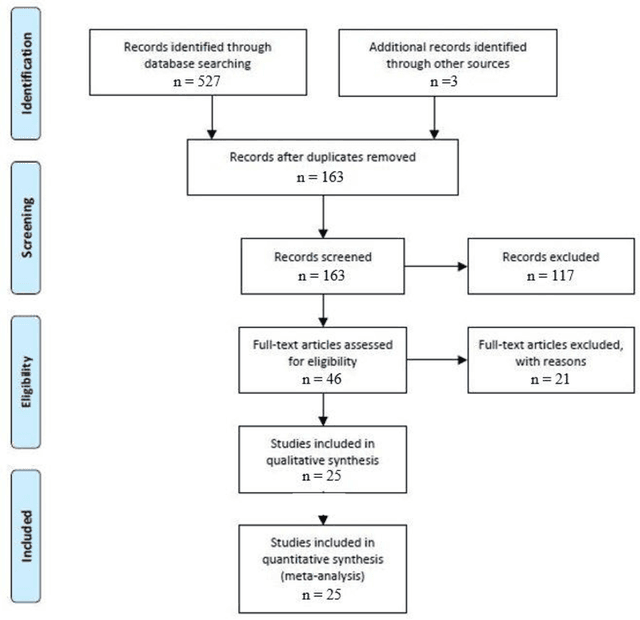

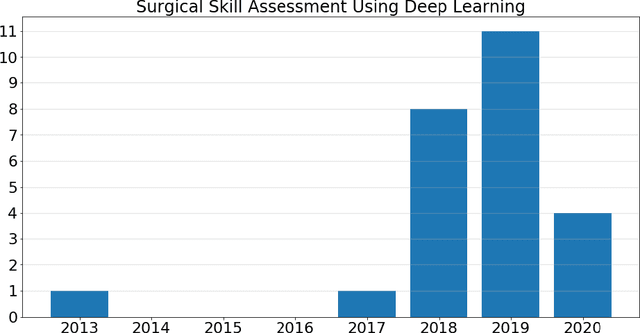

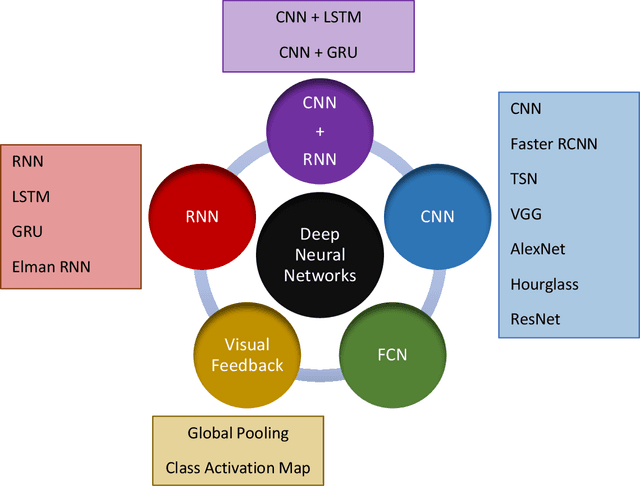

Abstract: Surgical training in medical school residency programs has followed the apprenticeship model. The learning and assessment process is inherently subjective and time-consuming. Thus, there is a need for objective methods to assess surgical skills. Here, we use the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines to systematically survey the literature on the use of deep neural networks (DNNs) for automated and objective surgical skill assessment, with a focus on kinematic data as putative markers of surgical competency. There is considerable recent interest in DNNs due to the availability of powerful algorithms, multiple datasets, some of which are publicly available, and efficient computational hardware to train and host them. We reviewed 530 papers, of which we selected 25 for this systematic review. Based on this review, we conclude that DNNs are powerful tools for automated, objective surgical skill assessment using both kinematic and video data. The field would benefit from large, publicly available, annotated datasets that are representative of the surgical trainee and expert demographics, and from multimodal data beyond kinematics and videos.
