J. Daniel Griffith

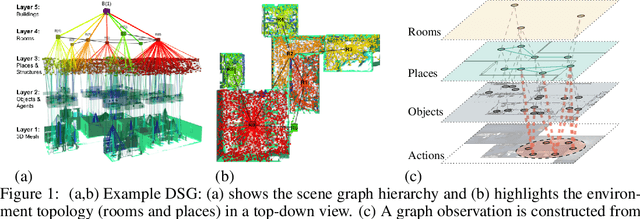

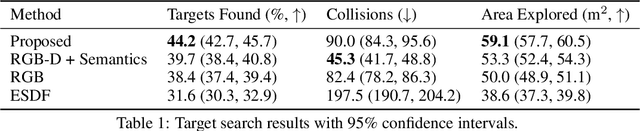

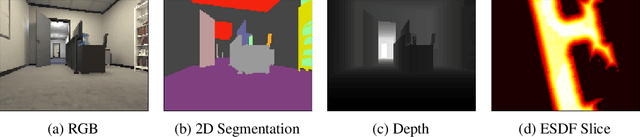

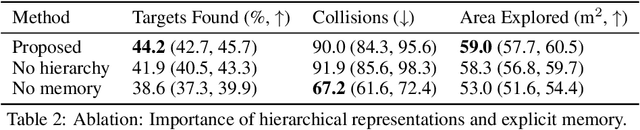

Hierarchical Representations and Explicit Memory: Learning Effective Navigation Policies on 3D Scene Graphs using Graph Neural Networks

Aug 02, 2021

Abstract: Representations are crucial for a robot to learn effective navigation policies. Recent work has shown that mid-level perceptual abstractions, such as depth estimates or 2D semantic segmentation, lead to more effective policies when provided as observations in place of raw sensor data (e.g., RGB images). However, such policies must still learn latent three-dimensional scene properties from mid-level abstractions. In contrast, high-level, hierarchical representations such as 3D scene graphs explicitly provide a scene's geometry, topology, and semantics, making them compelling representations for navigation. In this work, we present a reinforcement learning framework that leverages high-level hierarchical representations to learn navigation policies. Towards this goal, we propose a graph neural network architecture and show how to embed a 3D scene graph into an agent-centric feature space, which enables the robot to learn policies for low-level action in an end-to-end manner. For each node in the scene graph, our method uses features that capture occupancy and semantic content, while explicitly retaining memory of the robot trajectory. We demonstrate the effectiveness of our method against commonly used visuomotor policies in a challenging object search task. These experiments and supporting ablation studies show that our method leads to more effective object search behaviors, exhibits improved long-term memory, and successfully leverages hierarchical information to guide its navigation objectives.

Bridging Scene Understanding and Task Execution with Flexible Simulation Environments

Nov 20, 2020

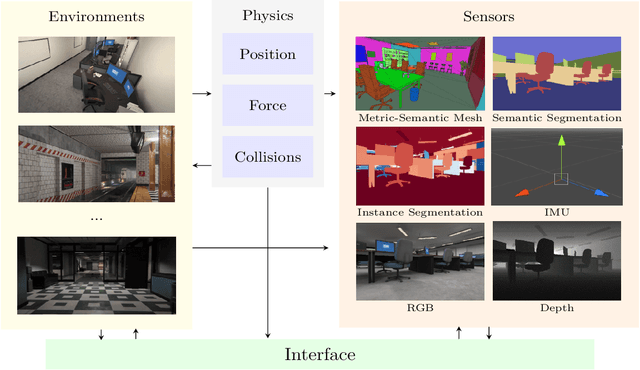

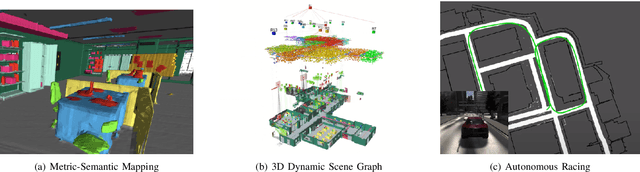

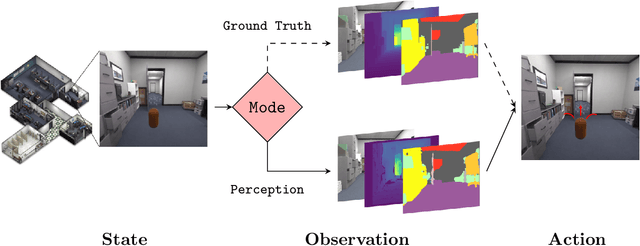

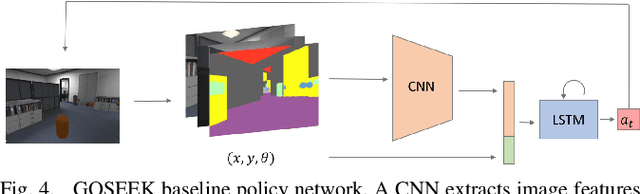

Abstract: Significant progress has been made in scene understanding, which seeks to build 3D, metric, and object-oriented representations of the world. Concurrently, reinforcement learning has made impressive strides, largely enabled by advances in simulation. Comparatively, there has been less focus on simulation for perception algorithms. Simulation is becoming increasingly vital as sophisticated perception approaches such as metric-semantic mapping or 3D dynamic scene graph generation require precise 3D, 2D, and inertial information in an interactive environment. To that end, we present TESSE (Task Execution with Semantic Segmentation Environments), an open source simulator for developing scene understanding and task execution algorithms. TESSE has been used to develop state-of-the-art solutions for metric-semantic mapping and 3D dynamic scene graph generation. Additionally, TESSE served as the platform for the GOSEEK Challenge at the International Conference on Robotics and Automation (ICRA) 2020, an object search competition with an emphasis on reinforcement learning. Code for TESSE is available at https://github.com/MIT-TESSE.

A Comparison of Monte Carlo Tree Search and Mathematical Optimization for Large Scale Dynamic Resource Allocation

May 21, 2014

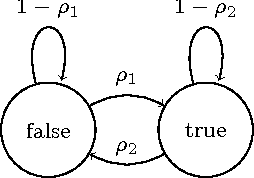

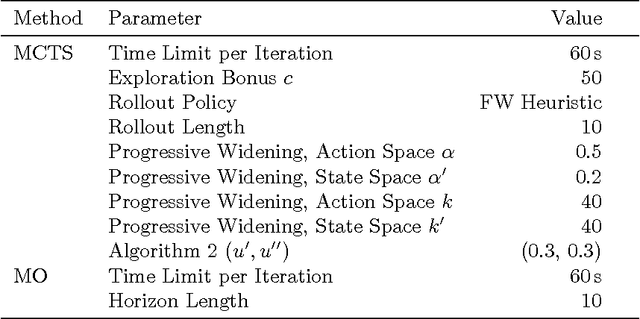

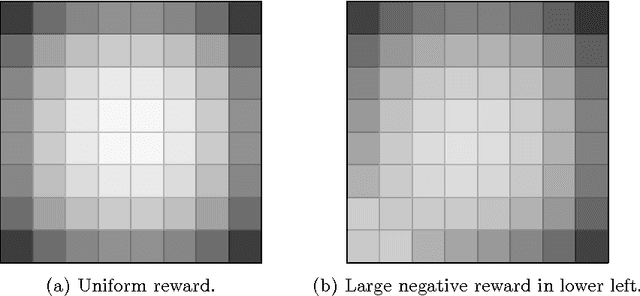

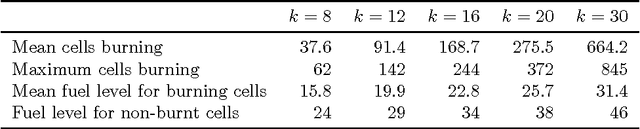

Abstract: Dynamic resource allocation (DRA) problems are an important class of dynamic stochastic optimization problems that arise in a variety of important real-world applications. DRA problems are notoriously difficult to solve to optimality since they frequently combine stochastic elements with intractably large state and action spaces. Although the artificial intelligence and operations research communities have independently proposed two successful frameworks for solving dynamic stochastic optimization problems, Monte Carlo tree search (MCTS) and mathematical optimization (MO), respectively, the relative merits of these two approaches are not well understood. In this paper, we adapt both MCTS and MO to a problem inspired by tactical wildfire management and undertake an extensive computational study comparing the two methods on large-scale instances in terms of both the state and the action spaces. We show that both methods are able to greatly improve on a baseline, problem-specific heuristic. On smaller instances, the MCTS and MO approaches perform comparably, but the MO approach outperforms MCTS as the size of the problem increases for a fixed computational budget.
