Robert Moss

A Comparison of Monte Carlo Tree Search and Mathematical Optimization for Large Scale Dynamic Resource Allocation

May 21, 2014

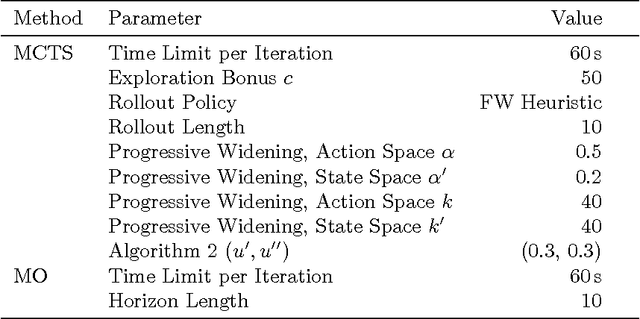

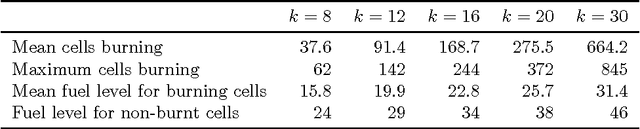

Abstract: Dynamic resource allocation (DRA) problems are an important class of dynamic stochastic optimization problems that arise in a variety of real-world applications. DRA problems are notoriously difficult to solve to optimality since they frequently combine stochastic elements with intractably large state and action spaces. Although the artificial intelligence and operations research communities have independently proposed two successful frameworks for solving dynamic stochastic optimization problems, Monte Carlo tree search (MCTS) and mathematical optimization (MO), respectively, the relative merits of these two approaches are not well understood. In this paper, we adapt both MCTS and MO to a problem inspired by tactical wildfire management and undertake an extensive computational study comparing the two methods on instances that are large in terms of both the state and the action spaces. We show that both methods greatly improve on a baseline, problem-specific heuristic. On smaller instances, the MCTS and MO approaches perform comparably, but for a fixed computational budget the MO approach outperforms MCTS as the size of the problem increases.
