Jörg Krüger

Feeling Machines: Ethics, Culture, and the Rise of Emotional AI

Jun 14, 2025

Abstract:This paper examines the growing presence of emotionally responsive artificial intelligence through a critical and interdisciplinary lens. Bringing together the voices of early-career researchers from multiple fields, it explores how AI systems that simulate or interpret human emotions are reshaping our interactions in areas such as education, healthcare, mental health, caregiving, and digital life. The analysis is structured around four central themes: the ethical implications of emotional AI, the cultural dynamics of human-machine interaction, the risks and opportunities for vulnerable populations, and the emerging regulatory, design, and technical considerations. The authors highlight the potential of affective AI to support mental well-being, enhance learning, and reduce loneliness, as well as the risks of emotional manipulation, over-reliance, misrepresentation, and cultural bias. Key challenges include simulating empathy without genuine understanding, encoding dominant sociocultural norms into AI systems, and insufficient safeguards for individuals in sensitive or high-risk contexts. Special attention is given to children, elderly users, and individuals with mental health challenges, who may interact with AI in emotionally significant ways without the cognitive or legal protections needed to navigate such engagements safely. The report concludes with ten recommendations, including the need for transparency, certification frameworks, region-specific fine-tuning, human oversight, and longitudinal research. A curated supplementary section provides practical tools, models, and datasets to support further work in this domain.

Physical Annotation for Automated Optical Inspection: A Concept for In-Situ, Pointer-Based Trainingdata Generation

Jun 05, 2025

Abstract:This paper introduces a novel physical annotation system designed to generate training data for automated optical inspection. The system uses pointer-based in-situ interaction to transfer the valuable expertise of trained inspection personnel directly into a machine learning (ML) training pipeline. Unlike conventional screen-based annotation methods, our system captures physical trajectories and contours directly on the object, providing a more intuitive and efficient way to label data. The core technology uses calibrated, tracked pointers to accurately record user input and transform these spatial interactions into standardised annotation formats that are compatible with open-source annotation software. Additionally, a simple projector-based interface projects visual guidance onto the object to assist users during the annotation process, ensuring greater accuracy and consistency. The proposed concept bridges the gap between human expertise and automated data generation, enabling non-IT experts to contribute to the ML training pipeline and preventing the loss of valuable training samples. Preliminary evaluation results confirm the feasibility of capturing detailed annotation trajectories and demonstrate that integration with CVAT streamlines the workflow for subsequent ML tasks. This paper details the system architecture, calibration procedures and interface design, and discusses its potential contribution to future ML data generation for automated optical inspection.
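
As a rough illustration of how a tracked pointer trajectory might become an image-space annotation, the sketch below makes several assumptions. Pointer-tip points are mapped into a calibrated camera frame (hypothetical extrinsics `R`, `t`) and then projected with a pinhole model (hypothetical intrinsics `fx`, `fy`, `cx`, `cy`). The resulting polygon is serialised as an `x,y;x,y` point string, which resembles how CVAT's XML export stores polygon points. All function names are illustrative. The abstract does not describe the system's actual calibration model or export code.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def to_camera(p: Point3, R: Sequence[Sequence[float]], t: Sequence[float]) -> Point3:
    """Transform a tracked pointer-tip point into the camera frame: p_cam = R @ p + t."""
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def project(p_cam: Point3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point to pixel coordinates (u, v)."""
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point lies behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def trajectory_to_polygon(
    trajectory: List[Point3],
    R: Sequence[Sequence[float]],
    t: Sequence[float],
    fx: float, fy: float, cx: float, cy: float,
) -> str:
    """Project a 3D pointer trajectory and serialise it as an 'x,y;x,y;...' string."""
    pixels = [project(to_camera(p, R, t), fx, fy, cx, cy) for p in trajectory]
    return ";".join(f"{u:.2f},{v:.2f}" for u, v in pixels)


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    traj = [(0, 0, 1), (0.1, 0, 1), (0, 0.1, 1)]  # metres, already in front of the camera
    print(trajectory_to_polygon(traj, identity, (0, 0, 0), 500, 500, 320, 240))
    # prints 320.00,240.00;370.00,240.00;320.00,290.00
```

In a real setup, `R` and `t` would come from the tracker-to-camera calibration the abstract mentions. Lens distortion, which this sketch ignores, would also need to be handled before projection.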

Vanishing Depth: A Depth Adapter with Positional Depth Encoding for Generalized Image Encoders

Mar 25, 2025

Abstract:Generalized metric depth understanding is critical for precise vision-guided robotics, yet current state-of-the-art (SOTA) vision encoders do not support it. To address this, we propose Vanishing Depth, a self-supervised training approach that extends pretrained RGB encoders to incorporate metric depth and align it within their feature embeddings. Based on our novel positional depth encoding, we enable stable feature extraction that is invariant to depth density and depth distribution. We achieve performance improvements and SOTA results across a spectrum of relevant RGBD downstream tasks without the need to fine-tune the encoder. Most notably, we achieve 56.05 mIoU on SUN-RGBD segmentation, 88.3 RMSE on VOID depth completion, and 83.8 Top-1 accuracy on NYUv2 scene classification. In 6D object pose estimation, we outperform our predecessors DinoV2, EVA-02, and Omnivore, and we achieve SOTA results for non-finetuned encoders in several related RGBD downstream tasks.

MVIP -- A Dataset and Methods for Application Oriented Multi-View and Multi-Modal Industrial Part Recognition

Feb 21, 2025

Abstract:We present MVIP, a novel dataset for multi-modal, multi-view, application-oriented industrial part recognition. We are the first to combine a calibrated RGBD multi-view dataset with additional object context such as physical properties, natural language, and super-classes. The current portfolio of available datasets offers a wide range of representations for designing and benchmarking related methods. In contrast to existing classification challenges, industrial recognition applications offer controlled multi-modal environments but also pose problems that differ from those of traditional 2D/3D classification challenges. Industrial applications frequently must deal with small or growing amounts of training data, visually similar parts, and varying object sizes, while requiring robust, near-100% top-5 accuracy under cost and time constraints. Current methods tackle such challenges individually, but adopting them directly within industrial applications is complex and requires further research. Our main goal with MVIP is to study and push the transferability of various state-of-the-art methods within related downstream tasks towards an efficient deployment of industrial classifiers. Additionally, with MVIP we intend to advance research on several modality-fusion topics, (automated) synthetic data generation, and complex data sampling, combined in a single application-oriented benchmark.

On the Application of Egocentric Computer Vision to Industrial Scenarios

Jun 11, 2024

Abstract:Egocentric vision aims to capture and analyse the world from the first-person perspective. We explore the possibilities for egocentric wearable devices to improve and enhance industrial use cases w.r.t. data collection, annotation, labelling and downstream applications. This would contribute to easier data collection and allow users to provide additional context. We envision that this approach could serve as a supplement to the traditional industrial Machine Vision workflow. Code, Dataset and related resources will be available at: https://github.com/Vivek9Chavan/EgoVis24

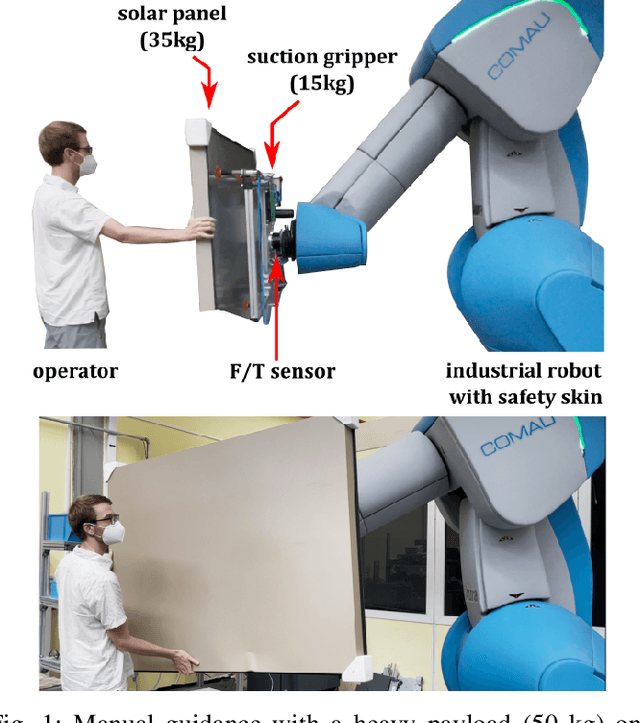

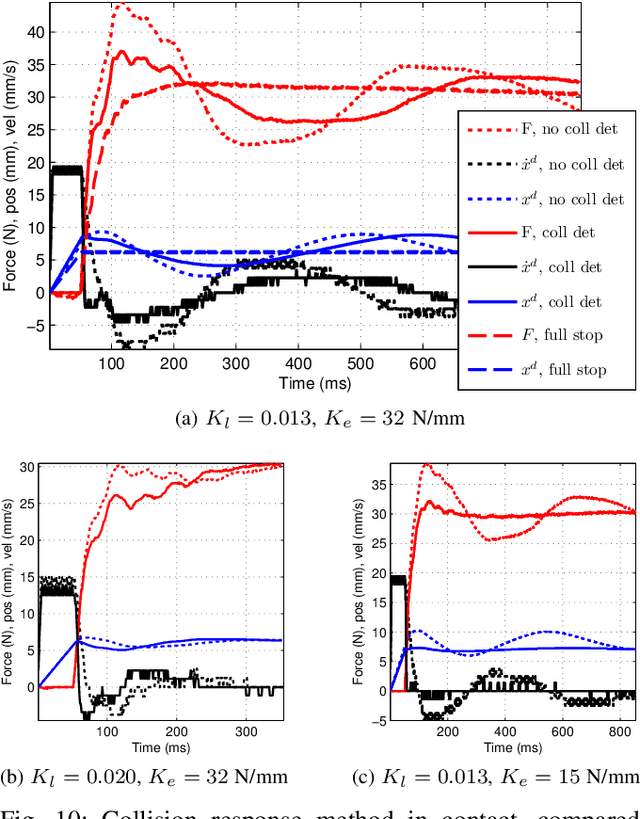

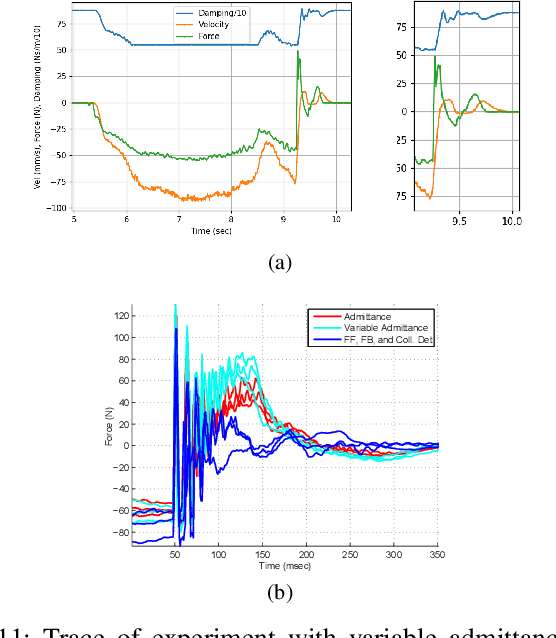

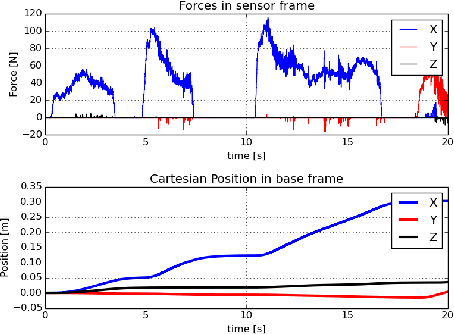

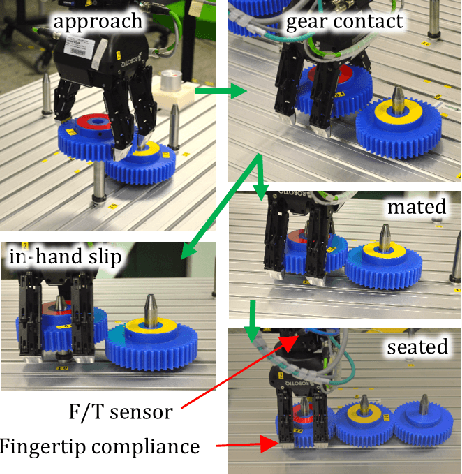

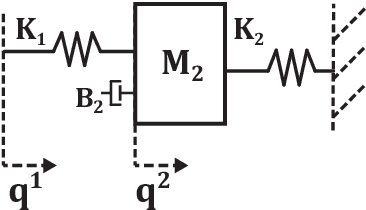

Towards High-Payload Admittance Control for Manual Guidance with Environmental Contact

Feb 02, 2022

Abstract:Force control enables hands-on teaching and physical collaboration, with the potential to improve the ergonomics and flexibility of automation. Established methods for the design of compliance, impedance control, and collision response can achieve free-space stability and acceptable peak contact force on lightweight, lower-payload robots. Scaling collaboration to higher payloads can enable new applications, but it introduces challenges due to the more significant payload dynamics and the use of higher-payload industrial robots. To achieve high-payload manual guidance with contact, this paper proposes and validates new mechatronic design methods: standard admittance control is extended with damping feedback, compliant structures are integrated into the environment, and a contact response method that allows continuous admittance control is proposed. These methods are compared with respect to free-space stability, contact stability, and peak contact force. The resulting methods are then applied to realize two contact-rich tasks with a 16 kg payload (peg-in-hole and slot assembly) and free-space co-manipulation of a 50 kg payload.
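
For orientation, the sketch below shows the *standard* admittance law that the abstract says is being extended. It is a single axis with virtual mass M and virtual damping D, and it turns a measured external force into a velocity command. The class name and parameter values are hypothetical. The paper's damping-feedback extension, compliant environment structures, and contact response method are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Single-axis admittance law M * dv/dt + D * v = F_ext (hypothetical parameters)."""

    mass: float             # virtual mass M in kg
    damping: float          # virtual damping D in N*s/m
    velocity: float = 0.0   # current velocity command in m/s

    def step(self, force: float, dt: float) -> float:
        """Integrate one explicit-Euler step and return the new velocity command.

        The update is numerically stable only while dt < 2 * mass / damping.
        """
        accel = (force - self.damping * self.velocity) / self.mass
        self.velocity += accel * dt
        return self.velocity


if __name__ == "__main__":
    ctrl = Admittance1D(mass=10.0, damping=50.0)
    for _ in range(5000):          # 5 s of a constant 20 N push at 1 kHz
        v = ctrl.step(20.0, 0.001)
    print(round(v, 6))             # settles at F / D = 0.4 m/s
```

Under a constant push, the commanded velocity approaches F / D with time constant M / D. A larger D therefore makes the robot feel more sluggish under guidance but damps oscillation. The trade-off against contact stability on high-payload robots is what the paper studies.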

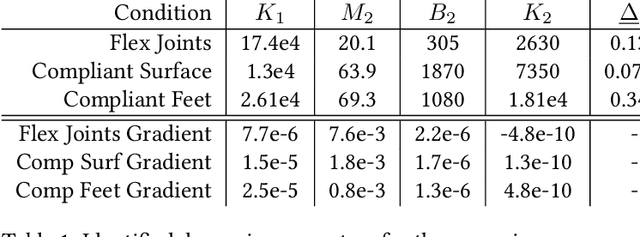

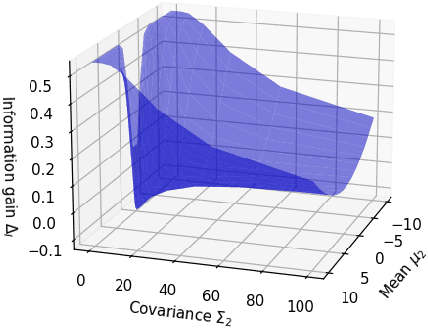

Contact Information Flow and Design of Compliance

Oct 24, 2021

Abstract:The objective of many contact-rich manipulation tasks can be expressed as desired contacts between environmental objects. Simulation and planning for rigid-body contact continues to advance, but the achievable performance is significantly impacted by hardware design, such as physical compliance and sensor placement. Much of mechatronic design for contact is done from a continuous controls perspective (e.g. peak collision force, contact stability), but hardware also affects the ability to infer discrete changes in contact. Robustly detecting contact state can support the correction of errors, both online and in trial-and-error learning. Here, discrete contact states are considered as changes in environmental dynamics, and the ability to infer this with proprioception (motor position and force sensors) is investigated. A metric of information gain is proposed, measuring the reduction in contact belief uncertainty from force/position measurements, and developed for fully- and partially-observed systems. The information gain depends on the coupled robot/environment dynamics and sensor placement, especially the location and degree of compliance. Hardware experiments over a range of physical compliance conditions validate that information gain predicts the speed and certainty with which contact is detected in (i) monitoring of contact-rich assembly and (ii) collision detection. Compliant environmental structures are then optimized to allow industrial robots to achieve safe, higher-speed contact.
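
To make "reduction in contact belief uncertainty" concrete, the sketch below uses a deliberately simplified stand-in. It is a two-state belief (free space vs. in contact), where each state predicts a mean force and the sensor noise is Gaussian. Information gain is computed as prior entropy minus posterior entropy after one force reading. This discrete Bayes update is an assumption for illustration. The paper's metric is developed for coupled robot/environment dynamics in fully and partially observed systems and is not reproduced. Here, the effect of compliance and sensor placement is abstracted into how far apart the predicted forces are relative to the noise.

```python
import math
from typing import List, Sequence


def entropy_bits(belief: Sequence[float]) -> float:
    """Shannon entropy (bits) of a discrete belief over contact states."""
    return -sum(p * math.log2(p) for p in belief if p > 0)


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Gaussian likelihood of measuring force x given a predicted mean force."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def update_belief(prior: Sequence[float], force: float,
                  means: Sequence[float], std: float) -> List[float]:
    """Bayes update: each contact state predicts a mean force; noise is Gaussian."""
    unnorm = [p * gaussian_pdf(force, m, std) for p, m in zip(prior, means)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def information_gain(prior: Sequence[float], force: float,
                     means: Sequence[float], std: float) -> float:
    """Entropy reduction of the contact belief from one force measurement.

    A single surprising reading can raise entropy (negative gain); only the
    expectation over measurements is guaranteed to be non-negative.
    """
    return entropy_bits(prior) - entropy_bits(update_belief(prior, force, means, std))


if __name__ == "__main__":
    prior = [0.5, 0.5]   # [free space, in contact]
    means = [0.0, 5.0]   # hypothetical expected force in N for each state
    print(information_gain(prior, 5.0, means, std=1.0))  # close to 1 bit
    print(information_gain(prior, 5.0, means, std=5.0))  # much smaller
```

With well-separated force signatures, a single reading almost fully resolves the contact state. With noisier or less distinguishable signatures, the same reading carries far less information. This mirrors the abstract's claim that information gain predicts how quickly and confidently contact is detected.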

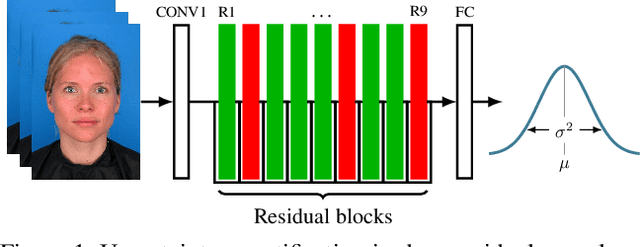

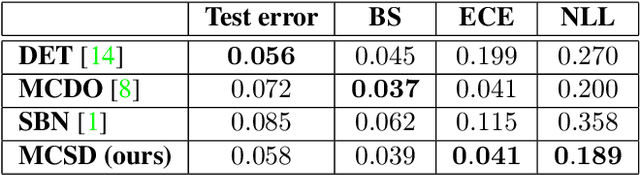

Uncertainty Quantification in Deep Residual Neural Networks

Jul 09, 2020

Abstract:Uncertainty quantification is an important and challenging problem in deep learning. Previous methods rely either on dropout layers, which are not present in modern deep architectures, or on batch normalization, which is sensitive to batch size. In this work, we address the problem of uncertainty quantification in deep residual networks by using a regularization technique called stochastic depth. We show that training residual networks using stochastic depth can be interpreted as a variational approximation to the intractable posterior over the weights in Bayesian neural networks. We demonstrate that by sampling from a distribution of residual networks with varying depth and shared weights, meaningful uncertainty estimates can be obtained. Moreover, compared to the original formulation of residual networks, our method produces well-calibrated softmax probabilities with only minor changes to the network's structure. We evaluate our approach on popular computer vision datasets and measure the quality of uncertainty estimates. We also test the robustness to domain shift and show that our method is able to express higher predictive uncertainty on out-of-distribution samples. Finally, we demonstrate how the proposed approach could be used to obtain uncertainty estimates in facial verification applications.
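
The sampling idea in this abstract can be sketched in a few lines. Each residual block is kept at test time with its survival probability. A dropped block collapses to the identity shortcut, so every sample is a shallower network with the same shared weights. Softmax outputs are averaged over many sampled depths, and the entropy of the averaged prediction serves as an uncertainty estimate. The toy blocks, survival probabilities, and sample count below are hypothetical, and the paper's networks and training setup are not reproduced. This differs from plain stochastic-depth inference, where all blocks are active and rescaled by their survival probability. Here the random drops stay on at test time, as the abstract's sampling description implies.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Block = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sampled_forward(x: Sequence[float], blocks: Sequence[Block],
                    survival: Sequence[float], rng: random.Random) -> Vector:
    """One forward pass through a randomly truncated residual network.

    Each block is kept with probability p_keep; a dropped block leaves only
    the identity shortcut, so all sampled networks share the same weights.
    """
    h = list(x)
    for block, p_keep in zip(blocks, survival):
        if rng.random() < p_keep:
            h = [a + b for a, b in zip(h, block(h))]
    return softmax(h)


def mc_predict(x: Sequence[float], blocks: Sequence[Block], survival: Sequence[float],
               samples: int = 200, seed: int = 0) -> Tuple[Vector, float]:
    """Average softmax outputs over sampled depths; return mean and predictive entropy."""
    rng = random.Random(seed)
    runs = [sampled_forward(x, blocks, survival, rng) for _ in range(samples)]
    mean = [sum(r[k] for r in runs) / samples for k in range(len(runs[0]))]
    entropy = -sum(q * math.log(q) for q in mean if q > 0)
    return mean, entropy


if __name__ == "__main__":
    blocks = [lambda h: [2.0, 0.0], lambda h: [2.0, 0.0]]  # toy blocks favouring class 0
    _, h_full = mc_predict([0.0, 0.0], blocks, survival=[1.0, 1.0])
    _, h_sd = mc_predict([0.0, 0.0], blocks, survival=[0.5, 0.5])
    print(h_sd > h_full)  # True: sampling depths spreads the prediction
```

With all survival probabilities at 1.0, the method reduces to a single deterministic forward pass, and the entropy reflects only the softmax itself. Lower survival probabilities trade confidence for a spread of predictions across network depths.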