Ilya Safro

Clemson University

Neural Architecture Search Algorithms for Quantum Autoencoders

Sep 18, 2025

Abstract: The design of quantum circuits is currently driven by the specific objectives of the quantum algorithm in question. This approach relies on significant manual effort by the quantum algorithm designer to design an appropriate circuit for the task. However, this approach cannot scale to more complex quantum algorithms in the future without exponentially increasing the circuit design effort and introducing unwanted inductive biases. Motivated by this observation, we propose to automate the process of circuit design by drawing inspiration from Neural Architecture Search (NAS). In this work, we propose two Quantum-NAS algorithms that aim to find efficient circuits given a particular quantum task. We choose quantum data compression as our driver quantum task and demonstrate the performance of our algorithms by finding efficient autoencoder designs that outperform baselines on three different tasks: quantum data denoising, classical data compression, and pure quantum data compression. Our results indicate that quantum NAS algorithms can significantly alleviate the manual effort while delivering performant quantum circuits for any given task.

QAOA Parameter Transferability for Maximum Independent Set using Graph Attention Networks

Apr 29, 2025

Abstract: The quantum approximate optimization algorithm (QAOA) is one of the promising variational approaches of quantum computing to solve combinatorial optimization problems. In QAOA, variational parameters need to be optimized by solving a series of nonlinear, nonconvex optimization programs. In this work, we propose a QAOA parameter transfer scheme using Graph Attention Networks (GAT) to solve Maximum Independent Set (MIS) problems. We prepare optimized parameters for graphs of 12 and 14 vertices and use GATs to transfer their parameters to larger graphs. Additionally, we design a hybrid distributed resource-aware algorithm for MIS (HyDRA-MIS), which decomposes large problems into smaller ones that can fit onto noisy intermediate-scale quantum (NISQ) computers. We integrate our GAT-based parameter transfer approach into HyDRA-MIS and demonstrate competitive results compared to KaMIS, a state-of-the-art classical MIS solver, on graphs with several thousand vertices.

QAOA-GPT: Efficient Generation of Adaptive and Regular Quantum Approximate Optimization Algorithm Circuits

Apr 23, 2025

Abstract: Quantum computing has the potential to improve our ability to solve certain optimization problems that are computationally difficult for classical computers, by offering new algorithmic approaches that may provide speedups under specific conditions. In this work, we introduce QAOA-GPT, a generative framework that leverages Generative Pretrained Transformers (GPT) to directly synthesize quantum circuits for solving quadratic unconstrained binary optimization problems, and demonstrate it on the MaxCut problem on graphs. To diversify the training circuits and ensure their quality, we have generated a synthetic dataset using the adaptive QAOA approach, a method that incrementally builds and optimizes problem-specific circuits. The experiments conducted on a curated set of graph instances demonstrate that QAOA-GPT generates high-quality quantum circuits for new problem instances unseen during training and successfully parametrizes QAOA. Our results show that using QAOA-GPT to generate quantum circuits will significantly decrease both the computational overhead of classical QAOA and adaptive approaches, which often use gradient evaluation to generate the circuit, and the cost of classically optimizing the circuit parameters. Our work shows that generative AI could be a promising avenue to generate compact quantum circuits in a scalable way.
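For readers unfamiliar with the problem class named in this abstract, the following is a minimal sketch of how MaxCut can be written as a quadratic unconstrained binary optimization (QUBO) problem and solved by brute force on a tiny graph. It is not taken from the paper: the function names `maxcut_qubo` and `brute_force_qubo` and the 4-cycle example are illustrative assumptions, and exhaustive search stands in for any quantum or learned solver.

```python
import itertools

import numpy as np


def maxcut_qubo(n, edges):
    """Build Q so that x^T Q x = -(number of cut edges) for binary x.

    Each edge (i, j) contributes -x_i - x_j + 2*x_i*x_j, which is -1 when
    the endpoints are on different sides of the cut and 0 otherwise.
    """
    Q = np.zeros((n, n))
    for i, j in edges:
        Q[i, i] -= 1
        Q[j, j] -= 1
        Q[i, j] += 1
        Q[j, i] += 1
    return Q


def brute_force_qubo(Q):
    """Return (best_x, best_value) minimizing x^T Q x over all binary x.

    Exponential in the number of variables; only meant for toy instances.
    """
    n = Q.shape[0]
    best_x, best_value = None, float("inf")
    for bits in itertools.product([0, 1], repeat=n):
        x = np.array(bits)
        value = float(x @ Q @ x)
        if value < best_value:
            best_x, best_value = bits, value
    return best_x, best_value


# Hypothetical example: a 4-cycle, whose maximum cut contains all 4 edges.
ring_edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
best_x, best_value = brute_force_qubo(maxcut_qubo(4, ring_edges))
print(best_x, -best_value)
```

Minimizing the QUBO objective is equivalent to maximizing the cut, so the negated optimum is the cut size.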

Cross-Problem Parameter Transfer in Quantum Approximate Optimization Algorithm: A Machine Learning Approach

Apr 14, 2025

Abstract: The Quantum Approximate Optimization Algorithm (QAOA) is one of the most promising candidates to achieve quantum advantage in solving combinatorial optimization problems. The process of finding a good set of variational parameters in the QAOA circuit has proven to be challenging due to multiple factors, such as barren plateaus. As a result, there is growing interest in exploiting parameter transferability, where parameter sets optimized for one problem instance are transferred to another, possibly more complex, instance, either to estimate the solution or to serve as a warm start for further optimization. But can we transfer parameters from one class of problems to another? Leveraging parameter sets learned from a well-studied class of problems could help navigate a less studied one, reducing optimization overhead and mitigating performance pitfalls. In this paper, we study whether pretrained QAOA parameters of MaxCut can be used as is or to warm start the Maximum Independent Set (MIS) circuits. Specifically, we design machine learning models to find good donor candidates optimized on MaxCut and apply their parameters to MIS acceptors. Our experimental results show that such parameter transfer can significantly reduce the number of optimization iterations required while achieving comparable approximation ratios.
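The donor/acceptor idea in this abstract can be illustrated with a small statevector simulation. The sketch below is a simplification and not the paper's method: both graphs are MaxCut instances (the paper transfers from MaxCut to MIS), the circuit has depth p = 1, parameters are found by a coarse grid search instead of a trained model, and all names (`cut_values`, `apply_mixer`, `qaoa_expectation`, `grid_search`) are hypothetical.

```python
import numpy as np


def cut_values(n, edges):
    """Cut size of every n-bit assignment, indexed by the integer encoding."""
    z = np.arange(2 ** n)
    bits = (z[:, None] >> np.arange(n)) & 1
    values = np.zeros(2 ** n)
    for i, j in edges:
        values += bits[:, i] ^ bits[:, j]
    return values


def apply_mixer(state, n, beta):
    """Apply exp(-i * beta * X) to every qubit of the statevector."""
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])
    psi = state.reshape((2,) * n)
    for q in range(n):
        psi = np.tensordot(rx, psi, axes=([1], [q]))
        psi = np.moveaxis(psi, 0, q)  # put the updated axis back in place
    return psi.reshape(-1)


def qaoa_expectation(n, edges, gamma, beta):
    """Expected cut size of the depth-1 QAOA state for MaxCut."""
    c = cut_values(n, edges)
    state = np.full(2 ** n, 1 / np.sqrt(2 ** n), dtype=complex)
    state = np.exp(-1j * gamma * c) * state  # cost (phase) layer
    state = apply_mixer(state, n, beta)      # mixer layer
    return float(np.real(np.vdot(state, c * state)))


def grid_search(n, edges, steps=25):
    """Coarse grid search over (gamma, beta); returns (value, gamma, beta)."""
    best = (-np.inf, 0.0, 0.0)
    for gamma in np.linspace(0, np.pi, steps):
        for beta in np.linspace(0, np.pi / 2, steps):
            value = qaoa_expectation(n, edges, gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


# Donor: 4-cycle. Acceptor: 6-cycle. Both are hypothetical toy instances.
donor = [(0, 1), (1, 2), (2, 3), (3, 0)]
acceptor = [(i, (i + 1) % 6) for i in range(6)]
donor_value, gamma, beta = grid_search(4, donor)
transferred = qaoa_expectation(6, acceptor, gamma, beta)
print(f"donor: {donor_value:.3f}, acceptor with donor parameters: {transferred:.3f}")
```

In a warm-start setting, the transferred `(gamma, beta)` pair would serve as the initial point of a local optimizer on the acceptor instead of being used as is.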

QAdaPrune: Adaptive Parameter Pruning For Training Variational Quantum Circuits

Aug 23, 2024

Abstract: In the present noisy intermediate-scale quantum computing era, there is a critical need to devise methods for the efficient implementation of gate-based variational quantum circuits. This ensures that a range of proposed applications can be deployed on real quantum hardware. Efficiency of a quantum circuit is desired both in the number of trainable gates and in the depth of the overall circuit. The major concern of barren plateaus has made this need for efficiency even more acute. The problem of efficient quantum circuit realization has been extensively studied in the literature to reduce gate complexity and circuit depth. Another important approach is to design a method to reduce the parameter complexity in a variational quantum circuit. Existing methods include hyperparameter-based parameter pruning, which introduces an additional challenge of finding the best hyperparameters for different applications. In this paper, we present QAdaPrune, an adaptive parameter pruning algorithm that automatically determines the threshold and then intelligently prunes the redundant and non-performing parameters. We show that the resulting sparse parameter sets yield quantum circuits that perform comparably to the unpruned quantum circuits and in some cases may enhance trainability of the circuits even if the original quantum circuit gets stuck in a barren plateau. Reproducibility: The source code and data are available at https://github.com/aicaffeinelife/QAdaPrune.git

Dyport: Dynamic Importance-based Hypothesis Generation Benchmarking Technique

Dec 06, 2023

Abstract: This paper presents a novel benchmarking framework, Dyport, for evaluating biomedical hypothesis generation systems. Utilizing curated datasets, our approach tests these systems under realistic conditions, enhancing the relevance of our evaluations. We integrate knowledge from the curated databases into a dynamic graph, accompanied by a method to quantify discovery importance. This assesses not only the accuracy of hypotheses but also their potential impact on biomedical research, which significantly extends traditional link prediction benchmarks. Applicability of our benchmarking process is demonstrated on several link prediction systems applied to biomedical semantic knowledge graphs. Being flexible, our benchmarking system is designed for broad application in hypothesis generation quality verification, aiming to expand the scope of scientific discovery within the biomedical research community. Availability and implementation: The Dyport framework is fully open-source. All code and datasets are available at: https://github.com/IlyaTyagin/Dyport

QArchSearch: A Scalable Quantum Architecture Search Package

Oct 11, 2023

Abstract: The current era of quantum computing has yielded several algorithms that promise high computational efficiency. While the algorithms are sound in theory and can provide potentially exponential speedup, there is little guidance on how to design proper quantum circuits to realize the appropriate unitary transformation to be applied to the input quantum state. In this paper, we present QArchSearch, an AI-based quantum architecture search package with the QTensor library as a backend that provides a principled and automated approach to finding the best model given a task and input quantum state. We show that the search package is able to efficiently scale the search to large quantum circuits and enables the exploration of more complex models for different quantum applications. QArchSearch runs at scale and high efficiency on high-performance computing systems using a two-level parallelization scheme on both CPUs and GPUs, which has been demonstrated on the Polaris supercomputer.

Literature-based Discovery for Landscape Planning

Jun 05, 2023

Abstract: This project demonstrates how medical corpus hypothesis generation, a knowledge discovery field of AI, can be used to derive new research angles for landscape and urban planners. The hypothesis generation approach herein consists of a combination of deep learning with topic modeling, a probabilistic approach to natural language analysis that scans aggregated research databases for words that can be grouped together based on their subject matter commonalities; the word groups accordingly form topics that can provide implicit connections between two general research terms. The hypothesis generation system AGATHA was used to identify likely conceptual relationships between emerging infectious diseases (EIDs) and deforestation, with the objective of providing landscape planners guidelines for productive research directions to help them formulate research hypotheses centered on deforestation and EIDs that will contribute to the broader health field that asserts causal roles of landscape-level issues. This research also serves as a partial proof-of-concept for the application of medical database hypothesis generation to medicine-adjacent hypothesis discovery.

Learning To Optimize Quantum Neural Network Without Gradients

Apr 15, 2023

Abstract: Quantum Machine Learning is an emerging sub-field in machine learning where one of the goals is to perform pattern recognition tasks by encoding data into quantum states. This extension from the classical to the quantum domain has been made possible by the development of hybrid quantum-classical algorithms that allow a parameterized quantum circuit to be optimized using gradient-based algorithms that run on a classical computer. The similarities in training of these hybrid algorithms and classical neural networks have further led to the development of Quantum Neural Networks (QNNs). However, in the current training regime for QNNs, the gradients with respect to the objective function have to be computed on the quantum device. This computation is highly non-scalable and is affected by hardware and sampling noise present in the current generation of quantum hardware. In this paper, we propose a training algorithm that does not rely on gradient information. Specifically, we introduce a novel meta-optimization algorithm that trains a meta-optimizer network to output parameters for the quantum circuit such that the objective function is minimized. We empirically and theoretically show that we achieve better-quality minima in fewer circuit evaluations than existing gradient-based algorithms on different datasets.

Towards Practical Explainability with Cluster Descriptors

Oct 20, 2022

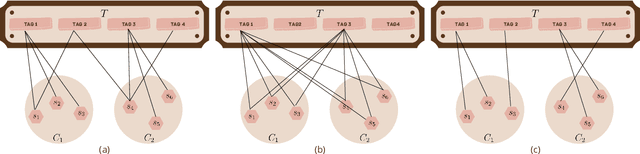

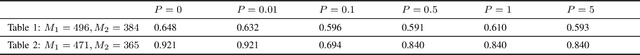

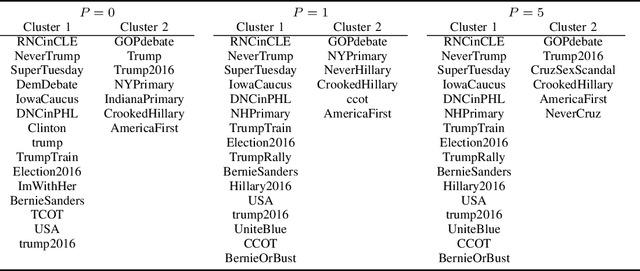

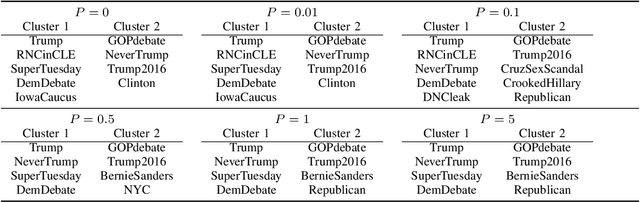

Abstract: With the rapid development of machine learning, improving its explainability has become a crucial research goal. We study the problem of making clusters more explainable by investigating cluster descriptors. We are given a set of objects $S$, a clustering $\pi$ of these objects, and a set of tags $T$ that did not participate in the clustering algorithm; each object in $S$ is associated with a subset of $T$. The goal is to find a representative set of tags for each cluster, referred to as the cluster descriptors, with the constraint that these descriptors are pairwise disjoint and the total size of all the descriptors is minimized. In general, this problem is NP-hard. We propose a novel explainability model that reinforces the previous models in such a way that tags that do not contribute to explainability and do not sufficiently distinguish between clusters are not added to the optimal descriptors. The proposed model is formulated as a quadratic unconstrained binary optimization problem, which makes it suitable for solving on modern optimization hardware accelerators. We experimentally demonstrate how the proposed explainability model can be solved on specialized hardware for accelerating combinatorial optimization, the Fujitsu Digital Annealer, and use real-life Twitter and PubMed datasets for use cases.
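The base problem stated in this abstract (pairwise disjoint descriptors of minimum total size) can be sketched as an exhaustive search on a toy instance. This is only an illustration under assumptions: "representative" is interpreted here as requiring every object to carry at least one tag from its own cluster's descriptor, the paper's refined QUBO model is not reproduced, and the function name `min_disjoint_descriptors` and the example data are hypothetical.

```python
from itertools import product


def min_disjoint_descriptors(tags_of, clusters):
    """Brute-force minimum-size, pairwise disjoint cluster descriptors.

    tags_of:  dict mapping each object to its set of tags.
    clusters: list of lists of objects (the clustering).
    Assumed coverage rule: each object shares at least one tag with the
    descriptor of its own cluster. Returns (total_size, descriptors),
    or None if no feasible assignment exists.
    """
    all_tags = sorted(set().union(*tags_of.values()))
    choices = [None, *range(len(clusters))]  # each tag: unused or one cluster
    best = None
    for assignment in product(choices, repeat=len(all_tags)):
        descriptors = [set() for _ in clusters]
        for tag, cluster_index in zip(all_tags, assignment):
            if cluster_index is not None:
                descriptors[cluster_index].add(tag)
        covered = all(
            tags_of[obj] & descriptors[c]
            for c, members in enumerate(clusters)
            for obj in members
        )
        if not covered:
            continue
        size = sum(len(d) for d in descriptors)
        if best is None or size < best[0]:
            best = (size, descriptors)
    return best


# Hypothetical toy instance with four objects and three tags.
tags_of = {"a": {"t1", "t2"}, "b": {"t1"}, "c": {"t3"}, "d": {"t2", "t3"}}
clusters = [["a", "b"], ["c", "d"]]
print(min_disjoint_descriptors(tags_of, clusters))
```

Assigning each tag to at most one cluster enforces disjointness by construction; the search is exponential in the number of tags, which is consistent with the abstract's motivation for using specialized optimization hardware on real instances.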
