Iljoo Yoon

Matrix Encoding Networks for Neural Combinatorial Optimization

Jun 21, 2021

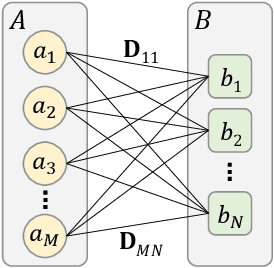

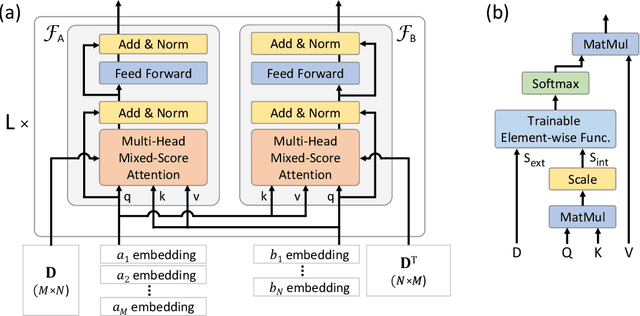

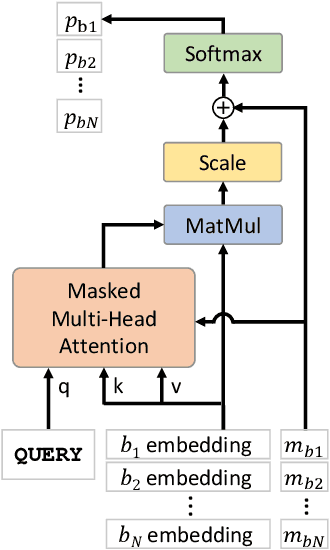

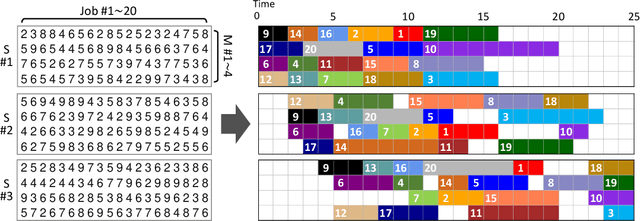

Abstract: Machine Learning (ML) can help solve combinatorial optimization (CO) problems better. A popular approach is to use a neural net to compute on the parameters of a given CO problem and extract useful information that guides the search for good solutions. Many CO problems of practical importance can be specified in a matrix form of parameters quantifying the relationship between two groups of items. There is currently no neural net model, however, that takes in such matrix-style relationship data as an input. Consequently, these types of CO problems have been out of reach for ML engineers. In this paper, we introduce Matrix Encoding Network (MatNet) and show how conveniently it takes in and processes parameters of such complex CO problems. Using an end-to-end model based on MatNet, we solve asymmetric traveling salesman (ATSP) and flexible flow shop (FFSP) problems as the earliest neural approach. In particular, for a class of FFSP we have tested MatNet on, we demonstrate a far superior empirical performance to any methods (neural or not) known to date.
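To make the "matrix form of parameters" concrete, here is a minimal sketch of an ATSP instance given as a cost matrix. It is not MatNet and involves no neural network. The 4-city matrix `dist` and the helpers `tour_cost` and `brute_force_atsp` are hypothetical names and values chosen only for illustration.

```python
from itertools import permutations

# Hypothetical 4-city instance. dist[i][j] is the cost of travelling from
# city i to city j; in an asymmetric TSP, dist[i][j] may differ from dist[j][i].
dist = [
    [0, 2, 9, 10],
    [1, 0, 6, 4],
    [15, 7, 0, 8],
    [6, 3, 12, 0],
]


def tour_cost(dist, tour):
    """Sum the directed edge costs along a closed tour (returns to the start)."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def brute_force_atsp(dist):
    """Exhaustive search with city 0 fixed as the start; only viable for tiny instances."""
    n = len(dist)
    candidates = ((0,) + p for p in permutations(range(1, n)))
    best = min(candidates, key=lambda t: tour_cost(dist, t))
    return list(best), tour_cost(dist, best)


if __name__ == "__main__":
    print(tour_cost(dist, [0, 2, 3, 1]))  # one direction of a tour
    print(tour_cost(dist, [0, 1, 3, 2]))  # the same cycle traversed in reverse
    print(brute_force_atsp(dist))
```

Because the matrix is asymmetric, a tour and its reverse generally have different costs, which is why the instance cannot be reduced to node coordinates and has to be supplied as the full matrix.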

POMO: Policy Optimization with Multiple Optima for Reinforcement Learning

Oct 30, 2020

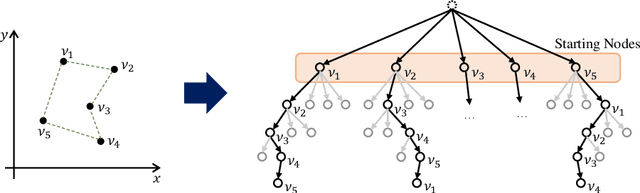

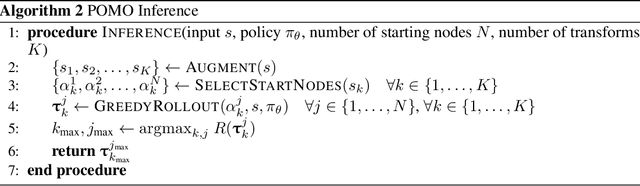

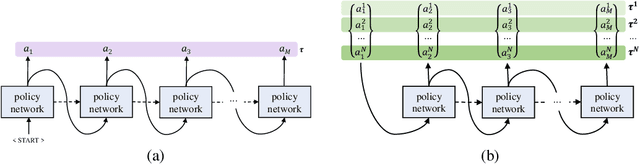

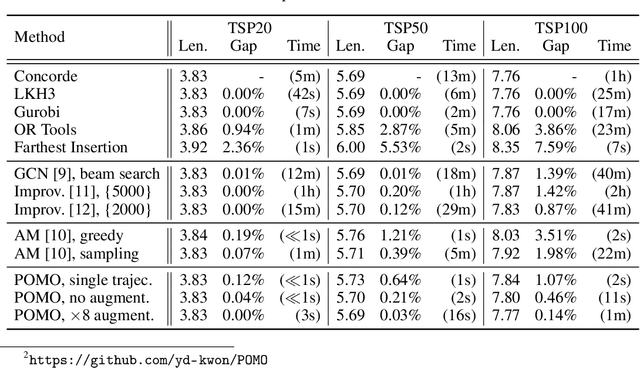

Abstract: In neural combinatorial optimization (CO), reinforcement learning (RL) can turn a deep neural net into a fast, powerful heuristic solver of NP-hard problems. This approach has great potential in practical applications because it allows near-optimal solutions to be found without expert guides armed with substantial domain knowledge. We introduce Policy Optimization with Multiple Optima (POMO), an end-to-end approach for building such a heuristic solver. POMO is applicable to a wide range of CO problems. It is designed to exploit the symmetries in the representation of a CO solution. POMO uses a modified REINFORCE algorithm that forces diverse rollouts towards all optimal solutions. Empirically, the low-variance baseline of POMO makes RL training fast and stable, and it is more resistant to local minima compared to previous approaches. We also introduce a new augmentation-based inference method, which accompanies POMO nicely. We demonstrate the effectiveness of POMO by solving three popular NP-hard problems, namely, traveling salesman (TSP), capacitated vehicle routing (CVRP), and 0-1 knapsack (KP). For all three, our solver based on POMO shows a significant improvement in performance over all recent learned heuristics. In particular, we achieve an optimality gap of 0.14% with TSP100 while reducing inference time by more than an order of magnitude.
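The idea of a low-variance baseline shared across several rollouts can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the baseline is the mean return over all rollouts of the same problem instance (the abstract does not spell this out), and the function names and sample numbers are hypothetical.

```python
def shared_baseline_advantages(returns):
    """Advantage of each rollout: its return minus the mean return over all
    rollouts of the SAME problem instance (assumed form of the baseline)."""
    baseline = sum(returns) / len(returns)
    return [r - baseline for r in returns]


def reinforce_loss(log_probs, returns):
    """REINFORCE surrogate loss: negative mean of advantage * log-probability.

    log_probs[i] is the summed log-probability of the actions in rollout i,
    returns[i] is that rollout's total reward (e.g. negative tour length).
    """
    advantages = shared_baseline_advantages(returns)
    weighted = sum(a * lp for a, lp in zip(advantages, log_probs))
    return -weighted / len(returns)


if __name__ == "__main__":
    # Three hypothetical rollouts on one instance; reward = negative tour length.
    returns = [-10.0, -12.0, -14.0]
    log_probs = [-1.0, -2.0, -3.0]
    print(shared_baseline_advantages(returns))
    print(reinforce_loss(log_probs, returns))
```

In this sketch the advantages within one instance always sum to zero, so rollouts that beat the average have their probability pushed up and the rest are pushed down, without a separately learned critic.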

Cooperative Multi-Agent Reinforcement Learning Framework for Scalping Trading

Mar 31, 2019

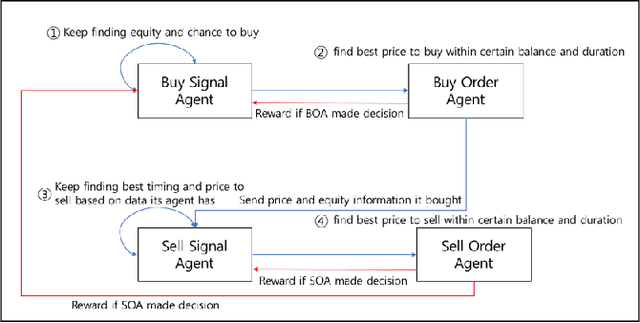

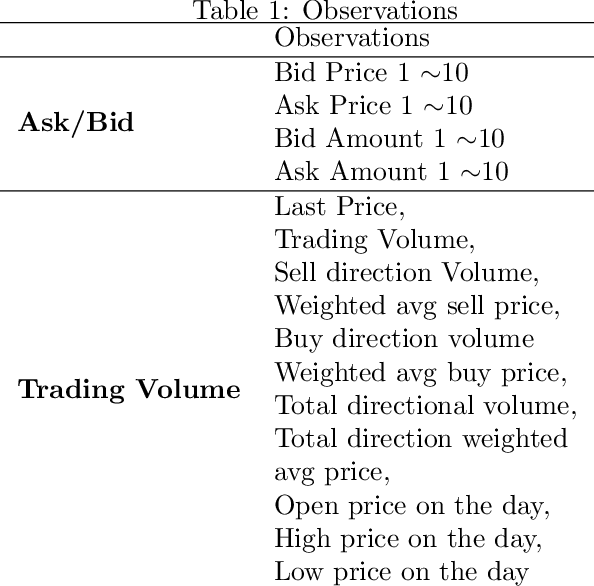

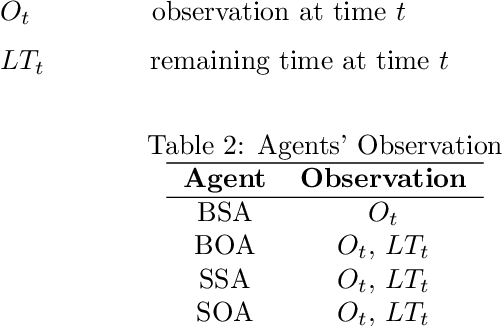

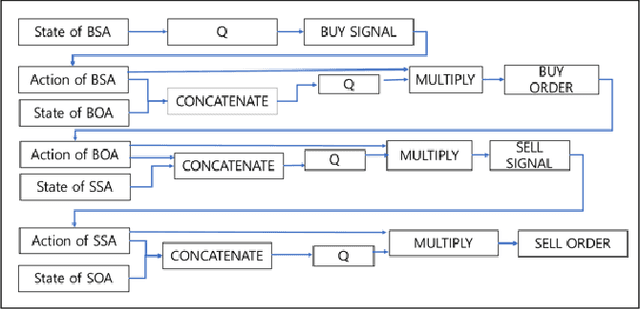

Abstract: We explore deep reinforcement learning (RL) algorithms for scalping trading and find that no appropriate trading gym or agent examples exist. We therefore propose a gym and agents for finance, in the style of OpenAI Gym. In addition, we introduce a new RL framework based on our hybrid algorithm, which leverages both supervised learning and RL and uses meaningful observations, such as order book and settlement data, identified from watching scalpers trade. This information is crucial in deciding a trader's behavior. To feed these data into our model, we use a spatio-temporal convolution layer (Conv3D) for order book data and a temporal CNN (Conv1D) for settlement data. The data are preprocessed by an episode filter we developed. The agent consists of four sub-agents, each with its own clearly defined goal, so that together they make the best decision. We also adopt value-based and policy-based algorithms in our framework. With these features, the agent can mimic scalpers as closely as possible. RL algorithms have already begun to surpass human capabilities in many domains. This approach could be a starting point for beating humans in the financial stock market, and a good reference for anyone who wants to design an RL algorithm for a real-world domain. Finally, we run experiments with our framework and report their progress.

Clear the Fog: Combat Value Assessment in Incomplete Information Games with Convolutional Encoder-Decoders

Nov 30, 2018

Abstract: StarCraft, one of the most popular real-time strategy games, is a compelling environment for artificial intelligence research for both micro-level unit control and macro-level strategic decision making. In this study, we address a prominent problem concerning macro-level decision making, known as the 'fog-of-war', which arises naturally from the fact that information regarding the opponent's state is always provided in incomplete form. For intelligent agents to play like human players, it is clear that making accurate predictions of the opponent's status under incomplete information will increase their chance of winning. To reflect this fact, we propose a convolutional encoder-decoder architecture that predicts potential counts and locations of the opponent's units based on only partially visible and noisy information. To evaluate the performance of our proposed method, we train an additional classifier on the encoder-decoder output to predict the game outcome (win or lose). Finally, we designed an agent incorporating the proposed method and conducted simulation games against rule-based agents to demonstrate both effectiveness and practicality. All experiments were conducted on actual game replay data acquired from professional players.
