Ilias Siniosoglou

Enhancing 3D Object Detection in Autonomous Vehicles Based on Synthetic Virtual Environment Analysis

Dec 10, 2024

Abstract:Autonomous Vehicles (AVs) use natural images and videos as input to understand the real world by overlaying and inferring digital elements, facilitating proactive detection in an effort to assure safety. A crucial aspect of this process is real-time, accurate object recognition through automatic scene analysis. While traditional methods primarily concentrate on 2D object detection, exploring 3D object detection, which involves projecting 3D bounding boxes into the three-dimensional environment, holds significance and can be notably enhanced using the AR ecosystem. This study examines an AI model's ability to deduce 3D bounding boxes in the context of real-time scene analysis, evaluating the model's performance and processing time in the virtual domain before applying it to AVs. This work also employs a synthetic dataset that includes artificially generated images mimicking various environmental, lighting, and spatiotemporal states. The evaluation focuses on images featuring objects in diverse weather conditions, captured with varying camera settings. These variations pose more challenging detection and recognition scenarios, for which the outcomes of this work show competitive results under most of the tested conditions.

Applied Federated Model Personalisation in the Industrial Domain: A Comparative Study

Sep 10, 2024

Abstract:The time-consuming nature of training and deploying complicated Machine and Deep Learning (DL) models for a variety of applications continues to pose significant challenges in the field of Machine Learning (ML). These challenges are particularly pronounced in the federated domain, where optimizing models for individual nodes poses significant difficulty. Many methods have been developed to tackle this problem, aiming to reduce training expenses and time while maintaining efficient optimisation. Three suggested strategies to tackle this challenge include Active Learning, Knowledge Distillation, and Local Memorization. These methods enable the adoption of smaller models that require fewer computational resources and allow for model personalization with local insights, thereby improving the effectiveness of current models. The present study delves into the fundamental principles of these three approaches and proposes an advanced Federated Learning System that utilises different Personalisation methods towards improving the accuracy of AI models and enhancing user experience in real-time NG-IoT applications, investigating the efficacy of these techniques in the local and federated domain. The results of the original and optimised models are then compared in both local and federated contexts using a comparison analysis. The post-analysis shows encouraging outcomes when it comes to optimising and personalising the models with the suggested techniques.

A Closer Look at Data Augmentation Strategies for Finetuning-Based Low/Few-Shot Object Detection

Aug 20, 2024

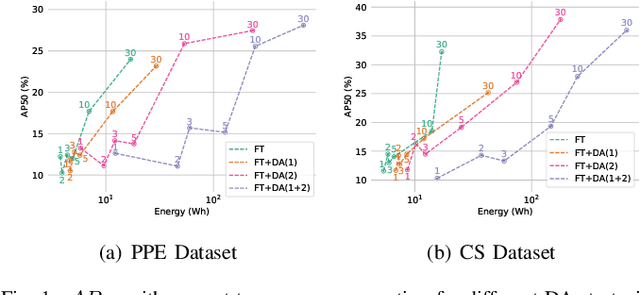

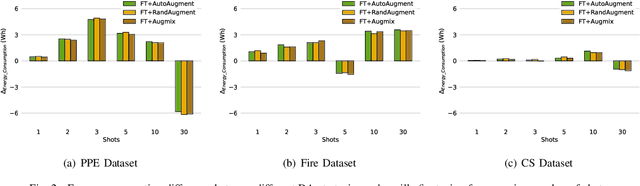

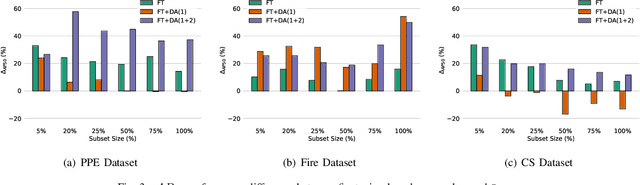

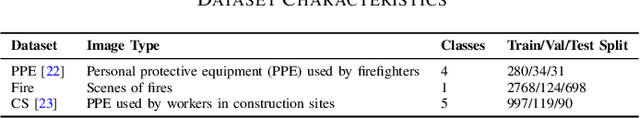

Abstract:Current methods for low- and few-shot object detection have primarily focused on enhancing detection performance. One common approach to achieve this is combining model finetuning with data augmentation strategies. However, little attention has been given to the energy efficiency of these approaches in data-scarce regimes. This paper presents a comprehensive empirical study that examines both the model performance and the energy efficiency of custom data augmentations and automated data augmentation selection strategies when combined with a lightweight object detector. The methods are evaluated on three different benchmark datasets in terms of their performance and energy consumption, and the Efficiency Factor is employed to gain insights into their effectiveness considering both performance and efficiency. It is shown that in many cases, the performance gains of data augmentation strategies are overshadowed by their increased energy usage, necessitating the development of more energy-efficient data augmentation strategies to address data scarcity.

Enhancing Performance for Highly Imbalanced Medical Data via Data Regularization in a Federated Learning Setting

May 30, 2024

Abstract:The increased availability of medical data has significantly impacted healthcare by enabling the application of machine / deep learning approaches in various instances. However, medical datasets are usually small and scattered across multiple providers, suffer from high class-imbalance, and are subject to stringent data privacy constraints. In this paper, the application of a data regularization algorithm, suitable for learning under high class-imbalance, in a federated learning setting is proposed. Specifically, the goal of the proposed method is to enhance model performance for cardiovascular disease prediction by tackling the class-imbalance that typically characterizes datasets used for this purpose, as well as by leveraging patient data available in different nodes of a federated ecosystem without compromising their privacy and enabling more resource sensitive allocation. The method is evaluated across four datasets for cardiovascular disease prediction, which are scattered across different clients, achieving improved performance. Meanwhile, its robustness under various hyperparameter settings, as well as its ability to adapt to different resource allocation scenarios, is verified.

Evaluating the Efficacy of AI Techniques in Textual Anonymization: A Comparative Study

May 09, 2024

Abstract:In the digital era, with escalating privacy concerns, it's imperative to devise robust strategies that protect private data while maintaining the intrinsic value of textual information. This research embarks on a comprehensive examination of text anonymisation methods, focusing on Conditional Random Fields (CRF), Long Short-Term Memory (LSTM), Embeddings from Language Models (ELMo), and the transformative capabilities of the Transformers architecture. Each model presents unique strengths: LSTM models long-term dependencies, CRF captures dependencies among word sequences, ELMo delivers contextual word representations using deep bidirectional language models, and Transformers introduce self-attention mechanisms that provide enhanced scalability. Our study is positioned as a comparative analysis of these models, emphasising their synergistic potential in addressing text anonymisation challenges. Preliminary results indicate that CRF, LSTM, and ELMo individually outperform traditional methods. The inclusion of Transformers, when compared alongside the other models, offers a broader perspective on achieving optimal text anonymisation in contemporary settings.

Benchmarking Advanced Text Anonymisation Methods: A Comparative Study on Novel and Traditional Approaches

Apr 22, 2024

Abstract:In the realm of data privacy, the ability to effectively anonymise text is paramount. With the proliferation of deep learning and, in particular, transformer architectures, there is a burgeoning interest in leveraging these advanced models for text anonymisation tasks. This paper presents a comprehensive benchmarking study comparing the performance of transformer-based models and Large Language Models (LLM) against traditional architectures for text anonymisation. Utilising the CoNLL-2003 dataset, known for its robustness and diversity, we evaluate several models. Our results showcase the strengths and weaknesses of each approach, offering a clear perspective on the efficacy of modern versus traditional methods. Notably, while modern models exhibit advanced capabilities in capturing contextual nuances, certain traditional architectures still keep high performance. This work aims to guide researchers in selecting the most suitable model for their anonymisation needs, while also shedding light on potential paths for future advancements in the field.

Evaluating the Energy Efficiency of Few-Shot Learning for Object Detection in Industrial Settings

Mar 11, 2024

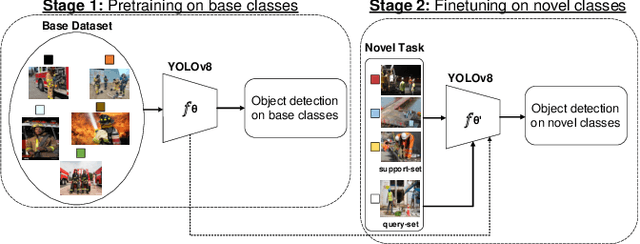

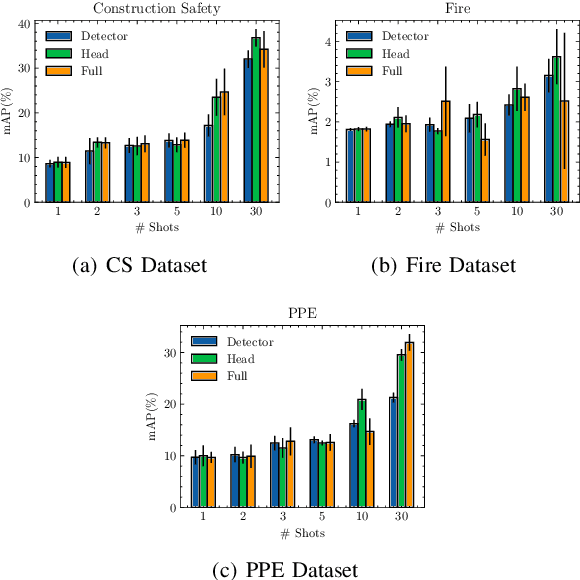

Abstract:In the ever-evolving era of Artificial Intelligence (AI), model performance has constituted a key metric driving innovation, leading to an exponential growth in model size and complexity. However, sustainability and energy efficiency have been critical requirements during deployment in contemporary industrial settings, necessitating the use of data-efficient approaches such as few-shot learning. In this paper, to alleviate the burden of lengthy model training and minimize energy consumption, a finetuning approach to adapt standard object detection models to downstream tasks is examined. Subsequently, a thorough case study and evaluation of the energy demands of the developed models, applied in object detection benchmark datasets from volatile industrial environments is presented. Specifically, different finetuning strategies as well as utilization of ancillary evaluation data during training are examined, and the trade-off between performance and efficiency is highlighted in this low-data regime. Finally, this paper introduces a novel way to quantify this trade-off through a customized Efficiency Factor metric.
