Igor Sieradzki

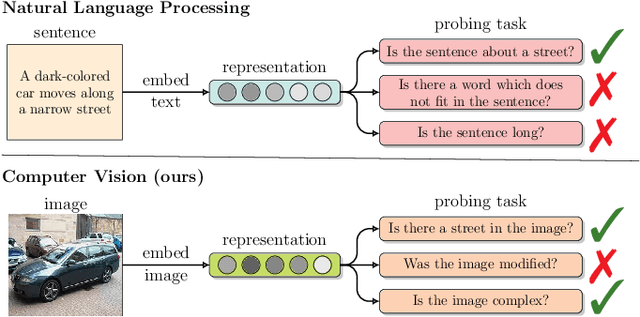

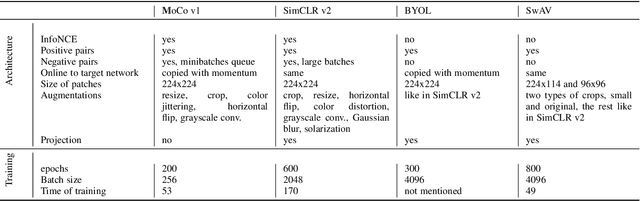

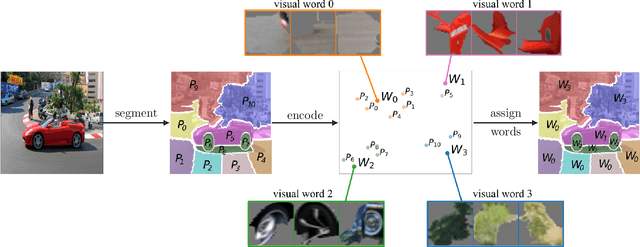

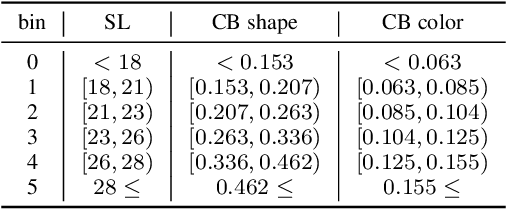

Visual Probing: Cognitive Framework for Explaining Self-Supervised Image Representations

Jun 21, 2021

Abstract: Recently introduced self-supervised methods for image representation learning achieve results on par with or superior to their fully supervised competitors, yet the corresponding efforts to explain the self-supervised approaches lag behind. Motivated by this observation, we introduce a novel visual probing framework for explaining self-supervised models by leveraging probing tasks previously employed in natural language processing. The probing tasks require knowledge about semantic relationships between image parts. Hence, we propose a systematic approach to obtain analogs of natural language in vision, such as visual words, context, and taxonomy. Our proposal is grounded in Marr's computational theory of vision and concerns features like textures, shapes, and lines. We show the effectiveness and applicability of those analogs in the context of explaining self-supervised representations. Our key findings emphasize that relations between language and vision can serve as an effective yet intuitive tool for discovering how machine learning models work, independently of data modality. Our work opens a plethora of research pathways towards more explainable and transparent AI.

On Latent Distributions Without Finite Mean in Generative Models

Jun 05, 2018

Abstract: We investigate the properties of multidimensional probability distributions in the context of latent space prior distributions of implicit generative models. Our work revolves around a phenomenon that arises when decoding linear interpolations between two random latent vectors: regions of latent space in close proximity to the origin are sampled, causing a distribution mismatch. We show that, due to the Central Limit Theorem, this region is almost never sampled during the training process. As a result, linear interpolations may generate unrealistic data, and their usage as a tool to check the quality of the trained model is questionable. We propose using a multidimensional Cauchy distribution as the latent prior. The Cauchy distribution does not satisfy the assumptions of the CLT and has a number of properties that allow it to work well in conjunction with linear interpolations. We also provide two general methods of creating non-linear interpolations that are easily applicable to a large family of common latent distributions. Finally, we empirically analyze the quality of data generated from low-probability-mass regions for the DCGAN model on the CelebA dataset.
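The distribution mismatch described in this abstract can be illustrated with a small numerical sketch. It is not code from the paper: it assumes a standard Gaussian latent prior in 100 dimensions, and the helper names (`gaussian_vector`, `midpoint`, `mean_norms`) are hypothetical. Under that assumption, sampled latent vectors have norm close to sqrt(d), while the midpoint of a linear interpolation between two independent samples has norm close to sqrt(d/2), so it falls in a region near the origin that training rarely visits.

```python
import math
import random


def norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def gaussian_vector(dim, rng):
    """Draw one latent vector from an assumed standard Gaussian prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def midpoint(a, b):
    """Midpoint of the linear interpolation between two latent vectors."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def mean_norms(dim=100, pairs=500, seed=0):
    """Average norm of sampled endpoints and of interpolation midpoints."""
    rng = random.Random(seed)
    endpoint_total = 0.0
    midpoint_total = 0.0
    for _ in range(pairs):
        z1 = gaussian_vector(dim, rng)
        z2 = gaussian_vector(dim, rng)
        endpoint_total += norm(z1)
        midpoint_total += norm(midpoint(z1, z2))
    return endpoint_total / pairs, midpoint_total / pairs


endpoint_norm, midpoint_norm = mean_norms()
print(f"mean endpoint norm: {endpoint_norm:.2f}")  # theory: about sqrt(100)
print(f"mean midpoint norm: {midpoint_norm:.2f}")  # theory: about sqrt(50)
```

The roughly sqrt(2) gap between the two averages is the mismatch in question. By contrast, the average of two independent standard Cauchy variables is again standard Cauchy, which is one property that makes a Cauchy prior compatible with linear interpolation.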
