Hyunju Kim

DETACH : Decomposed Spatio-Temporal Alignment for Exocentric Video and Ambient Sensors with Staged Learning

Dec 23, 2025

Abstract: Aligning egocentric video with wearable sensors has shown promise for human action recognition, but it faces practical limitations in user discomfort, privacy concerns, and scalability. We explore exocentric video with ambient sensors as a non-intrusive, scalable alternative. While prior egocentric-wearable works predominantly adopt Global Alignment by encoding entire sequences into unified representations, this approach fails in exocentric-ambient settings due to two problems: (P1) inability to capture local details such as subtle motions, and (P2) over-reliance on modality-invariant temporal patterns, causing misalignment between actions that share similar temporal patterns but differ in spatio-semantic context. To resolve these problems, we propose DETACH, a decomposed spatio-temporal framework. This explicit decomposition preserves local details, while our novel sensor-spatial features discovered via online clustering provide semantic grounding for context-aware alignment. To align the decomposed features, our two-stage approach establishes spatial correspondence through mutual supervision, then performs temporal alignment via a spatial-temporal weighted contrastive loss that adaptively handles easy negatives, hard negatives, and false negatives. Comprehensive experiments with downstream tasks on the Opportunity++ and HWU-USP datasets demonstrate substantial improvements over adapted egocentric-wearable baselines.

DiffIM: Differentiable Influence Minimization with Surrogate Modeling and Continuous Relaxation

Feb 03, 2025

Abstract: In social networks, people influence each other through social links, which can be represented as propagation among nodes in graphs. Influence minimization (IMIN) is the problem of manipulating the structure of an input graph (e.g., removing edges) to reduce the propagation among nodes. IMIN can represent time-critical real-world applications, such as rumor blocking, but IMIN is theoretically difficult and computationally expensive. Moreover, the discrete nature of IMIN hinders the use of powerful machine learning techniques, which require differentiable computation. In this work, we propose DiffIM, a novel method for IMIN with two differentiable schemes for acceleration: (1) surrogate modeling for efficient influence estimation, which avoids time-consuming simulations (e.g., Monte Carlo), and (2) the continuous relaxation of decisions, which avoids the evaluation of individual discrete decisions (e.g., removing an edge). We further propose a third accelerating scheme, gradient-driven selection, that chooses edges instantly based on gradients without optimization (specifically, gradient descent iterations) on each test instance. Through extensive experiments on real-world graphs, we show that each proposed scheme significantly improves speed with little (or even no) IMIN performance degradation. Our method is Pareto-optimal (i.e., no baseline is both faster and more effective than it) and typically several orders of magnitude (specifically, up to 15,160X) faster than the most effective baseline while being more effective.
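
To make the idea of continuous relaxation with gradient-driven selection concrete, here is a minimal sketch. It is not the DiffIM implementation: the walk-based `surrogate_influence` score, the toy graph `EDGES`, and the finite-difference gradient (a stand-in for automatic differentiation) are all assumptions chosen for illustration. Each edge gets a relaxed removal value in [0, 1], and the edges whose removal value has the most negative gradient are selected in one shot.

```python
# Toy directed graph (hypothetical): edge -> propagation probability.
EDGES = {(0, 1): 0.9, (0, 2): 0.1, (1, 3): 0.9, (2, 3): 0.1}


def surrogate_influence(edges, mask, seeds, steps=3):
    """Walk-based spread score used here as a simple differentiable surrogate.

    mask[e] in [0, 1] is the relaxed "removal" of edge e (1.0 = fully removed).
    """
    score = {s: 1.0 for s in seeds}
    total = sum(score.values())
    for _ in range(steps):
        nxt = {}
        for (u, v), p in edges.items():
            if u in score:
                nxt[v] = nxt.get(v, 0.0) + score[u] * p * (1.0 - mask[(u, v)])
        total += sum(nxt.values())
        score = nxt
    return total


def mask_gradient(edges, mask, seeds, eps=1e-4):
    """Central finite differences of the surrogate with respect to each mask entry."""
    grad = {}
    for e in edges:
        hi = dict(mask)
        hi[e] = mask[e] + eps
        lo = dict(mask)
        lo[e] = mask[e] - eps
        diff = surrogate_influence(edges, hi, seeds) - surrogate_influence(edges, lo, seeds)
        grad[e] = diff / (2 * eps)
    return grad


def select_edges(edges, seeds, budget):
    """Pick the `budget` edges with the most negative gradient, with no iterative optimization."""
    mask = {e: 0.0 for e in edges}
    grad = mask_gradient(edges, mask, seeds)
    return sorted(edges, key=lambda e: grad[e])[:budget]


if __name__ == "__main__":
    print(select_edges(EDGES, seeds=[0], budget=2))
```

On this toy graph the high-probability path through node 1 carries most of the spread, so its edges are ranked first. A real system would replace the hand-written surrogate with a learned model and the finite differences with backpropagation.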

Robust Weight Initialization for Tanh Neural Networks with Fixed Point Analysis

Oct 03, 2024

Abstract: As a neural network's depth increases, it can achieve strong generalization performance. Training, however, becomes challenging due to gradient issues. Theoretical research and various methods have been introduced to address these issues. However, research on weight initialization methods that can be effectively applied to tanh neural networks of varying sizes remains incomplete. This paper presents a novel weight initialization method for feedforward neural networks with the tanh activation function. Based on an analysis of the fixed points of the function $\tanh(ax)$, our proposed method aims to determine values of $a$ that prevent the saturation of activations. A series of experiments on various classification datasets demonstrates that the proposed method is more robust to network size variations than the existing method. Furthermore, when applied to Physics-Informed Neural Networks, the method exhibits faster convergence and greater robustness to variations in network size than Xavier initialization on partial differential equation problems.
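
The fixed-point behavior of $\tanh(ax)$ that the abstract refers to can be explored numerically. The sketch below is only an illustration of that behavior, not the paper's initialization method; the function name `tanh_fixed_point` and its parameters are assumptions. It relies on a standard fact: for $0 < a \le 1$ the only fixed point is $x = 0$, while for $a > 1$ there is an additional positive fixed point (and its mirror image) that repeated application converges to from a positive start.

```python
import math


def tanh_fixed_point(a, x0=1.0, tol=1e-12, max_iter=10_000):
    """Iterate x <- tanh(a * x) from x0 and return the value it settles on.

    Stops once two consecutive iterates differ by less than `tol`,
    or after `max_iter` steps (convergence is slow when a is close to 1).
    """
    x = x0
    for _ in range(max_iter):
        x_next = math.tanh(a * x)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    return x


if __name__ == "__main__":
    for a in (0.5, 1.5, 2.0, 4.0):
        print(f"a = {a}: iterate settles near {tanh_fixed_point(a):.6f}")
```

Larger values of $a$ push the nonzero fixed point toward 1, which is the saturated region of tanh, while values of $a$ at or below 1 drive repeated activations toward 0.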

FlowerFormer: Empowering Neural Architecture Encoding using a Flow-aware Graph Transformer

Mar 21, 2024

Abstract: The success of a specific neural network architecture is closely tied to the dataset and task it tackles; there is no one-size-fits-all solution. Thus, considerable efforts have been made to quickly and accurately estimate the performance of neural architectures, without full training or evaluation, for given tasks and datasets. Neural architecture encoding has played a crucial role in the estimation, and graph-based methods, which treat an architecture as a graph, have shown prominent performance. For enhanced representation learning of neural architectures, we introduce FlowerFormer, a powerful graph transformer that incorporates the information flows within a neural architecture. FlowerFormer consists of two key components: (a) bidirectional asynchronous message passing, inspired by the flows; (b) global attention built on flow-based masking. Our extensive experiments demonstrate the superiority of FlowerFormer over existing neural encoding methods, and its effectiveness extends beyond computer vision models to include graph neural networks and automatic speech recognition models. Our code is available at http://github.com/y0ngjaenius/CVPR2024_FLOWERFormer.
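
One plausible reading of "flow-based masking" is an attention mask that lets a node attend only to nodes lying on a common directed path with it, that is, its ancestors and descendants in the architecture graph. The sketch below builds such a mask for a small DAG. This interpretation, the function name `flow_mask`, and the example graph are assumptions made for illustration; the linked repository is the authoritative source for the actual mechanism.

```python
def flow_mask(num_nodes, edges):
    """Return a boolean matrix M where M[i][j] is True if i and j share a flow.

    Two nodes share a flow here when one can reach the other along directed
    edges (every node also shares a flow with itself).
    """
    # reach[i][j]: there is a directed path from i to j (or i == j).
    reach = [[i == j for j in range(num_nodes)] for i in range(num_nodes)]
    for u, v in edges:
        reach[u][v] = True
    # Warshall's algorithm for the transitive closure.
    for k in range(num_nodes):
        for i in range(num_nodes):
            if reach[i][k]:
                for j in range(num_nodes):
                    if reach[k][j]:
                        reach[i][j] = True
    # Attention is allowed in both directions along a flow.
    return [
        [reach[i][j] or reach[j][i] for j in range(num_nodes)]
        for i in range(num_nodes)
    ]


if __name__ == "__main__":
    # Hypothetical cell: input 0 feeds two parallel ops (1, 2) that merge at 3.
    mask = flow_mask(4, [(0, 1), (0, 2), (1, 3), (2, 3)])
    for row in mask:
        print(["x" if allowed else "." for allowed in row])
```

In this example the two parallel operations (nodes 1 and 2) are masked from each other because no directed path connects them, while the input and output nodes can attend to every node.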

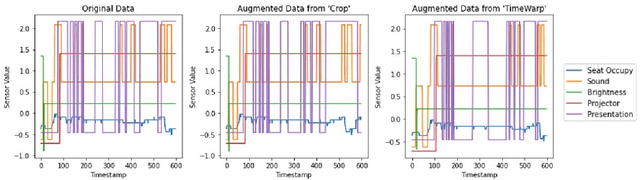

DOO-RE: A dataset of ambient sensors in a meeting room for activity recognition

Jan 17, 2024

Abstract: With the advancement of IoT technology, recognizing user activities with machine learning methods is a promising way to provide various smart services to users. High-quality data with privacy protection is essential for deploying such services in the real world. Data streams from surrounding ambient sensors are well suited to this requirement. Existing ambient sensor datasets only support constrained private spaces, and datasets for public spaces have yet to be explored despite growing research interest in them. To meet this need, we build a dataset collected from a meeting room equipped with ambient sensors. The dataset, DOO-RE, includes data streams from various ambient sensor types such as Sound and Projector. Each sensor data stream is segmented into activity units, and multiple annotators provide activity labels through a cross-validation annotation process to improve annotation quality. We finally obtain 9 types of activities. To the best of our knowledge, DOO-RE is the first dataset to support the recognition of both single and group activities in a real meeting room with reliable annotations.

CLAN: A Contrastive Learning based Novelty Detection Framework for Human Activity Recognition

Jan 17, 2024

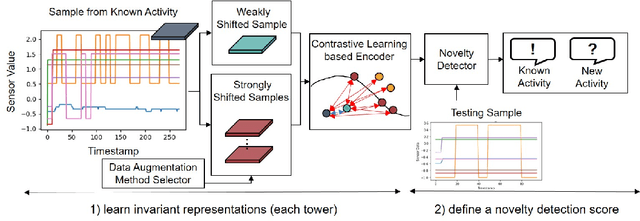

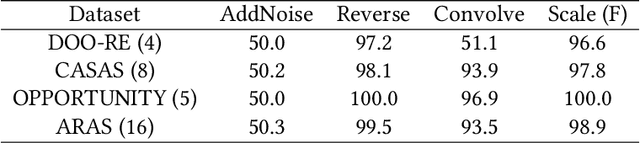

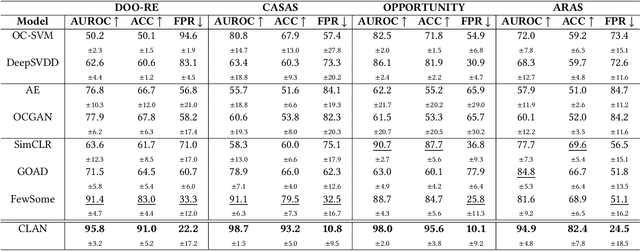

Abstract: In ambient assisted living, human activity recognition from time series sensor data mainly focuses on predefined activities, often overlooking new activity patterns. We propose CLAN, a two-tower contrastive learning-based novelty detection framework with diverse types of negative pairs for human activity recognition. It is tailored to challenges arising from human activity characteristics, including the significance of temporal and frequency features, complex activity dynamics, shared features across activities, and sensor modality variations. The framework aims to construct invariant representations of known activities that are robust to these challenges. To generate suitable negative pairs, it selects data augmentation methods according to the temporal and frequency characteristics of each dataset. It derives the key representations against meaningless dynamics through representation learning based on contrastive and classification losses and through score function-based novelty detection, both of which accommodate dynamic numbers of the different types of augmented samples. The proposed two-tower model extracts the representations in terms of time and frequency, mutually enhancing expressiveness for distinguishing between new and known activities, even when they share common features. Experiments on four real-world human activity datasets show that CLAN surpasses the best performance of existing novelty detection methods, improving by 8.3%, 13.7%, and 53.3% in AUROC, balanced accuracy, and FPR@TPR0.95 metrics, respectively.
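
Of the three reported metrics, FPR@TPR0.95 is the least self-explanatory: it is the false positive rate measured at the decision threshold where the true positive rate reaches 95%. The sketch below shows one common way to compute it. Which class counts as positive, and how ties at the threshold are handled, are assumptions here; the paper's own evaluation code may follow a different convention.

```python
import math


def fpr_at_tpr(scores, labels, target_tpr=0.95):
    """False positive rate at the loosest threshold needed to reach `target_tpr`.

    scores: higher means "more likely positive".
    labels: 1 for the positive class, 0 for the negative class (assumed convention).
    """
    pos = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    # Number of positives that must be accepted to reach the target TPR.
    k = math.ceil(target_tpr * len(pos))
    threshold = pos[k - 1]
    # Every sample scoring at or above the threshold is predicted positive.
    false_positives = sum(1 for s in neg if s >= threshold)
    return false_positives / len(neg)


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.4, 0.5, 0.3, 0.2, 0.1]
    labels = [1, 1, 1, 1, 0, 0, 0, 0]
    print(fpr_at_tpr(scores, labels))
```

Lower values are better for this metric, in contrast to AUROC and balanced accuracy, where higher values are better.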

A Causality-Aware Pattern Mining Scheme for Group Activity Recognition in a Pervasive Sensor Space

Dec 01, 2023

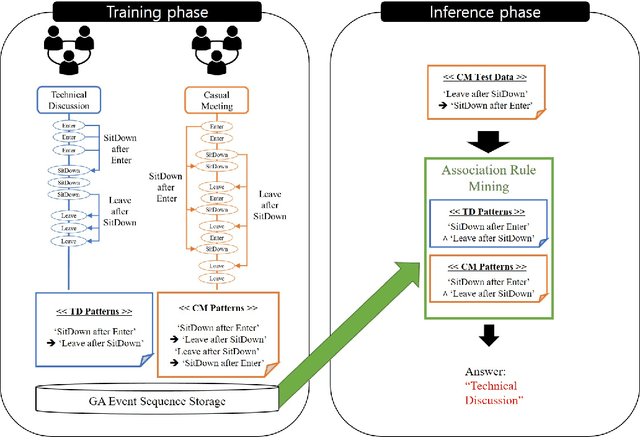

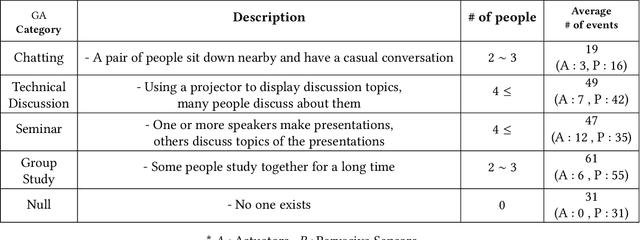

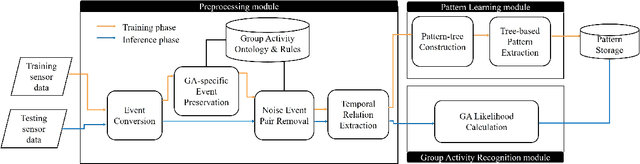

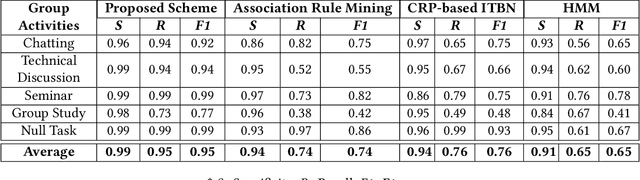

Abstract: Human activity recognition (HAR) is a key challenge in pervasive computing, and solutions have been presented based on various disciplines. Specifically, for HAR in a smart space without privacy and accessibility issues, data streams generated by deployed pervasive sensors are leveraged. In this paper, we focus on group activity, in which a group of users performs a collaborative task without user identification. We propose an efficient group activity recognition scheme that extracts causality patterns from pervasive sensor event sequences generated by a group of users, with the goal of achieving recognition accuracy on par with the state-of-the-art graphical model. To filter out irrelevant noise events from a given data stream, a set of rules is leveraged to highlight causally related events. Then, a pattern-tree algorithm extracts frequent causal patterns by means of a growing tree structure. Based on the extracted patterns, a weighted sum-based pattern matching algorithm computes the likelihood of each stored group activity for the given test event sequence by means of matched event pattern counts. We evaluate the proposed scheme using data collected from our testbed and the CASAS datasets, where users perform their tasks on a daily basis, and validate its effectiveness in a real environment. Experimental results show that the proposed scheme achieves higher recognition accuracy than the existing schemes with only a small amount of runtime overhead.
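
The weighted sum-based matching step can be pictured with a small sketch. Everything specific in it is hypothetical: the event names, the pattern weights, and the choice to count a pattern only when it appears as a contiguous run of events are assumptions for illustration, not details taken from the paper.

```python
def count_occurrences(sequence, pattern):
    """Count how often `pattern` appears as a contiguous run inside `sequence`."""
    n, m = len(sequence), len(pattern)
    return sum(
        1 for i in range(n - m + 1) if tuple(sequence[i:i + m]) == tuple(pattern)
    )


def score_activities(sequence, activity_patterns):
    """Weighted sum of matched pattern counts for each stored activity."""
    return {
        activity: sum(
            weight * count_occurrences(sequence, pattern)
            for pattern, weight in patterns.items()
        )
        for activity, patterns in activity_patterns.items()
    }


def recognize(sequence, activity_patterns):
    """Return the activity with the highest weighted match score."""
    scores = score_activities(sequence, activity_patterns)
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Hypothetical stored patterns: activity -> {event pattern: weight}.
    patterns = {
        "presentation": {("projector_on", "light_off"): 2.0, ("sound",): 0.5},
        "discussion": {("seat", "sound"): 1.0, ("sound", "sound"): 1.5},
    }
    events = ["seat", "projector_on", "light_off", "sound"]
    print(score_activities(events, patterns))
    print(recognize(events, patterns))
```

Because scoring only needs pattern counts and a weighted sum, recognition of this kind stays cheap at test time, which is consistent with the low runtime overhead the abstract emphasizes.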
