Hulingxiao He

Analyzing and Boosting the Power of Fine-Grained Visual Recognition for Multi-modal Large Language Models

Jan 25, 2025

Abstract: Multi-modal large language models (MLLMs) have shown remarkable abilities in various visual understanding tasks. However, MLLMs still struggle with fine-grained visual recognition (FGVR), which aims to identify subordinate-level categories from images. This can negatively impact more advanced capabilities of MLLMs, such as object-centric visual question answering and reasoning. In our study, we revisit three quintessential capabilities of MLLMs for FGVR (object information extraction, category knowledge reserve, and object-category alignment) and position the root cause as a misalignment problem. To address this issue, we present Finedefics, an MLLM that enhances the model's FGVR capability by incorporating informative attribute descriptions of objects into the training phase. We employ contrastive learning on object-attribute pairs and attribute-category pairs simultaneously and use examples from similar but incorrect categories as hard negatives, naturally bringing representations of visual objects and category names closer. Extensive evaluations across multiple popular FGVR datasets demonstrate that Finedefics outperforms existing MLLMs of comparable parameter sizes, showcasing its remarkable efficacy. The code is available at https://github.com/PKU-ICST-MIPL/Finedefics_ICLR2025.
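The contrastive objective with hard negatives mentioned in the abstract can be illustrated with a generic InfoNCE-style loss. This is a minimal sketch under stated assumptions, not the Finedefics implementation: the function name `info_nce_with_hard_negatives`, the cosine-similarity scoring, and the temperature value are all illustrative choices that do not come from the abstract.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce_with_hard_negatives(anchor, positive, hard_negatives, temperature=0.07):
    """Generic InfoNCE loss (hypothetical helper, not the paper's code).

    anchor:         embedding of, e.g., a visual object
    positive:       embedding of its matching attribute description
    hard_negatives: embeddings drawn from similar but incorrect categories
    """
    a = normalize(anchor)
    candidates = [normalize(positive)] + [normalize(n) for n in hard_negatives]
    logits = [dot(a, c) / temperature for c in candidates]
    # Numerically stable log-sum-exp over the positive and all negatives.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    # Cross-entropy with the positive at index 0.
    return log_sum_exp - logits[0]


# A negative that lies close to the anchor yields a larger loss than an
# unrelated one, which is why hard negatives give a stronger training signal.
easy = info_nce_with_hard_negatives([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
hard = info_nce_with_hard_negatives([1.0, 0.0], [1.0, 0.0], [[0.9, 0.1]])
```

In this toy setting, `hard` is larger than `easy` because the hard negative competes with the positive for similarity to the anchor.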

Firzen: Firing Strict Cold-Start Items with Frozen Heterogeneous and Homogeneous Graphs for Recommendation

Oct 10, 2024

Abstract: Recommendation models utilizing unique identities (IDs) to represent distinct users and items have dominated the recommender systems literature for over a decade. Since multi-modal content of items (e.g., texts and images) and knowledge graphs (KGs) may reflect users' interaction-related preferences and items' characteristics, they have been utilized as useful side information to further improve recommendation quality. However, the success of such methods is often limited to either warm-start recommendation or strict cold-start item recommendation, in which some items neither appear in the training data nor have any interactions in the test stage: (1) Some fail to learn the embedding of a strict cold-start item since side information is only utilized to enhance the warm-start ID representations; (2) The others degrade the performance of warm-start recommendation since unrelated multi-modal content or entities in KGs may blur the final representations. In this paper, we propose Firzen, a unified framework that incorporates multi-modal content of items and KGs to effectively solve both strict cold-start and warm-start recommendation. Firzen extracts the user-item collaborative information over a frozen heterogeneous graph (collaborative knowledge graph), and exploits the item-item semantic structures and user-user behavioral associations over frozen homogeneous graphs (item-item relation graph and user-user co-occurrence graph). Furthermore, we build four unified strict cold-start evaluation benchmarks based on publicly available Amazon datasets and a real-world industrial dataset from Weixin Channels by rearranging the interaction data and constructing KGs. Extensive empirical results demonstrate that our model yields significant improvements for strict cold-start recommendation and outperforms or matches the state-of-the-art performance in the warm-start scenario.

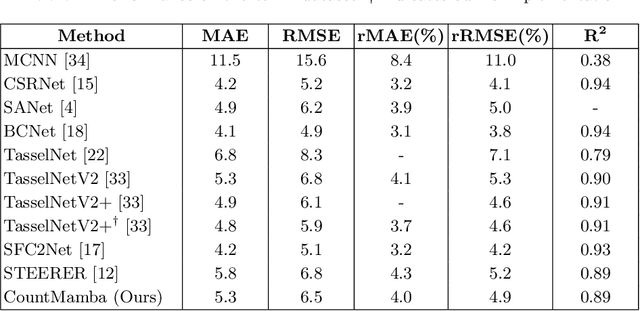

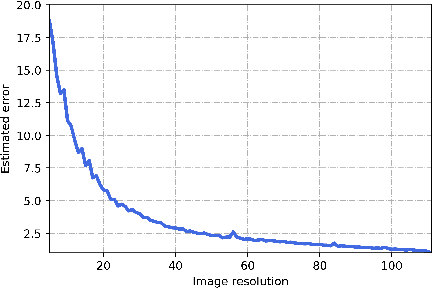

CountMamba: Exploring Multi-directional Selective State-Space Models for Plant Counting

Oct 10, 2024

Abstract: Plant counting is essential in every stage of agriculture, including seed breeding, germination, cultivation, fertilization, pollination, yield estimation, and harvesting. Inspired by the fact that humans count objects in high-resolution images by sequential scanning, we explore the potential of handling plant counting tasks via state space models (SSMs) for generating counting results. In this paper, we propose a new counting approach named CountMamba that constructs multiple counting experts to scan from various directions simultaneously. Specifically, we design a Multi-directional State-Space Group that processes the image patch sequences in multiple orders to simulate different counting experts. We also design Global-Local Adaptive Fusion to adaptively aggregate global features extracted from multiple directions and local features extracted from the CNN branch in a sample-wise manner. Extensive experiments demonstrate that the proposed CountMamba performs competitively on various plant counting tasks, including maize tassel, wheat ear, and sorghum head counting.
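To make "processing the image patch sequences in multiple orders" concrete, the sketch below builds several traversal orders over a patch grid. It is only an illustration: the four directions chosen here (row-major and column-major, each forward and reversed) and the helper names `scan_orders` and `reorder` are assumptions, since the abstract does not specify which directions CountMamba uses.

```python
def scan_orders(height, width):
    """Return index permutations that traverse a height x width patch grid.

    Patches are assumed to be stored in row-major order, so the patch at
    (row r, column c) has flat index r * width + c.
    """
    row_major = [r * width + c for r in range(height) for c in range(width)]
    col_major = [r * width + c for c in range(width) for r in range(height)]
    return {
        "row_forward": row_major,          # left to right, top to bottom
        "row_backward": row_major[::-1],   # same path, reversed
        "col_forward": col_major,          # top to bottom, left to right
        "col_backward": col_major[::-1],   # same path, reversed
    }


def reorder(patches, order):
    """Rearrange a flat list of patches according to one scan order."""
    return [patches[i] for i in order]


# Each ordering would be fed to its own sequence model ("counting expert").
orders = scan_orders(2, 3)
patches = ["p0", "p1", "p2", "p3", "p4", "p5"]
column_sequence = reorder(patches, orders["col_forward"])
```

Each ordering is a permutation of the same patches, so the per-direction outputs can be mapped back to grid positions before they are fused.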

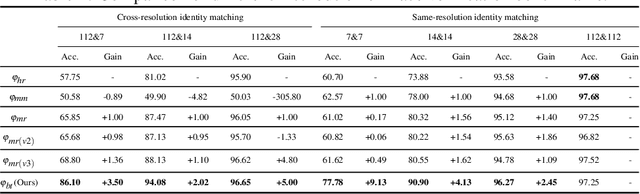

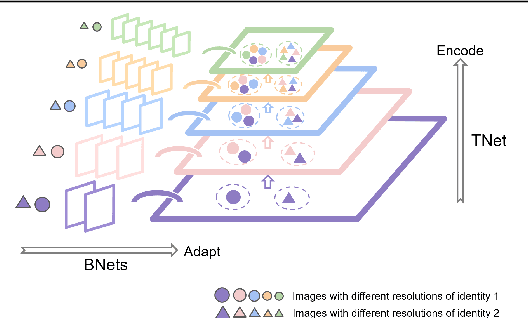

Learning Unified Representations for Multi-Resolution Face Recognition

Oct 14, 2023

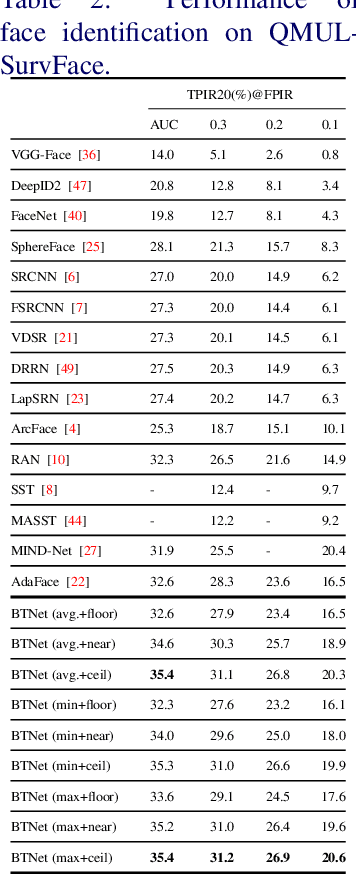

Abstract: In this work, we propose Branch-to-Trunk network (BTNet), a representation learning method for multi-resolution face recognition. It consists of a trunk network (TNet), namely a unified encoder, and multiple branch networks (BNets), namely resolution adapters. Depending on the input, a resolution-specific BNet is used, and its output is implanted as feature maps in the feature pyramid of TNet, at a layer with the same resolution. The discriminability of tiny faces is significantly improved, as the interpolation error introduced by rescaling the inputs, especially up-sampling, is mitigated. With branch distillation and backward-compatible training, BTNet transfers discriminative high-resolution information to multiple branches while guaranteeing representation compatibility. Our experiments demonstrate strong performance on face recognition benchmarks, both for multi-resolution identity matching and feature aggregation, with much lower computation and parameter storage costs. We establish a new state of the art on the challenging QMUL-SurvFace 1:N face identification task. Our code is available at https://github.com/StevenSmith2000/BTNet.
