Houssam Abbas

Oregon State University

FiReFly: Fair Distributed Receding Horizon Planning for Multiple UAVs

Aug 20, 2025

Abstract:We propose injecting notions of fairness into multi-robot motion planning. When robots have competing interests, it is important to optimize for some kind of fairness in their usage of resources. In this work, we explore how the robots' energy expenditures might be fairly distributed among them, while maintaining mission success. We formulate a distributed fair motion planner and integrate it with safe controllers in an algorithm called FiReFly. For simulated reach-avoid missions, FiReFly produces fairer trajectories and improves mission success rates over a non-fair planner. We find that real-time performance is achievable up to 15 UAVs, and that scaling up to 50 UAVs is possible with trade-offs between runtime and fairness improvements.
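
The abstract does not say which fairness notion FiReFly optimizes. As a rough illustration of what "fairly distributed energy expenditure" can mean, the sketch below scores two hypothetical energy allocations with Jain's fairness index, a common evenness measure that is swapped in here as an assumption. The function name and the sample numbers are illustrative only and are not taken from the paper.

```python
from typing import Sequence


def jain_index(values: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2).

    Equals 1.0 when all values are identical and approaches 1/n
    when a single agent carries all of the cost.
    """
    n = len(values)
    if n == 0:
        raise ValueError("need at least one value")
    total = sum(values)
    squares = sum(v * v for v in values)
    if squares == 0:
        return 1.0  # all-zero usage is treated as perfectly even
    return (total * total) / (n * squares)


# Hypothetical per-UAV energy use (arbitrary units) under two plans
# with the same total energy of 16 units.
uneven_plan = [10.0, 2.0, 2.0, 2.0]
even_plan = [4.0, 4.0, 4.0, 4.0]

print("uneven plan:", jain_index(uneven_plan))
print("even plan:  ", jain_index(even_plan))
```

Both plans spend the same total energy, so an energy-minimizing planner would be indifferent between them; a fairness-aware objective is what separates the two.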

Fair-CoPlan: Negotiated Flight Planning with Fair Deconfliction for Urban Air Mobility

Aug 20, 2025

Abstract:Urban Air Mobility (UAM) is an emerging transportation paradigm in which Uncrewed Aerial Systems (UAS) autonomously transport passengers and goods in cities. The UAS have different operators with different, sometimes competing goals, yet must share the airspace. We propose a negotiated, semi-distributed flight planner that optimizes UAS' flight lengths in a fair manner. Current flight planners might result in some UAS being given disproportionately shorter flight paths at the expense of others. We introduce Fair-CoPlan, a planner in which operators and a Provider of Service to the UAM (PSU) together compute fair flight paths. Fair-CoPlan has three steps: First, the PSU constrains take-off and landing choices for flights based on capacity at and around vertiports. Then, operators plan independently under these constraints. Finally, the PSU resolves any conflicting paths, optimizing for path length fairness. By fairly spreading the cost of deconfliction, Fair-CoPlan encourages wider participation in UAM, ensures safety of the airspace and the areas below it, and promotes greater operator flexibility. We demonstrate Fair-CoPlan through simulation experiments and find fairer outcomes than a non-fair planner with minor delays as a trade-off.

Deontically Constrained Policy Improvement in Reinforcement Learning Agents

Jun 08, 2025

Abstract:Markov Decision Processes (MDPs) are the most common model for decision making under uncertainty in the Machine Learning community. An MDP captures non-determinism, probabilistic uncertainty, and an explicit model of action. A Reinforcement Learning (RL) agent learns to act in an MDP by maximizing a utility function. This paper considers the problem of learning a decision policy that maximizes utility subject to satisfying a constraint expressed in deontic logic. In this setup, the utility captures the agent's mission - such as going quickly from A to B. The deontic formula represents (ethical, social, situational) constraints on how the agent might achieve its mission by prohibiting classes of behaviors. We use the logic of Expected Act Utilitarianism, a probabilistic stit logic that can be interpreted over controlled MDPs. We develop a variation on policy improvement, and show that it reaches a constrained local maximum of the mission utility. Given that in stit logic, an agent's duty is derived from value maximization, this can be seen as a way of acting to simultaneously maximize two value functions, one of which is implicit, in a bi-level structure. We illustrate these results with experiments on sample MDPs.
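
To give a feel for "maximizing utility subject to a prohibition", the sketch below runs ordinary policy iteration on a toy MDP while masking out forbidden state-action pairs. This is a deliberately simplified stand-in and not the paper's algorithm: the paper's constraint is a formula in Expected Act Utilitarian deontic logic, whereas here it is assumed to be a fixed set of forbidden pairs. The MDP, the action names, and `masked_policy_iteration` are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# P[s][a] is a list of (probability, next_state, reward) triples.
Transitions = Dict[int, Dict[str, List[Tuple[float, int, float]]]]


def q_value(P: Transitions, V: Dict[int, float], s: int, a: str, gamma: float) -> float:
    """Expected one-step return of taking action a in state s."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def masked_policy_iteration(
    P: Transitions,
    forbidden: Set[Tuple[int, str]],
    gamma: float = 0.9,
    tol: float = 1e-8,
) -> Tuple[Dict[int, str], Dict[int, float]]:
    """Policy iteration restricted to actions not listed in `forbidden`."""
    allowed = {s: [a for a in P[s] if (s, a) not in forbidden] for s in P}
    if any(not acts for acts in allowed.values()):
        raise ValueError("every state needs at least one permitted action")
    policy = {s: allowed[s][0] for s in P}
    V = {s: 0.0 for s in P}
    while True:
        # Policy evaluation: repeat Bellman backups until values settle.
        while True:
            delta = 0.0
            for s in P:
                v = q_value(P, V, s, policy[s], gamma)
                delta = max(delta, abs(v - V[s]))
                V[s] = v
            if delta < tol:
                break
        # Policy improvement: greedy step over permitted actions only.
        stable = True
        for s in P:
            best = max(allowed[s], key=lambda a: q_value(P, V, s, a, gamma))
            gain = q_value(P, V, s, best, gamma) - q_value(P, V, s, policy[s], gamma)
            if gain > 1e-9:
                policy[s] = best
                stable = False
        if stable:
            return policy, V


# Toy mission: get from state 0 to the absorbing goal state 2.
toy_mdp: Transitions = {
    0: {"shortcut": [(1.0, 2, 10.0)], "detour": [(1.0, 1, 0.0)]},
    1: {"go": [(1.0, 2, 8.0)]},
    2: {"stay": [(1.0, 2, 0.0)]},
}

free_policy, free_values = masked_policy_iteration(toy_mdp, set())
safe_policy, safe_values = masked_policy_iteration(toy_mdp, {(0, "shortcut")})
print(free_policy[0], free_values[0])
print(safe_policy[0], safe_values[0])
```

In this toy case the prohibition costs the agent some mission utility in state 0, which is the kind of trade-off a constrained policy makes explicit.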

ROS2-Based Simulation Framework for Cyberphysical Security Analysis of UAVs

Oct 04, 2024

Abstract:We present a new simulator of Uncrewed Aerial Vehicles (UAVs) that is tailored to the needs of testing cyber-physical security attacks and defenses. Recent investigations into UAV safety have unveiled various attack surfaces and some defense mechanisms. However, due to escalating regulations imposed by aviation authorities on security research on real UAVs, and the substantial costs associated with hardware test-bed configurations, there arises a necessity for a simulator capable of substituting for hardware experiments, and/or narrowing down their scope to the strictly necessary. The study of different attack mechanisms requires specific features in a simulator. We propose a simulation framework based on ROS2, leveraging some of its key advantages, including modularity, replicability, customization, and the utilization of open-source tools such as Gazebo. Our framework has a built-in motion planner, controller, communication models and attack models. We share examples of research use cases that our framework can enable, demonstrating its utility.

Formal Ethical Obligations in Reinforcement Learning Agents: Verification and Policy Updates

Jul 31, 2024

Abstract:When designing agents for operation in uncertain environments, designers need tools to automatically reason about what agents ought to do, how that conflicts with what is actually happening, and how a policy might be modified to remove the conflict. These obligations include ethical and social obligations, permissions and prohibitions, which constrain how the agent achieves its mission and executes its policy. We propose a new deontic logic, Expected Act Utilitarian deontic logic, for enabling this reasoning at design time: for specifying and verifying the agent's strategic obligations, then modifying its policy from a reference policy to meet those obligations. Unlike approaches that work at the reward level, working at the logical level increases the transparency of the trade-offs. We introduce two algorithms: one for model-checking whether an RL agent has the right strategic obligations, and one for modifying a reference decision policy to make it meet obligations expressed in our logic. We illustrate our algorithms on DAC-MDPs which accurately abstract neural decision policies, and on toy gridworld environments.

Differentiable Inference of Temporal Logic Formulas

Aug 10, 2022

Abstract:We demonstrate the first Recurrent Neural Network architecture for learning Signal Temporal Logic formulas, and present the first systematic comparison of formula inference methods. Legacy systems embed much expert knowledge which is not explicitly formalized. There is great interest in learning formal specifications that characterize the ideal behavior of such systems -- that is, formulas in temporal logic that are satisfied by the system's output signals. Such specifications can be used to better understand the system's behavior and improve design of its next iteration. Previous inference methods either assumed certain formula templates, or did a heuristic enumeration of all possible templates. This work proposes a neural network architecture that infers the formula structure via gradient descent, eliminating the need for imposing any specific templates. It combines learning of formula structure and parameters in one optimization. Through systematic comparison, we demonstrate that this method achieves similar or better mis-classification rates (MCR) than enumerative and lattice methods. We also observe that different formulas can achieve similar MCR, empirically demonstrating the under-determinism of the problem of temporal logic inference.

Algorithmic Ethics: Formalization and Verification of Autonomous Vehicle Obligations

May 06, 2021

Abstract:We develop a formal framework for automatic reasoning about the obligations of autonomous cyber-physical systems, including their social and ethical obligations. Obligations, permissions and prohibitions are distinct from a system's mission, and are a necessary part of specifying advanced, adaptive AI-equipped systems. They need a dedicated deontic logic of obligations to formalize them. Most existing deontic logics lack corresponding algorithms and system models that permit automatic verification. We demonstrate how a particular deontic logic, Dominance Act Utilitarianism (DAU), is a suitable starting point for formalizing the obligations of autonomous systems like self-driving cars. We demonstrate its usefulness by formalizing a subset of Responsibility-Sensitive Safety (RSS) in DAU; RSS is an industrial proposal for how self-driving cars should and should not behave in traffic. We show that certain logical consequences of RSS are undesirable, indicating a need to further refine the proposal. We also demonstrate how obligations can change over time, which is necessary for long-term autonomy. We then demonstrate a model-checking algorithm for DAU formulas on weighted transition systems, and illustrate it by model-checking obligations of a self-driving car controller from the literature.

Learning-'N-Flying: A Learning-based, Decentralized Mission Aware UAS Collision Avoidance Scheme

Jan 25, 2021

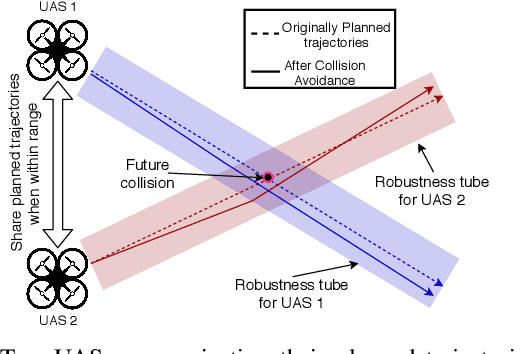

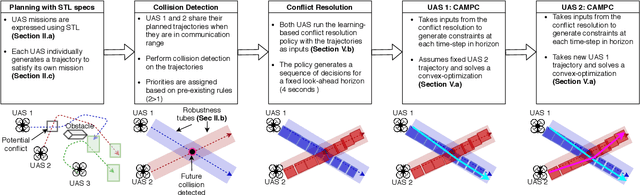

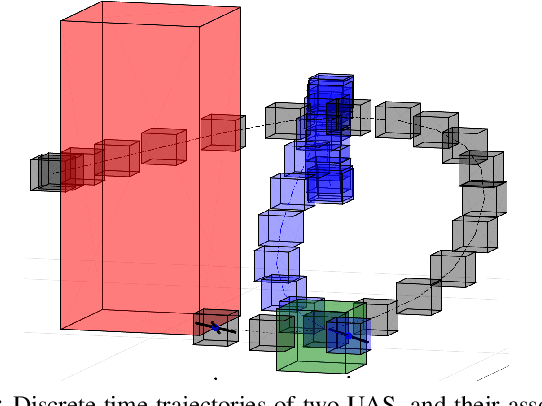

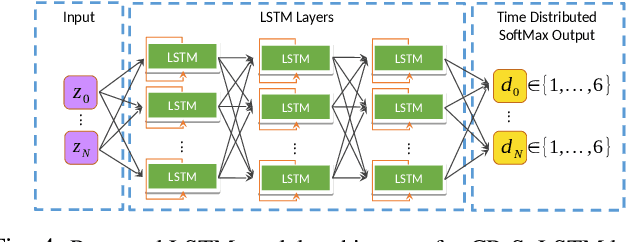

Abstract:Urban Air Mobility, the scenario where hundreds of manned aircraft and Unmanned Aircraft Systems (UAS) carry out a wide variety of missions (e.g. moving humans and goods within the city), is gaining acceptance as a transportation solution of the future. One of the key requirements for this to happen is safely managing the air traffic in these urban airspaces. Due to the expected density of the airspace, this requires fast autonomous solutions that can be deployed online. We propose Learning-'N-Flying (LNF), a multi-UAS Collision Avoidance (CA) framework. It is decentralized, works on the fly and allows autonomous UAS managed by different operators to safely carry out complex missions, represented using Signal Temporal Logic, in a shared airspace. We initially formulate the problem of predictive collision avoidance for two UAS as a mixed-integer linear program, and show that it is intractable to solve online. Instead, we first develop Learning-to-Fly (L2F) by combining: a) learning-based decision-making, and b) decentralized convex optimization-based control. LNF extends L2F to cases where there are more than two UAS on a collision path. Through extensive simulations, we show that our method can run online (computation time on the order of milliseconds), and under certain assumptions has failure rates of less than 1% in the worst case, improving to near 0% in more relaxed operations. We show the applicability of our scheme to a wide variety of settings through multiple case studies.

Learning-to-Fly: Learning-based Collision Avoidance for Scalable Urban Air Mobility

Jun 23, 2020

Abstract:With increasing urban population, there is global interest in Urban Air Mobility (UAM), where hundreds of autonomous Unmanned Aircraft Systems (UAS) execute missions in the airspace above cities. Unlike traditional human-in-the-loop air traffic management, UAM requires decentralized autonomous approaches that scale for an order of magnitude higher aircraft densities and are applicable to urban settings. We present Learning-to-Fly (L2F), a decentralized on-demand airborne collision avoidance framework for multiple UAS that allows them to independently plan and safely execute missions with spatial, temporal and reactive objectives expressed using Signal Temporal Logic. We formulate the problem of predictively avoiding collisions between two UAS without violating mission objectives as a Mixed Integer Linear Program (MILP). This, however, is intractable to solve online. Instead, we develop L2F, a two-stage collision avoidance method that consists of: 1) a learning-based decision-making scheme and 2) a distributed, linear programming-based UAS control algorithm. Through extensive simulations, we show the real-time applicability of our method, which is $\approx\!6000\times$ faster than the MILP approach and can resolve $100\%$ of collisions when there is ample room to maneuver, and shows graceful degradation in performance otherwise. We also compare L2F to two other methods and demonstrate an implementation on quad-rotor robots.
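
For readers unfamiliar with Signal Temporal Logic (STL) mission objectives, the sketch below evaluates the standard quantitative (robustness) semantics of a simple reach-avoid requirement on a sampled 2D trajectory: "eventually enter the goal disc and always stay outside the obstacle disc". It illustrates STL semantics only and is not the L2F method. The disc-shaped regions, the function name, and the sample trajectories are assumptions made for the example.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def reach_avoid_robustness(
    traj: List[Point],
    goal: Point,
    goal_radius: float,
    obstacle: Point,
    obstacle_radius: float,
) -> float:
    """Robustness of (eventually in goal) AND (always outside obstacle).

    A positive value means the sampled trajectory satisfies the
    requirement; a negative value means it violates it.
    """
    # "Eventually": max over time of the signed margin inside the goal disc.
    reach = max(goal_radius - math.dist(p, goal) for p in traj)
    # "Always": min over time of the signed margin outside the obstacle disc.
    avoid = min(math.dist(p, obstacle) - obstacle_radius for p in traj)
    # Conjunction: min of the two sub-formula robustness values.
    return min(reach, avoid)


goal, obstacle = (3.0, 0.0), (1.5, 1.0)
safe_traj = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
unsafe_traj = [(0.0, 0.0), (1.5, 1.0), (3.0, 0.0)]  # flies through the obstacle

rho_safe = reach_avoid_robustness(safe_traj, goal, 0.5, obstacle, 0.5)
rho_unsafe = reach_avoid_robustness(unsafe_traj, goal, 0.5, obstacle, 0.5)
print(rho_safe, rho_unsafe)
```

The sign of the result tells whether the mission is met, and its magnitude indicates how much margin a collision-avoidance maneuver can consume before the mission is violated.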

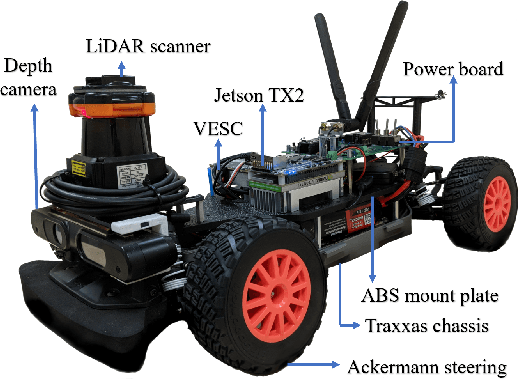

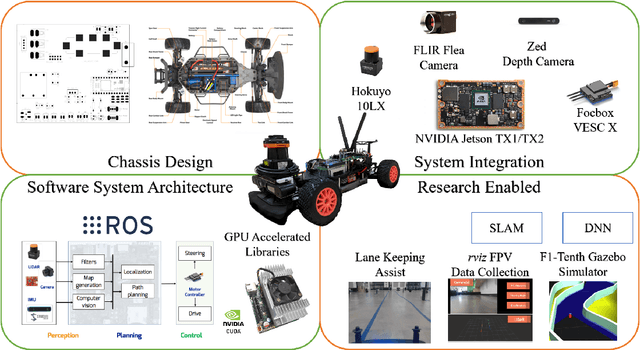

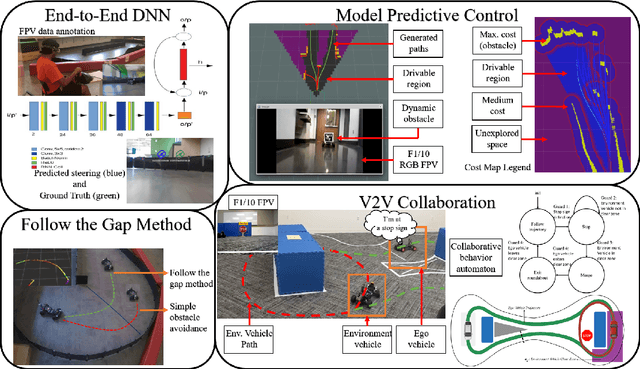

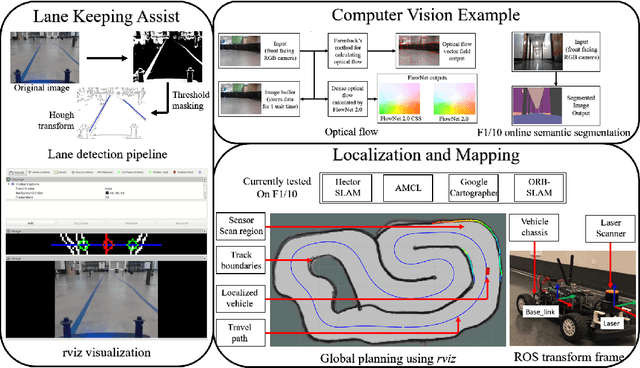

F1/10: An Open-Source Autonomous Cyber-Physical Platform

Jan 24, 2019

Abstract:In 2005 DARPA labeled the realization of viable autonomous vehicles (AVs) a grand challenge; a short time later the idea became a moonshot that could change the automotive industry. Today, the question of safety stands between reality and solved. Given the right platform the CPS community is poised to offer unique insights. However, testing the limits of safety and performance on real vehicles is costly and hazardous. The use of such vehicles is also outside the reach of most researchers and students. In this paper, we present F1/10: an open-source, affordable, and high-performance 1/10 scale autonomous vehicle testbed. The F1/10 testbed carries a full suite of sensors, perception, planning, control, and networking software stacks that are similar to full scale solutions. We demonstrate key examples of the research enabled by the F1/10 testbed, and how the platform can be used to augment research and education in autonomous systems, making autonomy more accessible.
