Hoeseok Yang

Elastic-DETR: Making Image Resolution Learnable with Content-Specific Network Prediction

Dec 09, 2024

Abstract: Multi-scale image resolution is a de facto standard approach in modern object detectors, such as DETR. This technique allows for the acquisition of various scale information from multiple image resolutions. However, manually selecting the resolution as a hyperparameter, informed by prior knowledge, restricts its flexibility and requires human intervention. This work introduces a novel strategy for learnable resolution, called Elastic-DETR, enabling elastic utilization of multiple image resolutions. Our network provides an adaptive scale factor based on the content of the image with a compact scale prediction module (< 2 GFLOPs). The key aspect of our method lies in how to determine the resolution without prior knowledge. We present two loss functions derived from identified key components for resolution optimization: scale loss, which increases adaptiveness according to the image, and distribution loss, which determines the overall degree of scaling based on network performance. By leveraging the resolution's flexibility, we can demonstrate various models that exhibit varying trade-offs between accuracy and computational complexity. We empirically show that our scheme can unleash the potential of a wide spectrum of image resolutions without constraining flexibility. Our models on MS COCO achieve a maximum accuracy gain of 3.5%p or a 26% decrease in computation compared with MS-trained DN-DETR.

Simplifying Two-Stage Detectors for On-Device Inference in Remote Sensing

Apr 11, 2024

Abstract: Deep learning has been successfully applied to object detection from remotely sensed images. Images are typically processed on the ground rather than on-board due to the computation power of the ground system. Such offloaded processing causes delays in acquiring target mission information, which hinders its application to real-time use cases. For on-device object detection, research has been conducted on designing efficient detectors or model compression to reduce inference latency. However, highly accurate two-stage detectors still need further exploitation for acceleration. In this paper, we propose a model simplification method for two-stage object detectors. Instead of constructing a general feature pyramid, we utilize only one feature extraction in the two-stage detector. To compensate for the accuracy drop, we apply a high pass filter to the RPN's score map. Our approach is applicable to any two-stage detector using a feature pyramid network. In the experiments with state-of-the-art two-stage detectors such as ReDet, Oriented-RCNN, and LSKNet, our method reduced computation costs by up to 61.2% with accuracy loss within 2.1% on the DOTAv1.5 dataset. Source code will be released.

DyRA: Dynamic Resolution Adjustment for Scale-robust Object Detection

Dec 07, 2023

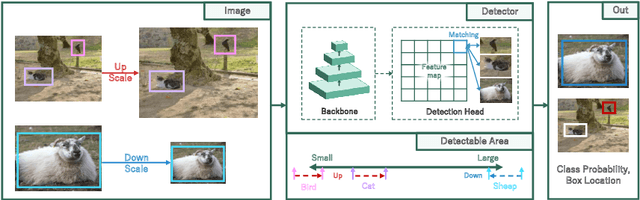

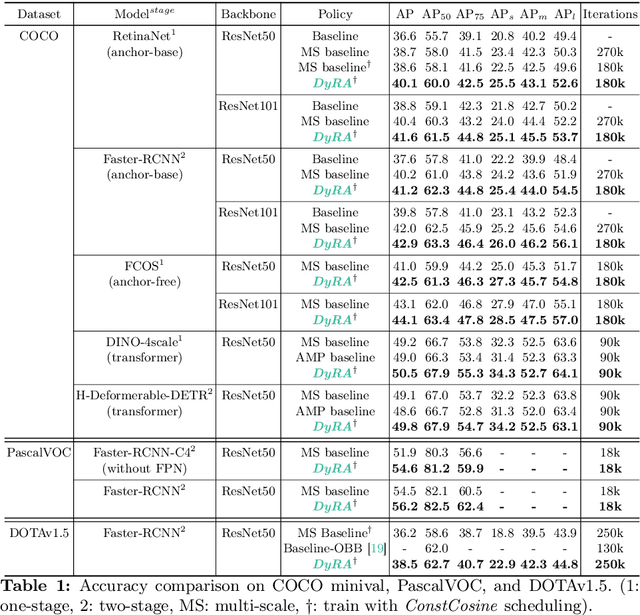

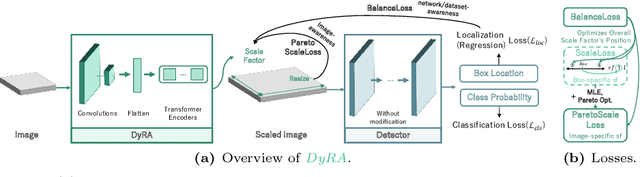

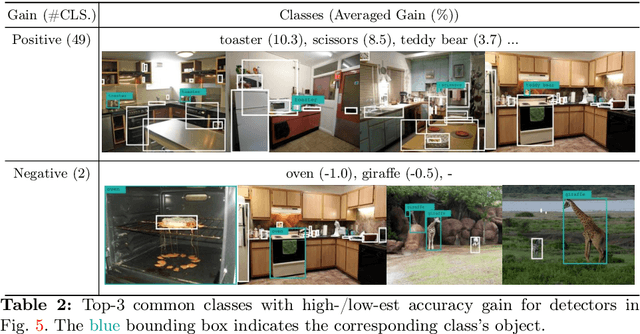

Abstract: In object detection, achieving constant accuracy is challenging due to the variability of object sizes. One possible solution to this problem is to optimize the input resolution, known as a multi-resolution strategy. Previous approaches for optimizing resolution are often based on pre-defined resolutions or a dynamic neural network, but run-time resolution optimization for existing architectures has received little study. In this paper, we propose an adaptive resolution scaling network called DyRA, which comprises convolutions and transformer encoder blocks, for existing detectors. Our DyRA returns a scale factor from an input image, which enables instance-specific scaling. This network is jointly trained with detectors with specially designed loss functions, namely ParetoScaleLoss and BalanceLoss. The ParetoScaleLoss produces an adaptive scale factor from the image, while the BalanceLoss optimizes the scale factor according to localization power for the dataset. The loss function is designed to minimize the accuracy drop caused by the contrasting objectives of small and large objects. In our experiments on COCO, RetinaNet, Faster-RCNN, FCOS, and Mask-RCNN achieved accuracy improvements of 1.3%, 1.1%, 1.3%, and 0.8%, respectively, over a multi-resolution baseline with solely resolution adjustment. The code is available at https://github.com/DaEunFullGrace/DyRA.git.

Machine Learning-Driven Burrowing with a Snake-Like Robot

Sep 19, 2023

Abstract: Subterranean burrowing is inherently difficult for robots because of the high forces experienced as well as the high amount of uncertainty in this domain. Because of the difficulty in modeling forces in granular media, we propose the use of a novel machine-learning control strategy to obtain optimal techniques for vertical self-burrowing. In this paper, we realize a snake-like bio-inspired robot that is equipped with an IMU and two triple-axis magnetometers. Utilizing magnetic field strength as an analog for depth, a novel deep learning architecture was proposed based on sinusoidal and random data in order to obtain a more efficient strategy for vertical self-burrowing. This strategy was able to outperform many other standard burrowing techniques and was able to automatically reach targeted burrowing depths. We hope these results will serve as a proof of concept for how optimization can be used to unlock the secrets of navigating in the subterranean world more efficiently.

EURETILE D7.3 - Dynamic DAL benchmark coding, measurements on MPI version of DPSNN-STDP (distributed plastic spiking neural net) and improvements to other DAL codes

Aug 20, 2014

Abstract: The EURETILE project required the selection and coding of a set of dedicated benchmarks. The project is about the software and hardware architecture of future many-tile distributed fault-tolerant systems. We focus on dynamic workloads characterised by heavy numerical processing requirements. The ambition is to identify common techniques that could be applied to both the Embedded Systems and HPC domains. This document is the first public deliverable of Work Package 7: Challenging Tiled Applications.
