Henrik Wann Jensen

An Improved NeuMIP with Better Accuracy

Jul 19, 2023

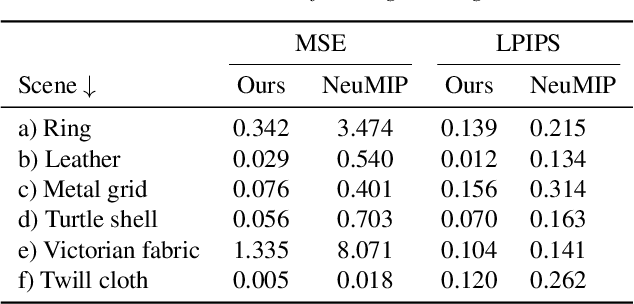

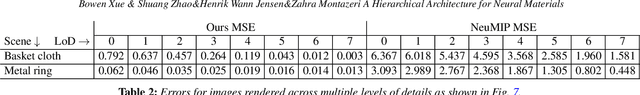

Abstract: Neural reflectance models are capable of accurately reproducing the spatially-varying appearance of many real-world materials at different scales. However, existing methods have difficulties handling highly glossy materials. To address this problem, we introduce a new neural reflectance model which, compared with existing methods, better preserves not only specular highlights but also fine-grained details. To this end, we enhance the neural network performance by encoding input data to frequency space, inspired by NeRF, to better preserve the details. Furthermore, we introduce a gradient-based loss and employ it in multiple stages, adaptive to the progress of the learning phase. Lastly, we utilize an optional extension to the decoder network using the Inception module for more accurate yet costly performance. We demonstrate the effectiveness of our method using a variety of synthetic and real examples.
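The frequency-space encoding mentioned in the abstract can be illustrated with a NeRF-style positional encoding. The following is only a minimal sketch: the function name `frequency_encode`, the parameter `num_bands`, and the choice of four bands are assumptions made for illustration, and the paper's actual encoding and inputs may differ.

```python
import math


def frequency_encode(values, num_bands=4):
    """Encode each scalar v as sin(2^k * pi * v), cos(2^k * pi * v) for k in [0, num_bands).

    Hypothetical helper in the style of NeRF positional encoding; not taken
    from the paper's implementation.
    """
    encoded = []
    for v in values:
        for k in range(num_bands):
            angle = (2.0 ** k) * math.pi * v
            encoded.append(math.sin(angle))
            encoded.append(math.cos(angle))
    return encoded


# Example: a 2D texture coordinate becomes a 2 * 2 * num_bands feature vector.
features = frequency_encode([0.25, 0.5], num_bands=4)
```

Higher bands oscillate faster across the input range, which gives a network direct access to high-frequency variation that it would otherwise tend to smooth out.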

Photon-Driven Neural Path Guiding

Oct 05, 2020

Abstract: Although Monte Carlo path tracing is a simple and effective algorithm to synthesize photo-realistic images, it is often very slow to converge to noise-free results when involving complex global illumination. One of the most successful variance-reduction techniques is path guiding, which can learn better distributions for importance sampling to reduce pixel noise. However, previous methods require a large number of path samples to achieve reliable path guiding. We present a novel neural path guiding approach that can reconstruct high-quality sampling distributions for path guiding from a sparse set of samples, using an offline trained neural network. We leverage photons traced from light sources as the input for sampling density reconstruction, which is highly effective for challenging scenes with strong global illumination. To fully make use of our deep neural network, we partition the scene space into an adaptive hierarchical grid, in which we apply our network to reconstruct high-quality sampling distributions for any local region in the scene. This allows for highly efficient path guiding for any path bounce at any location in path tracing. We demonstrate that our photon-driven neural path guiding method can generalize well on diverse challenging testing scenes that are not seen in training. Our approach achieves significantly better rendering results of testing scenes than previous state-of-the-art path guiding methods.

Deep Photon Mapping

Apr 25, 2020

Abstract: Recently, deep learning-based denoising approaches have led to dramatic improvements in low sample-count Monte Carlo rendering. These approaches are aimed at path tracing, which is not ideal for simulating challenging light transport effects like caustics, where photon mapping is the method of choice. However, photon mapping requires very large numbers of traced photons to achieve high-quality reconstructions. In this paper, we develop the first deep learning-based method for particle-based rendering, and specifically focus on photon density estimation, the core of all particle-based methods. We train a novel deep neural network to predict a kernel function to aggregate photon contributions at shading points. Our network encodes individual photons into per-photon features, aggregates them in the neighborhood of a shading point to construct a photon local context vector, and infers a kernel function from the per-photon and photon local context features. This network is easy to incorporate in many previous photon mapping methods (by simply swapping the kernel density estimator) and can produce high-quality reconstructions of complex global illumination effects like caustics with an order of magnitude fewer photons compared to previous photon mapping methods.
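For context, the kernel density estimator that the abstract proposes to swap out can be sketched as a classical k-nearest-neighbor photon density estimate. This is not the paper's network: the names `uniform_kernel` and `estimate_density`, the 2D surface setup, and the `kernel` parameter are assumptions for illustration, with `kernel` marking the place where a learned kernel function could hypothetically be plugged in.

```python
import math


def uniform_kernel(distance, radius):
    """Constant kernel over a disc of the given radius (integrates to 1 over the disc)."""
    return 1.0 / (math.pi * radius * radius) if distance <= radius else 0.0


def estimate_density(shading_point, photons, k=3, kernel=uniform_kernel, min_radius=1e-9):
    """Classical k-nearest-neighbor flux density estimate on a 2D surface.

    photons: list of ((x, y), flux) pairs. Hypothetical layout, for illustration only.
    """
    by_distance = sorted((math.dist(shading_point, pos), flux) for pos, flux in photons)
    nearest = by_distance[:k]
    if not nearest:
        return 0.0
    # The search radius is the distance to the k-th nearest photon.
    radius = max(nearest[-1][0], min_radius)
    return sum(flux * kernel(d, radius) for d, flux in nearest)


photons = [((1.0, 0.0), 1.0), ((0.0, 1.0), 1.0), ((2.0, 0.0), 1.0), ((5.0, 5.0), 1.0)]
density = estimate_density((0.0, 0.0), photons, k=3)
```

With a fixed kernel like this one, reducing noise requires many photons per shading point, which is the cost the abstract says a predicted kernel is meant to lower.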
