Harideep Nair

Exploration of Unary Arithmetic-Based Matrix Multiply Units for Low Precision DL Accelerators

Jan 31, 2026

Abstract:General matrix multiplication (GEMM) is a fundamental operation in deep learning (DL). With DL moving increasingly toward low precision, recent works have proposed novel unary GEMM designs as an alternative to conventional binary GEMM hardware. A rigorous evaluation of recent unary and binary GEMM designs is needed to assess the potential of unary hardware for future DL compute. This paper focuses on unary GEMM designs for integer-based DL inference and performs a detailed evaluation of the three latest unary design proposals, namely uGEMM, tuGEMM and tubGEMM, by comparing them to a conventional binary GEMM. Rigorous post-synthesis evaluations beyond prior works are performed across varying bit-widths and matrix sizes to assess the designs' tradeoffs and determine optimal sweet spots. Further, we perform weight sparsity analysis across eight pretrained convolutional neural networks (CNNs) and the LLaMA2 large language model (LLM). In this work, we demonstrate how unary GEMM can be effectively used for energy-efficient compute in future edge AI accelerators.

Tempus Core: Area-Power Efficient Temporal-Unary Convolution Core for Low-Precision Edge DLAs

Dec 25, 2024

Abstract:The increasing complexity of deep neural networks (DNNs) poses significant challenges for edge inference deployment due to resource and power constraints of edge devices. Recent works on unary-based matrix multiplication hardware aim to leverage data sparsity and low-precision values to enhance hardware efficiency. However, the adoption and integration of such unary hardware into commercial deep learning accelerators (DLAs) remain limited due to processing element (PE) array dataflow differences. This work presents Tempus Core, a convolution core with a highly scalable unary-based PE array comprising tub (temporal-unary-binary) multipliers that seamlessly integrates with the NVDLA (NVIDIA's open-source DLA for accelerating CNNs) while maintaining dataflow compliance and boosting hardware efficiency. Analysis across various datapath granularities shows that for INT8 precision in 45nm CMOS, Tempus Core's PE cell unit (PCU) yields 59.3% and 15.3% reductions in area and power consumption, respectively, over NVDLA's CMAC unit. Considering a 16x16 PE array in Tempus Core, area and power improve by 75% and 62%, respectively, while delivering 5x and 4x iso-area throughput improvements for INT8 and INT4 precisions. Post-place and route analysis of Tempus Core's PCU shows that the 16x4 PE array for INT4 precision in 45nm CMOS requires only 0.017 mm^2 die area and consumes only 6.2 mW of total power. We demonstrate that area-power efficient unary-based hardware can be seamlessly integrated into conventional DLAs, paving the path for efficient unary hardware for edge AI inference.
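As a rough behavioural illustration of the temporal-unary-binary idea named in the abstract above, the sketch below models a multiplier in which one operand is streamed as a unary pulse train (one cycle per unit of magnitude) while the other stays in binary and is accumulated on each cycle. The function name `tub_multiply`, the sign handling, and the cycle count are assumptions made for illustration; this is not the Tempus Core RTL or its dataflow.

```python
from typing import Tuple


def tub_multiply(unary_operand: int, binary_operand: int) -> Tuple[int, int]:
    """Hypothetical behavioural model; returns (product, cycles_used)."""
    magnitude = abs(unary_operand)          # length of the unary pulse train
    sign = -1 if unary_operand < 0 else 1   # assumed: sign handled separately
    accumulator = 0
    cycles = 0
    for _ in range(magnitude):
        accumulator += binary_operand       # one add of the binary operand per cycle
        cycles += 1
    return sign * accumulator, cycles


if __name__ == "__main__":
    for a, b in [(3, 5), (-4, 7), (0, 9)]:
        product, cycles = tub_multiply(a, b)
        print(f"{a} x {b} = {product} in {cycles} cycles")
```

In this simplified model a zero operand takes zero cycles and small magnitudes finish early, which is one way to see why sparsity and low precision could favour such a scheme.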

TNNGen: Automated Design of Neuromorphic Sensory Processing Units for Time-Series Clustering

Dec 23, 2024

Abstract:Temporal Neural Networks (TNNs), a special class of spiking neural networks, draw inspiration from the neocortex in utilizing spike-timings for information processing. Recent works proposed a microarchitecture framework and custom macro suite for designing highly energy-efficient application-specific TNNs. These recent works rely on manual hardware design, a labor-intensive and time-consuming process. Further, there is no open-source functional simulation framework for TNNs. This paper introduces TNNGen, a pioneering effort towards the automated design of TNNs from PyTorch software models to post-layout netlists. TNNGen comprises a novel PyTorch functional simulator (for TNN modeling and application exploration) coupled with a Python-based hardware generator (for PyTorch-to-RTL and RTL-to-Layout conversions). Seven representative TNN designs for time-series signal clustering across diverse sensory modalities are simulated and their post-layout hardware complexity and design runtimes are assessed to demonstrate the effectiveness of TNNGen. We also highlight TNNGen's ability to accurately forecast silicon metrics without running hardware process flow.

tuGEMM: Area-Power-Efficient Temporal Unary GEMM Architecture for Low-Precision Edge AI

Dec 23, 2024

Abstract:General matrix multiplication (GEMM) is a ubiquitous computing kernel/algorithm for data processing in diverse applications, including artificial intelligence (AI) and deep learning (DL). The recent shift towards edge computing has inspired GEMM architectures based on unary computing, which are predominantly stochastic and rate-coded systems. This paper proposes a novel GEMM architecture based on temporal coding, called tuGEMM, that performs exact computation. We introduce two variants of tuGEMM, serial and parallel, with distinct area/power-latency trade-offs. Post-synthesis Power-Performance-Area (PPA) results in 45 nm CMOS are reported for 2-bit, 4-bit, and 8-bit computations. The designs illustrate significant advantages in area-power efficiency over state-of-the-art stochastic unary systems, especially at low precisions, e.g. incurring just 0.03 mm^2 and 9 mW for 4 bits, and 0.01 mm^2 and 4 mW for 2 bits. This makes tuGEMM ideal for power-constrained mobile and edge devices performing always-on real-time sensory processing.

TNN7: A Custom Macro Suite for Implementing Highly Optimized Designs of Neuromorphic TNNs

May 16, 2022

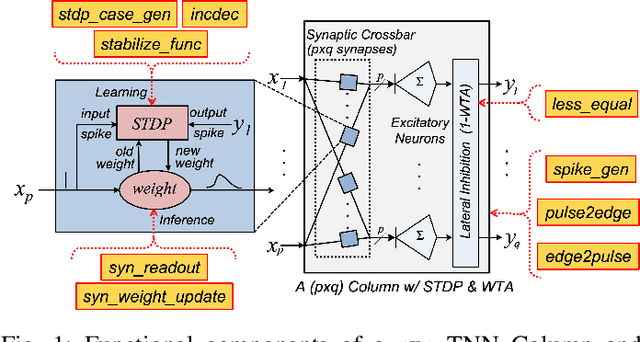

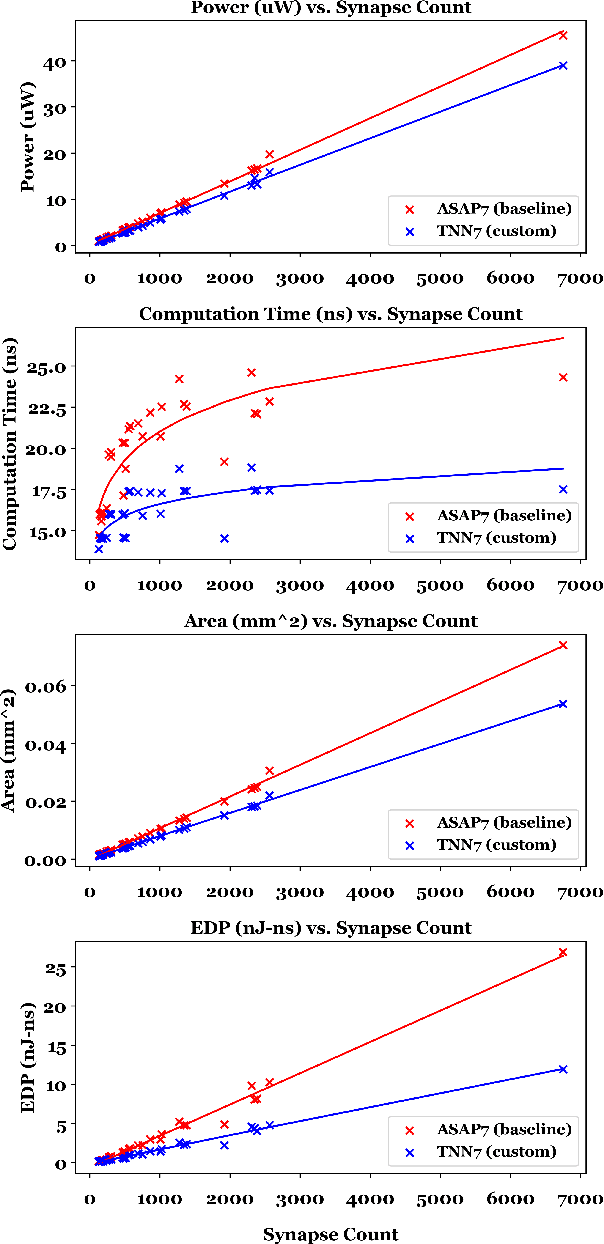

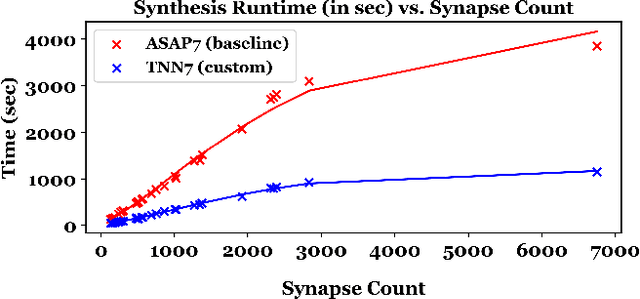

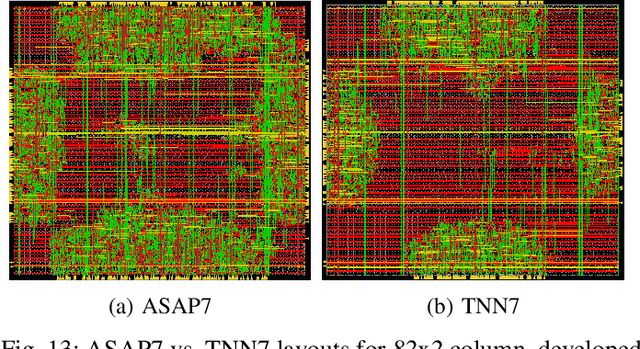

Abstract:Temporal Neural Networks (TNNs), inspired by the mammalian neocortex, exhibit energy-efficient online sensory processing capabilities. Recent works have proposed a microarchitecture design framework for implementing TNNs and demonstrated competitive performance on vision and time-series applications. Building on them, this work proposes TNN7, a suite of nine highly optimized custom macros developed using a predictive 7nm Process Design Kit (PDK), to enhance the efficiency, modularity and flexibility of the TNN design framework. TNN prototypes for two applications are used for evaluation of TNN7. An unsupervised time-series clustering TNN delivering competitive performance can be implemented within 40 uW power and 0.05 mm^2 area, while a 4-layer TNN that achieves an MNIST error rate of 1% consumes only 18 mW and 24.63 mm^2. On average, the proposed macros reduce power, delay, area, and energy-delay product by 14%, 16%, 28%, and 45%, respectively. Furthermore, employing TNN7 significantly reduces the synthesis runtime of TNN designs (by more than 3x), allowing for highly-scaled TNN implementations to be realized.

A Microarchitecture Implementation Framework for Online Learning with Temporal Neural Networks

Jun 02, 2021

Abstract:Temporal Neural Networks (TNNs) are spiking neural networks that use time as a resource to represent and process information, similar to the mammalian neocortex. In contrast to compute-intensive deep neural networks that employ separate training and inference phases, TNNs are capable of extremely efficient online incremental/continual learning and are excellent candidates for building edge-native sensory processing units. This work proposes a microarchitecture framework for implementing TNNs using standard CMOS. Gate-level implementations of three key building blocks are presented: 1) multi-synapse neurons, 2) multi-neuron columns, and 3) unsupervised and supervised online learning algorithms based on Spike Timing Dependent Plasticity (STDP). The proposed microarchitecture is embodied in a set of characteristic scaling equations for assessing the gate count, area, delay and power for any TNN design. Post-synthesis results (in 45nm CMOS) for the proposed designs are presented, and their online incremental learning capability is demonstrated.

Unsupervised Clustering of Time Series Signals using Neuromorphic Energy-Efficient Temporal Neural Networks

Feb 18, 2021

Abstract:Unsupervised time series clustering is a challenging problem with diverse industrial applications such as anomaly detection, bio-wearables, etc. These applications typically involve small, low-power devices on the edge that collect and process real-time sensory signals. State-of-the-art time-series clustering methods perform some form of loss minimization that is extremely computationally intensive from the perspective of edge devices. In this work, we propose a neuromorphic approach to unsupervised time series clustering based on Temporal Neural Networks that is capable of ultra low-power, continuous online learning. We demonstrate its clustering performance on a subset of UCR Time Series Archive datasets. Our results show that the proposed approach either outperforms or performs similarly to most of the existing algorithms while being far more amenable to efficient hardware implementation. Our hardware assessment analysis shows that in 7 nm CMOS the proposed architecture, on average, consumes only about 0.005 mm^2 die area and 22 uW power and can process each signal with about 5 ns latency.

A Custom 7nm CMOS Standard Cell Library for Implementing TNN-based Neuromorphic Processors

Dec 10, 2020

Abstract:A set of highly-optimized custom macro extensions is developed for a 7nm CMOS cell library for implementing Temporal Neural Networks (TNNs) that can mimic brain-like sensory processing with extreme energy efficiency. A TNN prototype (13,750 neurons and 315,000 synapses) for MNIST requires only 1.56 mm^2 die area and consumes only 1.69 mW.

Direct CMOS Implementation of Neuromorphic Temporal Neural Networks for Sensory Processing

Aug 27, 2020

Abstract:Temporal Neural Networks (TNNs) use time as a resource to represent and process information, mimicking the behavior of the mammalian neocortex. This work focuses on implementing TNNs using off-the-shelf digital CMOS technology. A microarchitecture framework is introduced with a hierarchy of building blocks including multi-neuron columns, multi-column layers, and multi-layer TNNs. We present the direct CMOS gate-level implementation of the multi-neuron column model as the key building block for TNNs. Post-synthesis results are obtained using Synopsys tools and a 45 nm CMOS standard cell library. The TNN microarchitecture framework is embodied in a set of characteristic equations for assessing the total gate count, die area, compute time, and power consumption for any TNN design. We develop a multi-layer TNN prototype of 32M gates. In a 7 nm CMOS process, it consumes only 1.54 mm^2 die area and 7.26 mW power and can process 28x28 images at 107M FPS (9.34 ns per image). We evaluate the prototype's performance and complexity relative to a recent state-of-the-art TNN model.
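The throughput figure quoted above follows from the per-image latency. A quick check, assuming images are processed one after another with no overlap (the variable names are illustrative only):

```python
# Convert the quoted per-image latency into frames per second.
latency_ns = 9.34                      # per-image compute time from the abstract
frames_per_second = 1e9 / latency_ns   # 1 second = 1e9 ns
fps_millions = frames_per_second / 1e6

print(f"{fps_millions:.1f}M FPS")      # prints "107.1M FPS"
```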

Hardware Aware Neural Network Architectures using FbNet

Jun 17, 2019

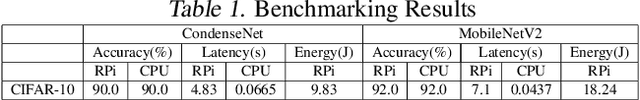

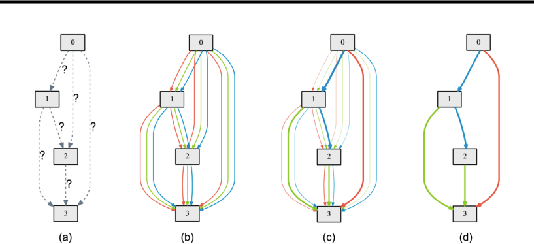

Abstract:We implement a differentiable Neural Architecture Search (NAS) method inspired by FBNet for discovering neural networks that are heavily optimized for a particular target device. The FBNet NAS method discovers a neural network from a given search space by optimizing over a loss function which accounts for accuracy and target device latency. We extend this loss function by adding an energy term. This will potentially enhance the "hardware awareness" and help us find a neural network architecture that is optimal in terms of accuracy, latency and energy consumption, given a target device (Raspberry Pi in our case). We name the trained child architecture obtained at the end of the search process Hardware Aware Neural Network Architecture (HANNA). We demonstrate the efficacy of our approach by benchmarking HANNA against two other state-of-the-art neural networks designed for mobile/embedded applications, namely MobileNetv2 and CondenseNet, on the CIFAR-10 dataset. Our results show that HANNA provides a speedup of about 2.5x and 1.7x, and reduces energy consumption by 3.8x and 2x compared to MobileNetv2 and CondenseNet, respectively. HANNA is able to provide such significant speedup and energy efficiency benefits over the state-of-the-art baselines at the cost of a tolerable 4-5% drop in accuracy.
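The abstract does not give the functional form of the extended loss. As a minimal sketch only, the snippet below assumes a simple weighted sum of an accuracy loss, a latency estimate, and an energy estimate; the function name `hardware_aware_loss`, the weights, and the units are all hypothetical and are not taken from the paper or from FBNet.

```python
def hardware_aware_loss(
    accuracy_loss: float,
    latency_ms: float,
    energy_mj: float,
    latency_weight: float = 0.1,
    energy_weight: float = 0.1,
) -> float:
    """Assumed weighted-sum form: accuracy loss plus latency and energy penalties."""
    if latency_ms < 0 or energy_mj < 0:
        raise ValueError("latency and energy estimates must be non-negative")
    return accuracy_loss + latency_weight * latency_ms + energy_weight * energy_mj


if __name__ == "__main__":
    # Two hypothetical candidates with equal accuracy loss and latency:
    # the one with the lower energy estimate receives the lower loss.
    print(hardware_aware_loss(0.5, latency_ms=20.0, energy_mj=8.0))
    print(hardware_aware_loss(0.5, latency_ms=20.0, energy_mj=4.0))
```

Setting `energy_weight` to zero recovers an accuracy-plus-latency objective, which makes the effect of the added energy term easy to isolate in this toy form.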
