Hamid Zafar

IQA: Interactive Query Construction in Semantic Question Answering Systems

Jun 25, 2020

Abstract: Semantic Question Answering (SQA) systems automatically interpret user questions expressed in natural language as semantic queries. This process involves uncertainty, so the resulting queries do not always match the user's intent, especially for more complex and less common questions. In this article, we aim to empower users to guide SQA systems towards the intended semantic queries through interaction. We introduce IQA, an interaction scheme for SQA pipelines. This scheme facilitates seamless integration of user feedback into the question answering process and relies on Option Gain, a novel metric that enables efficient and intuitive user interaction. Our evaluation shows that, using the proposed scheme, even a small number of user interactions can lead to significant improvements in the performance of SQA systems.

MDP-based Shallow Parsing in Distantly Supervised QA Systems

Sep 27, 2019

Abstract: Question answering systems over knowledge graphs commonly consist of multiple components, such as a shallow parser, an entity/relation linker, query generation, and answer retrieval. We focus on the first task, shallow parsing, which has so far received little attention in the QA community. Despite the lack of gold annotations for shallow parsing in question answering datasets, we devise a Reinforcement Learning based model called MDP-Parser and show that it outperforms the current state-of-the-art approaches. Furthermore, it can be easily embedded into existing entity/relation linking tools to boost their overall accuracy.

ML-Schema: Exposing the Semantics of Machine Learning with Schemas and Ontologies

Jul 14, 2018

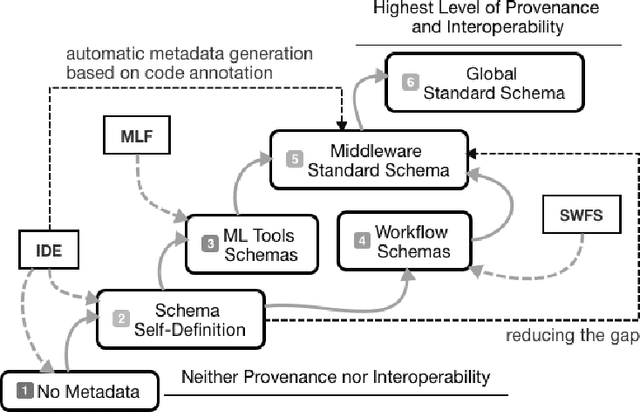

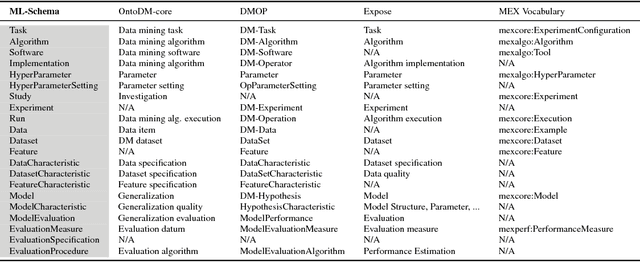

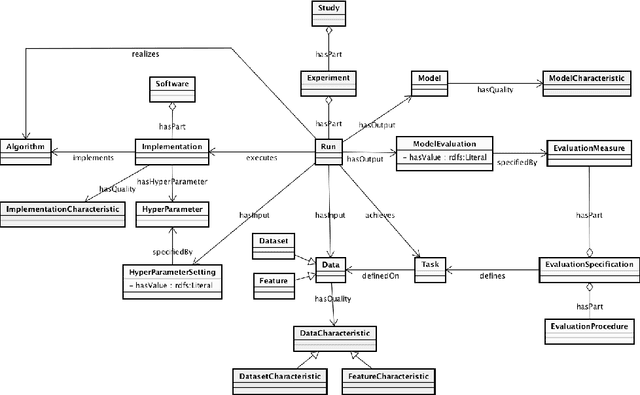

Abstract: ML-Schema, proposed by the W3C Machine Learning Schema Community Group, is a top-level ontology that provides a set of classes, properties, and restrictions for representing and interchanging information on machine learning algorithms, datasets, and experiments. It can be easily extended and specialized, and it is also mapped to other, more domain-specific ontologies developed in the area of machine learning and data mining. In this paper we give an overview of existing state-of-the-art machine learning interchange formats and present the first release of ML-Schema, a canonical format resulting from more than seven years of experience among different research institutions. We argue that exposing the semantics of machine learning algorithms, models, and experiments through a canonical format may pave the way to better interpretability and to realistically achieving full interoperability of experiments regardless of platform or adopted workflow solution.
