Hai Victor Habi

Inverse Problem Sampling in Latent Space Using Sequential Monte Carlo

Feb 09, 2025

Abstract: In image processing, solving inverse problems is the task of finding plausible reconstructions of an image that was corrupted by some (usually known) degradation model. Commonly, this process is done using a generative image model that can guide the reconstruction towards solutions that appear natural. The success of diffusion models over the last few years has made them a leading candidate for this task. However, the sequential nature of diffusion models makes this conditional sampling process challenging. Furthermore, since diffusion models are often defined in the latent space of an autoencoder, the encoder-decoder transformations introduce additional difficulties. Here, we suggest a novel sampling method based on sequential Monte Carlo (SMC) in the latent space of diffusion models. We use the forward process of the diffusion model to add auxiliary observations and then perform SMC sampling as part of the backward process. Empirical evaluations on ImageNet and FFHQ show the benefits of our approach over competing methods on various inverse problem tasks.
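For readers unfamiliar with SMC, the reweight-and-resample step at the core of a generic SMC sampler can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's latent-space algorithm: the function names are hypothetical, particles are plain Python values, and multinomial resampling is chosen only for simplicity.

```python
import math
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def normalize_log_weights(log_weights: Sequence[float]) -> List[float]:
    """Turn unnormalized log-weights into probabilities that sum to 1."""
    peak = max(log_weights)  # subtract the max for numerical stability
    weights = [math.exp(lw - peak) for lw in log_weights]
    total = sum(weights)
    return [w / total for w in weights]


def effective_sample_size(weights: Sequence[float]) -> float:
    """ESS = 1 / sum(w_i^2); equals the particle count for uniform weights."""
    return 1.0 / sum(w * w for w in weights)


def multinomial_resample(particles: Sequence[T], weights: Sequence[float],
                         rng: random.Random) -> List[T]:
    """Draw len(particles) particles with replacement, proportional to weight."""
    return rng.choices(list(particles), weights=list(weights), k=len(particles))


rng = random.Random(0)
particles = ["a", "b", "c", "d"]          # stand-ins for latent samples
log_w = [-1.0, -0.5, -3.0, -0.2]          # illustrative log-likelihood scores
weights = normalize_log_weights(log_w)
if effective_sample_size(weights) < 0.5 * len(particles):
    particles = multinomial_resample(particles, weights, rng)
```

A common design choice, used here, is to resample only when the effective sample size drops below a fraction of the particle count, which limits the extra variance that resampling introduces.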

Efficient Image Restoration via Latent Consistency Flow Matching

Feb 05, 2025

Abstract: Recent advances in generative image restoration (IR) have demonstrated impressive results. However, these methods are hindered by their substantial size and computational demands, rendering them unsuitable for deployment on edge devices. This work introduces ELIR, an Efficient Latent Image Restoration method. ELIR operates in latent space by first predicting the latent representation of the minimum mean square error (MMSE) estimator and then transporting this estimate to high-quality images using a latent consistency flow-based model. Consequently, ELIR is more than 4x faster compared to the state-of-the-art diffusion and flow-based approaches. Moreover, ELIR is also more than 4x smaller, making it well-suited for deployment on resource-constrained edge devices. Comprehensive evaluations of various image restoration tasks show that ELIR achieves competitive results, effectively balancing distortion and perceptual quality metrics while offering improved efficiency in terms of memory and computation.

Learned Bayesian Cramér-Rao Bound for Unknown Measurement Models Using Score Neural Networks

Feb 02, 2025

Abstract: The Bayesian Cramér-Rao bound (BCRB) is a crucial tool in signal processing for assessing the fundamental limitations of any estimation problem as well as benchmarking within a Bayesian framework. However, the BCRB cannot be computed without full knowledge of the prior and the measurement distributions. In this work, we propose a fully learned Bayesian Cramér-Rao bound (LBCRB) that learns both the prior and the measurement distributions. Specifically, we suggest two approaches to obtain the LBCRB: the Posterior Approach and the Measurement-Prior Approach. The Posterior Approach provides a simple method to obtain the LBCRB, whereas the Measurement-Prior Approach enables us to incorporate domain knowledge to improve the sample complexity and interpretability. To achieve this, we introduce a Physics-encoded score neural network which enables us to easily incorporate such domain knowledge into a neural network. We study the learning errors of the two suggested approaches theoretically, and validate them numerically. We demonstrate the two approaches on several signal processing examples, including a linear measurement problem with unknown mixing and Gaussian noise covariance matrices, frequency estimation, and quantized measurement. In addition, we test our approach on a nonlinear signal processing problem of frequency estimation with real-world underwater ambient noise.

Data Generation for Hardware-Friendly Post-Training Quantization

Oct 29, 2024

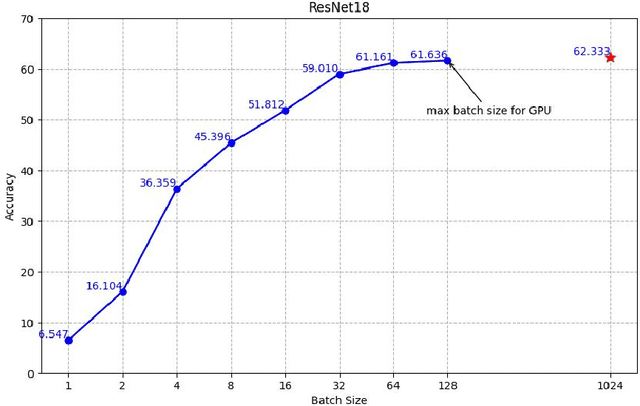

Abstract: Zero-shot quantization (ZSQ) using synthetic data is a key approach for post-training quantization (PTQ) under privacy and security constraints. However, existing data generation methods often struggle to effectively generate data suitable for hardware-friendly quantization, where all model layers are quantized. We analyze existing data generation methods based on batch normalization (BN) matching and identify several gaps between synthetic and real data: 1) Current generation algorithms do not optimize the entire synthetic dataset simultaneously; 2) Data augmentations applied during training are often overlooked; and 3) A distribution shift occurs in the final model layers due to the absence of BN in those layers. These gaps negatively impact ZSQ performance, particularly in hardware-friendly quantization scenarios. In this work, we propose Data Generation for Hardware-friendly quantization (DGH), a novel method that addresses these gaps. DGH jointly optimizes all generated images, regardless of the image set size or GPU memory constraints. To address data augmentation mismatches, DGH includes a preprocessing stage that mimics the augmentation process and enhances image quality by incorporating natural image priors. Finally, we propose a new distribution-stretching loss that aligns the support of the feature map distribution between real and synthetic data. This loss is applied to the model's output and can be adapted to various tasks. DGH demonstrates significant improvements in quantization performance across multiple tasks, achieving up to a 30% increase in accuracy for hardware-friendly ZSQ in both classification and object detection, often performing on par with real data.

EPTQ: Enhanced Post-Training Quantization via Label-Free Hessian

Sep 20, 2023

Abstract: Quantization of deep neural networks (DNN) has become a key element in the efforts of embedding such networks on end-user devices. However, current quantization methods usually suffer from costly accuracy degradation. In this paper, we propose a new method for Enhanced Post Training Quantization named EPTQ. The method is based on knowledge distillation with an adaptive weighting of layers. In addition, we introduce a new label-free technique for approximating the Hessian trace of the task loss, named Label-Free Hessian. This technique removes the requirement of a labeled dataset for computing the Hessian. The adaptive knowledge distillation uses the Label-Free Hessian technique to give greater attention to the sensitive parts of the model while performing the optimization. Empirically, by employing EPTQ we achieve state-of-the-art results on a wide variety of models, tasks, and datasets, including ImageNet classification, COCO object detection, and Pascal-VOC for semantic segmentation. We demonstrate the performance and compatibility of EPTQ on an extended set of architectures, including CNNs, Transformers, hybrid, and MLP-only models.

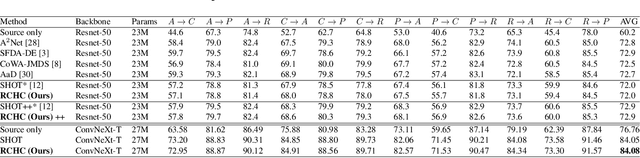

Reconciling a Centroid-Hypothesis Conflict in Source-Free Domain Adaptation

Dec 07, 2022

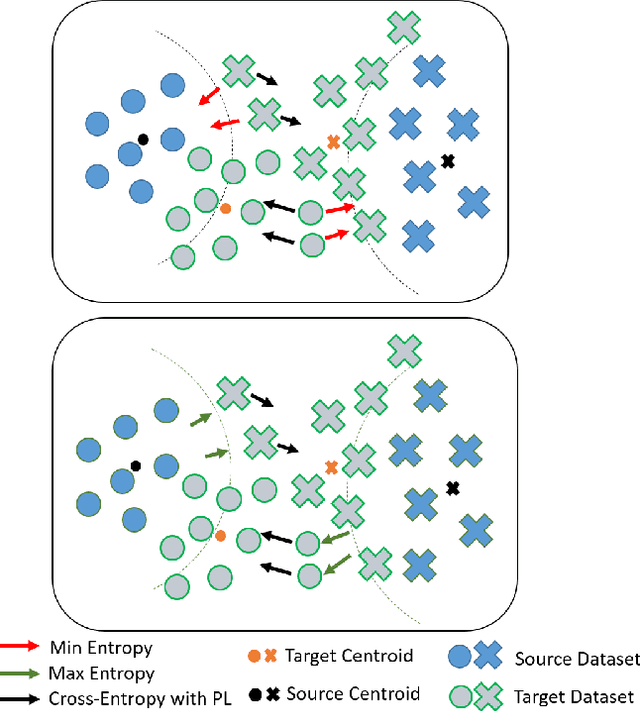

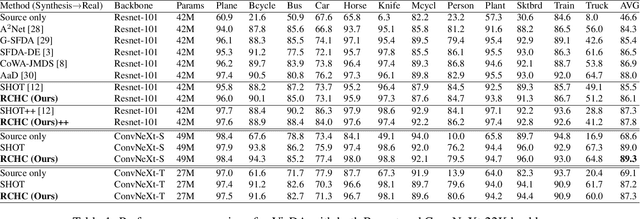

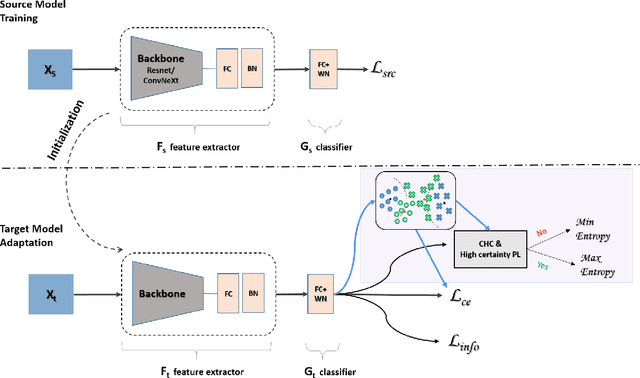

Abstract: Source-free domain adaptation (SFDA) aims to transfer knowledge learned from a source domain to an unlabeled target domain, where the source data is unavailable during adaptation. Existing approaches for SFDA focus on self-training, usually including well-established entropy minimization techniques. One of the main challenges in SFDA is to reduce the accumulation of errors caused by domain misalignment. A recent strategy successfully managed to reduce error accumulation by pseudo-labeling the target samples based on class-wise prototypes (centroids) generated by their clustering in the representation space. However, this strategy also creates cases in which the cross-entropy of a pseudo-label and the minimum entropy have conflicting objectives. We call this conflict the centroid-hypothesis conflict. We propose to reconcile this conflict by aligning the entropy minimization objective with that of the pseudo-labels' cross-entropy. We demonstrate the effectiveness of aligning the two loss objectives on three domain adaptation datasets. In addition, we provide state-of-the-art results using up-to-date architectures, also showing the consistency of our method across these architectures.

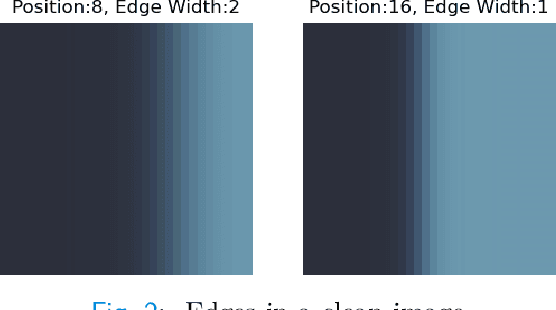

Learning to Bound: A Generative Cramér-Rao Bound

Mar 07, 2022

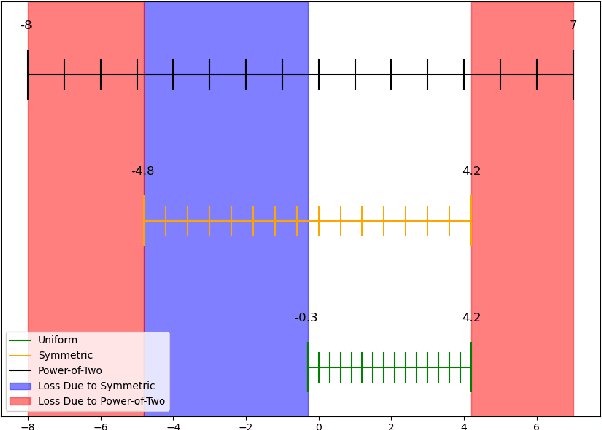

Abstract: The Cramér-Rao bound (CRB), a well-known lower bound on the performance of any unbiased parameter estimator, has been used to study a wide variety of problems. However, obtaining the CRB requires an analytical expression for the likelihood of the measurements given the parameters, or equivalently a precise and explicit statistical model for the data. In many applications, such a model is not available. Instead, this work introduces a novel approach to approximate the CRB using data-driven methods, which removes the requirement for an analytical statistical model. This approach is based on the recent success of deep generative models in modeling complex, high-dimensional distributions. Using a learned normalizing flow model, we model the distribution of the measurements and obtain an approximation of the CRB, which we call Generative Cramér-Rao Bound (GCRB). Numerical experiments on simple problems validate this approach, and experiments on two image processing tasks of image denoising and edge detection with a learned camera noise model demonstrate its power and benefits.
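As a reminder of what the classical CRB looks like when the likelihood is known analytically, here is the standard textbook case (not taken from the paper). The setup is assumed for illustration: $n$ i.i.d. measurements $x_i \sim \mathcal{N}(\theta, \sigma^2)$ with known variance $\sigma^2$ and unknown mean $\theta$.

$$
\frac{\partial}{\partial\theta}\log p(x_1,\dots,x_n;\theta) = \frac{1}{\sigma^2}\sum_{i=1}^{n}(x_i-\theta),
\qquad
I(\theta) = \mathbb{E}\!\left[\left(\frac{\partial}{\partial\theta}\log p(x_1,\dots,x_n;\theta)\right)^{2}\right] = \frac{n}{\sigma^2},
$$

$$
\operatorname{Var}(\hat{\theta}) \;\ge\; \frac{1}{I(\theta)} = \frac{\sigma^2}{n}
\quad\text{for any unbiased estimator } \hat{\theta}.
$$

The sample mean attains this bound. The derivation depends on having $\log p$ in closed form, which is the requirement the data-driven approach in the abstract aims to remove.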

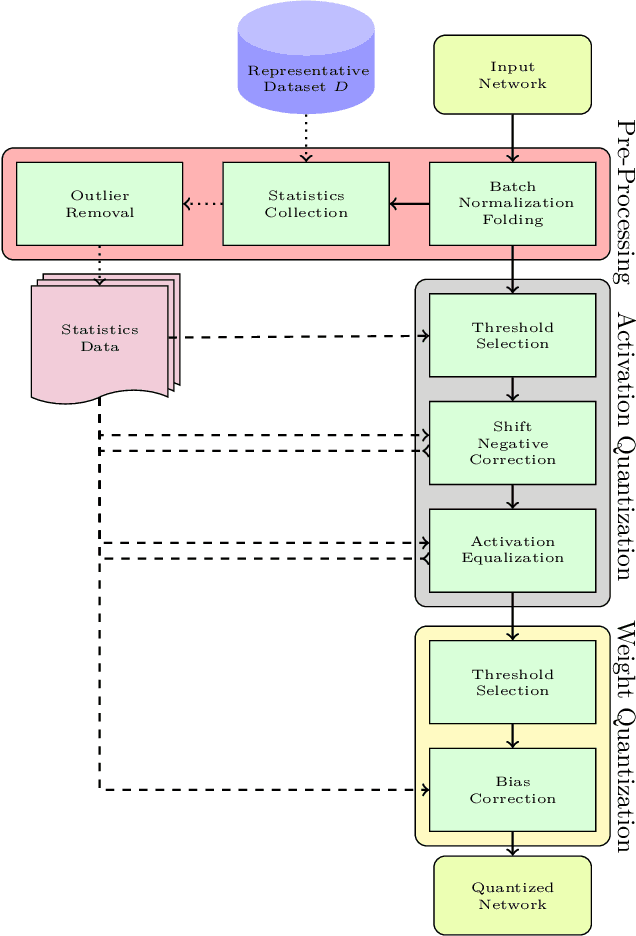

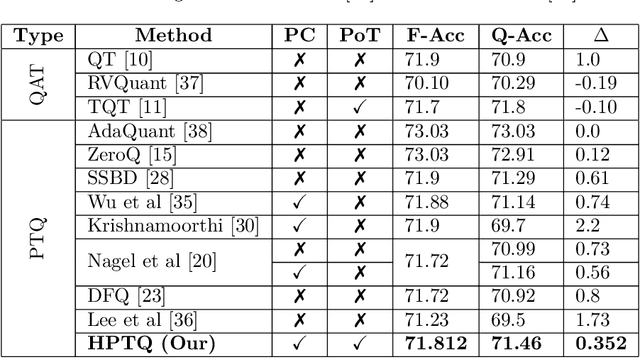

HPTQ: Hardware-Friendly Post Training Quantization

Sep 26, 2021

Abstract: Neural network quantization enables the deployment of models on edge devices. An essential requirement for their hardware efficiency is that the quantizers are hardware-friendly: uniform, symmetric, and with power-of-two thresholds. To the best of our knowledge, current post-training quantization methods do not support all of these constraints simultaneously. In this work, we introduce a hardware-friendly post training quantization (HPTQ) framework, which addresses this problem by synergistically combining several known quantization methods. We perform a large-scale study on four tasks: classification, object detection, semantic segmentation and pose estimation over a wide variety of network architectures. Our extensive experiments show that competitive results can be obtained under hardware-friendly constraints.
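The three constraints named in the abstract (uniform, symmetric, power-of-two threshold) can be illustrated with a small scalar quantizer. This is a sketch under assumptions, not the HPTQ framework itself: the function names are hypothetical, and picking the smallest power of two that covers the maximum absolute value is just one simple threshold rule.

```python
import math


def power_of_two_threshold(max_abs: float) -> float:
    """Smallest power of two that is >= max_abs (an assumed selection rule)."""
    if max_abs <= 0:
        raise ValueError("max_abs must be positive")
    return 2.0 ** math.ceil(math.log2(max_abs))


def quantize_symmetric(x: float, threshold: float, n_bits: int) -> float:
    """Uniform symmetric quantization onto a signed n_bits grid.

    The grid covers [-threshold, threshold) with a constant step size, so a
    power-of-two threshold yields a power-of-two step.
    """
    levels = 2 ** (n_bits - 1)            # e.g. 128 for 8 bits
    step = threshold / levels             # constant step: uniform quantizer
    q = round(x / step)                   # nearest integer grid index
    q = max(-levels, min(levels - 1, q))  # clip to the signed integer range
    return q * step


threshold = power_of_two_threshold(0.8)           # 1.0
value = quantize_symmetric(0.3, threshold, 8)     # 0.296875
```

Because both the threshold and the number of levels are powers of two, the step is a power of two as well, so rescaling reduces to a bit shift on integer hardware.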

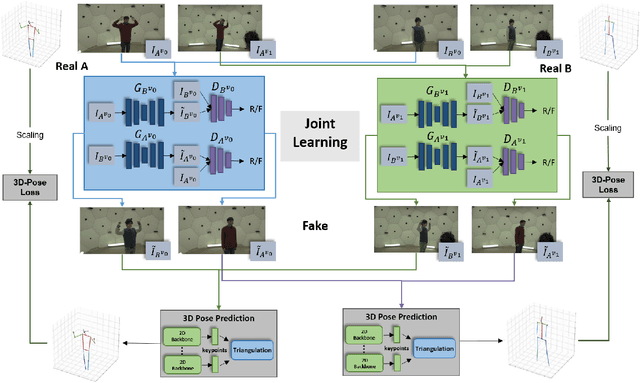

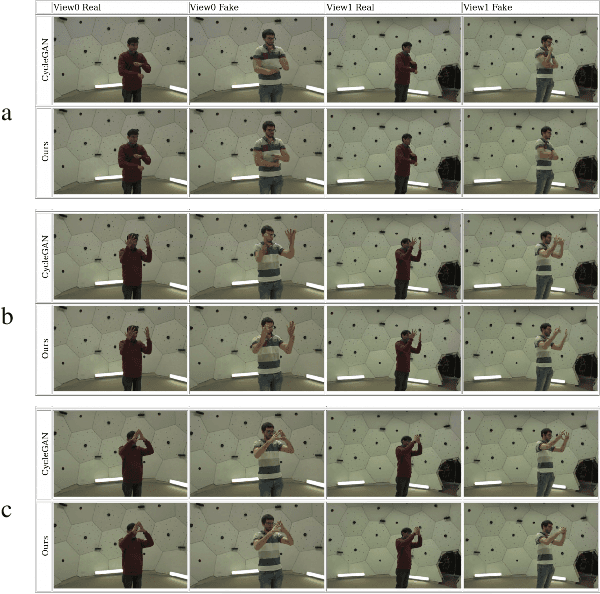

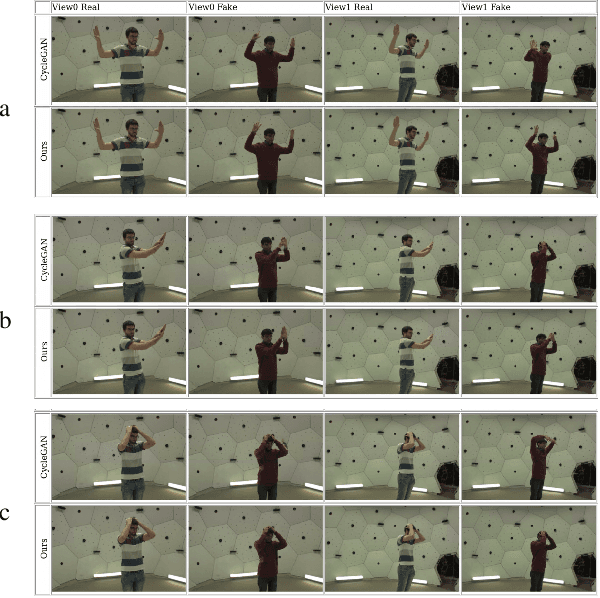

Multi-View Image-to-Image Translation Supervised by 3D Pose

Apr 12, 2021

Abstract: We address the task of multi-view image-to-image translation for person image generation. The goal is to synthesize photo-realistic multi-view images with pose-consistency across all views. Our proposed end-to-end framework is based on a joint learning of multiple unpaired image-to-image translation models, one per camera viewpoint. The joint learning is imposed by constraints on the shared 3D human pose in order to encourage the 2D pose projections in all views to be consistent. Experimental results on the CMU-Panoptic dataset demonstrate the effectiveness of the suggested framework in generating photo-realistic images of persons with new poses that are more consistent across all views in comparison to a standard Image-to-Image baseline. The code is available at: https://github.com/sony-si/MultiView-Img2Img

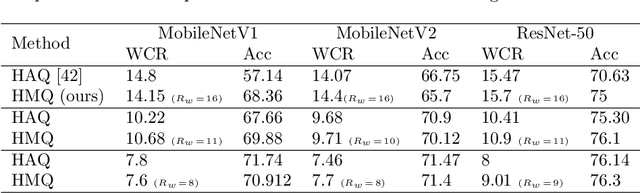

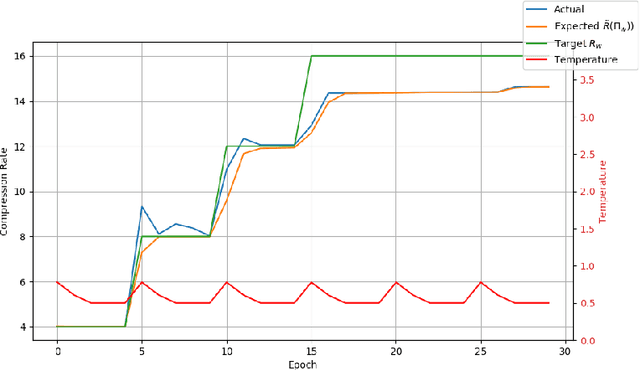

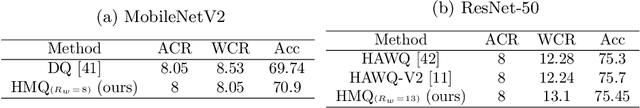

HMQ: Hardware Friendly Mixed Precision Quantization Block for CNNs

Jul 20, 2020

Abstract: Recent work in network quantization produced state-of-the-art results using mixed precision quantization. An imperative requirement for many efficient edge device hardware implementations is that their quantizers are uniform and with power-of-two thresholds. In this work, we introduce the Hardware Friendly Mixed Precision Quantization Block (HMQ) in order to meet this requirement. The HMQ is a mixed precision quantization block that repurposes the Gumbel-Softmax estimator into a smooth estimator of a pair of quantization parameters, namely, bit-width and threshold. HMQs use this to search over a finite space of quantization schemes. Empirically, we apply HMQs to quantize classification models trained on CIFAR10 and ImageNet. For ImageNet, we quantize four different architectures and show that, in spite of the added restrictions to our quantization scheme, we achieve competitive and, in some cases, state-of-the-art results.
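The Gumbel-Softmax relaxation mentioned in the abstract can be sketched in plain Python as a soft selection over a finite set of candidate bit-widths. This is only the generic estimator under illustrative assumptions, not the HMQ block: the candidate bit-widths, the logits, and the function name are hypothetical, and a real implementation would use an autodiff framework so the logits can be trained.

```python
import math
import random
from typing import List, Sequence


def gumbel_softmax(logits: Sequence[float], temperature: float,
                   rng: random.Random) -> List[float]:
    """Draw one relaxed one-hot sample from the Gumbel-Softmax distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    perturbed = []
    for logit in logits:
        u = max(rng.random(), 1e-12)       # guard against log(0)
        gumbel = -math.log(-math.log(u))   # standard Gumbel(0, 1) noise
        perturbed.append((logit + gumbel) / temperature)
    peak = max(perturbed)                  # subtract the max for stability
    exps = [math.exp(v - peak) for v in perturbed]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate bit-widths and their logits (illustrative values).
bit_widths = [2, 4, 8]
logits = [0.1, 0.5, 1.2]

rng = random.Random(0)
probs = gumbel_softmax(logits, temperature=1.0, rng=rng)
# Soft estimate of the bit-width as a probability-weighted average.
soft_bits = sum(p * b for p, b in zip(probs, bit_widths))
```

Lowering the temperature pushes each sample closer to a one-hot vector, so the soft estimate approaches a single discrete bit-width.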
