Lior Dikstein

Data Generation for Hardware-Friendly Post-Training Quantization

Oct 29, 2024

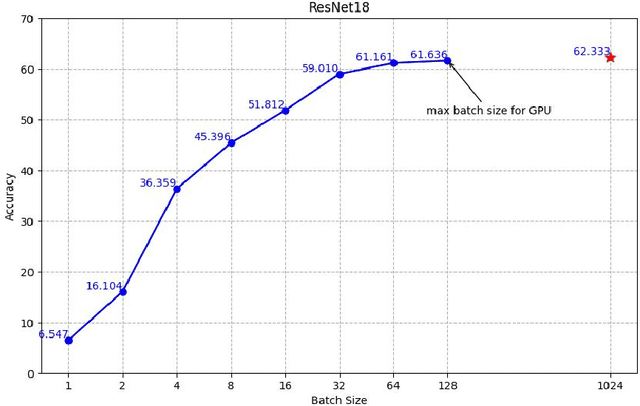

Abstract:Zero-shot quantization (ZSQ) using synthetic data is a key approach for post-training quantization (PTQ) under privacy and security constraints. However, existing data generation methods often struggle to effectively generate data suitable for hardware-friendly quantization, where all model layers are quantized. We analyze existing data generation methods based on batch normalization (BN) matching and identify several gaps between synthetic and real data: 1) Current generation algorithms do not optimize the entire synthetic dataset simultaneously; 2) Data augmentations applied during training are often overlooked; and 3) A distribution shift occurs in the final model layers due to the absence of BN in those layers. These gaps negatively impact ZSQ performance, particularly in hardware-friendly quantization scenarios. In this work, we propose Data Generation for Hardware-friendly quantization (DGH), a novel method that addresses these gaps. DGH jointly optimizes all generated images, regardless of the image set size or GPU memory constraints. To address data augmentation mismatches, DGH includes a preprocessing stage that mimics the augmentation process and enhances image quality by incorporating natural image priors. Finally, we propose a new distribution-stretching loss that aligns the support of the feature map distribution between real and synthetic data. This loss is applied to the model's output and can be adapted to various tasks. DGH demonstrates significant improvements in quantization performance across multiple tasks, achieving up to a 30% increase in accuracy for hardware-friendly ZSQ in both classification and object detection, often performing on par with real data.

HPTQ: Hardware-Friendly Post Training Quantization

Sep 26, 2021

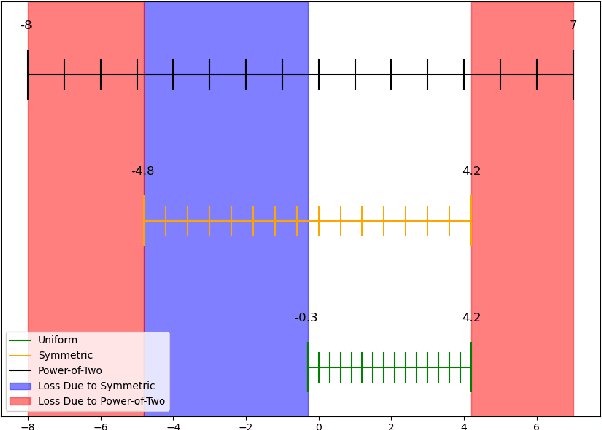

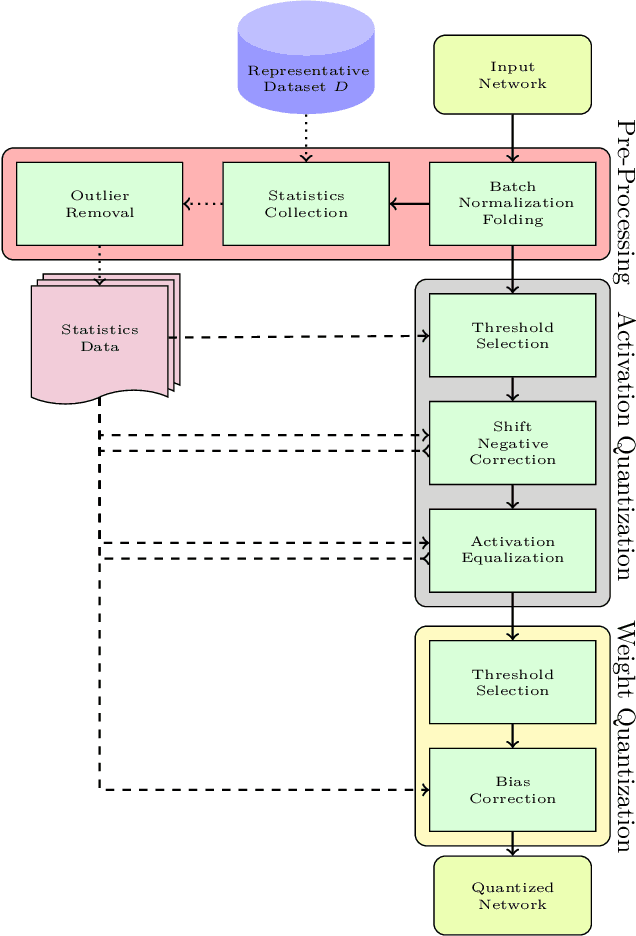

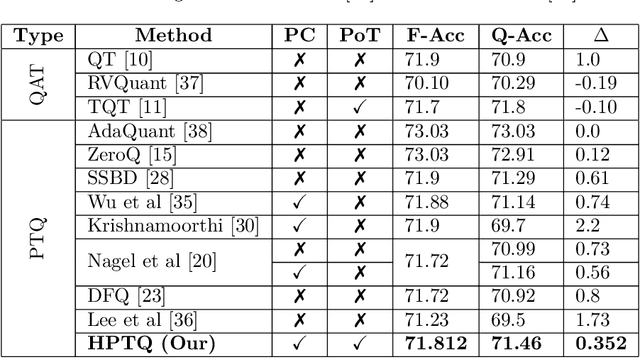

Abstract:Neural network quantization enables the deployment of models on edge devices. An essential requirement for their hardware efficiency is that the quantizers are hardware-friendly: uniform, symmetric, and with power-of-two thresholds. To the best of our knowledge, current post-training quantization methods do not support all of these constraints simultaneously. In this work, we introduce a hardware-friendly post training quantization (HPTQ) framework, which addresses this problem by synergistically combining several known quantization methods. We perform a large-scale study on four tasks: classification, object detection, semantic segmentation and pose estimation over a wide variety of network architectures. Our extensive experiments show that competitive results can be obtained under hardware-friendly constraints.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge