Guoxiang Zhang

Drive-JEPA: Video JEPA Meets Multimodal Trajectory Distillation for End-to-End Driving

Jan 29, 2026

Abstract:End-to-end autonomous driving increasingly leverages self-supervised video pretraining to learn transferable planning representations. However, pretraining video world models for scene understanding has so far brought only limited improvements. This limitation is compounded by the inherent ambiguity of driving: each scene typically provides only a single human trajectory, making it difficult to learn multimodal behaviors. In this work, we propose Drive-JEPA, a framework that integrates Video Joint-Embedding Predictive Architecture (V-JEPA) with multimodal trajectory distillation for end-to-end driving. First, we adapt V-JEPA for end-to-end driving, pretraining a ViT encoder on large-scale driving videos to produce predictive representations aligned with trajectory planning. Second, we introduce a proposal-centric planner that distills diverse simulator-generated trajectories alongside human trajectories, with a momentum-aware selection mechanism to promote stable and safe behavior. When evaluated on NAVSIM, the V-JEPA representation combined with a simple transformer-based decoder outperforms prior methods by 3 PDMS in the perception-free setting. The complete Drive-JEPA framework achieves 93.3 PDMS on v1 and 87.8 EPDMS on v2, setting a new state-of-the-art.

Anything in Any Scene: Photorealistic Video Object Insertion

Jan 30, 2024

Abstract:Realistic video simulation has shown significant potential across diverse applications, from virtual reality to film production. This is particularly true for scenarios where capturing videos in real-world settings is either impractical or expensive. Existing approaches in video simulation often fail to accurately model the lighting environment, represent the object geometry, or achieve high levels of photorealism. In this paper, we propose Anything in Any Scene, a novel and generic framework for realistic video simulation that seamlessly inserts any object into an existing dynamic video with a strong emphasis on physical realism. Our proposed general framework encompasses three key processes: 1) integrating a realistic object into a given scene video with proper placement to ensure geometric realism; 2) estimating the sky and environmental lighting distribution and simulating realistic shadows to enhance the lighting realism; 3) employing a style transfer network that refines the final video output to maximize photorealism. We experimentally demonstrate that the Anything in Any Scene framework produces simulated videos of great geometric realism, lighting realism, and photorealism. By significantly mitigating the challenges associated with video data generation, our framework offers an efficient and cost-effective solution for acquiring high-quality videos. Furthermore, its applications extend well beyond video data augmentation, showing promising potential in virtual reality, video editing, and various other video-centric applications. Please check our project website https://anythinginanyscene.github.io for access to our project code and more high-resolution video results.

Self-optimizing loop sifting and majorization for 3D reconstruction

Apr 22, 2021

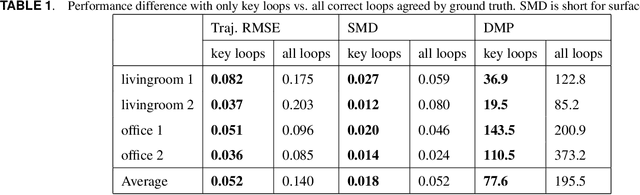

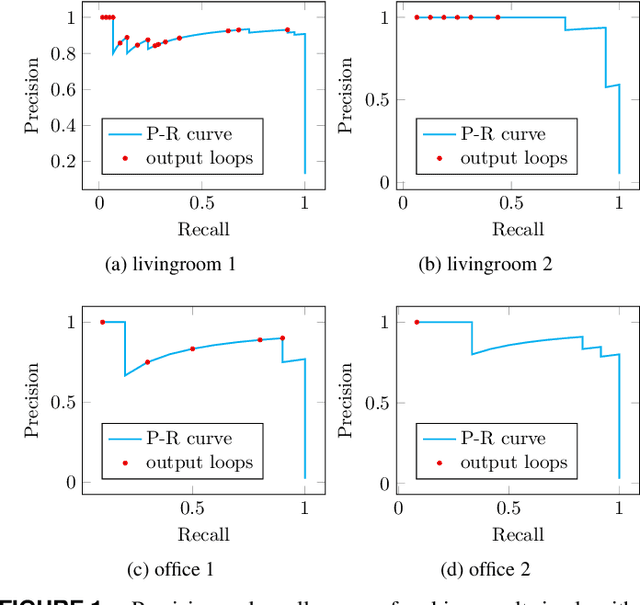

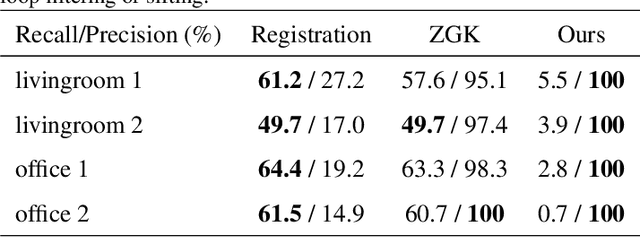

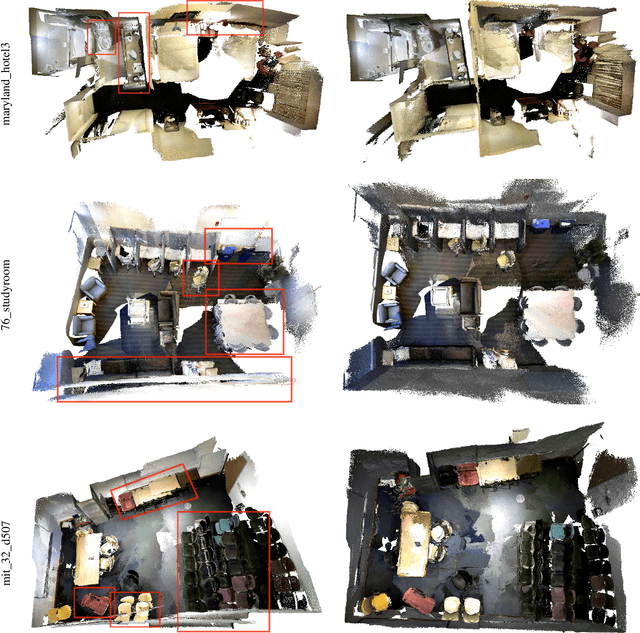

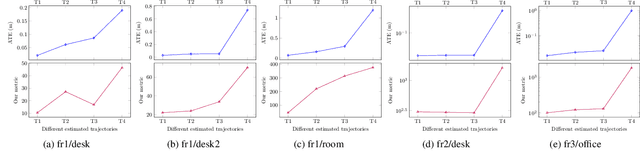

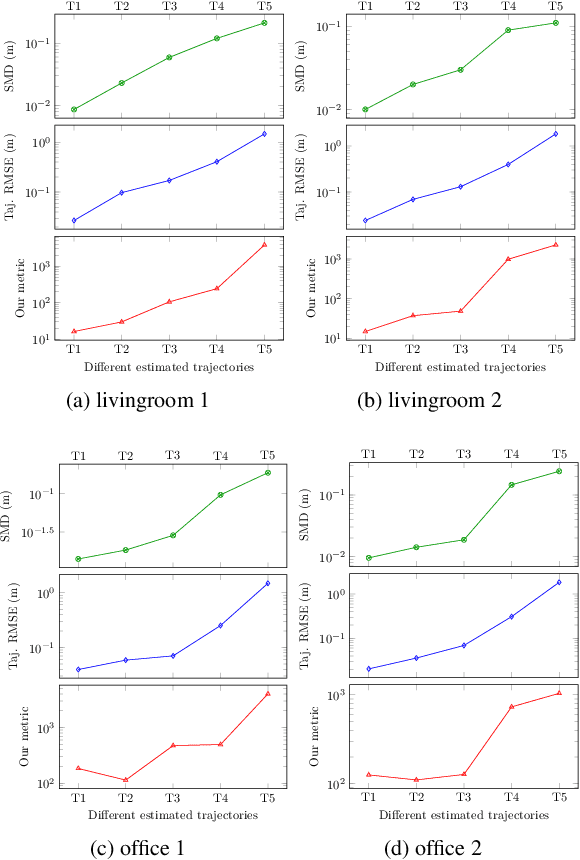

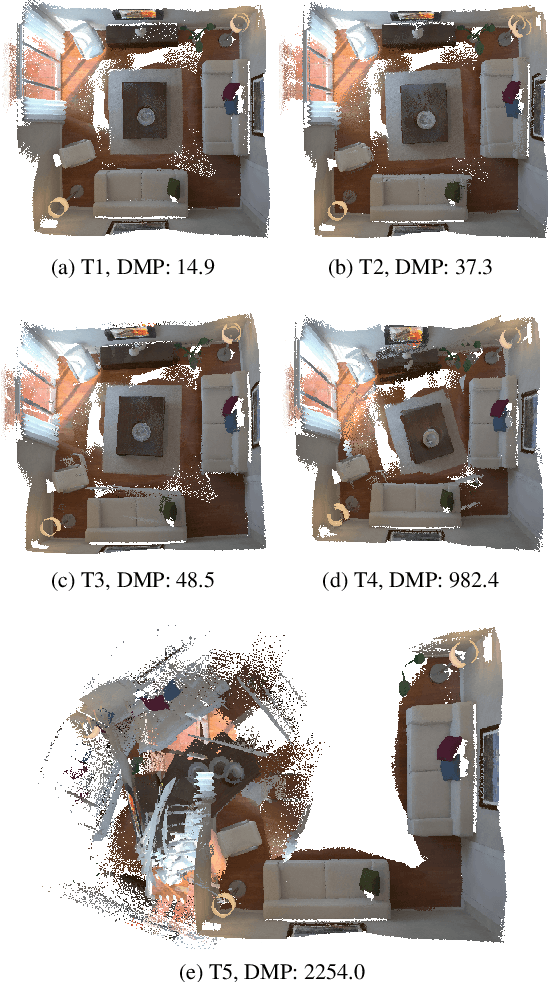

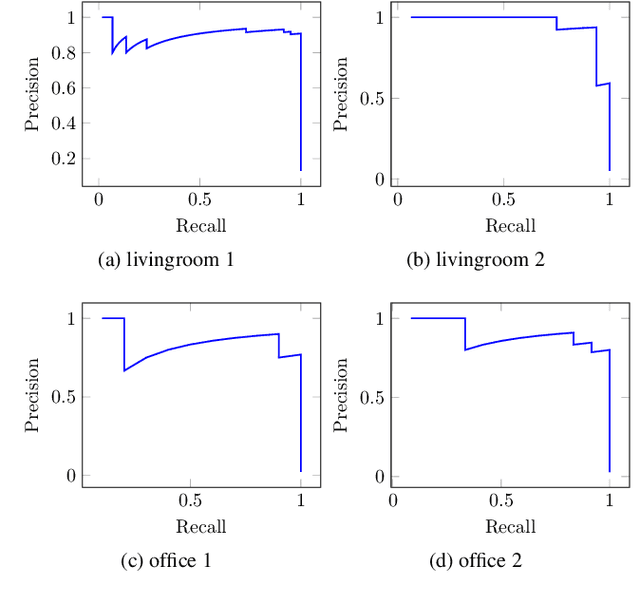

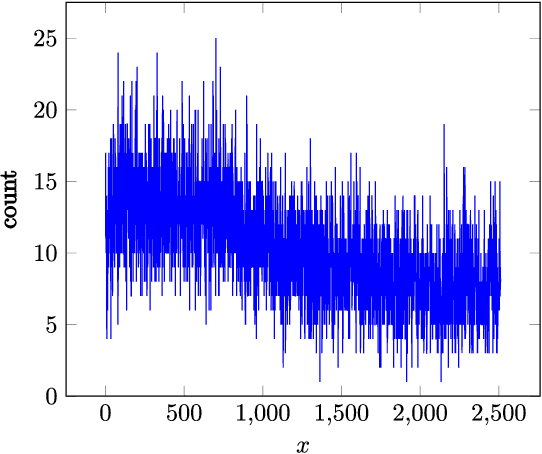

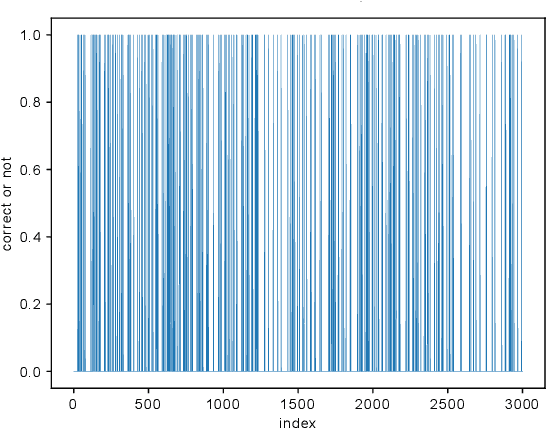

Abstract:Visual simultaneous localization and mapping (vSLAM) and 3D reconstruction methods have made impressive progress. These methods are very promising for autonomous vehicle and consumer robot applications because they can map large-scale environments, such as cities and indoor spaces, with little human effort. However, there is still room for improvement in loop detection and optimization. vSLAM systems tend to add loops very conservatively to limit the severe influence of false loops. These conservative checks usually cause correct loops to be rejected, which decreases performance. In this paper, an algorithm that can sift and majorize loop detections is proposed. The proposed algorithm compares the usefulness and effectiveness of different loops using the dense map posterior (DMP) metric. It tests each loop and decides whether to accept it without a single user-defined threshold, so it adapts to different data conditions. The proposed method is general and agnostic to sensor type (as long as depth or LiDAR readings are present), loop detection method, and optimization method. It also does not require a specific type of SLAM system. It therefore has great potential to be applied to various application scenarios. Experiments are conducted on public datasets. Results show that the proposed method outperforms state-of-the-art methods.

A metric for evaluating 3D reconstruction and mapping performance with no ground truthing

Jan 25, 2021

Abstract:Evaluating 3D mapping performance is difficult because existing metrics require ground truth data that can only be collected with special instruments. In this paper, we propose a metric, dense map posterior (DMP), for this evaluation. It works without any ground truth data. Instead, it calculates a comparable value, reflecting a map posterior probability, from dense point cloud observations. In our experiments, the proposed DMP is benchmarked against ground truth-based metrics. Results show that DMP can provide a similar evaluation capability. The proposed metric makes evaluating different methods more flexible and opens many new possibilities, such as self-supervised methods and a wider range of usable datasets.

More Informed Random Sample Consensus

Nov 18, 2020

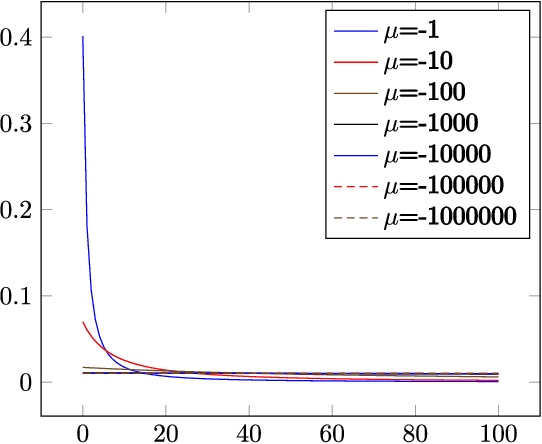

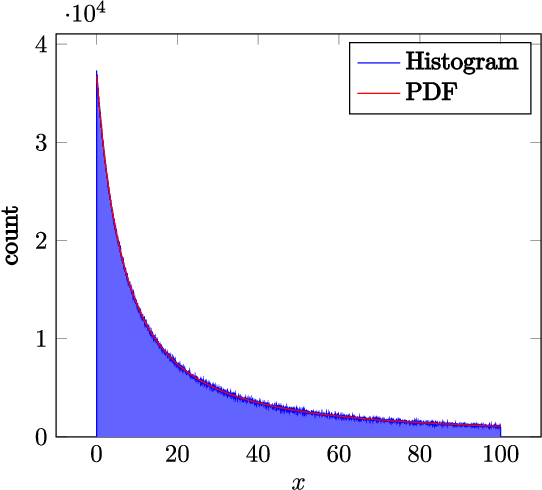

Abstract:Random sample consensus (RANSAC) is a robust model-fitting algorithm. It is widely used in many fields, including image stitching and point cloud registration. In RANSAC, data is uniformly sampled for hypothesis generation. However, this uniform sampling strategy does not fully utilize the information available in many problems. In this paper, we propose a method that samples data with a Lévy distribution together with a data sorting algorithm. In the hypothesis sampling step of the proposed method, data is sorted with a sorting algorithm we propose, which orders data by the likelihood of each data point being in the inlier set. Then, hypotheses are sampled from the sorted data with a Lévy distribution. The proposed method is evaluated on both simulated and real-world public datasets. Our method shows better results than the uniform baseline method.
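The sampling step described in this abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: the abstract does not say how inlier likelihood is scored or how Lévy draws are mapped to data indices, so the `scores` input, the function names, the `scale` parameter, and the truncate-and-reject mapping below are all assumptions made for illustration. The only established fact used is that `scale / Z**2` with `Z ~ N(0, 1)` follows a Lévy distribution with location 0.

```python
import random


def levy_variate(scale, rng):
    """Draw from Levy(0, scale) using scale / Z**2 with Z ~ N(0, 1)."""
    z = rng.gauss(0.0, 1.0)
    while z == 0.0:  # guard against division by zero
        z = rng.gauss(0.0, 1.0)
    return scale / (z * z)


def rank_by_score(points, scores):
    """Sort points so the most likely inliers come first.

    `scores` is a hypothetical per-point inlier likelihood; how to
    compute it is not specified in the abstract.
    """
    order = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)
    return [points[i] for i in order]


def sample_sorted_indices(n, k, scale=1.0, rng=None):
    """Pick k distinct indices in [0, n), biased toward small indices.

    Assumed mapping: truncate each Levy draw to an integer and reject
    draws that fall outside the data range.
    """
    if k > n:
        raise ValueError("cannot sample more indices than data points")
    rng = rng or random.Random()
    chosen = set()
    while len(chosen) < k:
        idx = int(levy_variate(scale, rng))
        if idx < n:
            chosen.add(idx)
    return sorted(chosen)


# Usage: rank the data, then draw a minimal sample for a 2-point model.
points = [(0.0, 0.1), (1.0, 1.0), (2.0, 5.0), (3.0, 3.1)]
scores = [0.9, 0.8, 0.1, 0.7]  # hypothetical inlier likelihoods
ranked = rank_by_score(points, scores)
minimal_sample = [ranked[i] for i in sample_sorted_indices(len(ranked), 2)]
```

With `scale=1.0`, small indices are drawn far more often than large ones, so hypotheses are built mostly from points ranked as likely inliers, while the heavy tail still lets low-ranked points be selected occasionally.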

LoopSmart: Smart Visual SLAM Through Surface Loop Closure

Jan 04, 2018

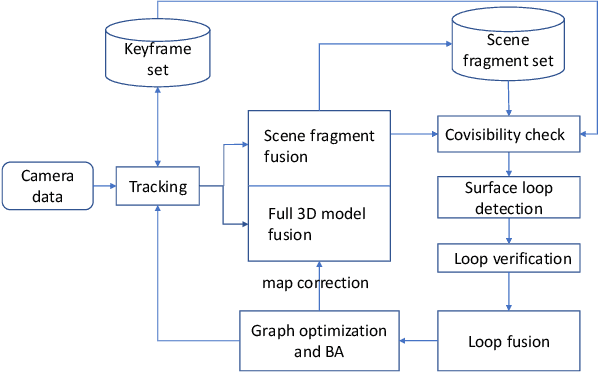

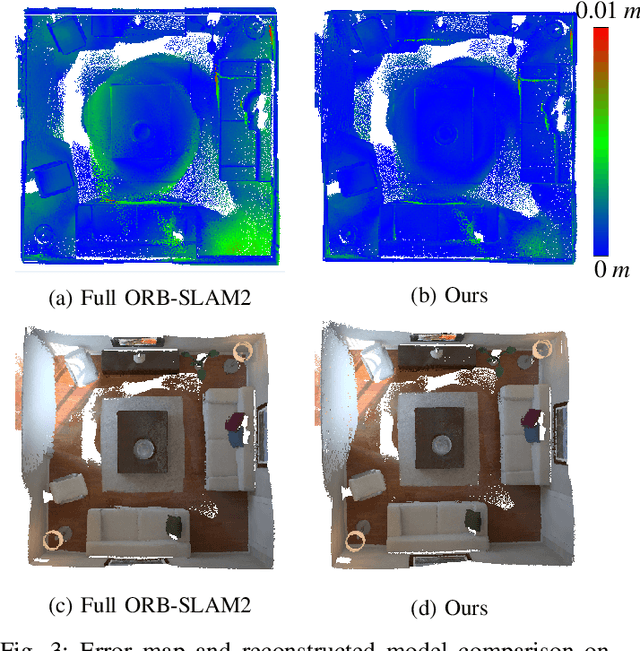

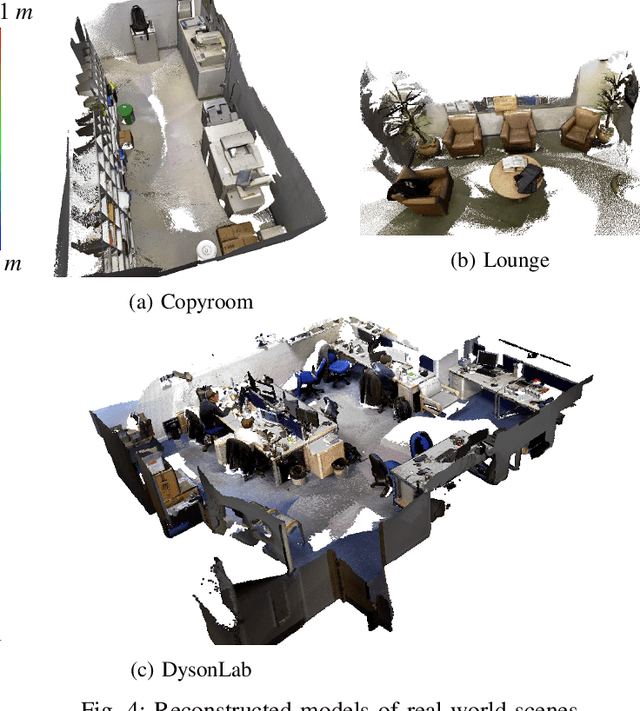

Abstract:We present a visual simultaneous localization and mapping (SLAM) framework for closing surface loops. It combines sparse feature matching and dense surface alignment. Sparse feature matching is used for visual odometry and for global camera pose fine-tuning when dense loops are detected, while dense surface alignment is used to close large loops and resolve surface mismatches. To achieve smart dense surface loop closure, a highly efficient CUDA-based global point cloud registration method and a map-content-dependent loop verification method are proposed. In extensive experiments on different datasets, our method outperforms state-of-the-art methods in terms of both camera trajectory and surface reconstruction accuracy.
