Guangming Wu

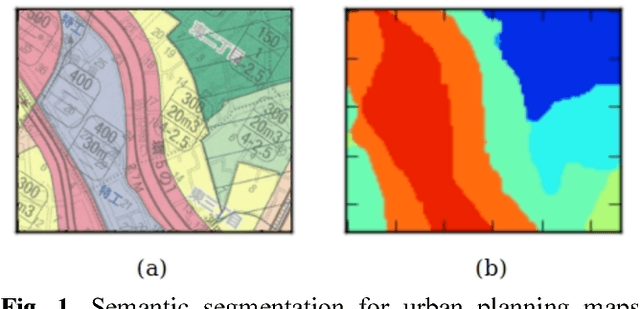

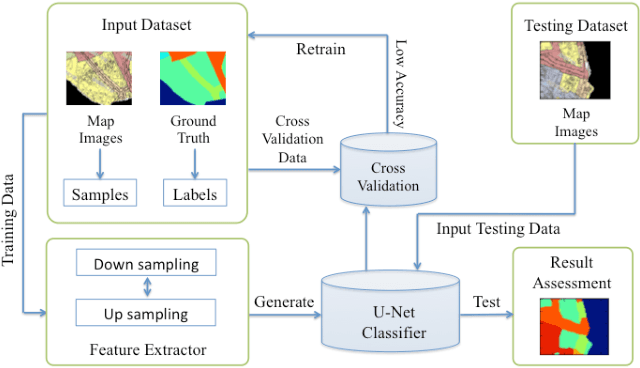

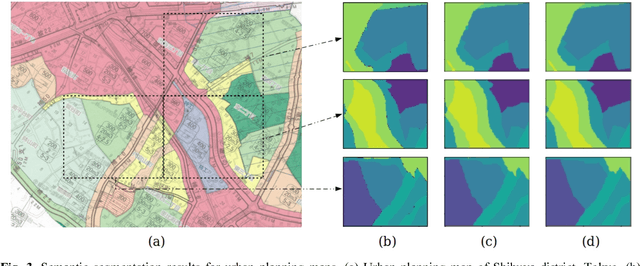

Semantic Segmentation for Urban Planning Maps based on U-Net

Oct 01, 2018

Abstract: The automatic digitizing of paper maps is a significant and challenging task for both academia and industry. Semantic segmentation, an important step in map digitizing, still relies mainly on manual visual interpretation, which is inefficient. In this study, we select urban planning maps as a representative sample and investigate the feasibility of using a U-shaped fully convolutional architecture to perform end-to-end map semantic segmentation. The experimental results obtained from the test area in Shibuya district, Tokyo, show that the proposed method achieves a Jaccard similarity coefficient of 93.63% and an overall accuracy of 99.36%. When implemented on a GPGPU with cuDNN, the whole Shibuya district can be processed in less than three minutes. The results indicate that the proposed method can serve as a viable tool for the urban planning map semantic segmentation task, with high accuracy and efficiency.
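The two reported metrics can be computed from a predicted mask and a ground-truth mask as follows. This is a minimal sketch assuming binary (foreground/background) masks stored as NumPy arrays; the function name is hypothetical and it is not the authors' evaluation code.

```python
import numpy as np


def jaccard_and_overall_accuracy(pred: np.ndarray, truth: np.ndarray) -> tuple[float, float]:
    """Return (Jaccard index, overall accuracy) for two binary masks of equal shape.

    Hypothetical helper for illustration; assumes a binary foreground/background task.
    """
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    if pred.shape != truth.shape:
        raise ValueError("pred and truth must have the same shape")

    # Jaccard (IoU): |pred AND truth| / |pred OR truth| over foreground pixels.
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    # Assumed convention: if both masks are empty, they agree perfectly.
    jaccard = float(intersection / union) if union > 0 else 1.0

    # Overall accuracy: fraction of all pixels (foreground and background) labeled correctly.
    overall_accuracy = float((pred == truth).mean())
    return jaccard, overall_accuracy


pred = np.array([[1, 1, 0, 0], [1, 0, 0, 0]])
truth = np.array([[1, 1, 0, 0], [0, 0, 0, 1]])
print(jaccard_and_overall_accuracy(pred, truth))  # (0.5, 0.75)
```

Because overall accuracy also counts correctly labeled background pixels, which usually dominate a map sheet, it is typically higher than the Jaccard coefficient for the same prediction.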

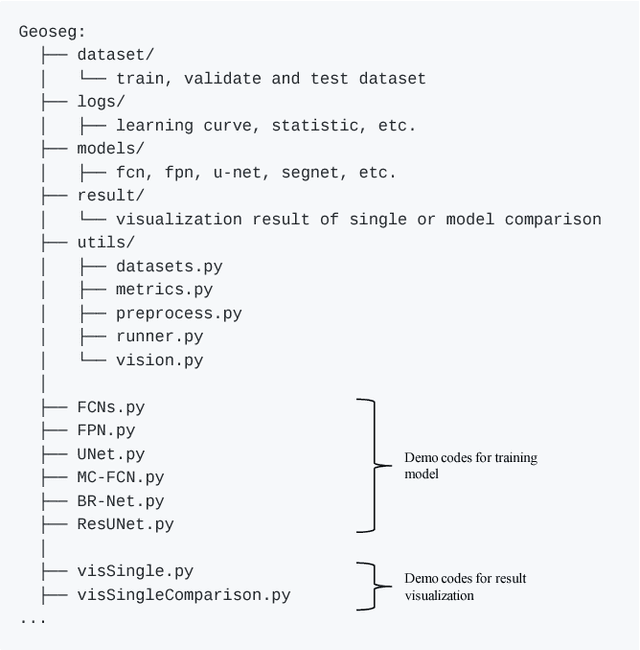

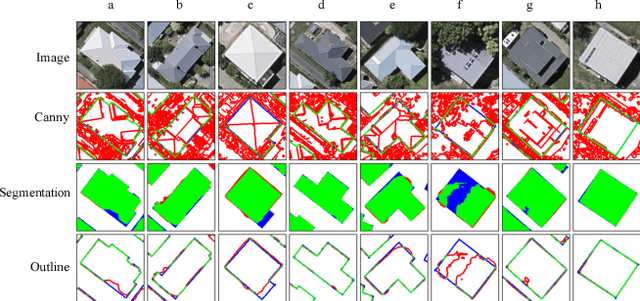

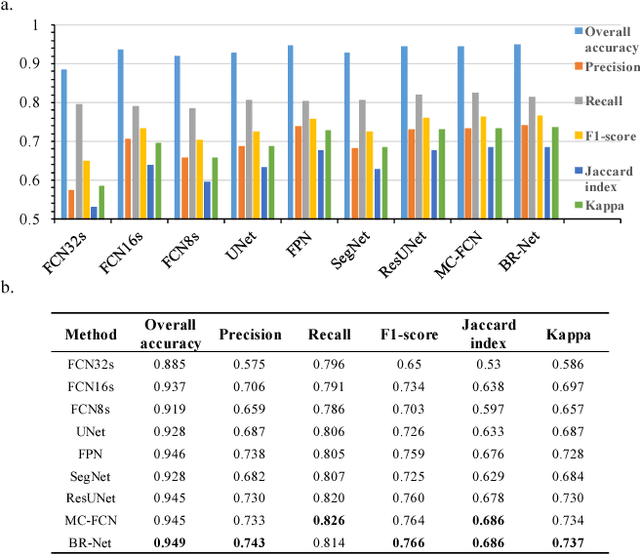

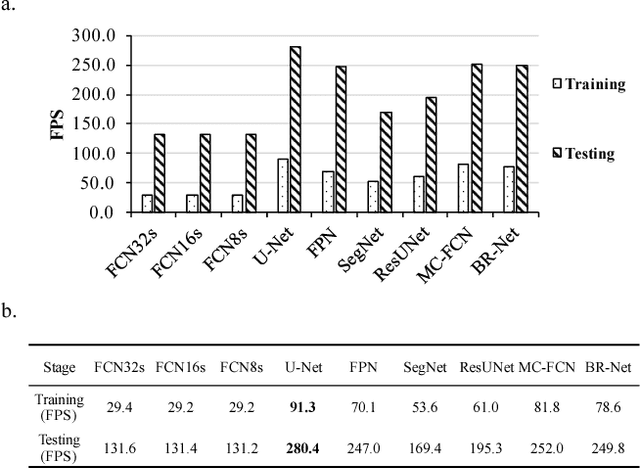

Geoseg: A Computer Vision Package for Automatic Building Segmentation and Outline Extraction

Sep 10, 2018

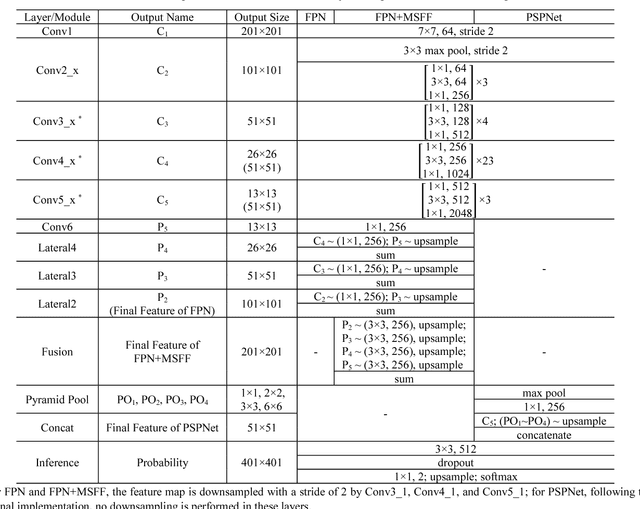

Abstract: Recently, deep learning algorithms, especially methods based on fully convolutional networks, have become very popular in the field of remote sensing. However, these methods are implemented and evaluated on various datasets and with different deep learning frameworks, and no existing package covers them in a unified manner. In this study, we introduce Geoseg, a computer vision package that focuses on building segmentation and outline extraction. Geoseg implements more than nine state-of-the-art models, as well as the utility scripts needed for model training, logging, evaluation, and visualization. The implementation of Geoseg emphasizes unification, simplicity, and flexibility. The performance and computational efficiency of all implemented methods are evaluated in comparison experiments on a unified, high-quality aerial image dataset.
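The abstract does not say how Geoseg derives outlines from segmentation output. One simple, commonly used approach is morphological boundary extraction: the outline of a binary building mask is the mask minus its erosion. The sketch below assumes a 2-D binary mask and 4-connectivity; the function name is hypothetical and this is not Geoseg's API.

```python
import numpy as np


def mask_outline(mask: np.ndarray) -> np.ndarray:
    """Return a boolean array marking the boundary pixels of a 2-D binary building mask.

    A foreground pixel is a boundary pixel if at least one of its 4-connected
    neighbours is background; pixels outside the image count as background.
    Hypothetical helper for illustration only.
    """
    fg = mask.astype(bool)
    # Pad with background so foreground pixels on the image border become outline pixels.
    padded = np.pad(fg, 1, mode="constant", constant_values=False)
    # 4-neighbour erosion: a pixel survives only if it and all four neighbours are foreground.
    eroded = (
        padded[1:-1, 1:-1]
        & padded[:-2, 1:-1]  # neighbour above
        & padded[2:, 1:-1]   # neighbour below
        & padded[1:-1, :-2]  # neighbour to the left
        & padded[1:-1, 2:]   # neighbour to the right
    )
    # Outline = foreground pixels removed by the erosion.
    return fg & ~eroded


roof = np.zeros((5, 5), dtype=int)
roof[1:4, 1:4] = 1            # a 3x3 "building"
print(mask_outline(roof).astype(int))
```

With SciPy available, `mask & ~scipy.ndimage.binary_erosion(mask)` gives the same result, since its default structuring element is the 4-connected cross and pixels outside the image are treated as background.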

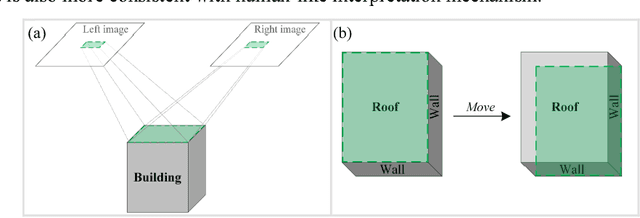

Aerial Imagery for Roof Segmentation: A Large-Scale Dataset towards Automatic Mapping of Buildings

Jul 27, 2018

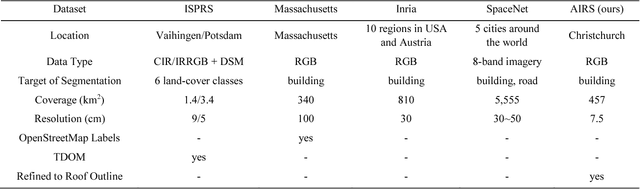

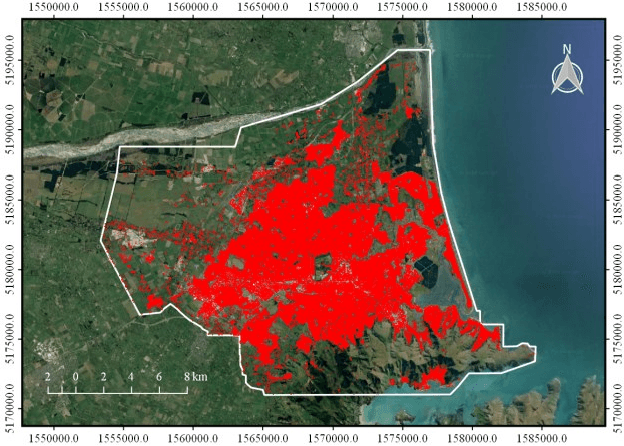

Abstract: As an important branch of deep learning, convolutional neural networks have greatly improved the performance of building detection. To further accelerate the development of building detection toward automatic mapping, a benchmark dataset that enables fair comparisons is essential. However, current public datasets for this task still have several problems. First, although building detection is generally considered equivalent to extracting roof outlines, most datasets directly provide building footprints as ground truths for testing and evaluation; these benchmarks are therefore more challenging than roof segmentation, because relief displacement causes varying degrees of misalignment between roof outlines and footprints. Second, an image dataset should contain a large quantity of imagery at high spatial resolution to effectively train a high-performance deep learning model for accurate mapping of buildings. Unfortunately, the remote sensing community still lacks proper benchmark datasets that satisfy these requirements simultaneously. In this paper, we present a new large-scale benchmark dataset termed Aerial Imagery for Roof Segmentation (AIRS). The dataset provides wide-coverage aerial imagery at 7.5 cm resolution and contains over 220,000 buildings. The task posed for AIRS is defined as roof segmentation. We implement several state-of-the-art deep learning methods for semantic segmentation to evaluate and analyze the proposed dataset. The results can serve as the baseline for future work.
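The "varying degrees of misalignment" can be made concrete with the standard photogrammetric relief-displacement relation for a vertical aerial photograph. It is included here only as background; the abstract does not state which model, if any, AIRS uses.

$$
d = \frac{r\,h}{H}
$$

Here $d$ is the displacement of a roof point in the image plane relative to its footprint, $r$ is the radial distance from the principal point (nadir) to the displaced roof point in the image, $h$ is the building height, and $H$ is the flying height above the building base. Because $d$ is zero at the nadir ($r = 0$) and grows with both $r$ and $h$, the offset between roof outline and footprint differs from building to building. For instance, with $h/H = 0.03$ and $r = 50$ mm, $d = 50 \times 0.03 = 1.5$ mm in the image plane.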
